Ambari User's Guide

Overview

Hadoop is a large-scale, distributed data storage and processing infrastructure that uses clusters of commodity hosts networked together. Monitoring and managing such complex distributed systems is a non-trivial task. To help you manage the complexity, Apache Ambari collects a wide range of information from the cluster's nodes and services and presents it to you in an easy-to-read, easy-to-use, centralized web interface, Ambari Web.

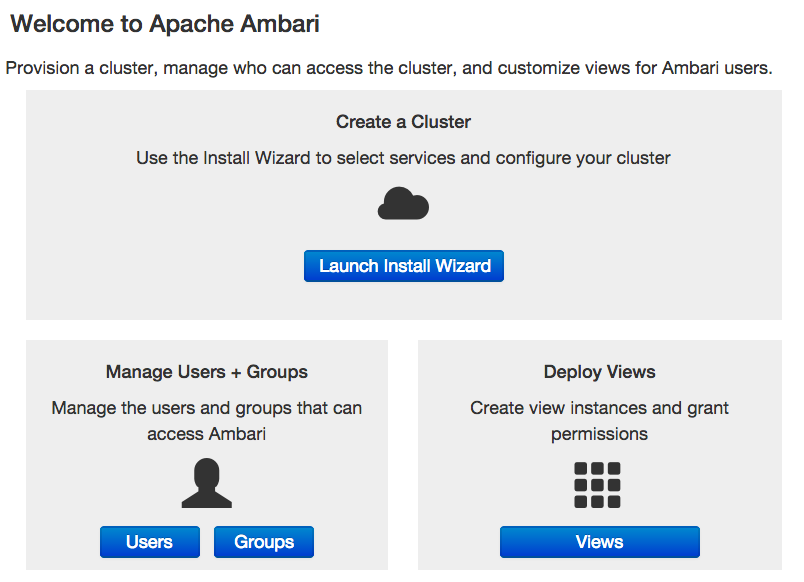

Ambari Web displays information such as service-specific summaries, graphs, and alerts. You use Ambari Web to create and manage your HDP cluster and to perform basic operational tasks such as starting and stopping services, adding hosts to your cluster, and updating service configurations. You also use Ambari Web to perform administrative tasks for your cluster, such as managing users and groups and deploying Ambari Views.

For more information on administering Ambari users, groups and views, refer to the Ambari Administration Guide.

Architecture

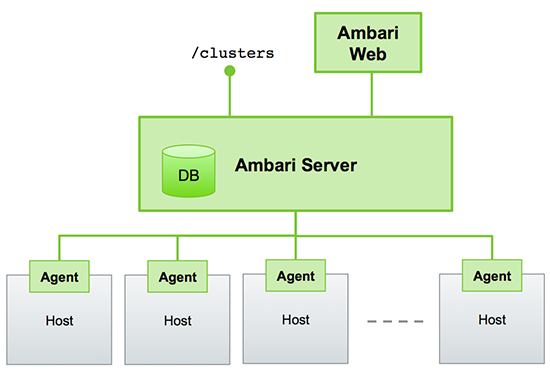

The Ambari Server serves as the collection point for data from across your cluster. Each host has a copy of the Ambari Agent - either installed automatically by the Install wizard or manually - which allows the Ambari Server to control each host.

Figure - Ambari Server Architecture

Sessions

Ambari Web is a client-side JavaScript application, which calls the Ambari REST API (accessible from the Ambari Server) to access cluster information and perform cluster operations. After authenticating to Ambari Web, the application authenticates to the Ambari Server. Communication between the browser and server occurs asynchronously via the REST API.

Ambari Web sessions do not time out. The Ambari Web application constantly accesses the Ambari REST API, which resets the session timeout. During any period of Ambari Web inactivity, the Ambari Web user interface (UI) refreshes automatically. You must explicitly sign out of the Ambari Web UI to destroy the Ambari session with the server.

Accessing Ambari Web

Typically, you start the Ambari Server and Ambari Web as part of the installation process. If Ambari Server is stopped, you can start it from the command line on the Ambari Server host machine. Enter the following command:

ambari-server start

To access Ambari Web, open a supported browser and enter the Ambari Web URL:

http://<your.ambari.server>:8080

Enter your user name and password. If this is the first time Ambari Web is accessed, use the default values, admin/admin.

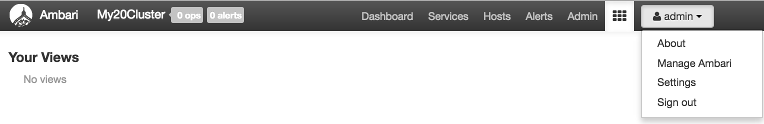

These values can be changed, and new users provisioned, using the Manage Ambari option.

For more information about managing users and other administrative tasks, see Administering Ambari.
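Because Ambari Web obtains cluster information through the Ambari REST API on the same server and port, the same request can be sketched outside the browser. The following is a minimal, hedged sketch: the `/api/v1/clusters` path and the use of HTTP Basic authentication are assumptions not stated in this guide, and `build_clusters_request` is a hypothetical helper name. The function only builds the request; it does not send it.

```python
import base64
import urllib.request


def build_clusters_request(server, user, password, port=8080):
    """Build (but do not send) a GET request for the list of clusters.

    Assumption: the REST API is rooted at /api/v1 and accepts HTTP Basic auth.
    """
    url = f"http://{server}:{port}/api/v1/clusters"
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Basic {token}")
    return request


if __name__ == "__main__":
    # Default first-login credentials from this guide; change them in practice.
    req = build_clusters_request("your.ambari.server", "admin", "admin")
    print(req.get_method(), req.full_url)
```

To actually send the request, pass the returned object to `urllib.request.urlopen` against a reachable Ambari Server.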

Monitoring and Managing your HDP Cluster with Ambari

This topic describes how to use Ambari Web features to monitor and manage your HDP cluster. To navigate, select one of the following feature tabs located at the top of the Ambari main window. The selected tab appears white.

Viewing Metrics on the Dashboard

Ambari Web displays the Dashboard page as the home page. Use the Dashboard to view the operating status of your cluster in the following three ways:

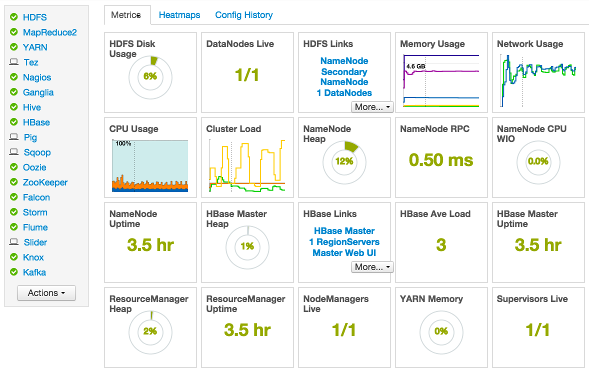

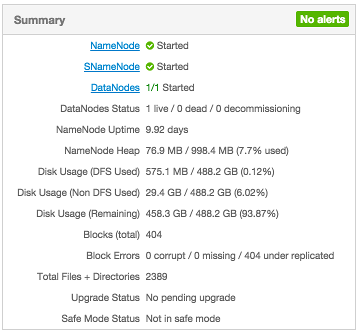

Scanning System Metrics

View Metrics that indicate the operating status of your cluster on the Ambari Dashboard. Each metrics widget displays status information for a single service in your HDP cluster. The Ambari Dashboard displays all metrics for the HDFS, YARN, HBase, and Storm services, and cluster-wide metrics by default.

You can add and remove individual widgets, and rearrange the dashboard by dragging and dropping each widget to a new location in the dashboard.

Status information appears as simple pie and bar charts, more complex charts showing usage and load, sets of links to additional data sources, and values for operating parameters such as uptime and average RPC queue wait times. Most widgets display a single fact by default. For example, HDFS Disk Usage displays a load chart and a percentage figure. The Ambari Dashboard includes metrics for the following services:

|

Metric: |

Description: |

|---|---|

|

HDFS |

|

|

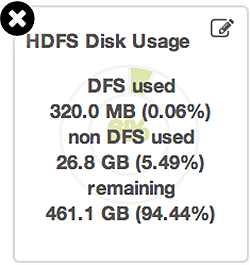

HDFS Disk Usage |

The Percentage of DFS used, which is a combination of DFS and non-DFS used. |

|

Data Nodes Live |

The number of DataNodes live, as reported from the NameNode. |

|

NameNode Heap |

The percentage of NameNode JVM Heap used. |

|

NameNode RPC |

The average RPC queue latency. |

|

NameNode CPU WIO |

The percentage of CPU Wait I/O. |

|

NameNode Uptime |

The NameNode uptime calculation. |

|

YARN (HDP 2.1 or later Stacks) |

|

|

ResourceManager Heap |

The percentage of ResourceManager JVM Heap used. |

|

ResourceManager Uptime |

The ResourceManager uptime calculation. |

|

NodeManagers Live |

The number of DataNodes live, as reported from the ResourceManager. |

|

YARN Memory |

The percentage of available YARN memory (used vs. total available). |

|

HBase |

|

|

HBase Master Heap |

The percentage of NameNode JVM Heap used. |

|

HBase Ave Load |

The average load on the HBase server. |

|

HBase Master Uptime |

The HBase Master uptime calculation. |

|

Region in Transition |

The number of HBase regions in transition. |

|

Storm (HDP 2.1 or later Stacks) |

|

|

Supervisors Live |

The number of Supervisors live, as reported from the Nimbus server. |

Drilling Into Metrics for a Service

- To see more detailed information about a service, hover your cursor over a Metrics widget. More detailed information about the service displays, as shown in the following example:
- To remove a widget from the dashboard, click the white X.
- To edit the display of information in a widget, click the pencil icon. For more information about editing a widget, see Customizing Metrics Display.

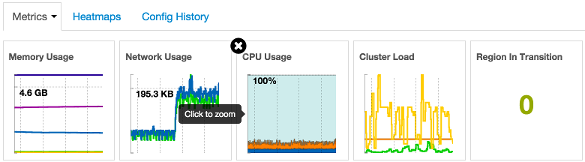

Viewing Cluster-Wide Metrics

Cluster-wide metrics display information that represents your whole cluster. The Ambari Dashboard shows the following cluster-wide metrics:

|

Metric: |

Description: |

|---|---|

|

Memory Usage |

The cluster-wide memory utilization, including memory cached, swapped, used, shared. |

|

Network Usage |

The cluster-wide network utilization, including in-and-out. |

|

CPU Usage |

Cluster-wide CPU information, including system, user and wait IO. |

|

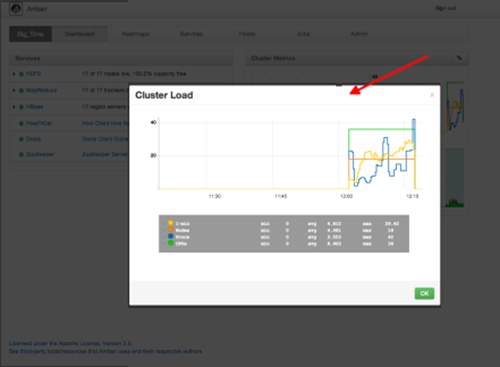

Cluster Load |

Cluster-wide Load information, including total number of nodes. total number of CPUs, number of running processes and 1-min Load. |

- To remove a widget from the dashboard, click the white X.
- Hover your cursor over each cluster-wide metric to magnify the chart or itemize the widget display.
- To remove or add metric items from each cluster-wide metric widget, select the item on the widget legend.
- To see a larger view of the chart, select the magnifying glass icon. Ambari displays a larger version of the widget in a pop-out window, as shown in the following example:

Use the pop-up window in the same ways that you use cluster-wide metric widgets on the dashboard.

To close the widget pop-up window, choose OK.

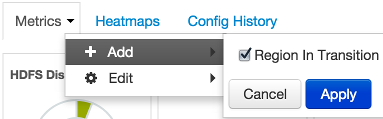

Adding a Widget to the Dashboard

To replace a widget that has been removed from the dashboard:

-

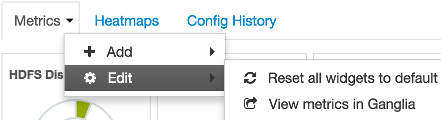

Select the Metrics drop-down, as shown in the following example:

-

Choose Add.

-

Select a metric, such as Region in Transition.

-

Choose Apply.

Resetting the Dashboard

To reset all widgets on the dashboard to display default settings:

- Select the Metrics drop-down, as shown in the following example:
- Choose Edit.
- Choose Reset all widgets to default.

Customizing Metrics Display

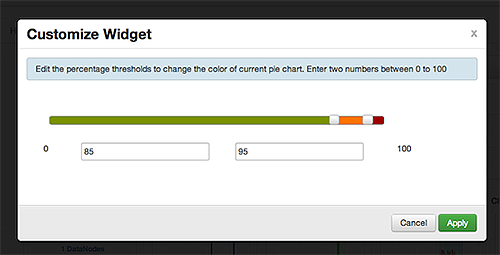

To customize the way a service widget displays metrics information:

- Hover your cursor over a service widget.
- Select the pencil-shaped edit icon that appears in the upper-right corner. The Customize Widget pop-up window displays properties that you can edit, as shown in the following example.
- Follow the instructions in the Customize Widget pop-up to customize widget appearance. In this example, you can adjust the thresholds at which the HDFS Capacity bar chart changes color, from green to orange to red.
- To save your changes and close the editor, choose Apply.
- To close the editor without saving any changes, choose Cancel.

Viewing More Metrics for your HDP Stack

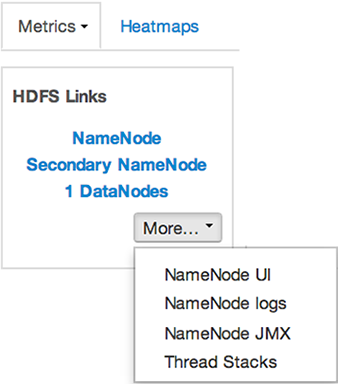

The HDFS Links and HBase Links widgets list HDP components for which links to more metrics information, such as thread stacks, logs and native component UIs are available. For example, you can link to NameNode, Secondary NameNode, and DataNode components for HDFS, using the links shown in the following example:

Choose the More drop-down to select from the list of links available for each service. The Ambari Dashboard includes additional links to metrics for the following services:

| Service | Metric | Description |
|---|---|---|
| HDFS | | |
| | NameNode UI | Links to the NameNode UI. |
| | NameNode Logs | Links to the NameNode logs. |
| | NameNode JMX | Links to the NameNode JMX servlet. |
| | Thread Stacks | Links to the NameNode thread stack traces. |
| HBase | | |
| | HBase Master UI | Links to the HBase Master UI. |
| | HBase Logs | Links to the HBase logs. |
| | ZooKeeper Info | Links to ZooKeeper information. |
| | HBase Master JMX | Links to the HBase Master JMX servlet. |
| | Debug Dump | Links to debug information. |
| | Thread Stacks | Links to the HBase Master thread stack traces. |

Viewing Heatmaps

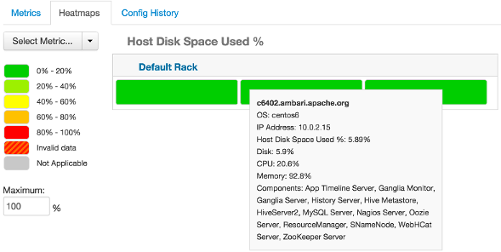

Heatmaps provides a graphical representation of your overall cluster utilization using simple color coding.

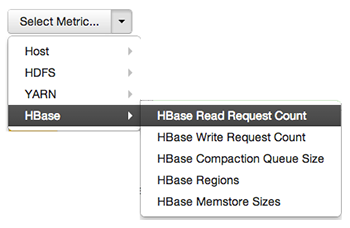

A colored block represents each host in your cluster. To see more information about a specific host, hover over the block representing the host in which you are interested. A pop-up window displays metrics about HDP components installed on that host. Colors displayed in the block represent usage in a unit appropriate for the selected set of metrics. If any data necessary to determine state is not available, the block displays "Invalid Data". Changing the default maximum values for the heatmap lets you fine tune the representation. Use the Select Metric drop-down to select the metric type.

Heatmaps supports the following metrics:

| Metric | Uses |
|---|---|
| Host/Disk Space Used % | disk.disk_free and disk.disk_total |
| Host/Memory Used % | memory.mem_free and memory.mem_total |
| Host/CPU Wait I/O % | cpu.cpu_wio |
| HDFS/Bytes Read | dfs.datanode.bytes_read |
| HDFS/Bytes Written | dfs.datanode.bytes_written |
| HDFS/Garbage Collection Time | jvm.gcTimeMillis |
| HDFS/JVM Heap Memory Used | jvm.memHeapUsedM |
| YARN/Garbage Collection Time | jvm.gcTimeMillis |
| YARN/JVM Heap Memory Used | jvm.memHeapUsedM |
| YARN/Memory Used % | UsedMemoryMB and AvailableMemoryMB |
| HBase/RegionServer read request count | hbase.regionserver.readRequestsCount |
| HBase/RegionServer write request count | hbase.regionserver.writeRequestsCount |
| HBase/RegionServer compaction queue size | hbase.regionserver.compactionQueueSize |
| HBase/RegionServer regions | hbase.regionserver.regions |
| HBase/RegionServer memstore sizes | hbase.regionserver.memstoreSizeMB |
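Several heatmap metrics are percentages derived from a pair of raw values. The following sketch shows one plausible way to compute them. The formulas are assumptions (this guide names the inputs but not the arithmetic), and both function names are hypothetical.

```python
def percent_used(free, total):
    """Percentage used given free and total amounts in the same unit.

    Assumed formula for Host/Disk Space Used % (disk.disk_free, disk.disk_total)
    and Host/Memory Used % (memory.mem_free, memory.mem_total).
    Returns None when total is not positive, mirroring "Invalid Data".
    """
    if total <= 0:
        return None
    return 100.0 * (total - free) / total


def yarn_memory_used_percent(used_mb, available_mb):
    """Assumed formula for YARN/Memory Used %: used over (used + available)."""
    total = used_mb + available_mb
    if total <= 0:
        return None
    return 100.0 * used_mb / total


if __name__ == "__main__":
    print(percent_used(free=25, total=100))
    print(yarn_memory_used_percent(used_mb=1024, available_mb=3072))
```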

Scanning Status

Notice the color of the dot appearing next to each component name in a list of components, services, or hosts. The dot color and blinking action indicate the operating status of each component, service, or host. For example, in the Summary View, notice the green dot next to each service name. The following colors and actions indicate service status:

| Color | Status |
|---|---|
| Solid Green | All masters are running |
| Blinking Green | Starting up |
| Solid Red | At least one master is down |
| Blinking Red | Stopping |

Click the service name to open the Services screen, where you can see more detailed information on each service.

Managing Hosts

Use Ambari Hosts to manage multiple HDP components such as DataNodes, NameNodes, NodeManagers and RegionServers, running on hosts throughout your cluster. For example, you can restart all DataNode components, optionally controlling that task with rolling restarts. Ambari Hosts supports filtering your selection of host components, based on operating status, host health, and defined host groupings.

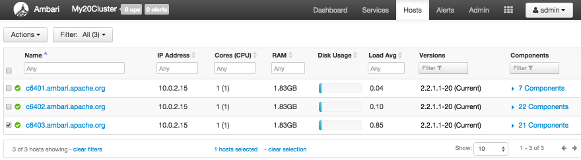

Working with Hosts

Use Hosts to view hosts in your cluster on which Hadoop services run. Use options on Actions to perform actions on one or more hosts in your cluster.

View individual hosts, listed by fully-qualified domain name, on the Hosts landing page.

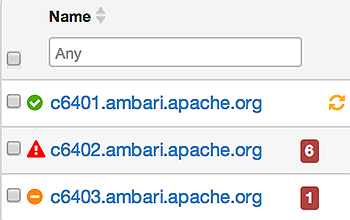

Determining Host Status

A colored dot beside each host name indicates operating status of each host, as follows:

- Red - At least one master component on that host is down. Hover to see a tooltip that lists affected components.
- Orange - At least one slave component on that host is down. Hover to see a tooltip that lists affected components.
- Yellow - Ambari Server has not received a heartbeat from that host for more than 3 minutes.
- Green - Normal running state.

A red condition flag overrides an orange condition flag, which overrides a yellow condition flag. In other words, a host having a master component down may also have other issues. The following example shows three hosts, one having a master component down, one having a slave component down, and one healthy. Warning indicators appear next to hosts having a component down.
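The precedence rule above (red over orange over yellow, otherwise green) can be sketched as a small function. This is an illustrative model of the rule as described here, not Ambari's implementation; the function and parameter names are hypothetical.

```python
HEARTBEAT_TIMEOUT_SECONDS = 3 * 60  # "more than 3 minutes" without a heartbeat


def host_status_color(master_down, slave_down, seconds_since_heartbeat):
    """Return the dot color for a host, checking the strongest condition first."""
    if master_down:
        return "red"      # overrides orange and yellow
    if slave_down:
        return "orange"   # overrides yellow
    if seconds_since_heartbeat > HEARTBEAT_TIMEOUT_SECONDS:
        return "yellow"
    return "green"


if __name__ == "__main__":
    # A host with a master down is red even if it also has other issues.
    print(host_status_color(True, True, 600))
    print(host_status_color(False, False, 30))
```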

Filtering the Hosts List

Use Filters to limit listed hosts to only those having a specific operating status. The number of hosts in your cluster having a listed operating status appears after each status name, in parentheses. For example, the following cluster has one host having healthy status and three hosts having Maintenance Mode turned on.

For example, to limit the list of hosts appearing on Hosts home to only those with Healthy status, select Filters, then choose the Healthy option. In this case, one host name appears on Hosts home. Alternatively, to limit the list of hosts appearing on Hosts home to only those having Maintenance Mode on, select Filters, then choose the Maintenance Mode option. In this case, three host names appear on Hosts home.

Use the general filter tool to apply specific search and sort criteria that limits the list of hosts appearing on the Hosts page.

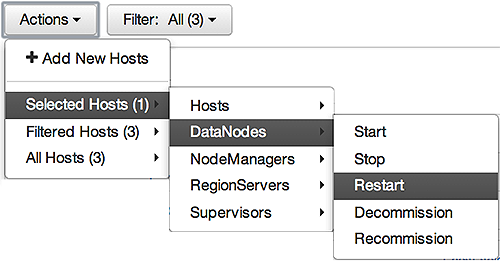

Performing Host-Level Actions

Use Actions to act on one or more hosts in your cluster. Actions performed on multiple hosts are also known as bulk operations.

Actions comprises three menus that list the following option types:

- Hosts - lists selected, filtered or all hosts options, based on your selections made using Hosts home and Filters.
- Objects - lists component objects that match your host selection criteria.
- Operations - lists all operations available for the component objects you selected.

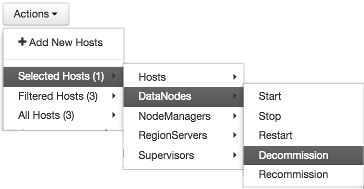

For example, to restart DataNodes on one host:

- In Hosts, select a host running at least one DataNode.
- In Actions, choose Selected Hosts > DataNodes > Restart, as shown in the following image.
- Choose OK to confirm starting the selected operation.
- Optionally, use Monitoring Background Operations to follow, diagnose or troubleshoot the restart operation.

Viewing Components on a Host

To manage components running on a specific host, choose a FQDN on the Hosts page. For example, choose c6403.ambari.apache.org in the default example shown. Summary-Components lists all components installed on that host.

Choose options in Host Actions to start, stop, restart, delete, or turn on maintenance mode for all components installed on the selected host.

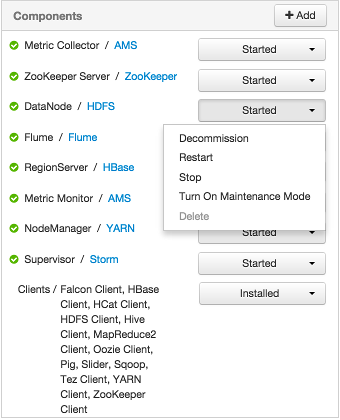

Alternatively, choose action options from the drop-down menu next to an individual component on a host. The drop-down menu shows current operation status for each component. For example, you can decommission, restart, or stop the DataNode component (started) for HDFS, by selecting one of the options shown in the following example:

Decommissioning Masters and Slaves

Decommissioning is a process that supports removing a component from the cluster. You must decommission a master or slave running on a host before removing the component or host from service. Decommissioning helps prevent potential loss of data or service disruption. Decommissioning is available for the following component types:

- DataNodes
- NodeManagers
- RegionServers

Decommissioning executes the following tasks:

- For DataNodes, safely replicates the HDFS data to other DataNodes in the cluster.
- For NodeManagers, stops accepting new job requests from the masters and stops the component.
- For RegionServers, turns on drain mode and stops the component.

How to Decommission a Component

To decommission a component using Ambari Web, browse Hosts to find the host FQDN on which the component resides.

Using Actions, select Hosts > Component Type, then choose Decommission.

For example:

The UI shows "Decommissioning" status while steps process, then "Decommissioned" when complete.

How to Delete a Component

To delete a component using Ambari Web, on Hosts choose the host FQDN on which the component resides.

- In Components, find a decommissioned component.
- Stop the component, if necessary.
- For a decommissioned component, choose Delete from the component drop-down menu.

Deleting a slave component, such as a DataNode, does not automatically inform a master component, such as a NameNode, to remove the slave component from its exclusion list. As a result, if you add a deleted slave component back into the cluster, the added slave remains decommissioned from the master's perspective. As a workaround, restart the master component.

Deleting a Host from a Cluster

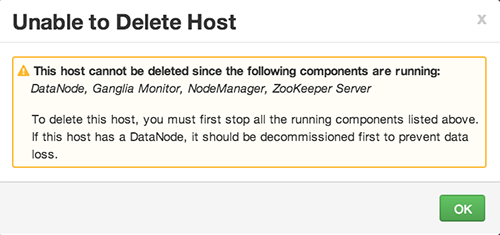

Deleting a host removes the host from the cluster. Before deleting a host, you must complete the following prerequisites:

- Stop all components running on the host.
- Decommission any DataNodes running on the host.
- Move from the host any master components, such as NameNode or ResourceManager, running on the host.
- Turn Off Maintenance Mode, if necessary, for the host.
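The prerequisites above can be read as a checklist. The following sketch models that checklist against a hypothetical snapshot of a host's state; `HostSnapshot` and `unmet_delete_prerequisites` are illustrative names, not Ambari data structures or API calls.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HostSnapshot:
    """Hypothetical summary of one host's state (not an Ambari structure)."""
    running_components: List[str] = field(default_factory=list)
    datanodes_not_decommissioned: List[str] = field(default_factory=list)
    master_components: List[str] = field(default_factory=list)
    maintenance_mode_on: bool = False


def unmet_delete_prerequisites(host: HostSnapshot) -> List[str]:
    """Return the prerequisites that still block deleting the host."""
    problems = []
    if host.running_components:
        problems.append("Stop all components: " + ", ".join(host.running_components))
    if host.datanodes_not_decommissioned:
        problems.append("Decommission DataNodes on the host")
    if host.master_components:
        problems.append("Move master components: " + ", ".join(host.master_components))
    if host.maintenance_mode_on:
        problems.append("Turn Off Maintenance Mode for the host")
    return problems


if __name__ == "__main__":
    host = HostSnapshot(running_components=["DataNode"], maintenance_mode_on=True)
    for problem in unmet_delete_prerequisites(host):
        print(problem)
```

An empty result means all four listed prerequisites are satisfied in the snapshot.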

How to Delete a Host from a Cluster

- In Hosts, click on a host name.
- On the Host-Details page, select the Host Actions drop-down menu.
- Choose Delete.

If you have not completed prerequisite steps, a warning message similar to the following one appears:

Setting Maintenance Mode

Maintenance Mode supports suppressing alerts and skipping bulk operations for specific services, components and hosts in an Ambari-managed cluster. You typically turn on Maintenance Mode when performing hardware or software maintenance, changing configuration settings, troubleshooting, decommissioning, or removing cluster nodes. You may place a service, component, or host object in Maintenance Mode before you perform necessary maintenance or troubleshooting tasks.

Maintenance Mode affects a service, component, or host object in the following two ways:

- Maintenance Mode suppresses alerts, warnings and status change indicators generated for the object.
- Maintenance Mode exempts an object from host-level or service-level bulk operations.

Explicitly turning on Maintenance Mode for a service implicitly turns on Maintenance Mode for components and hosts that run the service. While Maintenance Mode On prevents bulk operations being performed on the service, component, or host, you may explicitly start and stop a service, component, or host having Maintenance Mode On.
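The interaction between explicit and implicit Maintenance Mode can be sketched as a simple model: a host component is treated as being in Maintenance Mode if it was turned on for the component itself, for its host, or for its service, and bulk operations skip such components. This is an illustrative model of the behavior described here, with hypothetical names; it does not model the implicit effect on hosts, and it is not how Ambari stores this state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostComponent:
    name: str
    host: str
    service: str


def in_maintenance_mode(component, services_on, hosts_on, components_on):
    """True if Maintenance Mode applies explicitly or implicitly."""
    return (
        component.service in services_on
        or component.host in hosts_on
        or (component.host, component.name) in components_on
    )


def bulk_targets(components, services_on=frozenset(), hosts_on=frozenset(),
                 components_on=frozenset()):
    """Components a bulk operation would act on (those not in Maintenance Mode)."""
    return [
        c for c in components
        if not in_maintenance_mode(c, services_on, hosts_on, components_on)
    ]


if __name__ == "__main__":
    namenode = HostComponent("NameNode", "c6401", "HDFS")
    datanode = HostComponent("DataNode", "c6403", "HDFS")
    zookeeper = HostComponent("ZooKeeper Server", "c6401", "ZooKeeper")
    # With Maintenance Mode on for HDFS, only the ZooKeeper Server remains a target.
    print(bulk_targets([namenode, datanode, zookeeper], services_on={"HDFS"}))
```

An explicit start or stop of a single component is outside this model, which matches the text: Maintenance Mode blocks bulk operations only.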

Setting Maintenance Mode for Services, Components, and Hosts

For example, examine using Maintenance Mode in a 3-node, Ambari-managed cluster installed using default options. This cluster has one data node, on host c6403. This example describes how to explicitly turn on Maintenance Mode for the HDFS service, alternative procedures for explicitly turning on Maintenance Mode for a host, and the implicit effects of turning on Maintenance Mode for a service, a component and a host.

How to Turn On Maintenance Mode for a Service

- Using Services, select HDFS.
- Select Service Actions, then choose Turn On Maintenance Mode.
- Choose OK to confirm.

Notice, on Services Summary, that Maintenance Mode turns on for the NameNode and SNameNode components.

How to Turn On Maintenance Mode for a Host

- Using Hosts, select c6401.ambari.apache.org.
- Select Host Actions, then choose Turn On Maintenance Mode.
- Choose OK to confirm.

Notice, on Components, that Maintenance Mode turns on for all components.

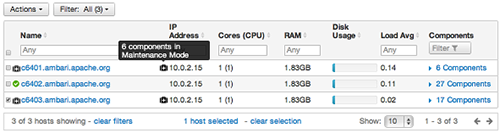

How to Turn On Maintenance Mode for a Host (alternative using filtering for hosts)

- Using Hosts, select c6403.ambari.apache.org.
- In Actions > Selected Hosts > Hosts, choose Turn On Maintenance Mode.
- Choose OK to confirm. Notice that Maintenance Mode turns on for host c6403.ambari.apache.org.

Your list of Hosts now shows Maintenance Mode On for hosts c6401 and c6403.

- Hover your cursor over each Maintenance Mode icon appearing in the Hosts list.
  - Notice that hosts c6401 and c6403 have Maintenance Mode On.
  - Notice that on host c6401, HBaseMaster, HDFS client, NameNode, and ZooKeeper Server have Maintenance Mode turned On.
  - Notice that on host c6402, HDFS client and Secondary NameNode have Maintenance Mode On.
  - Notice that on host c6403, 15 components have Maintenance Mode On.
- The following behavior also results:
  - Alerts are suppressed for the DataNode.
  - DataNode is skipped from HDFS Start/Stop/Restart All, Rolling Restart.
  - DataNode is skipped from all Bulk Operations except Turn Maintenance Mode ON/OFF.
  - DataNode is skipped from Start All and Stop All components.
  - DataNode is skipped from a host-level restart/restart all/stop all/start.

Maintenance Mode Use Cases

Four common Maintenance Mode Use Cases follow:

- You want to perform hardware, firmware, or OS maintenance on a host.

  You want to:

  - Prevent alerts generated by all components on this host.
  - Be able to stop, start, and restart each component on the host.
  - Prevent host-level or service-level bulk operations from starting, stopping, or restarting components on this host.

  To achieve these goals, turn On Maintenance Mode explicitly for the host. Putting a host in Maintenance Mode implicitly puts all components on that host in Maintenance Mode.

- You want to test a service configuration change. You will stop, start, and restart the service using a rolling restart to test whether restarting picks up the change.

  You want:

  - No alerts generated by any components in this service.
  - To prevent host-level or service-level bulk operations from starting, stopping, or restarting components in this service.

  To achieve these goals, turn on Maintenance Mode explicitly for the service. Putting a service in Maintenance Mode implicitly turns on Maintenance Mode for all components in the service.

- You turn off a service completely.

  You want:

  - The service to generate no warnings.
  - To ensure that no components start, stop, or restart due to host-level actions or bulk operations.

  To achieve these goals, turn On Maintenance Mode explicitly for the service. Putting a service in Maintenance Mode implicitly turns on Maintenance Mode for all components in the service.

- A host component is generating alerts.

  You want to:

  - Check the component.
  - Assess warnings and alerts generated for the component.
  - Prevent alerts generated by the component while you check its condition.

  To achieve these goals, turn on Maintenance Mode explicitly for the host component. Putting a host component in Maintenance Mode prevents host-level and service-level bulk operations from starting or restarting the component. You can restart the component explicitly while Maintenance Mode is on.

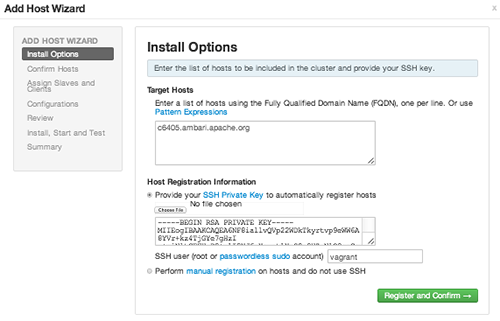

Adding Hosts to a Cluster

To add new hosts to your cluster, browse to the Hosts page and select Actions > +Add New Hosts. The Add Host Wizard provides a sequence of prompts similar to those in the Ambari Install Wizard. Follow the prompts, providing information similar to that provided to define the first set of hosts in your cluster.

Managing Services

Use Services to monitor and manage selected services running in your Hadoop cluster.

All services installed in your cluster are listed in the leftmost Services panel.

Services supports the following tasks:

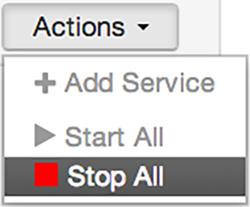

Starting and Stopping All Services

To start or stop all listed services at once, select Actions, then choose Start All or Stop All, as shown in the following example:

Selecting a Service

Selecting a service name from the list shows current summary, alert, and health information for the selected service. To refresh the monitoring panels and show information about a different service, select a different service name from the list.

Notice the colored dot next to each service name, indicating service operating status and a small, red, numbered rectangle indicating any alerts generated for the service.

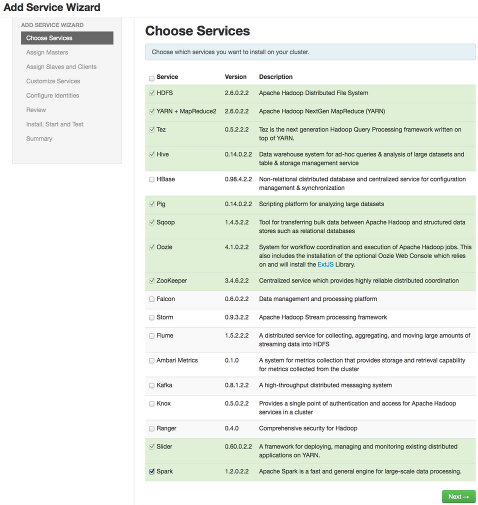

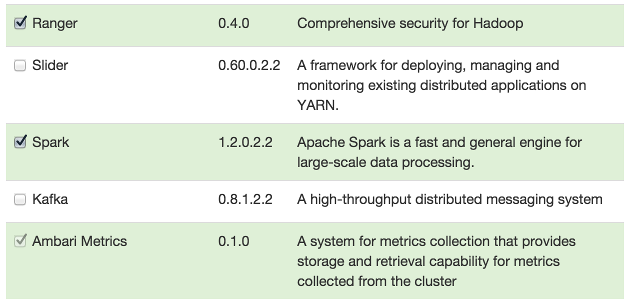

Adding a Service

The Ambari install wizard installs all available Hadoop services by default. You may choose to deploy only some services initially, then add other services at later times. For example, many customers deploy only core Hadoop services initially. Add Service supports deploying additional services without interrupting operations in your Hadoop cluster. When you have deployed all available services, Add Service appears disabled.

For example, if you are using HDP 2.2 Stack and did not install Falcon or Storm, you can use the Add Service capability to add those services to your cluster.

To add a service, select Actions > Add Service, then complete the following procedure using the Add Service Wizard.

Adding a Service to your Hadoop cluster

This example shows the Falcon service selected for addition.

-

Choose

Services.Choose an available service. Alternatively, choose all to add all available services to your cluster. Then, choose Next. The Add Service wizard displays installed services highlighted green and check-marked, not available for selection.

For more information about installing Ranger, see Installing Ranger.

For more information about Installing Spark, see Installing Spark. -

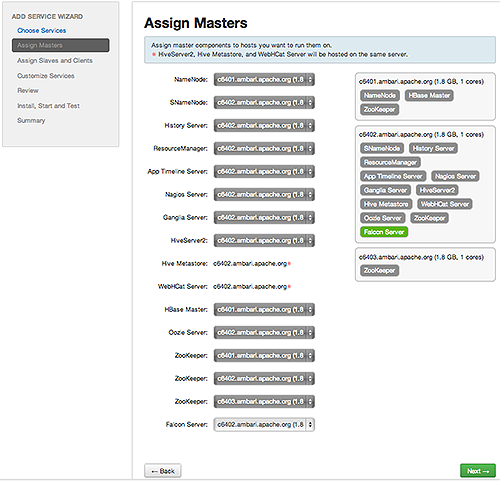

In

Assign Masters, confirm the default host assignment. Alternatively, choose a different host machine to which master components for your selected service will be added. Then, choose Next.The Add Services Wizard indicates hosts on which the master components for a chosen service will be installed. A service chosen for addition shows a grey check mark.

Using the drop-down, choose an alternate host name, if necessary.-

A green label located on the host to which its master components will be added, or

-

An active drop-down list on which available host names appear.

-

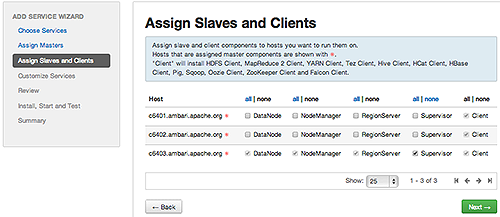

-

In

Assign Slaves and Clients, accept the default assignment of slave and client components to hosts. Then, choose Next.Alternatively, select hosts on which you want to install slave and client components. You must select at least one host for the slave of each service being added.

Service Added

Host Role Required

YARN

NodeManager

HBase

RegionServer

Host Roles Required for Added Services

The Add Service Wizard skips and disables the Assign Slaves and Clients step for a service requiring no slave nor client assignment.

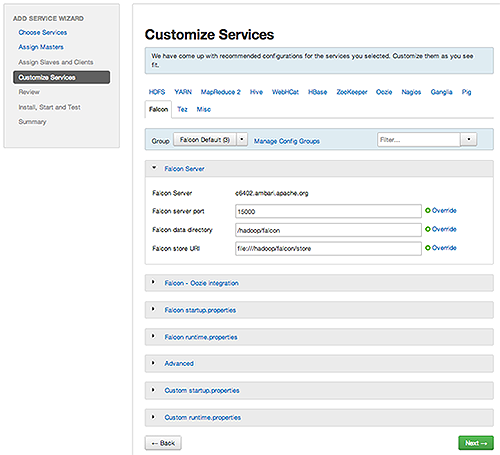

-

In

Customize Services, accept the default configuration properties.Alternatively, edit the default values for configuration properties, if necessary. Choose Override to create a configuration group for this service. Then, choose Next.

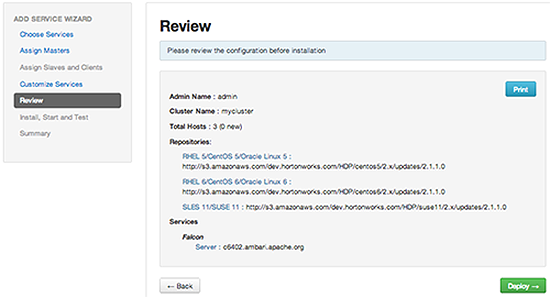

-

In Review, make sure the configuration settings match your intentions. Then, choose Deploy.

-

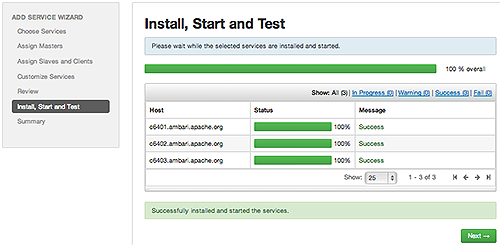

Monitor the progress of installing, starting, and testing the service. When the service installs and starts successfully, choose Next.

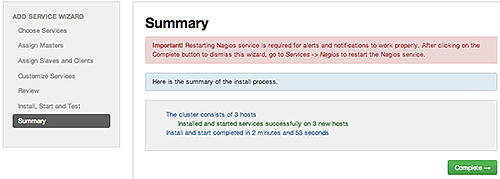

-

Summary displays the results of installing the service. Choose Complete.

-

Restart any other components having stale configurations.

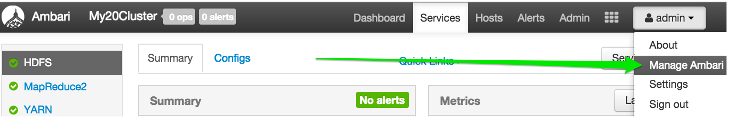

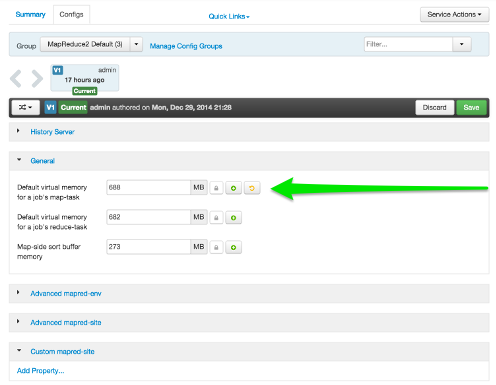

Editing Service Config Properties

Select a service, then select Configs to view and update configuration properties for the selected service. For example, select MapReduce2, then select Configs. Expand a config category to view configurable service properties. For example, select General to configure Default virtual memory for a job's map task.

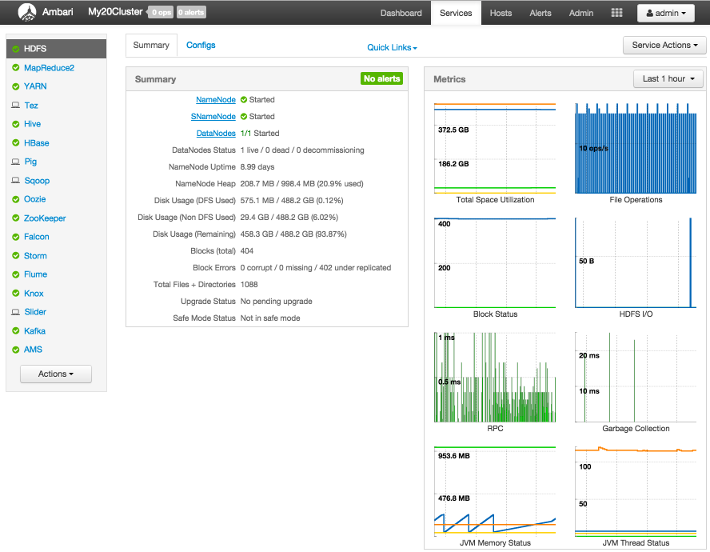

Viewing Summary, Alert, and Health Information

After you select a service, the Summary tab displays basic information about the selected service.

Select one of the View Host links, as shown in the following example, to view components and the host on which the selected service is running.

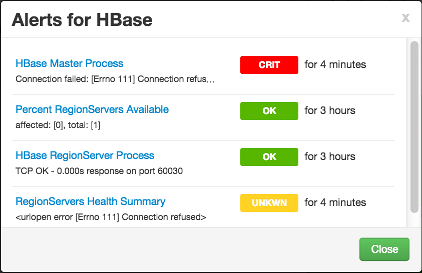

Alerts and Health Checks

On each Service page, in the Summary area, click Alerts to see a list of all health checks and their status for the selected service. Critical alerts are shown first. Click the text title of each alert message in the list to see the alert definition. For example, on Services > HBase, click Alerts. Then, in Alerts for HBase, click HBase Master Process.

Analyzing Service Metrics

Review visualizations in Metrics that chart common metrics for a selected service. Services > Summary displays metrics widgets for the HDFS, HBase, and Storm services. For more information about using metrics widgets, see Scanning System Metrics.

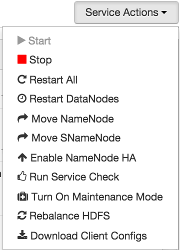

Performing Service Actions

Manage a selected service on your cluster by performing service actions. In Services, select the Service Actions drop-down menu, then choose an option. Available options depend on the service you have selected. For example, HDFS service action options include:

Optionally, choose Turn On Maintenance Mode to suppress alerts generated by a service before performing a service action. Maintenance Mode suppresses alerts and status indicator changes generated by the service, while allowing you to start, stop, restart, move, or perform maintenance tasks on the service. For more information about how Maintenance Mode affects bulk operations for host components, see Setting Maintenance Mode.

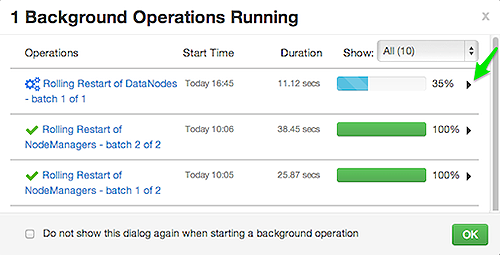

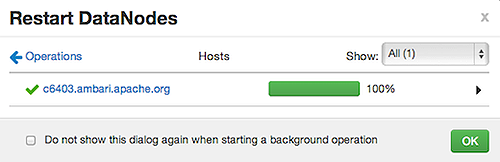

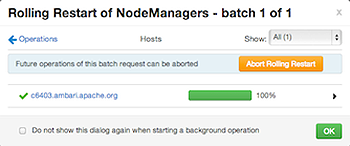

Monitoring Background Operations

Optionally, use Background Operations to monitor progress and completion of bulk operations such as rolling restarts.

Background Operations opens by default when you run a job that executes bulk operations.

-

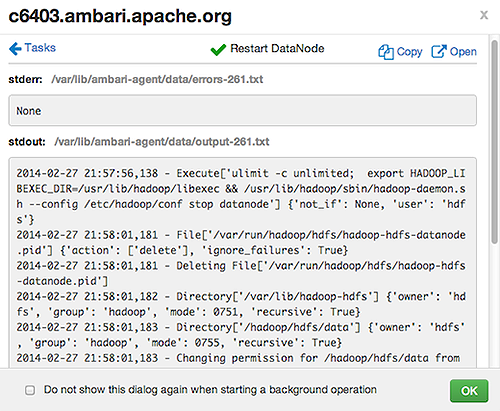

Select the right-arrow for each operation to show restart operation progress on each host.

-

After restarts complete, Select the right-arrow, or a host name, to view log files and any error messages generated on the selected host.

-

Select links at the upper-right to copy or open text files containing log and error information.

Optionally, select the option to not show the bulk operations dialog.

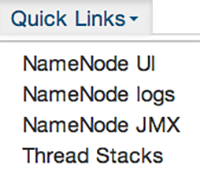

Using Quick Links

Select Quick Links options to access additional sources of information about a selected service. For example, HDFS Quick Links options include the native NameNode GUI, NameNode logs, the NameNode JMX output, and thread stacks for the HDFS service. Quick Links are not available for every service.

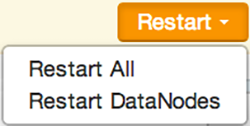

Rolling Restarts

When you restart multiple services, components, or hosts, use rolling restarts to distribute the task, minimizing cluster downtime and service disruption. A rolling restart stops, then starts, multiple running slave components such as DataNodes, NodeManagers, RegionServers, or Supervisors, using a batch sequence. You set rolling restart parameter values to control the batch size, the time between batches, the tolerance for failures, and limits for restarts of many components across large clusters.

To run a rolling restart:

- Select a Service, then link to a list of specific components or hosts that Require Restart.

- Select Restart, then choose a slave component option.

- Review and set values for Rolling Restart Parameters.

- Optionally, reset the flag to only restart components with changed configurations.

- Choose Trigger Restart.

Use Monitoring Background Operations to monitor progress of rolling restarts.

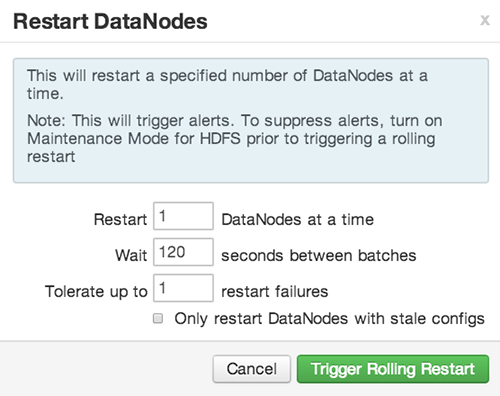

Setting Rolling Restart Parameters

When you choose to restart slave components, use parameters to control how restarts of components roll. Default parameter values are based on ten percent of the total number of components in your cluster. For example, the default settings for a rolling restart of components in a 3-node cluster restart one component at a time, wait two minutes between restarts, proceed if only one failure occurs, and restart all existing components that run this service.

If you trigger a rolling restart of components, Restart components with stale configs defaults to true. If you trigger a rolling restart of services, Restart services with stale configs defaults to false.

Rolling restart parameter values must satisfy the following criteria:

| Parameter | Required | Value | Description |
|---|---|---|---|
| Batch Size | Yes | Must be an integer > 0 | Number of components to include in each restart batch. |
| Wait Time | Yes | Must be an integer >= 0 | Time (in seconds) to wait between queuing each batch of components. |
| Tolerate up to x failures | Yes | Must be an integer >= 0 | Total number of restart failures to tolerate, across all batches, before halting the restarts and not queuing batches. |
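The criteria in the table above can be checked before you enter values in Ambari Web. The following is a minimal sketch, not part of Ambari: the function names `is_non_negative_integer`, `validate_rolling_restart_params`, and `batch_count` are hypothetical, and the batch calculation simply assumes that components are restarted in groups of Batch Size until none remain.

```shell
# Hypothetical helpers for illustration; these names are not part of Ambari.

# Succeeds when the argument consists only of decimal digits.
is_non_negative_integer() {
  case "$1" in
    '' | *[!0-9]*) return 1 ;;
    *) return 0 ;;
  esac
}

# Checks the three required parameters against the table:
# Batch Size > 0, Wait Time >= 0, Tolerate up to x failures >= 0.
validate_rolling_restart_params() {
  local batch_size="$1" wait_time="$2" tolerated_failures="$3"
  is_non_negative_integer "$batch_size" || return 1
  [ "$batch_size" -gt 0 ] || return 1
  is_non_negative_integer "$wait_time" || return 1
  is_non_negative_integer "$tolerated_failures" || return 1
  return 0
}

# Prints how many batches are needed to restart all components,
# rounding up when the total is not a multiple of the batch size.
batch_count() {
  local total="$1" size="$2"
  echo $(( (total + size - 1) / size ))
}
```

For example, `validate_rolling_restart_params 1 120 1` succeeds, and `batch_count 3 1` reports that three components restarted one at a time need three batches.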

Aborting a Rolling Restart

To abort future restart operations in the batch, choose Abort Rolling Restart.

Refreshing YARN Capacity Scheduler

After you modify the Capacity Scheduler configuration, YARN supports refreshing the queues without requiring you to restart your ResourceManager. The “refresh” operation is valid if you have made no destructive changes to your configuration. Removing a queue is an example of a destructive change.

How to refresh the YARN Capacity Scheduler

This topic describes how to refresh the Capacity Scheduler in cases where you have added or modified existing queues.

- In Ambari Web, browse to Services > YARN > Summary.

- Select Service Actions, then choose Refresh YARN Capacity Scheduler.

- Confirm you would like to perform this operation.

The refresh operation is submitted to the YARN ResourceManager.

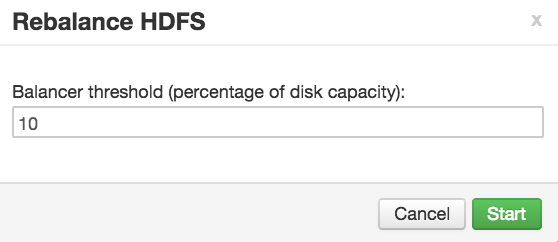

Rebalancing HDFS

HDFS provides a “balancer” utility to help balance the blocks across DataNodes in the cluster.

How to rebalance HDFS

This topic describes how you can initiate an HDFS rebalance from Ambari.

- In Ambari Web, browse to Services > HDFS > Summary.

- Select Service Actions, then choose Rebalance HDFS.

- Enter the Balance Threshold value as a percentage of disk capacity.

- Click Start to begin the rebalance.

- You can check rebalance progress or cancel a rebalance in process by opening the Background Operations dialog.

Managing Service High Availability

Ambari provides the ability to configure the High Availability features available with the HDP Stack services. This section describes how to enable HA for the various Stack services.

NameNode High Availability

To ensure that a NameNode in your cluster is always available if the primary NameNode host fails, enable and set up NameNode High Availability on your cluster using Ambari Web.

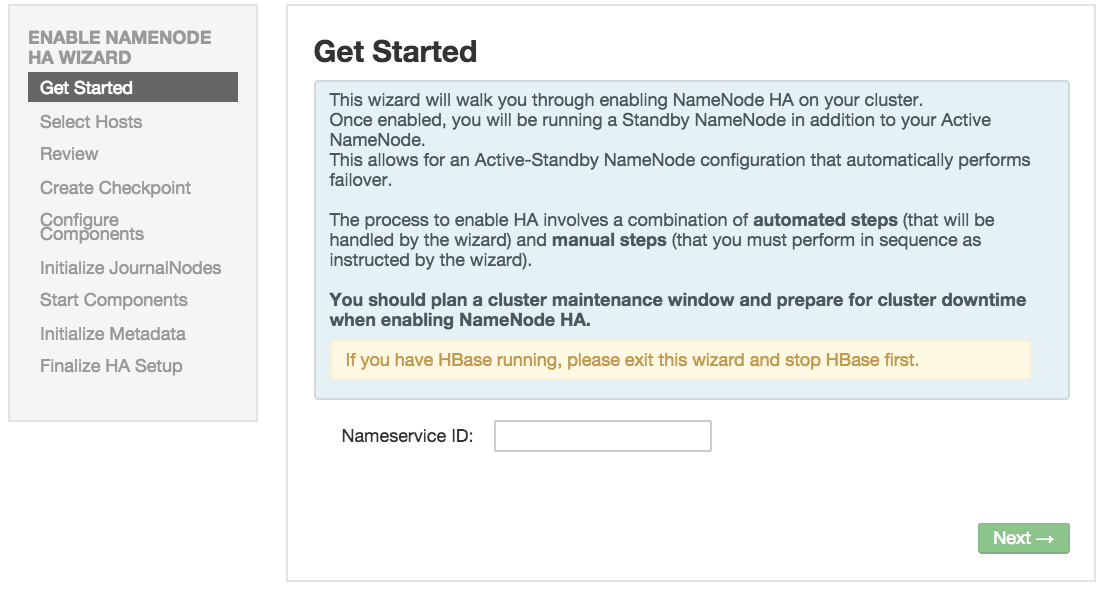

Follow the steps in the Enable NameNode HA Wizard.

For more information about using the Enable NameNode HA Wizard, see How to Configure NameNode High Availability.

How To Configure NameNode High Availability

- Check to make sure you have at least three hosts in your cluster and are running at least three ZooKeeper servers.

- In Ambari Web, select Services > HDFS > Summary.

- Select Service Actions and choose Enable NameNode HA.

- The Enable HA Wizard launches. This wizard describes the set of automated and manual steps you must take to set up NameNode high availability.

-

Get Started : This step gives you an overview of the process and allows you to select a Nameservice ID. You use this Nameservice ID instead of the NameNode FQDN once HA has been set up. Click

Nextto proceed.

-

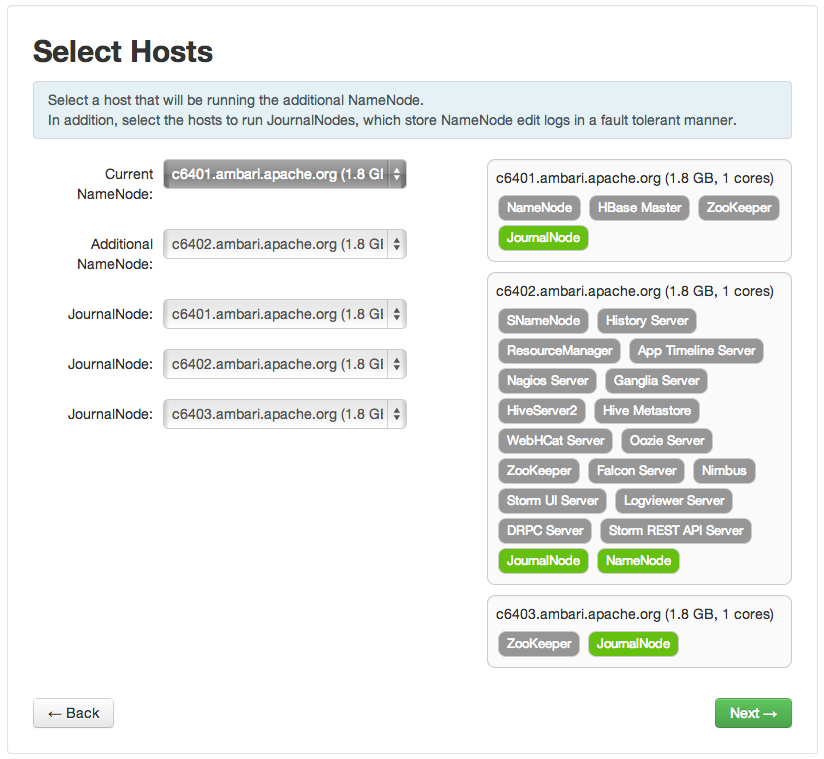

Select Hosts : Select a host for the additional NameNode and the JournalNodes. The wizard suggest options that you can adjust using the drop-down lists. Click

Nextto proceed.

-

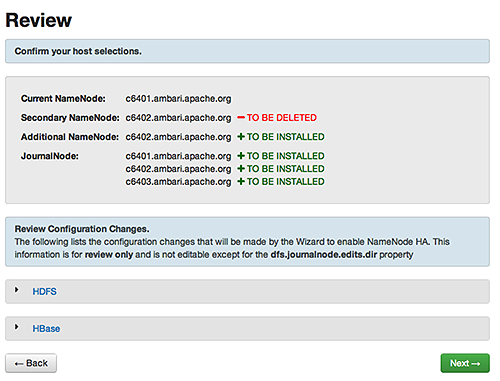

Review : Confirm your host selections and click

Next.

-

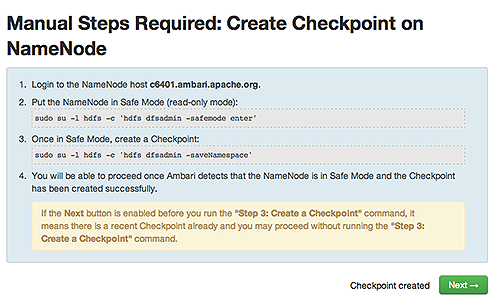

Create Checkpoints : Follow the instructions in the step. You need to log in to your current NameNode host to run the commands to put your NameNode into safe mode and create a checkpoint. When Ambari detects success, the message on the bottom of the window changes. Click

Next.

-

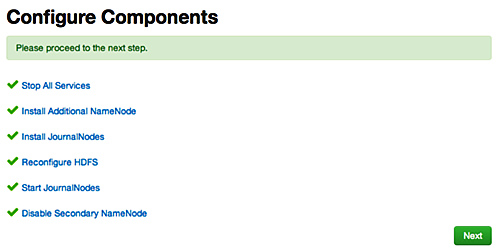

Configure Components : The wizard configures your components, displaying progress bars to let you track the steps. Click

Nextto continue.

-

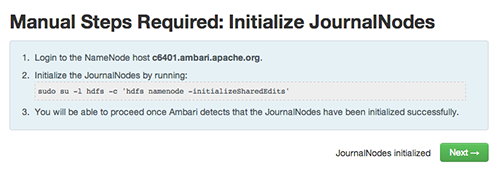

Initialize JournalNodes : Follow the instructions in the step. You need to login to your current NameNode host to run the command to initialize the JournalNodes. When Ambari detects success, the message on the bottom of the window changes. Click Next.

-

Start Components : The wizard starts the ZooKeeper servers and the NameNode, displaying progress bars to let you track the steps. Click

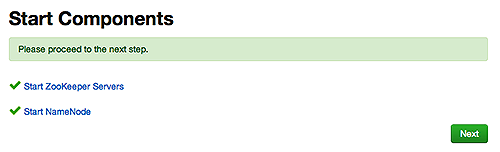

Nextto continue.

-

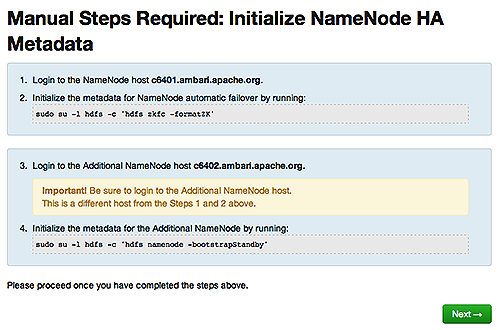

Initialize Metadata : Follow the instructions in the step. For this step you must log in to both the current NameNode and the additional NameNode. Make sure you are logged in to the correct host for each command. Click

Nextwhen you have completed the two commands. A Confirmation pop-up window displays, reminding you to do both steps. ClickOKto confirm.

-

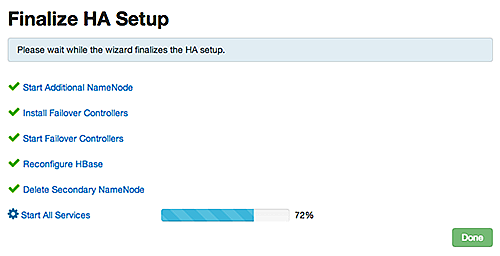

Finalize HA Setup : The wizard the setup, displaying progress bars to let you track the steps. Click

Doneto finish the wizard. After the Ambari Web GUI reloads, you may see some alert notifications. Wait a few minutes until the services come back up. If necessary, restart any components using Ambari Web.

- If you are using Hive, you must manually change the Hive Metastore FS root to point to the Nameservice URI instead of the NameNode URI. You created the Nameservice ID in the Get Started step.

  - Check the current FS root. On the Hive host:

    hive --config /etc/hive/conf.server --service metatool -listFSRoot

    The output looks similar to the following:

    Listing FS Roots...

    hdfs://<namenode-host>/apps/hive/warehouse

  - Use this command to change the FS root:

    $ hive --config /etc/hive/conf.server --service metatool -updateLocation <new-location> <old-location>

    For example:

    $ hive --config /etc/hive/conf.server --service metatool -updateLocation hdfs://mycluster/apps/hive/warehouse hdfs://c6401.ambari.apache.org/apps/hive/warehouse

    The output looks similar to the following:

    Successfully updated the following locations...

    Updated X records in SDS table

- Adjust the ZooKeeper Failover Controller retries setting for your environment.

  - Browse to Services > HDFS > Configs > core-site.

  - Set ha.failover-controller.active-standby-elector.zk.op.retries=120

How to Roll Back NameNode HA

To roll back NameNode HA to the previous non-HA state use the following step-by-step manual process, depending on your installation.

Stop HBase

- From Ambari Web, go to the Services view and select HBase.

- Choose Service Actions > Stop.

- Wait until HBase has stopped completely before continuing.

Checkpoint the Active NameNode

If HDFS has been in use after you enabled NameNode HA, but you wish to revert to a non-HA state, you must checkpoint the HDFS state before proceeding with the rollback.

If the Enable NameNode HA wizard failed and you need to revert, you can skip this step and move on to Stop All Services.

- If Kerberos security has not been enabled on the cluster:

  On the Active NameNode host, execute the following commands to save the namespace. You must be the HDFS service user to do this.

  sudo su -l <HDFS_USER> -c 'hdfs dfsadmin -safemode enter'

  sudo su -l <HDFS_USER> -c 'hdfs dfsadmin -saveNamespace'

- If Kerberos security has been enabled on the cluster:

  sudo su -l <HDFS_USER> -c 'kinit -kt /etc/security/keytabs/nn.service.keytab nn/<HOSTNAME>@<REALM>;hdfs dfsadmin -safemode enter'

  sudo su -l <HDFS_USER> -c 'kinit -kt /etc/security/keytabs/nn.service.keytab nn/<HOSTNAME>@<REALM>;hdfs dfsadmin -saveNamespace'

Where <HDFS_USER> is the HDFS service user (for example, hdfs), <HOSTNAME> is the Active NameNode hostname, and <REALM> is your Kerberos realm.

Stop All Services

Browse to Ambari Web > Services, then choose Stop All in the Services navigation panel. You must wait until all the services are completely stopped.

Prepare the Ambari Server Host for Rollback

Log into the Ambari server host and set the following environment variables to prepare for the rollback procedure:

| Variable | Value |
|---|---|
| export AMBARI_USER=AMBARI_USERNAME | Substitute the value of the administrative user for Ambari Web. The default value is admin. |
| export AMBARI_PW=AMBARI_PASSWORD | Substitute the value of the administrative password for Ambari Web. The default value is admin. |
| export AMBARI_PORT=AMBARI_PORT | Substitute the Ambari Web port. The default value is 8080. |
| export AMBARI_PROTO=AMBARI_PROTOCOL | Substitute the value of the protocol for connecting to Ambari Web. Options are http or https. The default value is http. |
| export CLUSTER_NAME=CLUSTER_NAME | Substitute the name of your cluster, set during the Ambari Install Wizard process. For example: mycluster. |
| export NAMENODE_HOSTNAME=NN_HOSTNAME | Substitute the FQDN of the host for the non-HA NameNode. For example: nn01.mycompany.com. |
| export ADDITIONAL_NAMENODE_HOSTNAME=ANN_HOSTNAME | Substitute the FQDN of the host for the additional NameNode in your HA setup. |
| export SECONDARY_NAMENODE_HOSTNAME=SNN_HOSTNAME | Substitute the FQDN of the host for the standby NameNode for the non-HA setup. |
| export JOURNALNODE1_HOSTNAME=JOUR1_HOSTNAME | Substitute the FQDN of the host for the first Journal Node. |
| export JOURNALNODE2_HOSTNAME=JOUR2_HOSTNAME | Substitute the FQDN of the host for the second Journal Node. |
| export JOURNALNODE3_HOSTNAME=JOUR3_HOSTNAME | Substitute the FQDN of the host for the third Journal Node. |

Double check that these environment variables are set correctly.
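One way to double check the variables is a small shell function that reports any that are unset or empty. This is only a sketch: `check_rollback_env` is a hypothetical name, and the check relies on the variables having been exported, as in the table above, so that `printenv` can see them.

```shell
# Hypothetical helper: reports rollback variables that are unset or empty.
# Returns 0 when every variable has a value, 1 otherwise.
check_rollback_env() {
  local name missing=0
  for name in AMBARI_USER AMBARI_PW AMBARI_PORT AMBARI_PROTO CLUSTER_NAME \
      NAMENODE_HOSTNAME ADDITIONAL_NAMENODE_HOSTNAME \
      SECONDARY_NAMENODE_HOSTNAME JOURNALNODE1_HOSTNAME \
      JOURNALNODE2_HOSTNAME JOURNALNODE3_HOSTNAME; do
    # printenv prints nothing for a variable that is not exported.
    if [ -z "$(printenv "$name")" ]; then
      echo "Missing or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_rollback_env` after the exports prints one line per missing variable to standard error. It does not verify that the values are correct for your cluster.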

Restore the HBase Configuration

If you have installed HBase, you may need to restore a configuration to its pre-HA state.

- To check if your current HBase configuration needs to be restored, on the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> get localhost <CLUSTER_NAME> hbase-site

  Where the environment variables you set up in Prepare the Ambari Server Host for Rollback substitute for the variable names.

  Look for the configuration property hbase.rootdir. If the value is set to the NameService ID you set up using the Enable NameNode HA wizard, you need to revert the hbase-site configuration back to non-HA values. If it points instead to a specific NameNode host, it does not need to be rolled back and you can go on to Delete ZooKeeper Failover Controllers.

  For example:

  "hbase.rootdir":"hdfs://<name-service-id>:8020/apps/hbase/data"

  The hbase.rootdir property points to the NameService ID and the value needs to be rolled back.

  "hbase.rootdir":"hdfs://<nn01.mycompany.com>:8020/apps/hbase/data"

  The hbase.rootdir property points to a specific NameNode host and not a NameService ID. This does not need to be rolled back.

- If you need to roll back the hbase.rootdir value, on the Ambari Server host, use the configs.sh script to make the necessary change:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> set localhost <CLUSTER_NAME> hbase-site hbase.rootdir hdfs://<NAMENODE_HOSTNAME>:8020/apps/hbase/data

  Where the environment variables you set up in Prepare the Ambari Server Host for Rollback substitute for the variable names.

- Verify that the hbase.rootdir property has been restored properly. On the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> get localhost <CLUSTER_NAME> hbase-site

  The hbase.rootdir property should now be set to the NameNode hostname, not the NameService ID.

Delete ZooKeeper Failover Controllers

You may need to delete ZooKeeper (ZK) Failover Controllers.

- To check if you need to delete ZK Failover Controllers, on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=ZKFC

  If this returns an empty items array, you may proceed to Modify HDFS Configurations. Otherwise you must use the following DELETE commands:

- To delete all ZK Failover Controllers, on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X DELETE <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts/<NAMENODE_HOSTNAME>/host_components/ZKFC

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X DELETE <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts/<ADDITIONAL_NAMENODE_HOSTNAME>/host_components/ZKFC

- Verify that the ZK Failover Controllers have been deleted. On the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=ZKFC

  This command should return an empty items array.
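Several checks in this rollback procedure depend on whether a response contains an empty items array. The sketch below shows one way to test that in a script. The function name `items_is_empty` is hypothetical, and it uses a plain text match rather than a JSON parser, so treat it as a convenience check and read the response yourself when in doubt.

```shell
# Hypothetical helper: succeeds when the text on standard input contains
# an empty "items" array. Whitespace is removed first so that both
# "items" : [ ] and "items":[] match.
items_is_empty() {
  tr -d '[:space:]' | grep -q '"items":\[\]'
}

# Possible usage with the variables from Prepare the Ambari Server Host
# for Rollback (not executed here):
#
#   curl -s -u "$AMBARI_USER:$AMBARI_PW" -H "X-Requested-By: ambari" \
#     "$AMBARI_PROTO://localhost:$AMBARI_PORT/api/v1/clusters/$CLUSTER_NAME/host_components?HostRoles/component_name=ZKFC" \
#     | items_is_empty && echo "No ZKFC components found"
```

The same helper applies to any check in this procedure that depends on an empty items array.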

Modify HDFS Configurations

You may need to modify your hdfs-site configuration and/or your core-site configuration.

- To check if you need to modify your hdfs-site configuration, on the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> get localhost <CLUSTER_NAME> hdfs-site

  If you see any of the following properties, you must delete them from your configuration.

  - dfs.nameservices

  - dfs.client.failover.proxy.provider.<NAMESERVICE_ID>

  - dfs.ha.namenodes.<NAMESERVICE_ID>

  - dfs.ha.fencing.methods

  - dfs.ha.automatic-failover.enabled

  - dfs.namenode.http-address.<NAMESERVICE_ID>.nn1

  - dfs.namenode.http-address.<NAMESERVICE_ID>.nn2

  - dfs.namenode.rpc-address.<NAMESERVICE_ID>.nn1

  - dfs.namenode.rpc-address.<NAMESERVICE_ID>.nn2

  - dfs.namenode.shared.edits.dir

  - dfs.journalnode.edits.dir

  - dfs.journalnode.http-address

  - dfs.journalnode.kerberos.internal.spnego.principal

  - dfs.journalnode.kerberos.principal

  - dfs.journalnode.keytab.file

  Where <NAMESERVICE_ID> is the NameService ID you created when you ran the Enable NameNode HA wizard.

- To delete these properties, execute the following for each property you found. On the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> delete localhost <CLUSTER_NAME> hdfs-site property_name

  Where you replace property_name with the name of each of the properties to be deleted.

- Verify that all of the properties have been deleted. On the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> get localhost <CLUSTER_NAME> hdfs-site

  None of the properties listed above should be present.

- To check if you need to modify your core-site configuration, on the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> get localhost <CLUSTER_NAME> core-site

- If you see the property ha.zookeeper.quorum, it must be deleted. On the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> delete localhost <CLUSTER_NAME> core-site ha.zookeeper.quorum

- If the property fs.defaultFS is set to the NameService ID, it must be reverted back to its non-HA value. For example:

  "fs.defaultFS":"hdfs://<name-service-id>"

  The property fs.defaultFS needs to be modified as it points to a NameService ID.

  "fs.defaultFS":"hdfs://<nn01.mycompany.com>"

  The property fs.defaultFS does not need to be changed as it points to a specific NameNode, not to a NameService ID.

- To revert the property fs.defaultFS to the NameNode host value, on the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> set localhost <CLUSTER_NAME> core-site fs.defaultFS hdfs://<NAMENODE_HOSTNAME>

- Verify that the core-site properties are now properly set. On the Ambari Server host:

  /var/lib/ambari-server/resources/scripts/configs.sh -u <AMBARI_USER> -p <AMBARI_PW> -port <AMBARI_PORT> get localhost <CLUSTER_NAME> core-site

  The property fs.defaultFS should be set to point to the NameNode host and the property ha.zookeeper.quorum should not be there.
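The hdfs-site cleanup earlier in this section repeats one delete command per property, which lends itself to a loop. The following sketch is an illustration only: the function names are hypothetical, the loop reuses the configs.sh invocation shown above with the variables from Prepare the Ambari Server Host for Rollback, and it attempts every listed property, so you may prefer to trim the list to the properties you actually found.

```shell
# Hypothetical helper: prints the HA-related hdfs-site property names from
# the list in this section, one per line, for the given NameService ID.
ha_hdfs_site_properties() {
  local nameservice_id="$1"
  cat <<EOF
dfs.nameservices
dfs.client.failover.proxy.provider.${nameservice_id}
dfs.ha.namenodes.${nameservice_id}
dfs.ha.fencing.methods
dfs.ha.automatic-failover.enabled
dfs.namenode.http-address.${nameservice_id}.nn1
dfs.namenode.http-address.${nameservice_id}.nn2
dfs.namenode.rpc-address.${nameservice_id}.nn1
dfs.namenode.rpc-address.${nameservice_id}.nn2
dfs.namenode.shared.edits.dir
dfs.journalnode.edits.dir
dfs.journalnode.http-address
dfs.journalnode.kerberos.internal.spnego.principal
dfs.journalnode.kerberos.principal
dfs.journalnode.keytab.file
EOF
}

# Sketch of the delete loop; run it only on the Ambari Server host.
delete_ha_hdfs_site_properties() {
  ha_hdfs_site_properties "$1" | while read -r property_name; do
    /var/lib/ambari-server/resources/scripts/configs.sh \
      -u "$AMBARI_USER" -p "$AMBARI_PW" -port "$AMBARI_PORT" \
      delete localhost "$CLUSTER_NAME" hdfs-site "$property_name"
  done
}
```

For example, `ha_hdfs_site_properties mycluster` prints the fifteen property names for a NameService ID of mycluster. The verification step in this section still applies after the loop completes.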

Recreate the Standby NameNode

You may need to recreate your standby NameNode.

- To check to see if you need to recreate the standby NameNode, on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X GET <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=SECONDARY_NAMENODE

  If this returns an empty items array, you must recreate your standby NameNode. Otherwise you can go on to Re-enable the Standby NameNode.

- Recreate your standby NameNode. On the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X POST -d '{"host_components" : [{"HostRoles":{"component_name":"SECONDARY_NAMENODE"}}] }' <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts?Hosts/host_name=<SECONDARY_NAMENODE_HOSTNAME>

- Verify that the standby NameNode now exists. On the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X GET <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=SECONDARY_NAMENODE

  This should return a non-empty items array containing the standby NameNode.

Re-enable the Standby NameNode

To re-enable the standby NameNode, on the Ambari Server host:

curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X PUT -d '{"RequestInfo":{"context":"Enable Secondary NameNode"},"Body":{"HostRoles":{"state":"INSTALLED"}}}' <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts/<SECONDARY_NAMENODE_HOSTNAME>/host_components/SECONDARY_NAMENODE

- If this returns 200, go to Delete All JournalNodes.

- If this returns 202, wait a few minutes and run the following command on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X GET "<AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=SECONDARY_NAMENODE&fields=HostRoles/state"

  When "state" : "INSTALLED" is in the response, go on to the next step.

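When the re-enable request returns 202, the wait-and-check step above can be scripted as a polling loop. This is a sketch under assumptions: `wait_for_installed` is a hypothetical name, the default of ten attempts one minute apart is an arbitrary choice, and the fetch command is whatever function you define to run the GET request shown above.

```shell
# Hypothetical helper: runs a fetch command repeatedly until its output
# contains "state" : "INSTALLED", or gives up after max_attempts tries.
# Arguments: fetch command name, max attempts (default 10),
# delay between attempts in seconds (default 60).
wait_for_installed() {
  local fetch_command="$1" max_attempts="${2:-10}" delay_seconds="${3:-60}"
  local attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    if "$fetch_command" | grep -q '"state" *: *"INSTALLED"'; then
      return 0
    fi
    sleep "$delay_seconds"
    attempt=$((attempt + 1))
  done
  return 1
}

# Possible fetch function using the rollback variables (not executed here):
#
#   fetch_snn_state() {
#     curl -s -u "$AMBARI_USER:$AMBARI_PW" -H "X-Requested-By: ambari" \
#       "$AMBARI_PROTO://localhost:$AMBARI_PORT/api/v1/clusters/$CLUSTER_NAME/host_components?HostRoles/component_name=SECONDARY_NAMENODE&fields=HostRoles/state"
#   }
#   wait_for_installed fetch_snn_state
```

The function returns a non-zero status if the state never reaches INSTALLED, in which case check the component in Ambari Web before continuing.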
Delete All JournalNodes

You may need to delete any JournalNodes.

- To check to see if you need to delete JournalNodes, on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X GET <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=JOURNALNODE

  If this returns an empty items array, you can go on to Delete the Additional NameNode. Otherwise you must delete the JournalNodes.

- To delete the JournalNodes, on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X DELETE <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts/<JOURNALNODE1_HOSTNAME>/host_components/JOURNALNODE

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X DELETE <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts/<JOURNALNODE2_HOSTNAME>/host_components/JOURNALNODE

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X DELETE <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts/<JOURNALNODE3_HOSTNAME>/host_components/JOURNALNODE

- Verify that all the JournalNodes have been deleted. On the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X GET <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=JOURNALNODE

  This should return an empty items array.
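The three JournalNode DELETE commands differ only in the host name, so they can be expressed as a loop over the JournalNode variables. The sketch below assumes the variables from Prepare the Ambari Server Host for Rollback are exported. The function names `host_component_url` and `delete_journalnodes` are hypothetical.

```shell
# Hypothetical helper: builds the host component URL used by the DELETE
# commands in this procedure.
# Arguments: protocol, port, cluster name, host name, component name.
host_component_url() {
  printf '%s://localhost:%s/api/v1/clusters/%s/hosts/%s/host_components/%s\n' \
    "$1" "$2" "$3" "$4" "$5"
}

# Sketch of the three JournalNode deletions as one loop; run it only on
# the Ambari Server host after the rollback variables are exported.
delete_journalnodes() {
  local host
  for host in "$JOURNALNODE1_HOSTNAME" "$JOURNALNODE2_HOSTNAME" \
      "$JOURNALNODE3_HOSTNAME"; do
    curl -u "$AMBARI_USER:$AMBARI_PW" -H "X-Requested-By: ambari" -i -X DELETE \
      "$(host_component_url "$AMBARI_PROTO" "$AMBARI_PORT" "$CLUSTER_NAME" "$host" JOURNALNODE)"
  done
}
```

The same URL helper fits the ZKFC and NAMENODE deletions in this procedure by changing the host and component arguments.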

Delete the Additional NameNode

You may need to delete your Additional NameNode.

- To check to see if you need to delete your Additional NameNode, on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X GET <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=NAMENODE

  If the items array contains two NameNodes, the Additional NameNode must be deleted.

- To delete the Additional NameNode that was set up for HA, on the Ambari Server host:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X DELETE <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/hosts/<ADDITIONAL_NAMENODE_HOSTNAME>/host_components/NAMENODE

- Verify that the Additional NameNode has been deleted:

  curl -u <AMBARI_USER>:<AMBARI_PW> -H "X-Requested-By: ambari" -i -X GET <AMBARI_PROTO>://localhost:<AMBARI_PORT>/api/v1/clusters/<CLUSTER_NAME>/host_components?HostRoles/component_name=NAMENODE

  This should return an items array that shows only one NameNode.

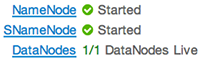

Verify the HDFS Components

Make sure you have the correct components showing in HDFS.

- Go to Ambari Web UI > Services, then select HDFS.

- Check the Summary panel and make sure that the first three lines look like this:

  - NameNode

  - SNameNode

  - DataNodes

  You should not see any line for JournalNodes.

Start HDFS

- In the Ambari Web UI, select Service Actions, then choose Start. Wait until the progress bar shows that the service has completely started and has passed the service checks.

  If HDFS does not start, you may need to repeat the previous step.

- To start all of the other services, select Actions > Start All in the Services navigation panel.

ResourceManager High Availability

The following topic explains How to Configure ResourceManager High Availability.

How to Configure ResourceManager High Availability

-

Check to make sure you have at least three hosts in your cluster and are running at least three ZooKeeper servers.

-

In Ambari Web, browse to

Services > YARN > Summary. SelectService Actionsand chooseEnable ResourceManager HA. -

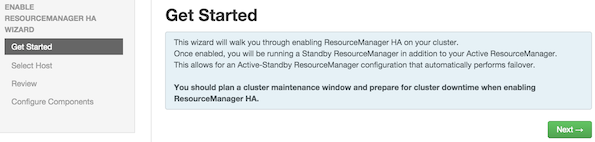

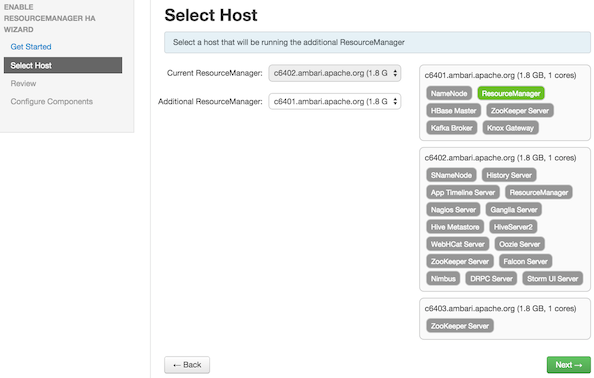

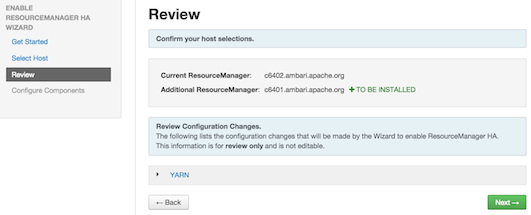

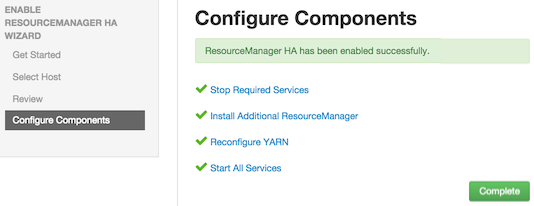

The Enable ResourceManager HA Wizard launches. The wizard describes a set of automated and manual steps you must take to set up ResourceManager High Availability.

-

Get Started: This step gives you an overview of enabling ResourceManager HA. Click

Nextto proceed.

-

Select Host: The wizard shows you the host on which the current ResourceManager is installed and suggests a default host on which to install an additional ResourceManager. Accept the default selection, or choose an available host. Click

Nextto proceed.

-

Review Selections: The wizard shows you the host selections and configuration changes that will occur to enable ResourceManager HA. Expand YARN, if necessary, to review all the YARN configuration changes. Click

Nextto approve the changes and start automatically configuring ResourceManager HA.

-

Configure Components: The wizard configures your components automatically, displaying progress bars to let you track the steps. After all progress bars complete, click

Completeto finish the wizard.

HBase High Availability

During the HBase service install, depending on your component assignment, Ambari installs and configures one HBase Master component and multiple RegionServer components. To set up high availability for the HBase service, you can run two or more HBase Master components by adding an HBase Master component. When two or more HBase Masters are running, HBase uses ZooKeeper to coordinate the active Master.

Adding an HBase Master Component

- In Ambari Web, browse to Services > HBase.

- In Service Actions, select the + Add HBase Master option.

- Choose the host to install the additional HBase Master, then choose Confirm Add.

Ambari installs the new HBase Master and reconfigures HBase to handle multiple Master instances.

Hive High Availability

The Hive service has multiple associated components. The primary Hive components are Hive Metastore and HiveServer2. To set up high availability for the Hive service, you can run two or more of each of those components.

Adding a Hive Metastore Component

- In Ambari Web, browse to Services > Hive.

- In Service Actions, select the + Add Hive Metastore option.

- Choose the host to install the additional Hive Metastore, then choose Confirm Add.

Ambari installs the component and reconfigures Hive to handle multiple Hive Metastore instances.

Adding a HiveServer2 Component

- In Ambari Web, browse to the host where you would like to install another HiveServer2.

- On the Host page, choose +Add.

- Select HiveServer2 from the list.

- Ambari installs the new HiveServer2.

Ambari installs the component and reconfigures Hive to handle multiple Hive Metastore instances.

Oozie High Availability

To set up high availability for the Oozie service, you can run two or more instances of the Oozie Server component.

Adding an Oozie Server Component

- In Ambari Web, browse to the host where you would like to install another Oozie Server.

- On the Host page, click the “+Add” button.

- Select “Oozie Server” from the list and Ambari will install the new Oozie Server.

- After configuring your external Load Balancer, update the Oozie configuration.

- Browse to Services > Oozie > Configs and in oozie-site add the following:

| Property | Value |
|---|---|
| oozie.zookeeper.connection.string | List of ZooKeeper hosts with ports. For example: c6401.ambari.apache.org:2181,c6402.ambari.apache.org:2181,c6403.ambari.apache.org:2181 |
| oozie.services.ext | org.apache.oozie.service.ZKLocksService,org.apache.oozie.service.ZKXLogStreamingService,org.apache.oozie.service.ZKJobsConcurrencyService |
| oozie.base.url | http://<loadbalancer.hostname>:11000/oozie |

- In oozie-env, uncomment the OOZIE_BASE_URL property and change its value to point to the Load Balancer. For example:

  export OOZIE_BASE_URL="http://<loadbalancer.hostname>:11000/oozie"

- Restart the Oozie service for the changes to take effect.

- Update HDFS configs for the Oozie proxy user. Browse to Services > HDFS > Configs and in core-site update the hadoop.proxyuser.oozie.hosts property to include the newly added Oozie Server host. Hosts should be comma separated.

- Restart all needed services.
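The oozie.zookeeper.connection.string value described above is a comma-separated list of host:port pairs, which is easy to mistype by hand. The sketch below builds that string from a port and a list of hosts. The function name `zk_connection_string` is hypothetical, and it assumes every ZooKeeper server listens on the same client port.

```shell
# Hypothetical helper: joins ZooKeeper host names into a comma-separated
# list of host:port pairs. The first argument is the client port and the
# remaining arguments are host names.
zk_connection_string() {
  local port="$1" host result=""
  shift
  for host in "$@"; do
    # Prepend a comma only when the result already holds an entry.
    result="${result:+$result,}$host:$port"
  done
  printf '%s\n' "$result"
}
```

Calling `zk_connection_string 2181 c6401.ambari.apache.org c6402.ambari.apache.org c6403.ambari.apache.org` prints the example value shown for oozie.zookeeper.connection.string.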

Managing Configurations

Use Ambari Web to manage your HDP component configurations. Select any of the following topics:

Configuring Services

Select a service, then select Configs to view and update configuration properties for the selected service. For example, select MapReduce2, then select Configs. Expand a config category to view configurable service properties.

Updating Service Properties

- Expand a configuration category.

- Edit values for one or more properties that have the Override option.

  Edited values, also called stale configs, show an Undo option.

- Choose Save.

Restarting components

After editing and saving a service configuration, Restart indicates components that you must restart.

Select the Components or Hosts links to view details about components or hosts requiring a restart.

Then, choose an option appearing in Restart. For example, options to restart YARN components include:

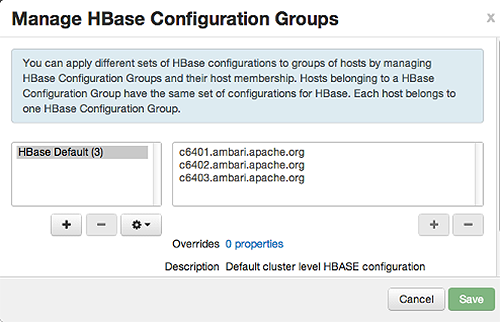

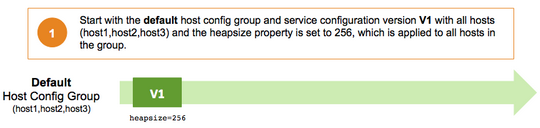

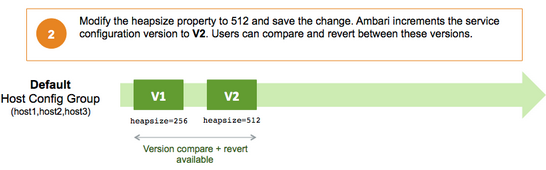

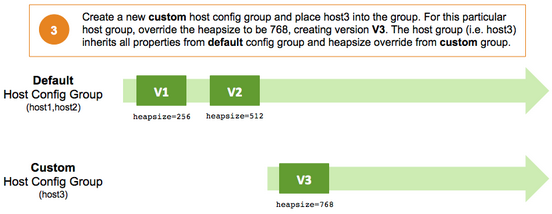

Using Host Config Groups

Ambari initially assigns all hosts in your cluster to one default configuration group for each service you install. For example, after deploying a three-node cluster with default configuration settings, each host belongs to one configuration group that has default configuration settings for the HDFS service. In Configs, select Manage Config Groups to create new groups, re-assign hosts, and override default settings for host components you assign to each group.

To create a Configuration Group:

-

Choose

Add New Configuration Group. -

Name and describe the group, then choose Save.

-

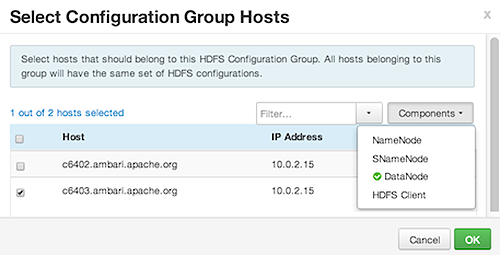

Select a Config Group, then choose Add Hosts to Config Group.

-

Select Components and choose from available Hosts to add hosts to the new group.

Select Configuration Group Hosts enforces host membership in each group, based on installed components for the selected service.

-

Choose OK.

-

In Manage Configuration Groups, choose Save.

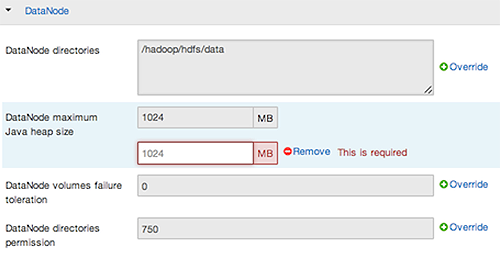

To edit settings for a configuration group:

-

In Configs, choose a Group.

-

Select a Config Group, then expand components to expose settings that allow Override.

-

Provide a non-default value, then choose Override or Save.

Configuration groups enforce configuration properties that allow override, based on installed components for the selected service and group.

-

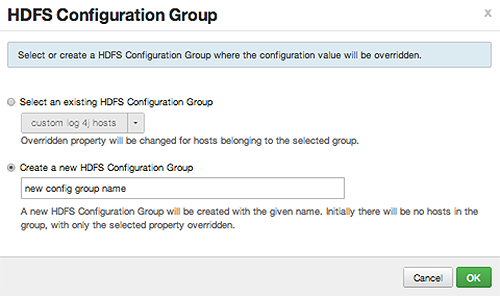

Override prompts you to choose one of the following options:

-

Select an existing configuration group (to which the property value override provided in step 3 will apply), or

-

Create a new configuration group (which will include default properties, plus the property override provided in step 3).

-

Then, choose

OK.

-

-

In Configs, choose Save.

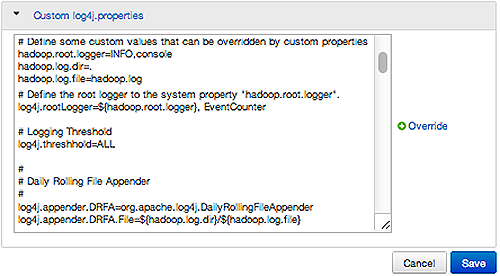

Customizing Log Settings

Ambari Web displays default logging properties in Service Configs > Custom log 4j Properties. Log 4j properties control logging activities for the selected service.

Restarting components in the service pushes the configuration properties displayed in Custom log 4j Properties to each host running components for that service. If you have customized logging properties that define how activities for each service are logged, you will see refresh indicators next to each service name after upgrading to Ambari 1.5.0 or higher. Make sure that logging properties displayed in Custom log 4j Properties include any customization. Optionally, you can create configuration groups that include custom logging properties. For more information about saving and overriding configuration settings, see Editing Service Config Properties.

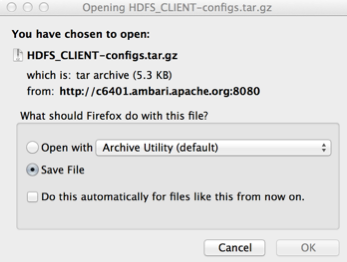

Downloading Client Configs

For Services that include client components (for example Hadoop Client or Hive Client), you can download the client configuration files associated with that client from Ambari.

- In Ambari Web, browse to the Service with the client for which you want the configurations.

- Choose Service Actions.

- Choose Download Client Configs. You are prompted for a location to save the client configs bundle.

- Save the bundle.

Service Configuration Versions

Ambari provides the ability to manage configurations associated with a Service. You can make changes to configurations, see a history of changes, compare and revert changes, and push configuration changes to the cluster hosts.

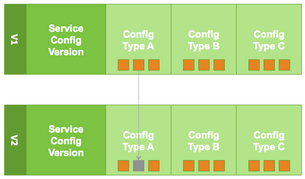

Basic Concepts

It’s important to understand how service configurations are organized and stored in Ambari. Properties are grouped into Configuration Types (config types). A set of config types makes up the set of configurations for a service.

For example, the HDFS Service includes the following config types: hdfs-site, core-site, hdfs-log4j, hadoop-env, hadoop-policy. If you browse to Services > HDFS > Configs, the configuration properties for these config types are available for edit.

Versioning of configurations is performed at the service level. Therefore, when you modify a configuration property in a service, Ambari will create a Service Config Version. The figure below shows V1 and V2 of a Service Configuration Version with a change to a property in Config Type A. After making the property change to Config Type A in V1, V2 is created.
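To make the relationship between properties, config types, and Service Config Versions concrete, here is a small illustrative model. It is a sketch of the concept only, not Ambari's internal implementation; the class names and the property values are assumptions chosen for the example.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class ServiceConfigVersion:
    version: int
    # config type name -> {property name -> value}
    config_types: dict


@dataclass
class ServiceConfigHistory:
    service: str
    versions: list = field(default_factory=list)

    def save(self, config_types: dict) -> ServiceConfigVersion:
        """Store a snapshot of every config type as the next version."""
        scv = ServiceConfigVersion(len(self.versions) + 1, copy.deepcopy(config_types))
        self.versions.append(scv)
        return scv


history = ServiceConfigHistory("HDFS")
v1 = history.save({
    "hdfs-site": {"dfs.replication": "3"},
    "core-site": {"fs.trash.interval": "360"},
})

# Changing one property in one config type yields a new version
# of the whole service configuration.
edited = copy.deepcopy(v1.config_types)
edited["hdfs-site"]["dfs.replication"] = "2"
v2 = history.save(edited)

print(v1.version, v1.config_types["hdfs-site"]["dfs.replication"])
print(v2.version, v2.config_types["hdfs-site"]["dfs.replication"])
```

Note that V1 is left untouched when V2 is created, which is what makes comparing and reverting possible later.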

Terminology

The following table lists configuration versioning terms and concepts that you should know.

| Term | Description |
|---|---|
| Configuration Property | Configuration property managed by Ambari, such as NameNode heapsize or replication factor. |
| Configuration Type (Config Type) | Group of configuration properties. For example: hdfs-site is a Config Type. |
| Service Configurations | Set of configuration types for a particular service. For example: hdfs-site and core-site Config Types are part of the HDFS Service Configuration. |
| Change Notes | Optional notes to save with a service configuration change. |
| Service Config Version (SCV) | Particular version of configurations for a specific service. Ambari saves a history of service configuration versions. |
| Host Config Group (HCG) | Set of configuration properties to apply to a specific set of hosts. Each service has a default Host Config Group, and custom config groups can be created on top of the default configuration group to target property overrides to one or more hosts in the cluster. See Managing Configuration Groups for more information. |
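The way a custom Host Config Group layers overrides on top of the default group can be pictured with a short sketch. The group name, host names, and directory paths below are hypothetical, and the sketch assumes a host belongs to at most one custom group per service.

```python
# Properties in the default Host Config Group (illustrative values).
DEFAULT_GROUP = {
    "dfs.datanode.data.dir": "/hadoop/hdfs/data",
    "dfs.replication": "3",
}

# Hypothetical custom group that overrides one property on two hosts.
CUSTOM_GROUPS = {
    "large-disk-nodes": {
        "hosts": {"host3.example.com", "host4.example.com"},
        "overrides": {"dfs.datanode.data.dir": "/grid/0/hdfs/data,/grid/1/hdfs/data"},
    }
}


def properties_for_host(host: str) -> dict:
    """Start from the default group, then apply overrides from the host's custom group."""
    props = dict(DEFAULT_GROUP)
    for group in CUSTOM_GROUPS.values():
        if host in group["hosts"]:
            props.update(group["overrides"])
    return props


print(properties_for_host("host1.example.com")["dfs.datanode.data.dir"])
print(properties_for_host("host3.example.com")["dfs.datanode.data.dir"])
```

Properties that the custom group does not override, such as the replication factor here, still come from the default group.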

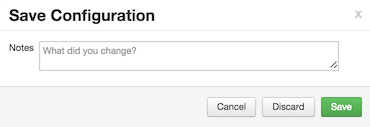

Saving a Change

- Make the configuration property change.

- Choose Save.

- You are prompted to enter notes that describe the change.

- Click Save to confirm your change.

To revert the changes you made and not save, choose Discard.

To return to the configuration page and continue editing without saving changes, choose Cancel.
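Saving a change with notes can also be expressed as a REST request body. The sketch below only builds the body. It assumes that the cluster resource accepts a PUT whose `desired_config` entry carries the config type, a unique tag, the properties, and a `service_config_version_note` field; the helper name `build_config_update` and the note text are hypothetical. Check the field names against the API reference for your Ambari version before relying on them.

```python
import json
import time
from typing import Optional


def build_config_update(config_type: str, properties: dict, note: str,
                        tag: Optional[str] = None) -> dict:
    """Build a request body that saves one config type with change notes."""
    if tag is None:
        # Assumption: tags only need to be unique per config type,
        # so a millisecond timestamp is used here.
        tag = "version{}".format(int(time.time() * 1000))
    return {
        "Clusters": {
            "desired_config": {
                "type": config_type,
                "tag": tag,
                # Assumption: a real request carries every property of the
                # config type; one property is shown here for brevity.
                "properties": properties,
                "service_config_version_note": note,
            }
        }
    }


body = build_config_update(
    "hdfs-site", {"dfs.replication": "2"}, "Lower replication factor", tag="version1"
)
print(json.dumps(body, indent=2))
```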

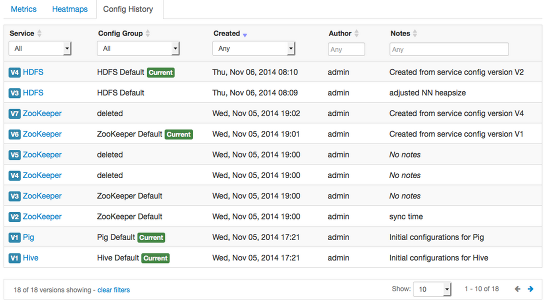

Viewing History

Service Config Version history is available from Ambari Web in two places: on the Dashboard page under the Config History tab, and on each Service page under the Configs tab.

The Dashboard > Config History tab shows a list of all versions across services with each version number and the date and time the version was created. You can also see which user authored the change with the notes entered during save. Using this table, you can filter, sort and search across versions.
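The filter, sort, and search behavior of the history table can be sketched over a handful of records. The records and field names below are illustrative only and do not reflect Ambari's actual schema.

```python
from datetime import datetime

# Illustrative records only; the field names are hypothetical.
HISTORY = [
    {"service": "HDFS", "version": 1, "created": datetime(2015, 3, 1, 9, 0),
     "author": "admin", "notes": "Initial configurations for HDFS"},
    {"service": "YARN", "version": 1, "created": datetime(2015, 3, 1, 9, 5),
     "author": "admin", "notes": "Initial configurations for YARN"},
    {"service": "HDFS", "version": 2, "created": datetime(2015, 3, 4, 14, 30),
     "author": "ops1", "notes": "Increase NameNode heapsize"},
]


def find_versions(records, service=None, author=None, text=None):
    """Filter by service and author, search the notes, and sort newest first."""
    matches = [
        r for r in records
        if (service is None or r["service"] == service)
        and (author is None or r["author"] == author)
        and (text is None or text.lower() in r["notes"].lower())
    ]
    return sorted(matches, key=lambda r: r["created"], reverse=True)


for record in find_versions(HISTORY, service="HDFS"):
    print("V{} {} {} {}".format(
        record["version"], record["created"], record["author"], record["notes"]))
```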

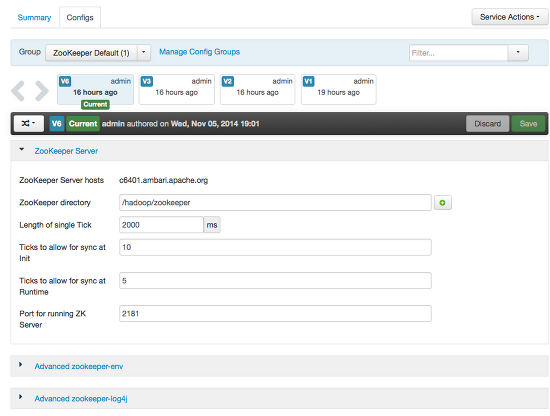

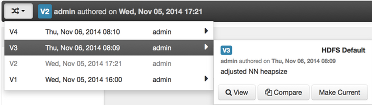

The most recent configuration changes are shown on the Service > Configs tab. Users can navigate the version scrollbar left-right to see earlier versions.

This provides a quick way to access the most recent changes to a service configuration.

Click any version in the scrollbar to view it, and hover over a version to display an option menu that allows you to compare versions and perform a revert. Performing a revert makes any config version that you select the current version.

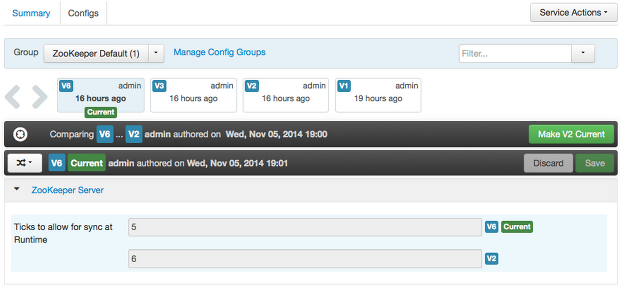

Comparing Versions

When navigating the version scroll area on the Services > Configs tab, you can hover over a version to display options to view, compare or revert.

To compare two service configuration versions:

- Navigate to a specific configuration version. For example, “V6”.

- Using the version scrollbar, find the version you would like to compare against “V6”. For example, if you want to compare V6 to V2, find V2 in the scrollbar.

- Hover over the version to display the option menu. Click “Compare”.

- Ambari displays a comparison of V6 to V2, with an option to revert to V2.

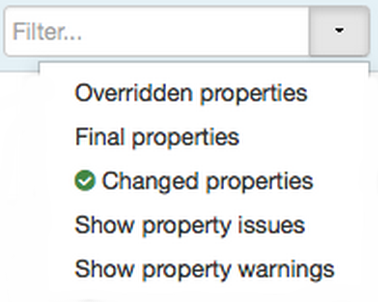

Ambari can also filter the display to show only “Changed properties”. This option is available under the Filter control.
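Conceptually, a comparison limited to changed properties is a dictionary diff between two versions. The following sketch shows the idea; the function name and the property values for V2 and V6 are assumptions made up for the example.

```python
def changed_properties(old: dict, new: dict) -> dict:
    """Return {property: (old_value, new_value)} for every property that differs.

    A property that exists in only one version is reported with None
    on the side where it is missing.
    """
    changes = {}
    for name in sorted(set(old) | set(new)):
        before, after = old.get(name), new.get(name)
        if before != after:
            changes[name] = (before, after)
    return changes


# Illustrative property sets for two versions of hdfs-site.
v2 = {"dfs.replication": "3", "dfs.namenode.handler.count": "40"}
v6 = {"dfs.replication": "2", "dfs.namenode.handler.count": "40",
      "dfs.datanode.max.transfer.threads": "4096"}

for name, (before, after) in changed_properties(v2, v6).items():
    print("{}: {} -> {}".format(name, before, after))
```

Unchanged properties, such as the handler count here, are left out of the result, which mirrors what the filter shows.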

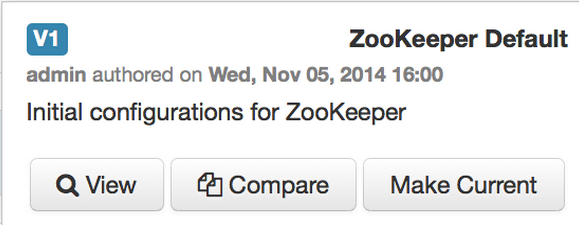

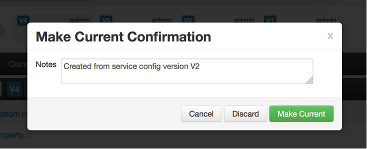

Reverting a Change

You can revert to an older service configuration version by using the “Make Current” feature. “Make Current” creates a new service configuration version with the configuration properties from the version you are reverting to; it is effectively a “clone”. After initiating the Make Current operation, you are prompted to enter notes for the new version (i.e. the clone) and save. The notes text will include text about the version being cloned.

There are multiple methods to revert to a previous configuration version:

- View a specific version and click the “Make V* Current” button.

- Use the version navigation dropdown and click the “Make Current” button.

- Hover on a version in the version scrollbar and click the “Make Current” button.

- Perform a comparison and click the “Make V* Current” button.
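Whichever method you use, the effect is the clone described above: history is never rewritten, and a new version is appended. A minimal sketch of that behavior follows. The data layout and the wording of the generated note are assumptions for illustration, not Ambari's actual format.

```python
import copy


def make_current(versions: list, target_version: int, note: str = "") -> dict:
    """Append a new version that clones the properties of target_version."""
    source = next((v for v in versions if v["version"] == target_version), None)
    if source is None:
        raise ValueError("No such version: V{}".format(target_version))
    clone = {
        "version": max(v["version"] for v in versions) + 1,
        "properties": copy.deepcopy(source["properties"]),
        # Hypothetical note text that records which version was cloned.
        "notes": "Created from service config version V{}. {}".format(
            target_version, note).strip(),
    }
    versions.append(clone)
    return clone


versions = [
    {"version": 1, "properties": {"dfs.replication": "3"}, "notes": "Initial configurations"},
    {"version": 2, "properties": {"dfs.replication": "2"}, "notes": "Lower replication"},
]
new = make_current(versions, 1, "Rolling back the replication change")
print(new["version"], new["properties"], new["notes"])
```

After the call, V1 and V2 remain in the history and V3 holds the same properties as V1.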

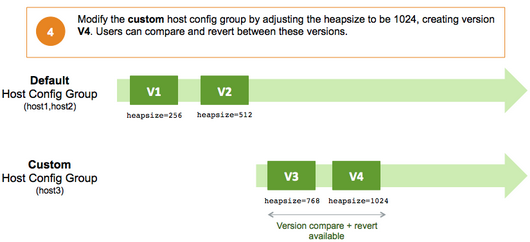

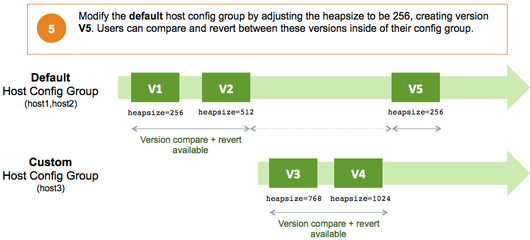

Versioning and Host Config Groups

Service configuration versions are scoped to a host config group. For example, changes made in the default group can be compared and reverted in that config group. The same applies to custom config groups.

The following example describes a flow where you have multiple host config groups and create service configuration versions in each config group.

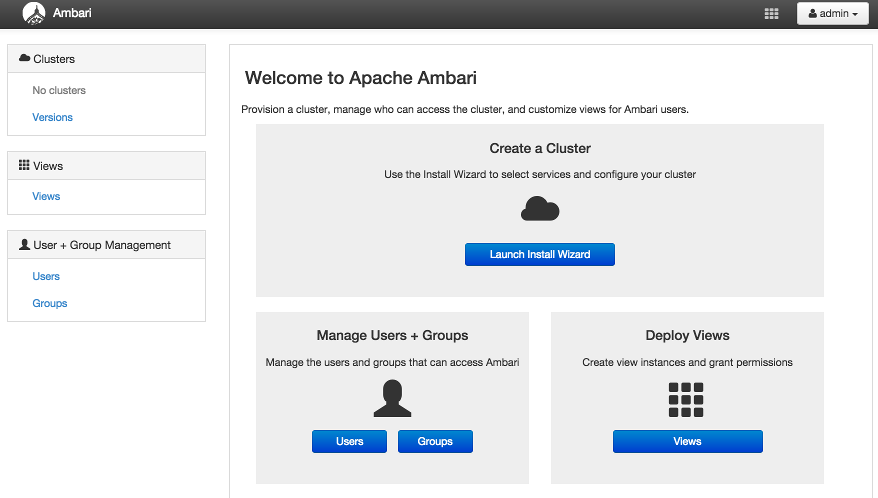

Administering the Cluster

From the cluster dashboard, use the Admin options to manage the Stack and Versions, view Service Accounts, and enable Kerberos security.

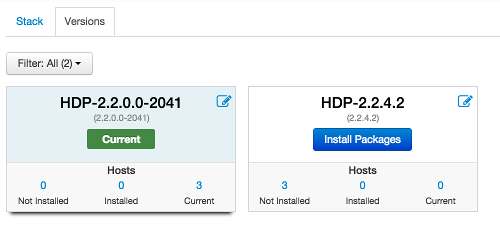

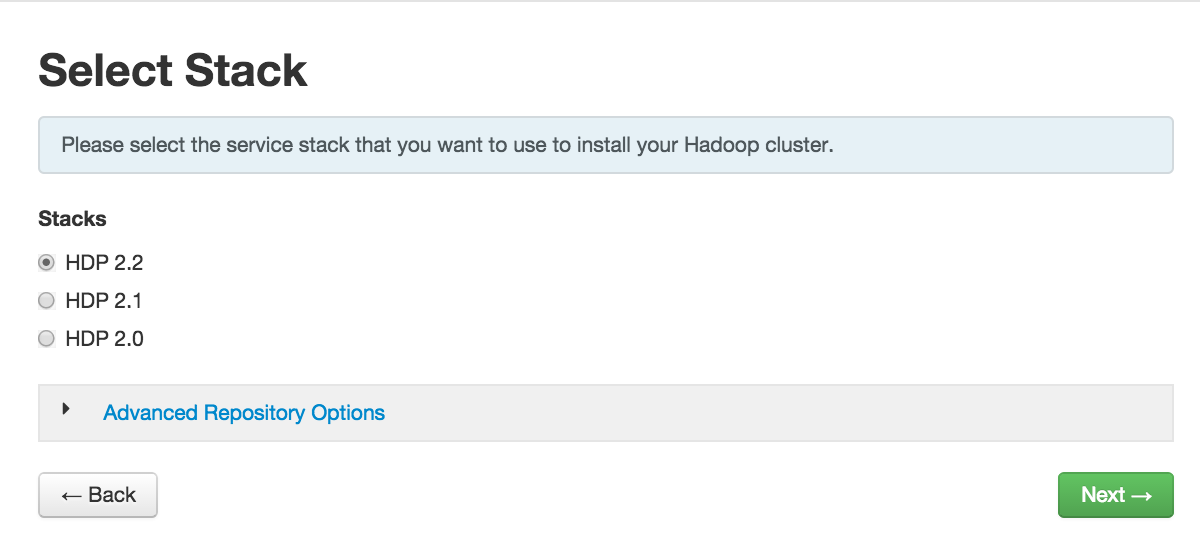

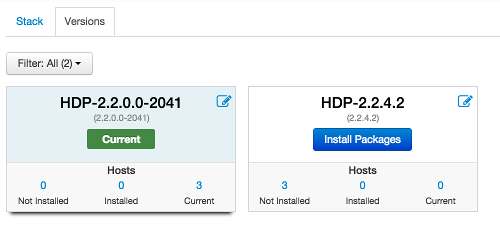

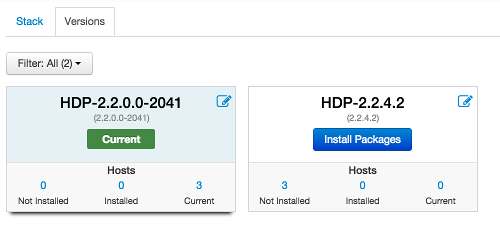

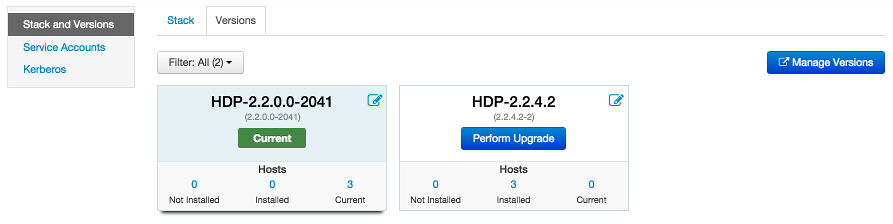

Managing Stack and Versions

The Stack section includes information about the Services installed and available in the cluster Stack. Browse the list of Services and click Add Service to start the wizard to install Services into your cluster.

The Versions section shows what version of software is currently running and installed in the cluster. This section also exposes the capability to perform an automated cluster upgrade for maintenance and patch releases for the Stack. This capability is available for HDP 2.2 Stack only. If you have a cluster running HDP 2.2, you can perform Stack upgrades to later maintenance and patch releases. For example: you can upgrade from the GA release of HDP 2.2 (which is HDP 2.2.0.0) to the first maintenance release of HDP 2.2 (which is HDP 2.2.4.2).

The process for managing versions and performing an upgrade consists of three main steps:

- Register a Version into Ambari

- Install the Version into the Cluster

- Perform Upgrade to the New Version

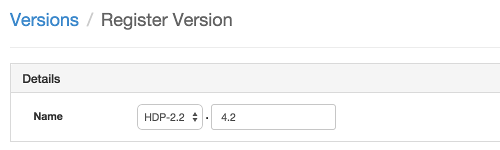

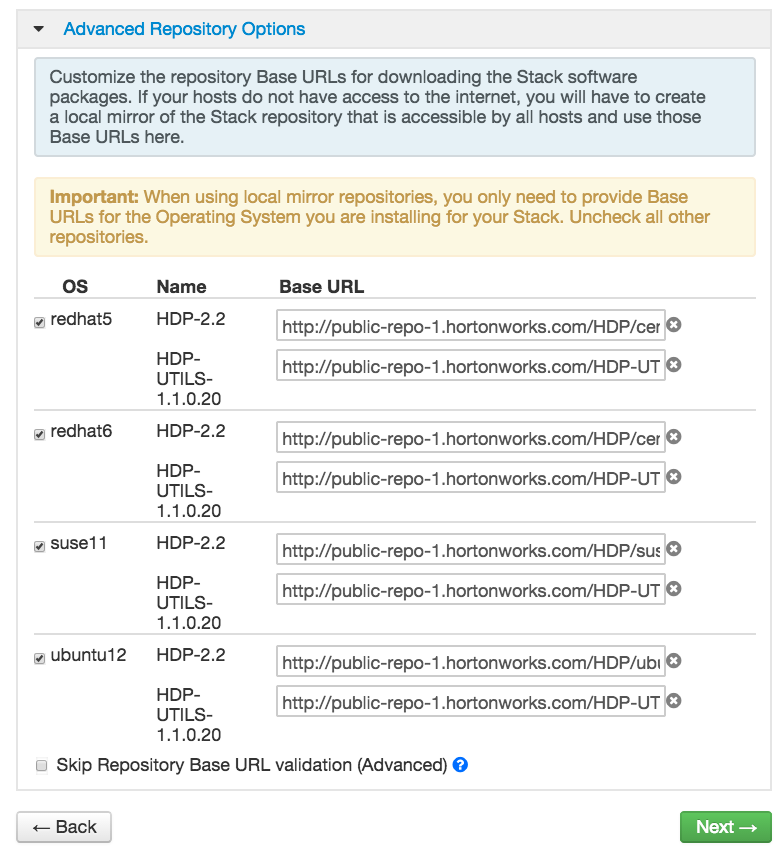

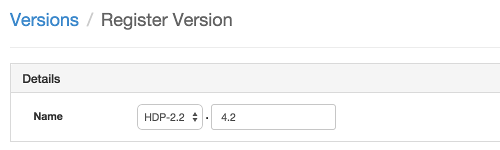

Register a Version

Ambari can manage multiple versions of Stack software.

To register a new version:

- On the Versions tab, click Manage Versions.

- Proceed to register a new version by clicking + Register Version.

- Enter a two-digit version number. For example, enter 4.2 (which makes the version HDP-2.2.4.2).

- Select one or more OS families and enter the respective Base URLs.

- Click Save.

- You can click “Install On...” or you can browse back to the Admin > Stack and Versions > Versions tab. You will see the version currently running and the version you just registered. Proceed to Install the Version.
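The relationship between the two-digit number you enter and the full version name can be shown with a short helper. This is only an illustration of the naming pattern in the example above; the function name is hypothetical.

```python
def full_stack_version(stack: str, stack_version: str, suffix: str) -> str:
    """Combine a Stack, its version, and the two-digit number entered at registration."""
    parts = suffix.split(".")
    if len(parts) != 2 or not all(part.isdigit() for part in parts):
        raise ValueError("Expected a two-digit version number such as 4.2")
    return "{}-{}.{}".format(stack, stack_version, suffix)


print(full_stack_version("HDP", "2.2", "4.2"))  # prints HDP-2.2.4.2
```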

Install the Version

To install a version in the cluster:

- On the Versions tab, click Install Packages.

- Click OK to confirm.

- The Install version operation will start and the new version will be installed on all hosts.

- You can browse to Hosts and to each Host > Versions tab to see that the new version is installed. Proceed to Perform Upgrade.

Perform Upgrade

Once your target version has been registered into Ambari, installed on all hosts in the cluster and you meet the Prerequisites you are ready to perform an upgrade.

The perform upgrade process switches over the services in the cluster to a new version

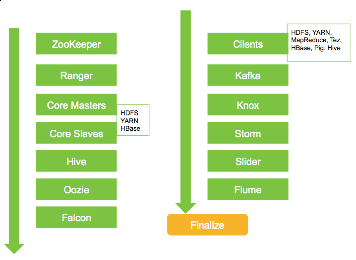

in a rolling fashion. The process follows the flow below. Starting with ZooKeeper

and the Core Master components, ending with a Finalize step. To ensure the process

runs smoothly, this process includes some manual prompts for you to perform cluster

verification and testing along the way. You will be prompted when your input is required.

Upgrade Prerequisites

To perform an automated cluster upgrade from Ambari, your cluster must meet the following prerequisites:

| Item | Requirement | Description |
|---|---|---|
| Cluster | Stack Version | Must be running HDP 2.2 Stack. This capability is not available for HDP 2.0 or 2.1 Stacks. |
| Version | New Version | All hosts must have the new version installed. |
| HDFS | NameNode HA | NameNode HA must be enabled and working properly. For more information, see Configuring NameNode High Availability in the Ambari User’s Guide. |
| HDFS | Decommission | No components should be in decommissioning or decommissioned state. |
| YARN | YARN WPR | Work Preserving Restart must be configured. |
| Hosts | Heartbeats | All Ambari Agents must be heartbeating to Ambari Server. Any hosts that are not heartbeating must be in Maintenance Mode. |
| Hosts | Maintenance Mode | Any hosts in Maintenance Mode must not be hosting any Service master components. |
| Services | Services Started | All Services must be started. |
| Services | Maintenance Mode | No Services can be in Maintenance Mode. |
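The prerequisites above read naturally as a checklist. The sketch below encodes them as checks over a plain dictionary that describes the cluster. The dictionary layout, key names, and state strings are assumptions for illustration; Ambari performs its own checks and does not use this structure.

```python
def check_upgrade_prerequisites(cluster: dict) -> list:
    """Return a list of unmet prerequisites; an empty list means the cluster is ready."""
    problems = []

    # Cluster: must be running the HDP 2.2 Stack.
    if cluster["stack_version"].split(".")[:2] != ["2", "2"]:
        problems.append("Cluster must be running the HDP 2.2 Stack")
    # HDFS and YARN requirements.
    if not cluster["namenode_ha_enabled"]:
        problems.append("NameNode HA must be enabled")
    if not cluster["yarn_work_preserving_restart"]:
        problems.append("YARN Work Preserving Restart must be configured")

    for host in cluster["hosts"]:
        name = host["name"]
        state = host["decommission_state"]
        # Version: every host needs the new version installed.
        if not host["new_version_installed"]:
            problems.append(name + ": new version is not installed")
        # HDFS: nothing may be decommissioning or decommissioned.
        if state in ("DECOMMISSIONING", "DECOMMISSIONED"):
            problems.append(name + ": component is " + state.lower())
        # Hosts: a host that is not heartbeating must be in Maintenance Mode.
        if not host["heartbeating"] and not host["maintenance_mode"]:
            problems.append(name + ": not heartbeating and not in Maintenance Mode")
        # Hosts: a host in Maintenance Mode must not run master components.
        if host["maintenance_mode"] and host["master_components"]:
            problems.append(name + ": in Maintenance Mode but hosts master components")

    for service in cluster["services"]:
        if service["state"] != "STARTED":
            problems.append(service["name"] + ": service is not started")
        if service["maintenance_mode"]:
            problems.append(service["name"] + ": service is in Maintenance Mode")
    return problems


# Hypothetical cluster description with two unmet prerequisites.
cluster = {
    "stack_version": "2.2.0.0",
    "namenode_ha_enabled": True,
    "yarn_work_preserving_restart": False,
    "hosts": [
        {"name": "host1.example.com", "new_version_installed": True,
         "decommission_state": "INSERVICE", "heartbeating": True,
         "maintenance_mode": False, "master_components": ["NAMENODE"]},
        {"name": "host2.example.com", "new_version_installed": True,
         "decommission_state": "INSERVICE", "heartbeating": False,
         "maintenance_mode": False, "master_components": []},
    ],
    "services": [{"name": "HDFS", "state": "STARTED", "maintenance_mode": False}],
}

for problem in check_upgrade_prerequisites(cluster):
    print(problem)
```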

To perform an upgrade to a new version:

- On the Versions tab, click Perform Upgrade on the new version.

- Follow the steps in the wizard.

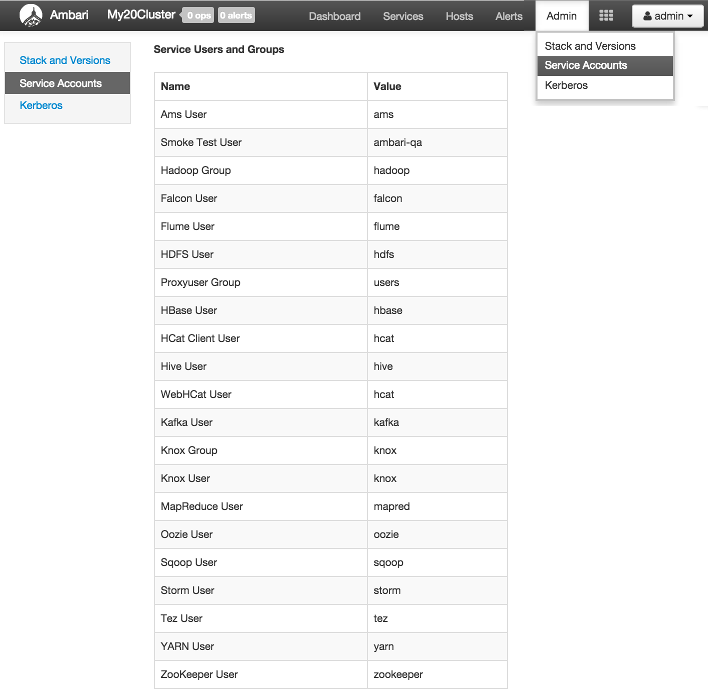

Service Accounts

To view the list of users and groups used by the cluster services, choose Admin > Service Accounts.

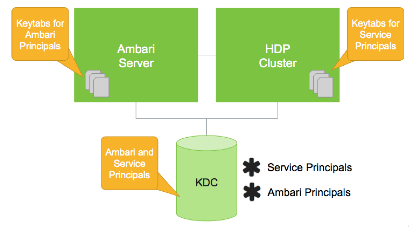

Kerberos

If Kerberos has not been enabled in your cluster, click the Enable Kerberos button to launch the Kerberos wizard. For more information on configuring Kerberos in your cluster, see the Ambari Security Guide. Once Kerberos is enabled, you can regenerate keytabs or disable Kerberos, as described in the sections that follow.

How To Regenerate Keytabs

- Browse to Admin > Kerberos.

- Click the Regenerate Kerberos button.

- Confirm your selection to proceed.

- Optionally, you can regenerate keytabs for only those hosts that are missing keytabs. For example, hosts that were not online/available from Ambari when enabling Kerberos.

- Once you confirm, Ambari will connect to the KDC and regenerate the keytabs for the Service and Ambari principals in the cluster.

- Once complete, you must restart all services for the new keytabs to be used.
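Keytab regeneration can likely be triggered through the REST API as well. The sketch below only describes the request and does not send it. It assumes that the cluster resource accepts a PUT with a `regenerate_keytabs` query parameter set to `all` or `missing`, a body that names the `KERBEROS` security type, and an `X-Requested-By` header; the helper name and server address are hypothetical. Confirm these details against the API reference for your Ambari version.

```python
from urllib.parse import quote


def regenerate_keytabs_request(base_url: str, cluster: str, missing_only: bool = False):
    """Describe the PUT request that asks Ambari to regenerate keytabs."""
    # Assumption: "missing" limits the operation to hosts without keytabs.
    scope = "missing" if missing_only else "all"
    url = "{}/api/v1/clusters/{}?regenerate_keytabs={}".format(
        base_url.rstrip("/"), quote(cluster, safe=""), scope
    )
    headers = {"X-Requested-By": "ambari"}
    body = {"Clusters": {"security_type": "KERBEROS"}}
    return url, headers, body


# Hypothetical server address and cluster name.
url, headers, body = regenerate_keytabs_request(
    "http://ambari.example.com:8080", "MyCluster", missing_only=True
)
print("PUT", url)
print(headers)
print(body)
```

As with the steps above, services still need to be restarted afterward for the new keytabs to take effect.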

How To Disable Kerberos

- Browse to Admin > Kerberos.

- Click the Disable Kerberos button.

- Confirm your selection to proceed. Cluster services will be stopped and the Ambari Kerberos security settings will be reset.

- To re-enable Kerberos, click Enable Kerberos and follow the wizard steps. For more information on configuring Kerberos in your cluster, see the Ambari Security Guide.

Monitoring and Alerts

Ambari monitors cluster health and can alert you in the case of certain situations to help you identify and troubleshoot problems. You manage how alerts are organized, under which conditions notifications are sent, and by which method. This section provides information on:

Managing Alerts