Use the following instructions to deploy HDP on a single-node Windows Server machine:

Install the necessary prerequisites using one of the following options:

Option I - Use CLI: Download all prerequisites to a single directory and use the command-line interface (CLI) to install them.

Option II - Install manually: Download each prerequisite and follow the step-by-step, GUI-driven manual instructions provided after download.

Prepare the single node machine.

Collect Information.

Get the hostname of the server where you plan to install HDP. Open the command shell on that cluster host and execute the following command:

> hostname
WIN-RT345SERVER

Use the output of this command to identify the cluster machine.
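The same information can be read programmatically, which helps when the host name has to be pasted into several setup fields. The following is a minimal sketch in Python (an assumption; the guide itself only uses the `hostname` command). `socket.gethostname()` should normally match the output of `hostname`, and `socket.getfqdn()` returns whatever fully qualified name the local resolver reports, which may simply be the short name on a machine that is not joined to a domain.

```python
import socket

# Short host name; normally the same value the `hostname` command prints.
short_name = socket.gethostname()

# Fully qualified name as reported by the local resolver.
# On a standalone machine this may be identical to the short name.
fqdn = socket.getfqdn()

print(f"hostname: {short_name}")
print(f"FQDN:     {fqdn}")
```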

Configure firewall.

HDP uses multiple ports for communication with clients and between service components.

If your corporate policies require maintaining a per-server firewall, you must enable the ports listed here. Use the following command to open these ports:

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=$PORT_NUMBER

For example, the following command will open up port 80 in the active Windows Firewall:

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=80

For example, the following command will open all ports from 49152 to 65535 in the active Windows Firewall:

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=49152-65535
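Because the same rule is repeated for every port or port range, the command line can be generated rather than typed by hand. The sketch below, written in Python as an illustration, only builds the `netsh` argument list from the syntax shown above and does not execute anything. The function name `firewall_rule_command` is hypothetical and not part of HDP or Windows.

```python
def firewall_rule_command(ports, name="AllowRPCCommunication"):
    """Build the netsh arguments that allow inbound TCP traffic on `ports`.

    `ports` is either a single port number (80) or an inclusive
    (low, high) tuple such as (49152, 65535).
    """
    if isinstance(ports, tuple):
        low, high = ports
        if not 1 <= low <= high <= 65535:
            raise ValueError(f"invalid port range: {ports}")
        local_port = f"{low}-{high}"
    else:
        if not 1 <= ports <= 65535:
            raise ValueError(f"invalid port: {ports}")
        local_port = str(ports)

    return [
        "netsh", "advfirewall", "firewall", "add", "rule",
        f"name={name}", "dir=in", "action=allow",
        "protocol=TCP", f"localport={local_port}",
    ]


# Print the two example commands from the text.
print(" ".join(firewall_rule_command(80)))
print(" ".join(firewall_rule_command((49152, 65535))))
```

To apply a rule, the returned list could be passed to `subprocess.run` from a command prompt opened with Administrator privileges.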

If your network security policies allow you to open all ports, use the following instructions to disable Windows Firewall: http://technet.microsoft.com/en-us/library/cc766337(v=ws.10).aspx

Install and start HDP.

Download the HDP for Windows MSI file from: http://public-repo-1.hortonworks.com/HDP-Win/2.0/GA/hdp-2.0.6-GA.zip.

Open a command prompt with Administrator privileges and execute the MSI installer command. If you are installing on Windows Server 2012, use this method to open the installer:

msiexec /lv c:\hdplog.txt /i "<PATH_to_MSI_file>"

The following example illustrates the command to launch the installer:

msiexec /lv c:\hdplog.txt /i "C:\Users\Administrator\Downloads\hdp-2.0.6.0.winpkg.msi"As shown in the example above, the

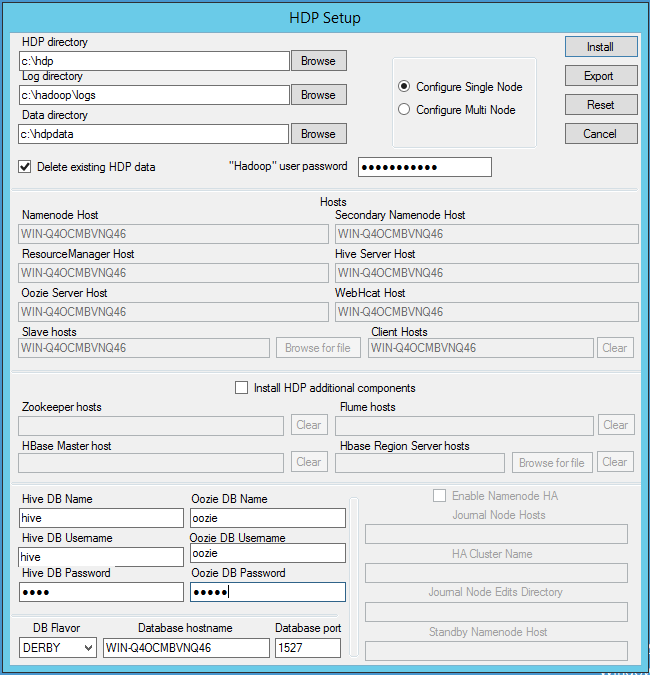

PATH_to_MSI_fileparameter should be modified to match the location of the downloaded MSI file.The HDP Setup window appears pre-populated with the host name of the server, as well as default installation parameters.
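Returning to the installer command: the only part that varies is the path to the MSI file, so the command can be assembled from that path. The following Python sketch is illustrative only. The helper name `msiexec_command` is hypothetical, and the `.msi` extension check is an added safeguard rather than something the installer documentation requires (the download link above points to a `.zip` archive, so the MSI presumably has to be extracted first).

```python
from pathlib import PureWindowsPath


def msiexec_command(msi_path, log_path=r"c:\hdplog.txt"):
    """Return the msiexec command line for the given MSI file.

    /lv writes a verbose log to `log_path`; /i installs the package.
    """
    msi = PureWindowsPath(msi_path)
    if msi.suffix.lower() != ".msi":
        raise ValueError(f"expected a .msi file, got: {msi.name}")
    # Quote the MSI path so that spaces in directory names are preserved.
    return f'msiexec /lv {log_path} /i "{msi}"'


print(msiexec_command(r"C:\Users\Administrator\Downloads\hdp-2.0.6.0.winpkg.msi"))
```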

You must specify the following parameters:

Hadoop User Password -- Type in a password for the Hadoop super user (the administrative user). This password enables you to log in as the administrative user and perform administrative actions. You must enter an acceptable password to install successfully. Password requirements are controlled by Windows, and typically require that the password include a combination of uppercase and lowercase letters, digits, and special characters.

Hive and Oozie DB Names, Usernames, and Passwords -- Set the DB (database) name, user name, and password for the Hive and Oozie metastores. You can use the boxes at the lower left of the HDP Setup window ("Hive DB Name", "Hive DB Username", etc.) to specify these parameters.

DB Flavor -- Select DERBY to use an embedded database for the single-node HDP installation.
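Since an unacceptable password causes the installation to fail, it can be worth checking a candidate password before entering it. The sketch below, in Python, reports which of the four character classes mentioned above are absent. It is only an approximation: the real rules come from the Windows password policy on the server, which may be stricter or looser, and the function names are hypothetical.

```python
import string


def password_character_classes(password):
    """Report which character classes appear in `password`."""
    return {
        "uppercase": any(c.isupper() for c in password),
        "lowercase": any(c.islower() for c in password),
        "digit": any(c.isdigit() for c in password),
        "special": any(c in string.punctuation for c in password),
    }


def missing_classes(password):
    """Return the names of the character classes the password lacks."""
    classes = password_character_classes(password)
    return [name for name, present in classes.items() if not present]


# An empty list means all four classes are present.
print(missing_classes("Hadoop#2014"))
print(missing_classes("hadoop"))
```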

You can optionally configure the following parameters:

HDP Directory -- The directory in which HDP will be installed. The default installation directory is c:\hdp.

Log Directory -- The directory for the HDP service logs. The default location is c:\hadoop\logs.

Data Directory -- The directory for user data for each HDP service. The default location is c:\hdpdata.

Delete Existing HDP Data -- Selecting this check box removes any existing data from prior HDP installs. This will ensure that HDFS will automatically start with a formatted file system. For a single node installation, it is recommended that you select this option to start with a freshly formatted HDFS.

Install HDP Additional Components -- Select this check box to install Zookeeper, Flume, and HBase as HDP services deployed to the single node server.

When you have finished setting the installation parameters, click Install to install HDP.

The HDP Setup window closes, and a progress indicator is displayed while the installer is running. The installation may take several minutes, and the time-remaining estimate may not be accurate.

A confirmation message displays when the installation is complete.

Note: If you did not select the "Delete existing HDP data" check box, the HDFS file system must be formatted. To format the HDFS file system, open the Hadoop Command Line shortcut on the Windows desktop, then run the following command:

bin\hadoop namenode -format

Start all HDP services on the single machine.

In a command prompt, navigate to the HDP install directory. This is the "HDP directory" setting you specified in the HDP Setup window.

Run the following command from the HDP install directory:

start_local_hdp_services

Validate the install by running the full suite of smoke tests.

Run-SmokeTests
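The two post-install steps above can also be scripted so that the smoke tests run only if the services start cleanly. The following Python sketch is one possible way to do that, not a documented HDP tool. It assumes that both commands are run from the HDP install directory (the guide states this only for `start_local_hdp_services`) and that the default directory `c:\hdp` was kept. With `dry_run=True`, the default, nothing is executed.

```python
import subprocess

# Commands from the steps above, in the order they must run.
HDP_STEPS = ["start_local_hdp_services", "Run-SmokeTests"]


def run_hdp_steps(hdp_dir=r"c:\hdp", dry_run=True):
    """Run each step from `hdp_dir`, stopping at the first failure.

    Returns the list of steps that were processed. With dry_run=True
    the steps are only listed, which is safe on any machine.
    """
    processed = []
    for step in HDP_STEPS:
        if not dry_run:
            # shell=True lets cmd.exe resolve the script name;
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(step, cwd=hdp_dir, shell=True, check=True)
        processed.append(step)
    return processed


print(run_hdp_steps())
```

On the HDP server itself, calling `run_hdp_steps(dry_run=False)` from an Administrator command prompt would execute both steps in sequence.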

Note: You can use the Export button on the HDP Setup window to export the configuration information for use in a CLI/script-driven deployment. Clicking Export stops the installation and creates a clusterproperties.txt file that contains the configuration information specified in the fields on the HDP Setup window.

The following table provides descriptions and example values for all of the parameters available in the HDP Setup window, and also indicates whether or not each value is required.

Configuration Values for HDP Setup

| Configuration Field | Description | Example value | Mandatory/Optional/Conditional |
| --- | --- | --- | --- |
| Log directory | HDP's operational logs will be written to this directory on each cluster host. Ensure that you have sufficient disk space for storing these log files. | d:\hadoop\logs | Mandatory |
| Data directory | HDP data will be stored in this directory on each cluster node. You can add multiple comma-separated data locations for multiple data directories. | d:\hdp\data | Mandatory |
| NameNode Host | The FQDN for the cluster node that will run the NameNode master service. | NAMENODE_MASTER.acme.com | Mandatory |
| ResourceManager Host | The FQDN for the cluster node that will run the ResourceManager master service. | RESOURCE_MANAGER.acme.com | Mandatory |
| Hive Server Host | The FQDN for the cluster node that will run the Hive Server master service. | HIVE_SERVER_MASTER.acme.com | Mandatory |
| Oozie Server Host | The FQDN for the cluster node that will run the Oozie Server master service. | OOZIE_SERVER_MASTER.acme.com | Mandatory |
| WebHcat Host | The FQDN for the cluster node that will run the WebHCat master service. | WEBHCAT_MASTER.acme.com | Mandatory |
| Flume Hosts | A comma-separated list of FQDNs for the cluster nodes that will run the Flume service. | FLUME_SERVICE1.acme.com, FLUME_SERVICE2.acme.com, FLUME_SERVICE3.acme.com | Mandatory |
| HBase Master Host | The FQDN for the cluster node that will run the HBase master. | HBASE_MASTER.acme.com | Mandatory |
| HBase Region Server hosts | A comma-separated list of FQDNs for the cluster nodes that will run the HBase Region Server services. | slave1.acme.com, slave2.acme.com, slave3.acme.com | Mandatory |
| Slave hosts | A comma-separated list of FQDNs for the cluster nodes that will run the HDFS DataNode and YARN NodeManager services. | slave1.acme.com, slave2.acme.com, slave3.acme.com | Mandatory |
| Zookeeper Hosts | A comma-separated list of FQDNs for the cluster nodes that will run the Zookeeper service. | ZOOKEEPER_HOST.acme.com | Mandatory |
| DB Flavor | Database type for Hive and Oozie metastores (allowed databases are SQL Server and Derby). To use the default embedded Derby instance, set the value of this property to derby. To use an existing SQL Server instance as the metastore DB, set the value to mssql. | mssql or derby | Mandatory |
| Database hostname | FQDN for the node where the metastore database service is installed. If you are using SQL Server, set the value to your SQL Server hostname. If you are using Derby for the Hive metastore, set the value to HIVE_SERVER_HOST. | sqlserver1.acme.com | Mandatory |
| Database port | This is an optional property required only if you are using SQL Server for Hive and Oozie metastores. The database port is set to 1433 by default. | 1433 | |
| Hive DB Name | Database for Hive metastore. If you are using SQL Server, ensure that you create the database on the SQL Server instance. | hivedb | Mandatory |
| Hive DB Username | User account credentials for the Hive metastore database instance. Ensure that this user account has appropriate permissions. | hive_user | Mandatory |
| Hive DB Password | Password for Hive database. | hive_password | Mandatory |
| Oozie DB Name | Database for the Oozie metastore. If you are using SQL Server, ensure that you create the database on the SQL Server instance. | ooziedb | Mandatory |
| Oozie DB Username | User account credentials for the Oozie metastore database instance. Ensure that this user account has appropriate permissions. | oozie_user | Mandatory |
| Oozie DB Password | Password for Oozie database. | oozie_password | Mandatory |
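Before clicking Install, it can help to confirm that every field the table marks as Mandatory has a value. The following Python sketch shows one way to express that check. The field names are copied from the table, but the dictionary layout and the function name `check_setup_values` are assumptions made for illustration; they do not reflect the format of the exported clusterproperties.txt file.

```python
# Fields the table above marks as Mandatory.
MANDATORY_FIELDS = (
    "Log directory", "Data directory", "NameNode Host",
    "ResourceManager Host", "Hive Server Host", "Oozie Server Host",
    "WebHcat Host", "Flume Hosts", "HBase Master Host",
    "HBase Region Server hosts", "Slave hosts", "Zookeeper Hosts",
    "DB Flavor", "Database hostname",
    "Hive DB Name", "Hive DB Username", "Hive DB Password",
    "Oozie DB Name", "Oozie DB Username", "Oozie DB Password",
)

# Database types the table lists as allowed.
ALLOWED_DB_FLAVORS = {"mssql", "derby"}


def check_setup_values(values):
    """Return a list of problems found in a {field name: value} mapping."""
    problems = [
        f"missing: {name}"
        for name in MANDATORY_FIELDS
        if not values.get(name, "").strip()
    ]
    flavor = values.get("DB Flavor", "").strip().lower()
    if flavor and flavor not in ALLOWED_DB_FLAVORS:
        problems.append(f"unsupported DB Flavor: {flavor}")
    return problems


# Placeholder values for illustration only.
example = {name: "example" for name in MANDATORY_FIELDS}
example["DB Flavor"] = "derby"
print(check_setup_values(example))
```

An empty list indicates that no mandatory field is blank and that the DB Flavor is one of the two allowed values.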