Create controller services for your data flow

Learn how to create and configure controller services for the Kafka ingest data flow.

Controller services provide shared services that the processors in your data flow can use. Add the following controller services:

- HortonworksSchemaRegistry Controller Service

- CSVReader Controller Service

- AvroRecordSetWriter Controller Service

See below for property details.

HortonworksSchemaRegistry Controller Service

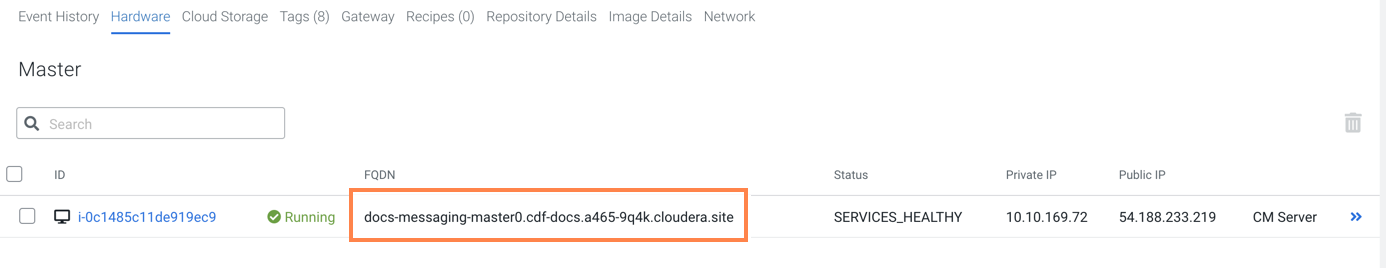

In this data flow, the data source is Kafka. To set the Schema Registry URL property of the HortonworksSchemaRegistry Controller Service, provide the URL of the schema registry that you want to connect to. You can find the master hostname on the Hardware tab of the Streams Messaging cluster overview page.

| Property | Description | Example value for ingest data flow |
|---|---|---|
| Schema Registry URL | Provide the URL of the schema registry that this Controller Service should connect to, including version. In the format: | |
| SSL Context Service | Specify the SSL Context Service to use for communicating with Schema Registry. Use the pre-configured SSLContextProvider. | Default NiFi SSL Context Service |
| Kerberos Principal | Specify the user name that should be used for authenticating with Kerberos. Use your CDP workload username to set this Authentication property. | srv_nifi-kafka-ingest |
| Kerberos Password | Provide the password that should be used for authenticating with Kerberos. Use your CDP workload password to set this Authentication property. | password |

CSVReader Controller Service

| Property | Description | Example value for ingest data flow |
|---|---|---|
| Schema Access Strategy | Specify how to obtain the schema to be used for interpreting the data. | Use String Fields From Header |
| Treat First Line as Header | Specify whether or not the first line of CSV should be considered a Header or a record. | true |
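To make the effect of these two settings concrete, the following minimal Python sketch mimics the behavior outside NiFi: field names come from the first line, and every value is kept as a string. It uses only the standard `csv` module and is not NiFi's implementation. The sample data and column names are hypothetical.

```python
import csv
import io

# Hypothetical sample data; the column names are illustrative only.
SAMPLE = "id,name,balance\n1,Alice,10.50\n2,Bob,7\n"


def read_with_header_schema(text):
    """Take field names from the first line and keep every value as a string."""
    reader = csv.DictReader(io.StringIO(text))
    # The header line supplies the field names (Treat First Line as Header = true).
    field_names = list(reader.fieldnames or [])
    # No type inference is applied, so "10.50" stays a string
    # (comparable to Use String Fields From Header).
    records = [dict(row) for row in reader]
    return field_names, records


field_names, records = read_with_header_schema(SAMPLE)
print(field_names)
print(records[0])
```

Because every field is read as a string, any numeric typing has to come from the schema that the record writer applies later in the flow.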

AvroRecordSetWriter Controller Service

| Property | Description | Example value for ingest data flow |
|---|---|---|
| Schema Write Strategy | Specify how the schema for a Record should be added to the data. | HWX Content-Encoded Schema Reference |
| Schema Access Strategy | Specify how to obtain the schema to be used for interpreting the data. | Use 'Schema Name' Property |
| Schema Registry | Specify the Controller Service to use for the Schema Registry. | CDPSchemaRegistry |
| Schema Name | Specify the name of the schema to look up in the Schema Registry property. See the Appendix for an example schema. | customer |
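The following Python sketch illustrates how these settings fit together: the schema is looked up by name, and a compact reference to it is prepended to the content instead of the full schema. It assumes the layout described in the NiFi documentation for HWX Content-Encoded Schema Reference: one byte for the protocol version, eight bytes for the schema identifier, and four bytes for the schema version. The in-memory registry, the identifier and version numbers, the protocol version value, and the big-endian byte order are assumptions made for illustration, not values taken from this data flow.

```python
import struct

# Hypothetical in-memory stand-in for the Schema Registry:
# schema name -> (schema identifier, schema version). The numbers are made up.
REGISTRY = {"customer": (42, 1)}

PROTOCOL_VERSION = 1  # assumed value of the protocol version byte
HEADER_FORMAT = ">Bqi"  # 1-byte version, 8-byte id, 4-byte version (big-endian assumed)
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)


def encode_schema_reference(schema_name):
    """Look up the schema by name and build the reference header."""
    schema_id, schema_version = REGISTRY[schema_name]
    return struct.pack(HEADER_FORMAT, PROTOCOL_VERSION, schema_id, schema_version)


def decode_schema_reference(content):
    """Read the reference header back from the start of the content."""
    return struct.unpack(HEADER_FORMAT, content[:HEADER_SIZE])


header = encode_schema_reference("customer")
print(HEADER_SIZE)
print(decode_schema_reference(header))
```

A consumer that reads this data needs access to the same Schema Registry, because the content carries only the reference and not the schema itself.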