Build the data flow

From the Apache NiFi canvas, set up the elements of your data flow. This involves opening NiFi in CDP Public Cloud, adding processors to your NiFi canvas, and connecting the processors.

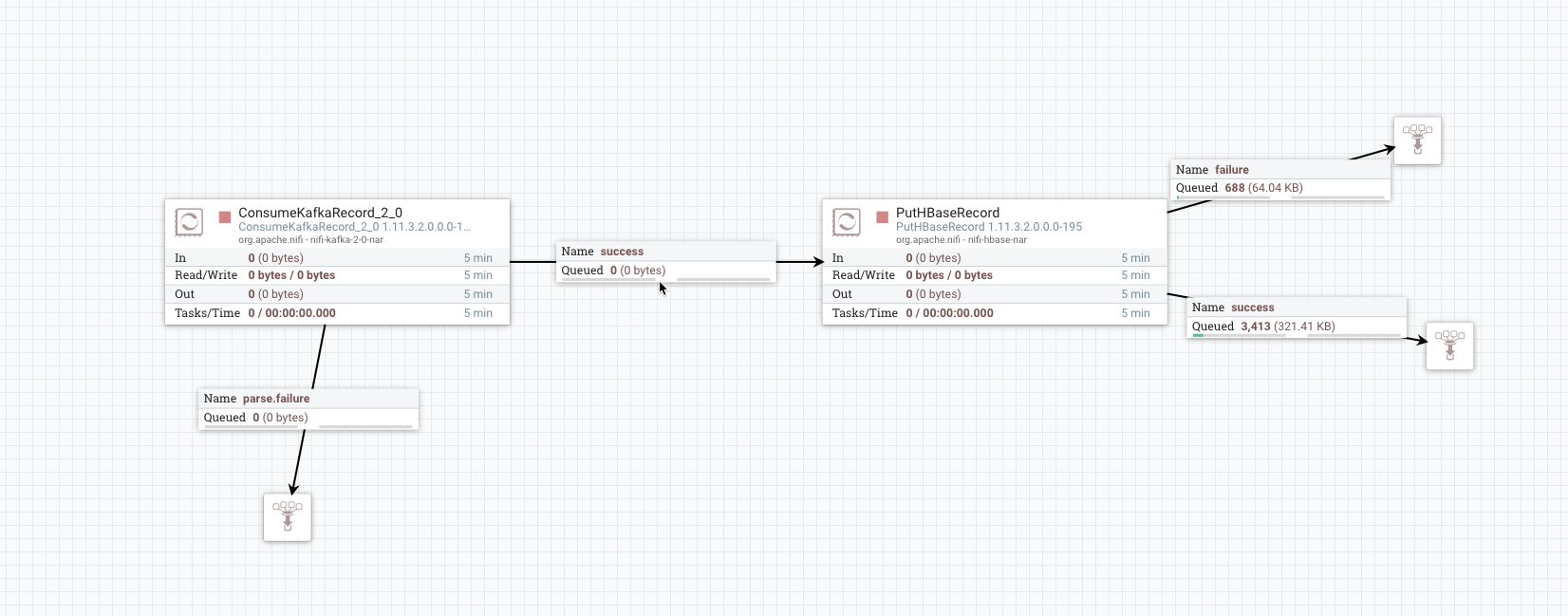

You should use the PutHBaseRecord processor to build your HBase ingest data flows.

-

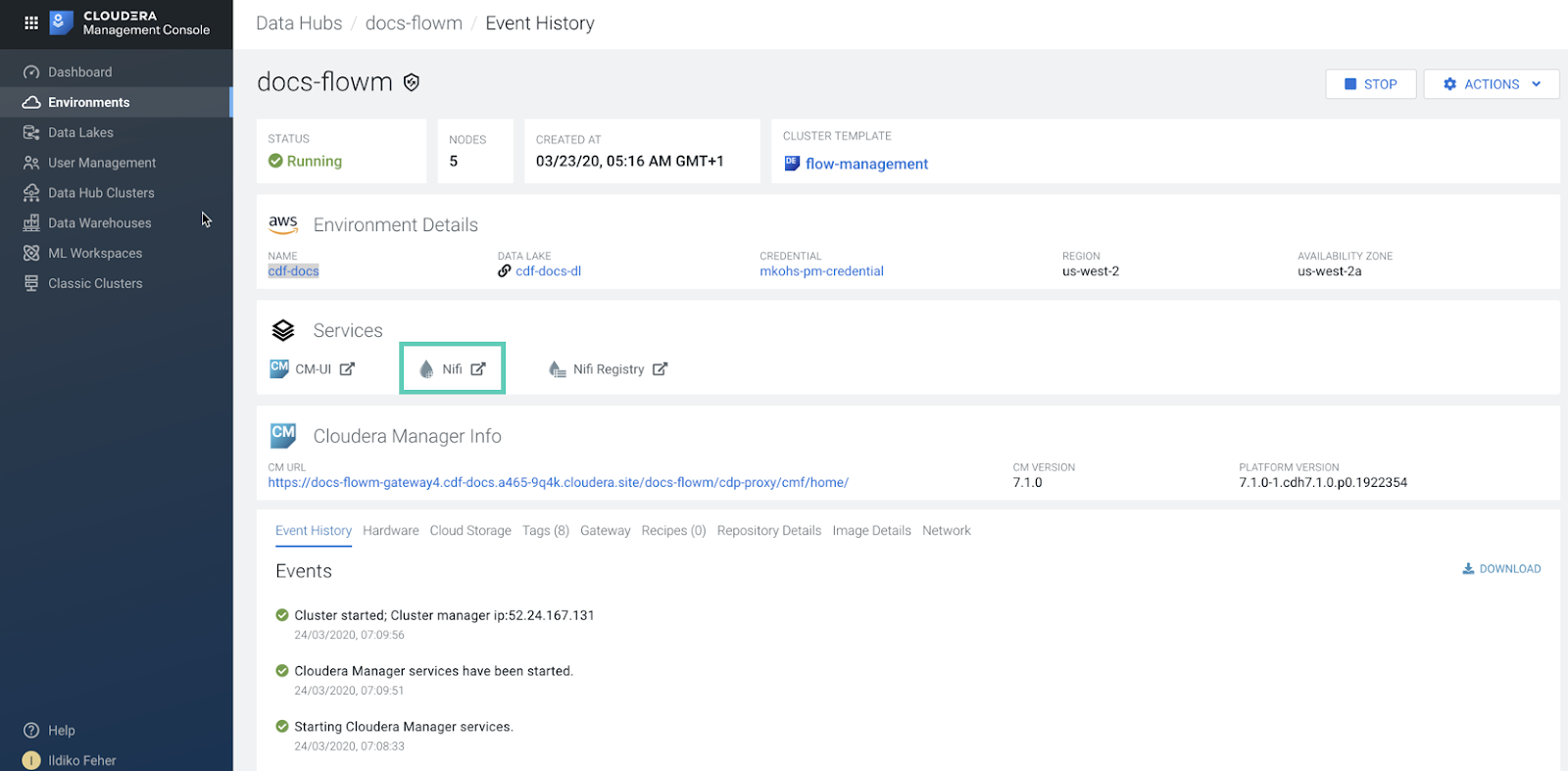

Open NiFi in CDP Public Cloud.

- Add the NiFi processors to your canvas.

  - Select the Processor icon from the Cloudera Flow Management actions pane, and drag it onto the canvas.

  - Use the Add Processor filter box to search for the processor you want to add, and then click Add.

  - Repeat for each processor you want to use in your data flow.

- Connect the two processors to create a flow.

Your data flow may look similar to the following:

Next, create the controller services for your data flow. You will need these services later when you configure your data flow target processor.
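The canvas steps above (add processors, then connect them) can also be described as request bodies for the Apache NiFi REST API. The following is a minimal sketch, not a Cloudera-documented procedure. It only builds the JSON payloads and sends nothing. The endpoint paths in the docstrings come from the Apache NiFi REST API. The process group ID, processor IDs, canvas positions, and the `success` relationship are hypothetical placeholders, and the base URL and authentication for a CDP Public Cloud cluster are not covered.

```python
import json

# Fully qualified class name of PutHBaseRecord in the Apache NiFi HBase bundle.
PUT_HBASE_RECORD = "org.apache.nifi.hbase.PutHBaseRecord"


def processor_payload(processor_type, name, x=0.0, y=0.0):
    """Body for POST /nifi-api/process-groups/{group_id}/processors."""
    return {
        "revision": {"version": 0},  # a component that does not exist yet uses version 0
        "component": {
            "type": processor_type,
            "name": name,
            "position": {"x": x, "y": y},  # where the processor lands on the canvas
        },
    }


def connection_payload(group_id, source_id, destination_id, relationships):
    """Body for POST /nifi-api/process-groups/{group_id}/connections."""
    return {
        "revision": {"version": 0},
        "component": {
            "source": {"id": source_id, "groupId": group_id, "type": "PROCESSOR"},
            "destination": {"id": destination_id, "groupId": group_id, "type": "PROCESSOR"},
            # Relationships of the source processor routed through this connection.
            "selectedRelationships": list(relationships),
        },
    }


if __name__ == "__main__":
    # All IDs below are hypothetical; real IDs come from the NiFi responses.
    target = processor_payload(PUT_HBASE_RECORD, "PutHBaseRecord", x=400.0, y=200.0)
    link = connection_payload(
        group_id="example-group-id",
        source_id="example-source-id",
        destination_id="example-target-id",
        relationships=["success"],
    )
    print(json.dumps(target, indent=2))
    print(json.dumps(link, indent=2))
```

Keeping the payload builders separate from any HTTP client makes them easy to inspect before anything is sent to a cluster.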