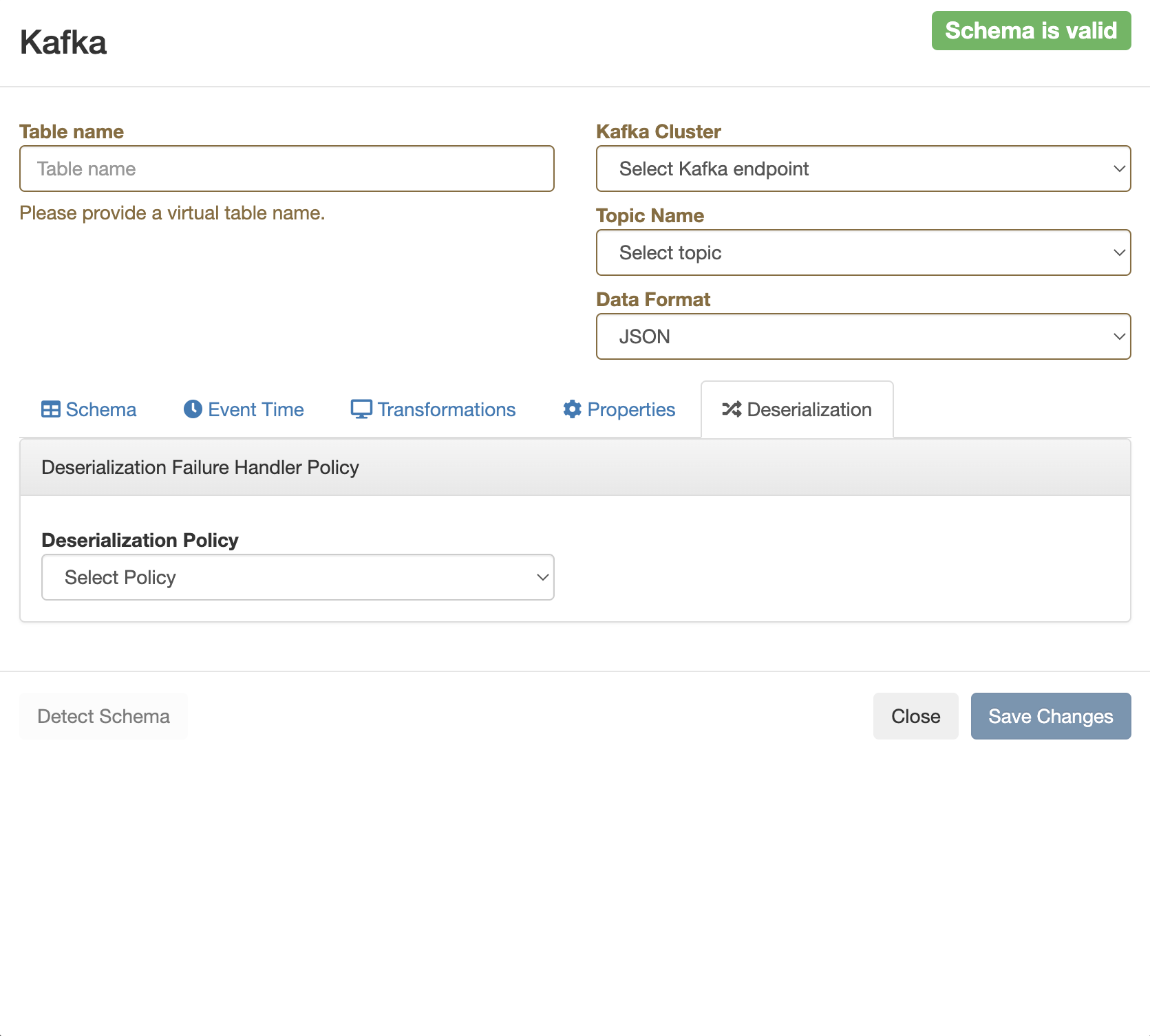

Deserialization tab

When creating a Kafka table, you can configure how to handle errors due to schema mismatch using DDL or the Kafka wizard.

For every supported type of Kafka connector (local-kafka, kafka, or upsert), you can configure how a message that fails to deserialize is handled, because such a failure can result in a job submission error. You can choose from the following configurations:

- Fail

  - In this case, an exception is thrown and the job submission fails.

- Ignore

  - In this case, the error message is ignored without any log, and the job submission is successful.

- Ignore and Log

  - In this case, the error message is ignored and logged, and the job submission is successful.

- Save to DLQ

  - In this case, the error message is ignored, but you can store it in a dead-letter queue (DLQ) Kafka topic.

Using the Kafka wizard

- Navigate to the Streaming SQL Console.

- Navigate to , and select the environment where you have created your cluster.

- Select the Streaming Analytics cluster from the list of Data Hub clusters.

- Select SQL Stream Builder from the list of services.

- Click the Compose tab.

- Click the Tables tab.

- Select .

The Add Kafka table window appears.

- Select the Deserialization tab.

- Choose from the following policy options under Deserialization Policy:

- Fail

- Ignore

- Ignore and Log

- Save to DLQ

If you choose the Save to DLQ option, you need to create a dedicated Kafka topic where you store the error message. After selecting this option, you need to further select the created DLQ topic.

- Click Save Changes.

Using DDL

- Navigate to the Streaming SQL Console.

- Navigate to , and select the environment where you have created your cluster.

- Select the Streaming Analytics cluster from the list of Data Hub clusters.

- Select SQL Stream Builder from the list of services.

- Click the Compose tab.

- Choose one of the Kafka template types from Templates.

- Select any type of data format.

The predefined CREATE TABLE statement is imported to the SQL Window.

- Fill out the Kafka template based on your requirements.

- Search for the deserialization.failure.policy property.

- Provide the value for the error handling from the following options:

- 'error'

- 'ignore'

- 'ignore_and_log'

- 'dlq'

If you choose the dlq option, you need to create a dedicated Kafka topic where you store the error message. After selecting this option, you need to further provide the name of the created DLQ topic.

- Click Execute.
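As a rough illustration of the DDL steps above, a filled-out template might look something like the following sketch. The table name, columns, topic, and broker address are hypothetical. The connector value and the other WITH options are assumptions based on the standard Flink Kafka connector, so keep whatever values the imported template generated. Only the deserialization.failure.policy property and its four values come from this procedure. The property that names the DLQ topic is deliberately not shown here; use the one provided in the imported template.

```sql
-- Hypothetical table, column, topic, and broker names, for illustration only.
CREATE TABLE orders_source (
  order_id STRING,
  amount   DOUBLE,
  order_ts TIMESTAMP(3)
) WITH (
  -- Assumed connector and connection options; keep the values from your template.
  'connector' = 'kafka',
  'topic' = 'orders',
  'properties.bootstrap.servers' = 'broker-1:9092',
  'format' = 'json',
  'scan.startup.mode' = 'earliest-offset',
  -- Error handling for messages that fail to deserialize.
  -- One of: 'error', 'ignore', 'ignore_and_log', 'dlq'
  'deserialization.failure.policy' = 'ignore_and_log'
);
```

With 'ignore_and_log', a message that does not match the declared schema is skipped and logged rather than failing the job. Switching the value to 'error' corresponds to the Fail option described earlier.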