Configure the processor for your data source

Learn how you can configure the ConsumeKafkaRecord_2_0 data source processor for your S3 ingest data flow. You can set up a data flow to move data to Amazon S3 from many different locations. This example assumes that you are streaming data from Kafka and shows you the configuration for the relevant data source processor.

- Launch the Configure Processor window by right-clicking the ConsumeKafkaRecord_2_0 processor and selecting Configure. This gives you a configuration dialog with the following tabs: Settings, Scheduling, Properties, Comments.

- Configure the processor according to the behavior you expect in your data flow.

- When you have finished configuring the options you need, save the changes by clicking the Apply button.

Make sure that you set all required properties, as you cannot start the processor until all mandatory properties have been configured.

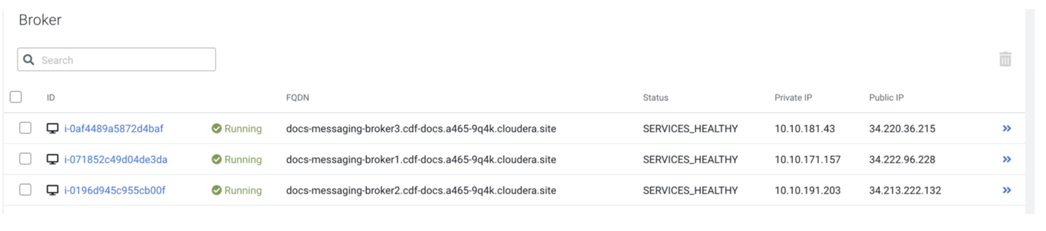

In this example data flow, the data source is Kafka. To create the Kafka broker URLs, take each broker hostname (FQDN) and append port :9093 to the end of it. You can find the hostnames on the Hardware tab of the Streams Messaging cluster overview page.
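As a minimal sketch of how the broker list can be assembled, the following Python snippet joins broker FQDNs into the comma-separated `<host>:<port>` format that the Kafka Brokers property expects. The function name `build_broker_list` is hypothetical and used only for illustration; the hostnames are the example values from this page, so replace them with the ones shown for your own cluster.

```python
def build_broker_list(hostnames, port=9093):
    """Join broker FQDNs into a comma-separated host:port list.

    Hypothetical helper for illustration; port 9093 is the port
    used in this example data flow.
    """
    # Drop surrounding whitespace and skip empty entries.
    cleaned = [h.strip() for h in hostnames if h.strip()]
    if not cleaned:
        raise ValueError("at least one broker hostname is required")
    return ",".join(f"{host}:{port}" for host in cleaned)


# Example hostnames taken from this page; yours will differ.
hostnames = [
    "docs-messaging-broker1.cdf-docs.a465-9q4k.cloudera.site",
    "docs-messaging-broker2.cdf-docs.a465-9q4k.cloudera.site",
    "docs-messaging-broker3.cdf-docs.a465-9q4k.cloudera.site",
]

kafka_brokers = build_broker_list(hostnames)
print(kafka_brokers)
```

You can paste the printed string into the Kafka Brokers property as a single value.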

The following table includes a description and example values for the properties required to configure the example ingest data flow. For a complete list of ConsumeKafkaRecord_2_0 properties, see the Apache NiFi documentation.

Table 1. ConsumeKafkaRecord_2_0 processor properties

| Property | Description | Example value for ingest data flow |
|---|---|---|
| Kafka Brokers | Provide a comma-separated list of known Kafka brokers in the format `<host>:<port>`. | docs-messaging-broker1.cdf-docs.a465-9q4k.cloudera.site:9093,docs-messaging-broker2.cdf-docs.a465-9q4k.cloudera.site:9093,docs-messaging-broker3.cdf-docs.a465-9q4k.cloudera.site:9093 |
| Topic Name(s) | Provide the name of the Kafka topic or topics to pull from. | Customer |
| Record Reader | Specify the Record Reader to use for parsing the incoming Kafka messages. | ReadCustomerAvroRoot |
| Record Writer | Specify the Record Writer to use for serializing the records consumed from Kafka before they are written to outgoing FlowFiles. | CSVRecordSetWriter |
| Security Protocol | Specify the protocol used to communicate with Kafka brokers. | SASL_SSL |
| SASL Mechanism | Specify the SASL mechanism to use for authentication. | PLAIN |
| Username | Use your CDP workload username to set this Authentication property. | srv_nifi-kafka-ingest |
| Password | Use your CDP workload password to set this Authentication property. | password |
| SSL Context Service | Specify the SSL Context Service to use for communicating with Kafka. Use the pre-configured SSLContextProvider. | Default NiFi SSL Context Service |
| Group ID | Provide the consumer group ID to identify consumers that are within the same consumer group. | nifi-s3-ingest |
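
Because the processor cannot start until every mandatory property is set, it can help to check your planned values before entering them in the Properties tab. The sketch below is an illustration only: it stores the example values from Table 1 in a plain Python dictionary and reports any property that is missing or blank. The names `EXAMPLE_PROPERTIES`, `REQUIRED`, and `missing_properties` are hypothetical, and treating every row of Table 1 as required is an assumption made for this example data flow. The snippet does not talk to NiFi.

```python
# Example values copied from Table 1 (illustrative; use your own values).
EXAMPLE_PROPERTIES = {
    "Kafka Brokers": (
        "docs-messaging-broker1.cdf-docs.a465-9q4k.cloudera.site:9093,"
        "docs-messaging-broker2.cdf-docs.a465-9q4k.cloudera.site:9093,"
        "docs-messaging-broker3.cdf-docs.a465-9q4k.cloudera.site:9093"
    ),
    "Topic Name(s)": "Customer",
    "Record Reader": "ReadCustomerAvroRoot",
    "Record Writer": "CSVRecordSetWriter",
    "Security Protocol": "SASL_SSL",
    "SASL Mechanism": "PLAIN",
    "Username": "srv_nifi-kafka-ingest",
    "Password": "password",  # placeholder, as in the table
    "SSL Context Service": "Default NiFi SSL Context Service",
    "Group ID": "nifi-s3-ingest",
}

# Assumption: every property listed in Table 1 is needed for this flow.
REQUIRED = tuple(EXAMPLE_PROPERTIES)


def missing_properties(properties, required=REQUIRED):
    """Return the required property names that are absent or blank."""
    return [
        name
        for name in required
        if not str(properties.get(name, "")).strip()
    ]


# An empty list means every required property has a value.
print(missing_properties(EXAMPLE_PROPERTIES))
```

Any name returned by `missing_properties` is a property to fill in before you click Apply.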