Configure the processor for your data target

Learn how to configure a data target processor for the Kafka ingest data flow.

You can set up a data flow to move data to many locations. This example assumes that you are moving data to Apache Kafka. If you want to move data to a different location, see the other use cases in the Cloudera Data Flow for Data Hub library.

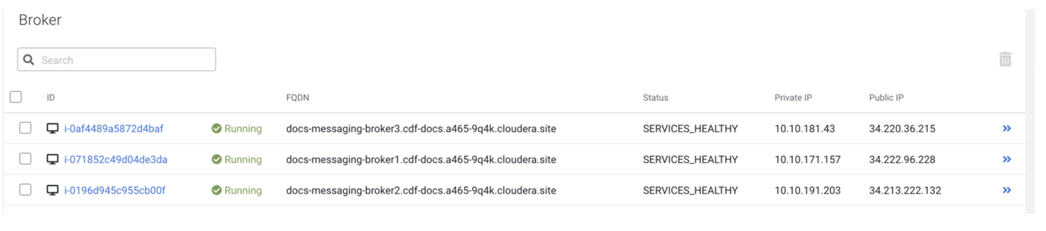

This example data flow writes data to Kafka. To build the Kafka broker URLs, append port :9093 to the FQDN of each broker. You can find the broker hostnames on the Hardware tab of the Streams Messaging cluster overview page.
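As a minimal sketch of the step above, the following Python helper appends the port to each broker FQDN and joins the results into the comma-separated list that the Kafka Brokers property expects. The function name and the hostnames are hypothetical placeholders, not values from a real cluster; substitute the hostnames listed for your own Streams Messaging cluster.

```python
def build_broker_list(hostnames, port=9093):
    """Append the port to each broker FQDN and join the entries with commas."""
    # Ignore blank entries and stray whitespace copied from the UI.
    cleaned = [h.strip() for h in hostnames if h.strip()]
    return ",".join(f"{host}:{port}" for host in cleaned)


# Hypothetical hostnames, for illustration only.
brokers = build_broker_list([
    "broker1.example.site",
    "broker2.example.site",
])
print(brokers)
```

You can paste the printed string into the Kafka Brokers property of the processor.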

The following properties are used for the PublishKafka2RecordCDP processor:

| Property | Description | Example value for ingest data flow |
|---|---|---|
| Kafka Brokers | Provide a comma-separated list of known Kafka Brokers. In the format | |
| Topic Name | Provide the name of the Kafka Topic to publish to. | Customer |
| Record Reader | Specify the Record Reader to use for incoming FlowFiles. | ReadCustomerCSV |
| Record Writer | Specify the Record Writer to use in order to serialize the data before sending to Kafka. | WriteCustomerAvro |
| Use Transactions | Specify whether or not NiFi should provide Transactional guarantees when communicating with Kafka. | false |
| Delivery Guarantee | Specify the requirement for guaranteeing that a message is sent to Kafka. | Guarantee Single Node Delivery |
| Security Protocol | Specify the protocol used to communicate with Kafka brokers. | SASL_SSL |
| SASL Mechanism | Specify the SASL mechanism to use for authentication. | PLAIN |
| Username | Use your CDP workload username to set this Authentication property. | srv_nifi-kafka-ingest |
| Password | Use your CDP workload password to set this Authentication property. This will use the specified CDP user to authenticate against Kafka. Make sure that this user has the correct permissions to write to the specified topic. | password |
| SSL Context Service | Specify the SSL Context Service to use for communicating with Kafka. Use the pre-configured SSLContextProvider. | Default NiFi SSL Context Service |
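Before entering these values in the processor, it can help to check them for gaps. The following is a minimal sketch, not part of NiFi or CDP: `check_properties` is a hypothetical helper that flags empty properties and broker entries that are not in hostname-plus-port form. The broker hostnames and the password in the example are placeholders.

```python
# Property names as they appear in the table for PublishKafka2RecordCDP.
REQUIRED = [
    "Kafka Brokers", "Topic Name", "Record Reader", "Record Writer",
    "Use Transactions", "Delivery Guarantee", "Security Protocol",
    "SASL Mechanism", "Username", "Password", "SSL Context Service",
]


def check_properties(props):
    """Return a list of problems found in a dict of processor properties."""
    problems = []
    for name in REQUIRED:
        if not str(props.get(name, "")).strip():
            problems.append(f"missing value: {name}")
    # Each broker entry should be a hostname followed by a numeric port.
    for entry in str(props.get("Kafka Brokers", "")).split(","):
        entry = entry.strip()
        if not entry:
            continue
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            problems.append(f"broker entry is not host:port: {entry}")
    return problems


# Example values from the table; broker hostnames are hypothetical.
example = {
    "Kafka Brokers": "broker1.example.site:9093,broker2.example.site:9093",
    "Topic Name": "Customer",
    "Record Reader": "ReadCustomerCSV",
    "Record Writer": "WriteCustomerAvro",
    "Use Transactions": "false",
    "Delivery Guarantee": "Guarantee Single Node Delivery",
    "Security Protocol": "SASL_SSL",
    "SASL Mechanism": "PLAIN",
    "Username": "srv_nifi-kafka-ingest",
    "Password": "password",  # placeholder, never hard-code a real password
    "SSL Context Service": "Default NiFi SSL Context Service",
}
print(check_properties(example))
```

An empty list means every property has a value and every broker entry includes a port. The check does not verify that the user has permission to write to the topic.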