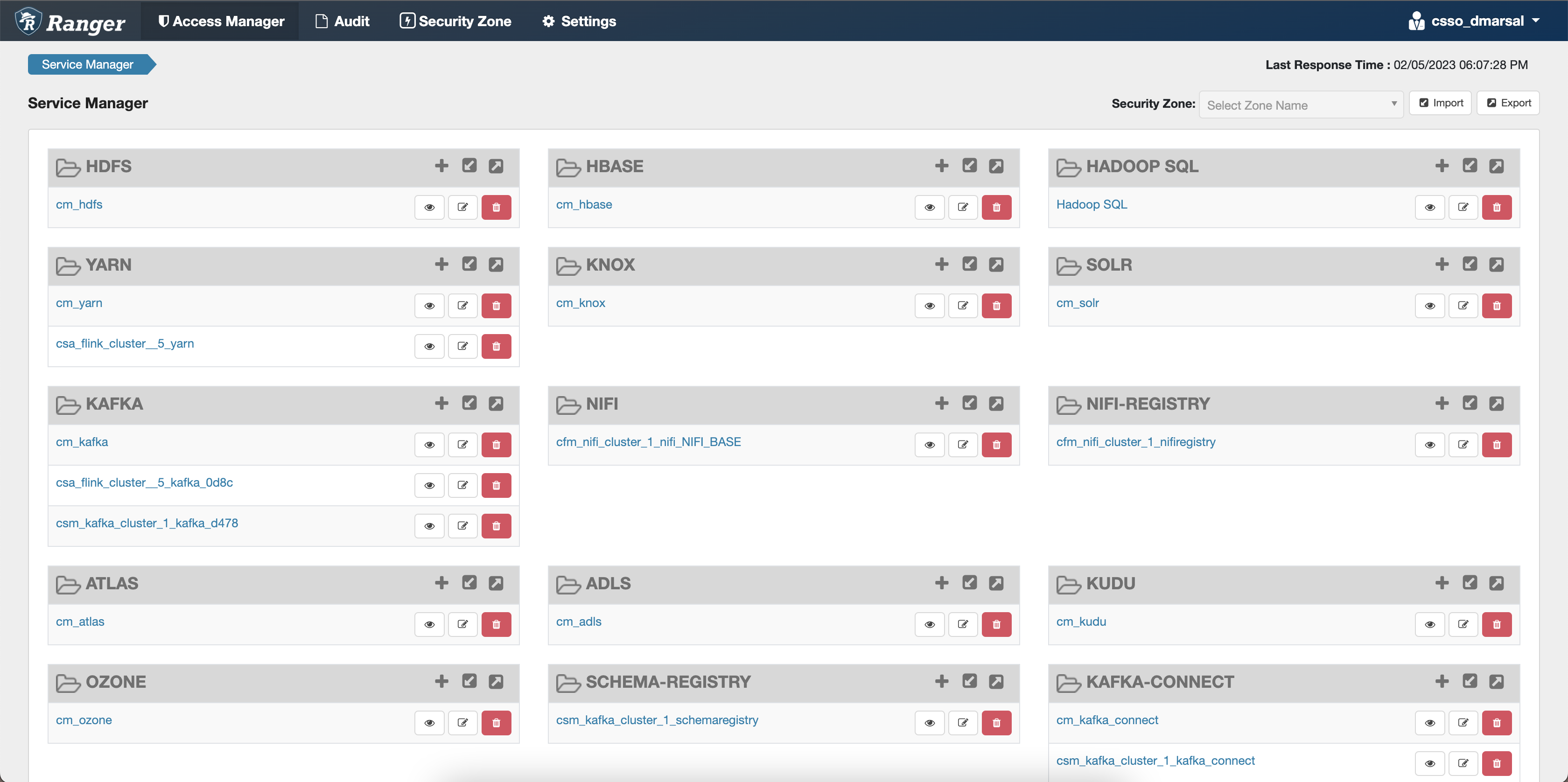

After creating your cluster

As an EnvironmentAdmin, you need to give users access to your environment and to the Streaming Analytics cluster by assigning user roles, adding users to Ranger policies, and creating IDBroker mappings.

The cluster you have created using the Streaming Analytics cluster definition is kerberized and secured with SSL. Users can access cluster UIs and endpoints through a secure gateway powered by Apache Knox. Before you can use Flink and SQL Stream Builder, you must provide users access to the Streaming Analytics cluster components.

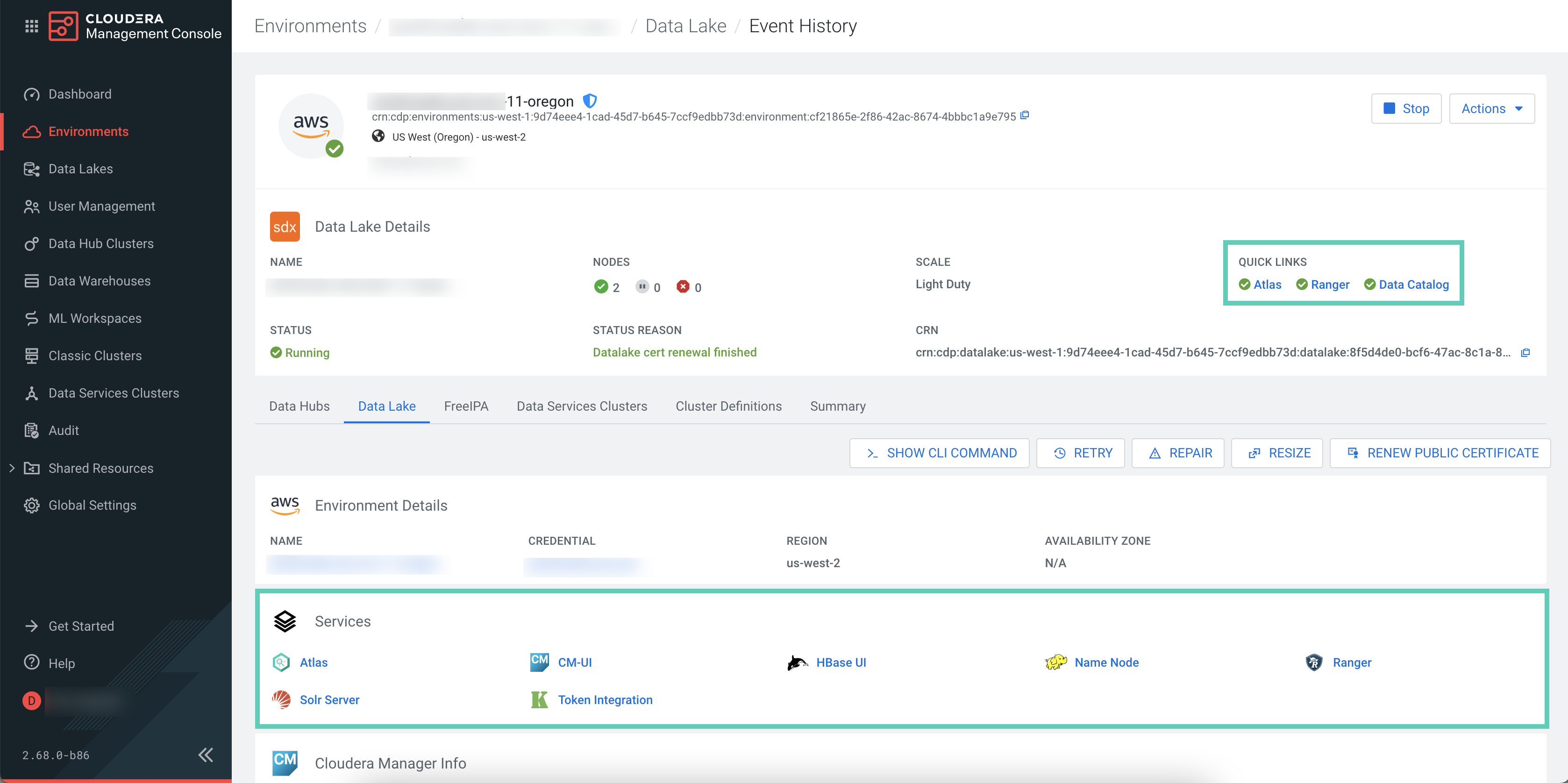

Retrieving keytab file

As a user, you need to retrieve the keytab file of your profile and upload it to the Streaming SQL Console to be able to run SQL jobs.

- Navigate to , and select the environment where you have created your cluster.

- Click on your profile name.

- Click Profile.

- Click Actions > Get Keytab.

- Choose the environment where your Data Hub cluster is running.

- Click Download.

- Save the keytab file in a chosen location.
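Before uploading the downloaded file, it can help to confirm that it really is a keytab and not, for example, a saved error page. The following is a minimal sketch, not part of the product: it relies only on the general Kerberos keytab file format, in which the first byte is 5 and the second byte is the format version (1 or 2). The function name `looks_like_keytab` and the command-line usage are hypothetical.

```python
import sys
from pathlib import Path


def looks_like_keytab(path):
    """Return True if the file starts with a Kerberos keytab header.

    A keytab file begins with the byte 0x05 followed by a version
    byte of 0x01 or 0x02. This checks the header only; it does not
    validate the entries stored in the file.
    """
    header = Path(path).read_bytes()[:2]
    return len(header) == 2 and header[0] == 0x05 and header[1] in (0x01, 0x02)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: pass the path of the file you saved.
    keytab_path = sys.argv[1]
    if looks_like_keytab(keytab_path):
        print(f"{keytab_path} has a keytab header")
    else:
        print(f"{keytab_path} does not look like a keytab file")
```

A passing check only means the header is plausible. The Streaming SQL Console still has to accept and unlock the keytab.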

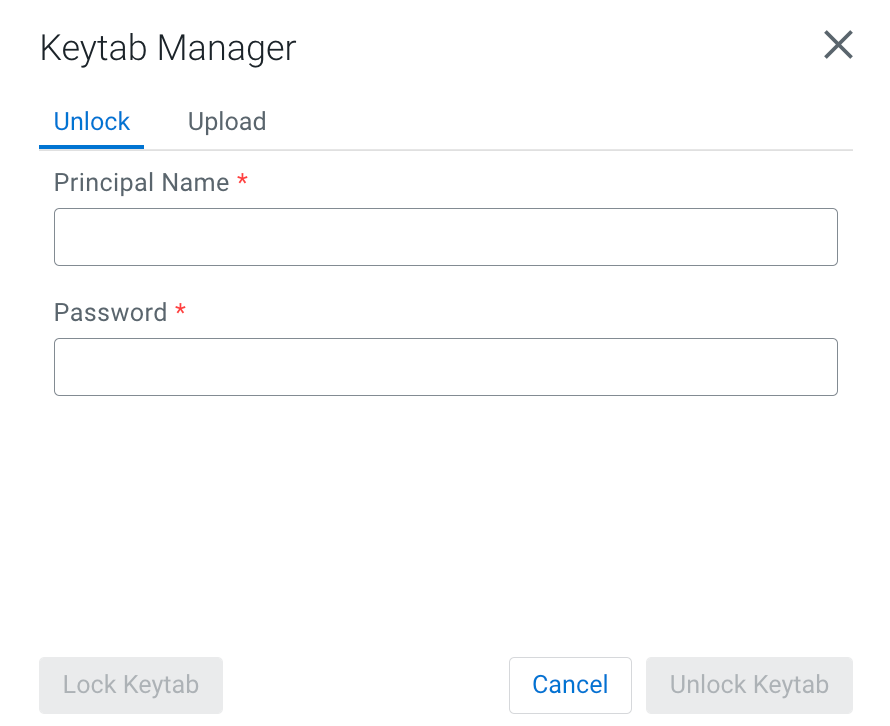

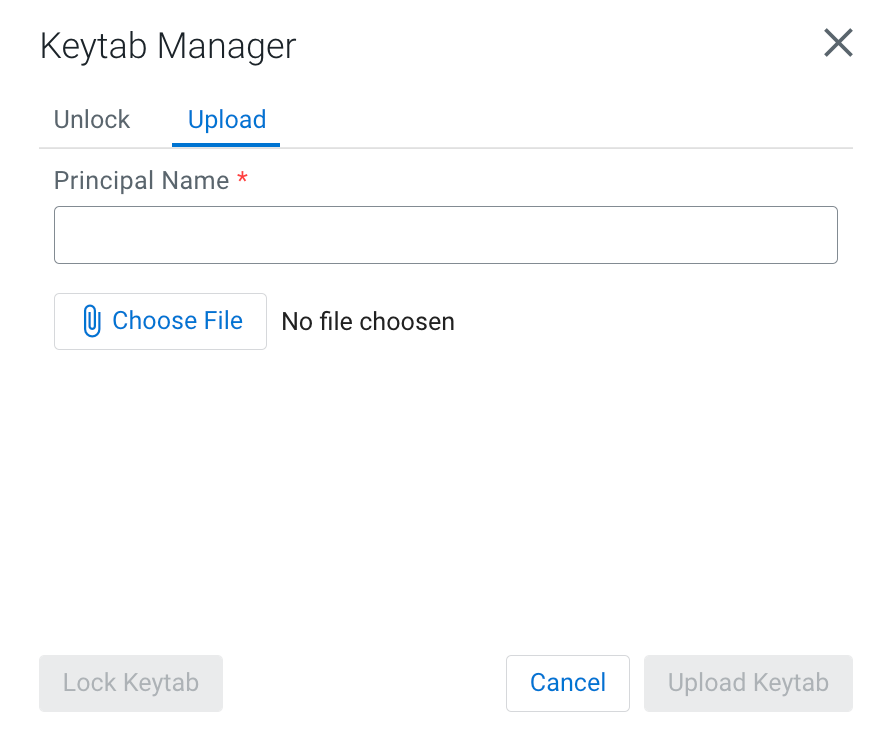

Uploading or unlocking your keytab

When accessing the Streaming SQL Console for the first time in Data Hub, you must upload and unlock the keytab file corresponding to your profile before you can use SQL Stream Builder (SSB).

Configuring Ranger policies for Flink and SSB

You must add your workload username and the SQL Stream Builder (SSB) service user to the Ranger policies that are needed for Kafka, Schema Registry, Hive and Kudu to provide access to topics, schemas and tables used by the components and to be able to execute Flink jobs.

You need to provide access to users and the SSB service by configuring Ranger policies for the Kafka data source and the Schema Registry, Kudu and Hive catalog services. To be able to use Flink, you need to add the workload user or users to the required policies. For SSB, the ssb service user needs to be added to the same policies.

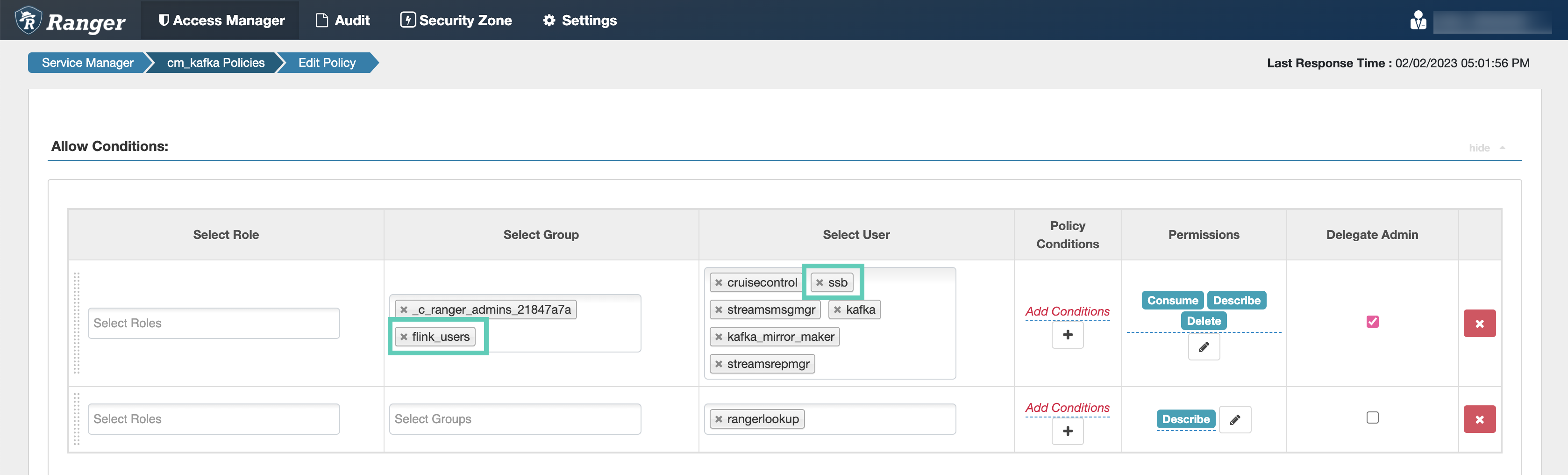

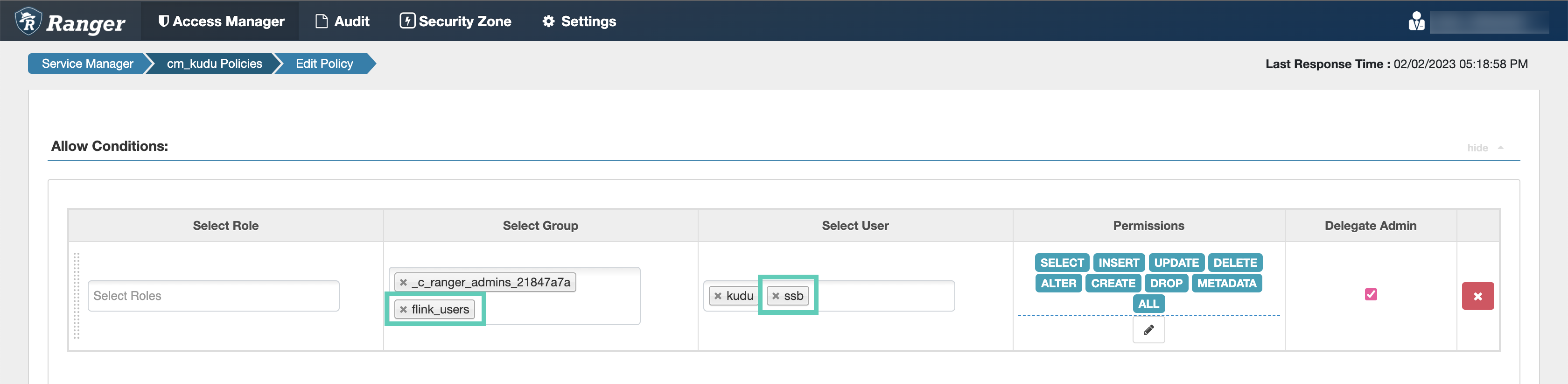

When adding more workload users, instead of adding them one by one, you can create user groups in Ranger, for example a flink_users group. This way you can assign every user who will use the Streaming Analytics cluster to a group, and add only that one group to the Ranger policies.
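The effect of adding a single group to a policy can be sketched on a policy expressed as JSON. This is a minimal illustration under stated assumptions, not the procedure used by the Ranger Admin Web UI. The field names `policyItems`, `users`, `groups`, and `accesses` are assumed to follow the Ranger policy JSON model, and the example policy content and the helper `add_group_to_policy` are hypothetical.

```python
import copy


def add_group_to_policy(policy, group):
    """Return a copy of `policy` with `group` added to every policy item.

    The input dictionary is left unchanged, and a group that is
    already present is not added a second time.
    """
    updated = copy.deepcopy(policy)
    for item in updated.get("policyItems", []):
        groups = item.setdefault("groups", [])
        if group not in groups:
            groups.append(group)
    return updated


# Hypothetical policy, shaped like a policy exported as JSON (assumption).
example_policy = {
    "name": "all - topic",
    "policyItems": [
        {
            "users": ["ssb"],
            "groups": [],
            "accesses": [{"type": "consume", "isAllowed": True}],
        }
    ],
}

updated_policy = add_group_to_policy(example_policy, "flink_users")
print(updated_policy["policyItems"][0]["groups"])
```

With a group in place, granting a new user access becomes a matter of group membership rather than editing each policy.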

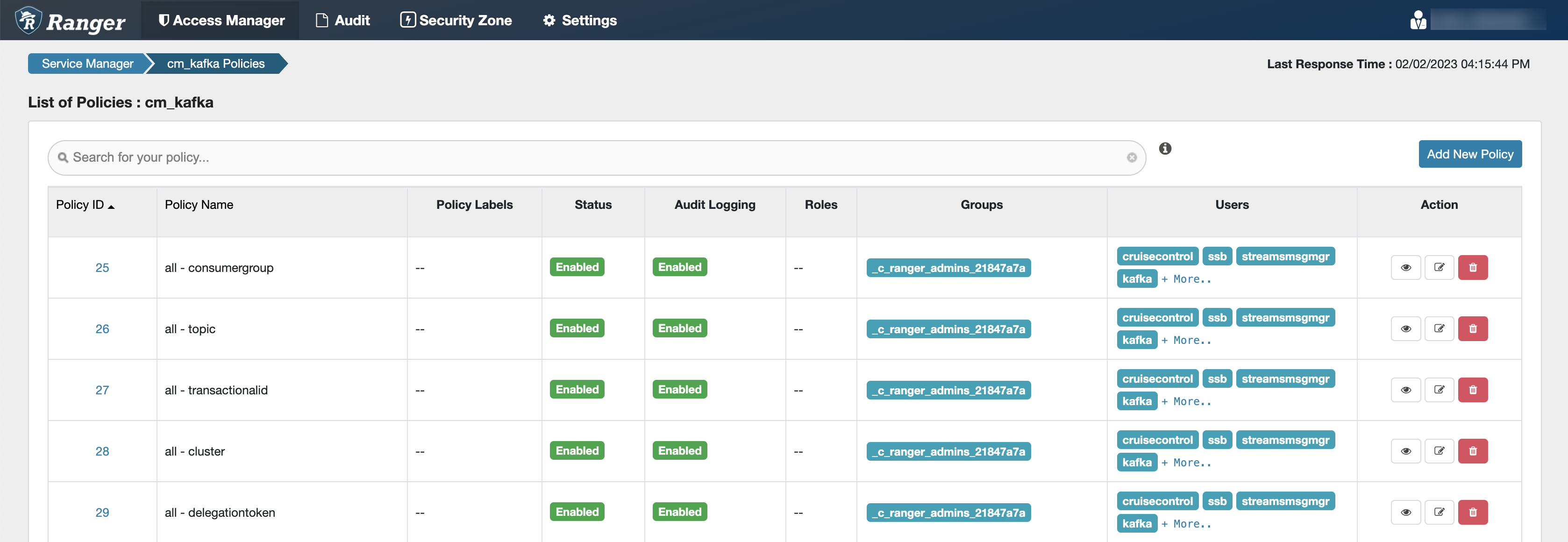

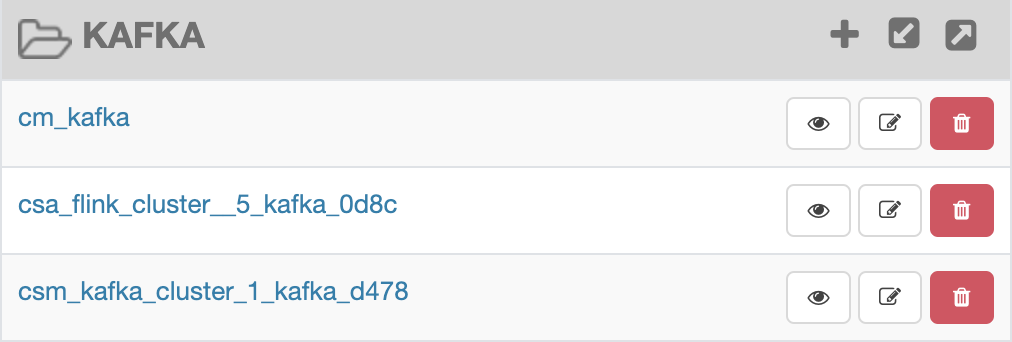

Configuring Kafka policies

After accessing the Ranger Admin Web UI, add the workload username or user groups and the SSB service user to the following Kafka policies to be able to use Flink and SSB:

- all - topic: Provides access to all topics for users

- all - consumergroup: Provides access to all consumer groups for users

- all - cluster: Provides access to all clusters for users

- all - transactionalid: Provides transactionalid access to users

- all - delegationtoken: Provides delegationtoken access to users

The ssb service user is added to the policies of the Kafka service and to the policies of the created Streams Messaging and Streaming Analytics clusters.
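To verify that a user or group appears in each of the Kafka policies listed above, the check can be expressed over policies represented as JSON. This is a sketch under assumptions. The policy names are taken from the list above, while the JSON field names (`name`, `policyItems`, `users`, `groups`) are assumed to follow the Ranger policy model. The sample data and the helper `policies_missing_principal` are hypothetical.

```python
REQUIRED_KAFKA_POLICIES = [
    "all - topic",
    "all - consumergroup",
    "all - cluster",
    "all - transactionalid",
    "all - delegationtoken",
]


def policies_missing_principal(policies, principal, required=REQUIRED_KAFKA_POLICIES):
    """Return the required policy names that do not list `principal`.

    `principal` matches if it appears as a user or as a group in any
    policy item. A required policy that is absent from `policies`
    is reported as missing too.
    """
    by_name = {policy["name"]: policy for policy in policies}
    missing = []
    for name in required:
        items = by_name.get(name, {}).get("policyItems", [])
        found = any(
            principal in item.get("users", []) or principal in item.get("groups", [])
            for item in items
        )
        if not found:
            missing.append(name)
    return missing


# Hypothetical sample: only two of the five policies have been updated.
sample_policies = [
    {"name": "all - topic", "policyItems": [{"users": ["ssb", "jdoe"], "groups": []}]},
    {"name": "all - consumergroup", "policyItems": [{"users": ["ssb"], "groups": ["flink_users"]}]},
]

print(policies_missing_principal(sample_policies, "ssb"))
```

The same check applies to the Schema Registry, Hive, and Kudu policies by passing a different `required` list.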

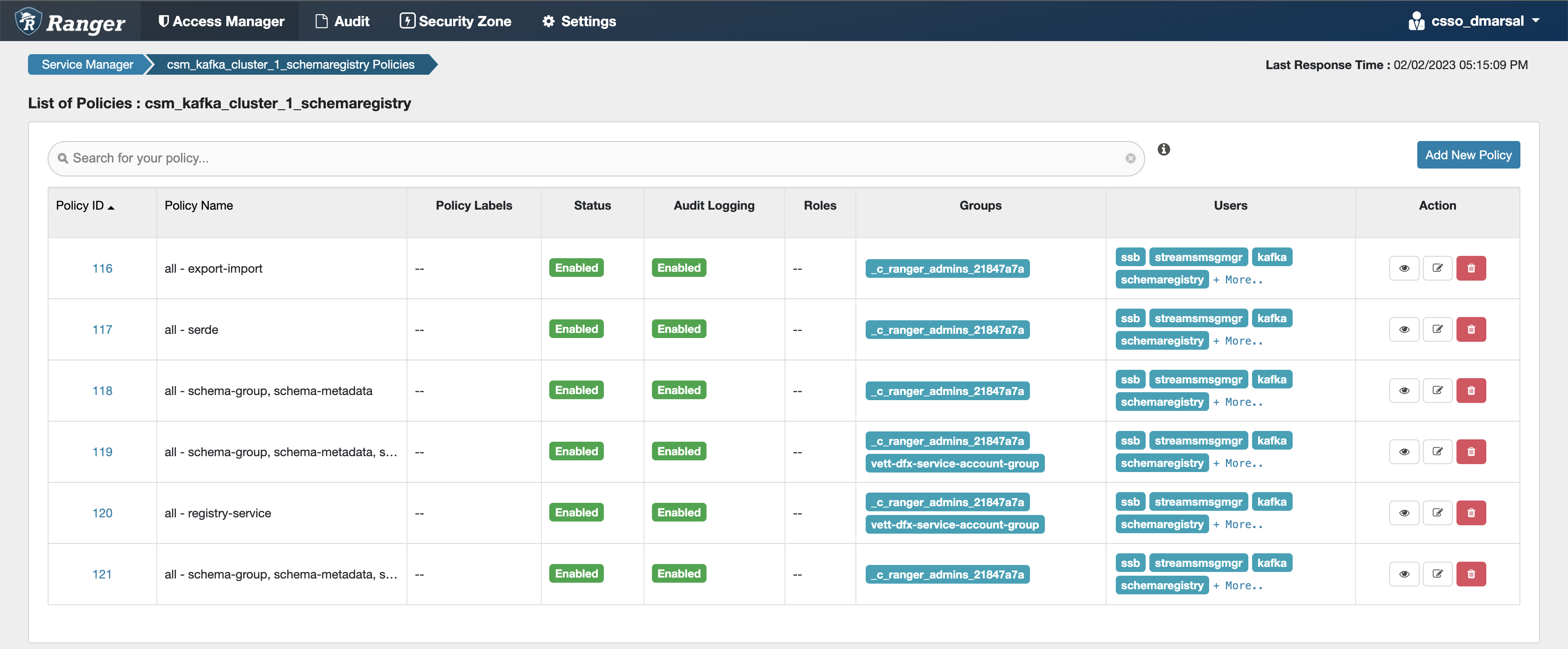

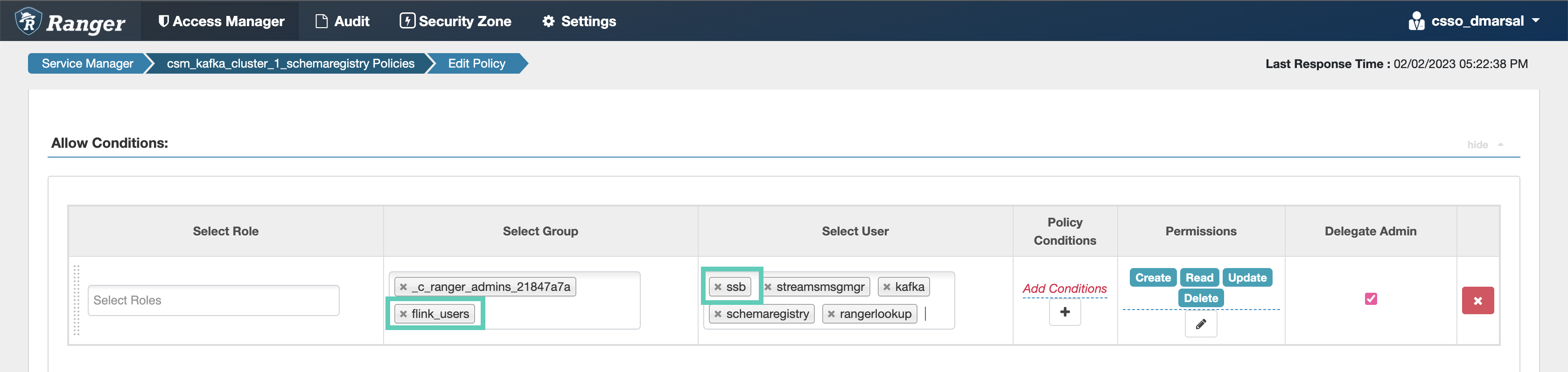

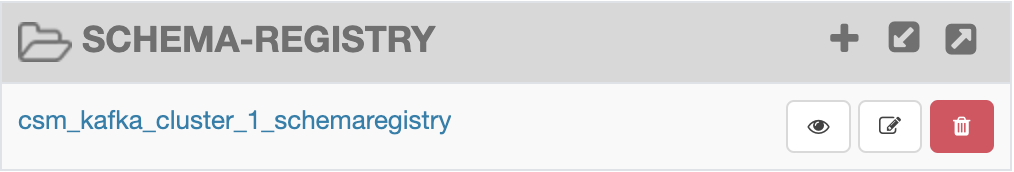

Configuring Schema Registry policies

After accessing the Ranger Admin Web UI, add the workload username or user groups and the SSB service user to the following Schema Registry policies to be able to use Flink and SSB:

- all - export-import: Provides import and export access for users.

- all - serde: Provides access to store metadata for the format of how the data should be read and written.

- all - schema-group, schema-metadata: Provides access to the schema groups and schema metadata.

- all - schema-group, schema-metadata, schema-branch: Provides access to the schema groups, schema metadata, and schema branch.

- all - schema-group, schema-metadata, schema-branch, schema-version: Provides access to schema groups, schema metadata, schema branch, and schema version.

- all - registry-service: Provides access to the Schema Registry service. The user can access all Schema Registry entities.

The ssb service user is added to the policies of the created Streams Messaging clusters.

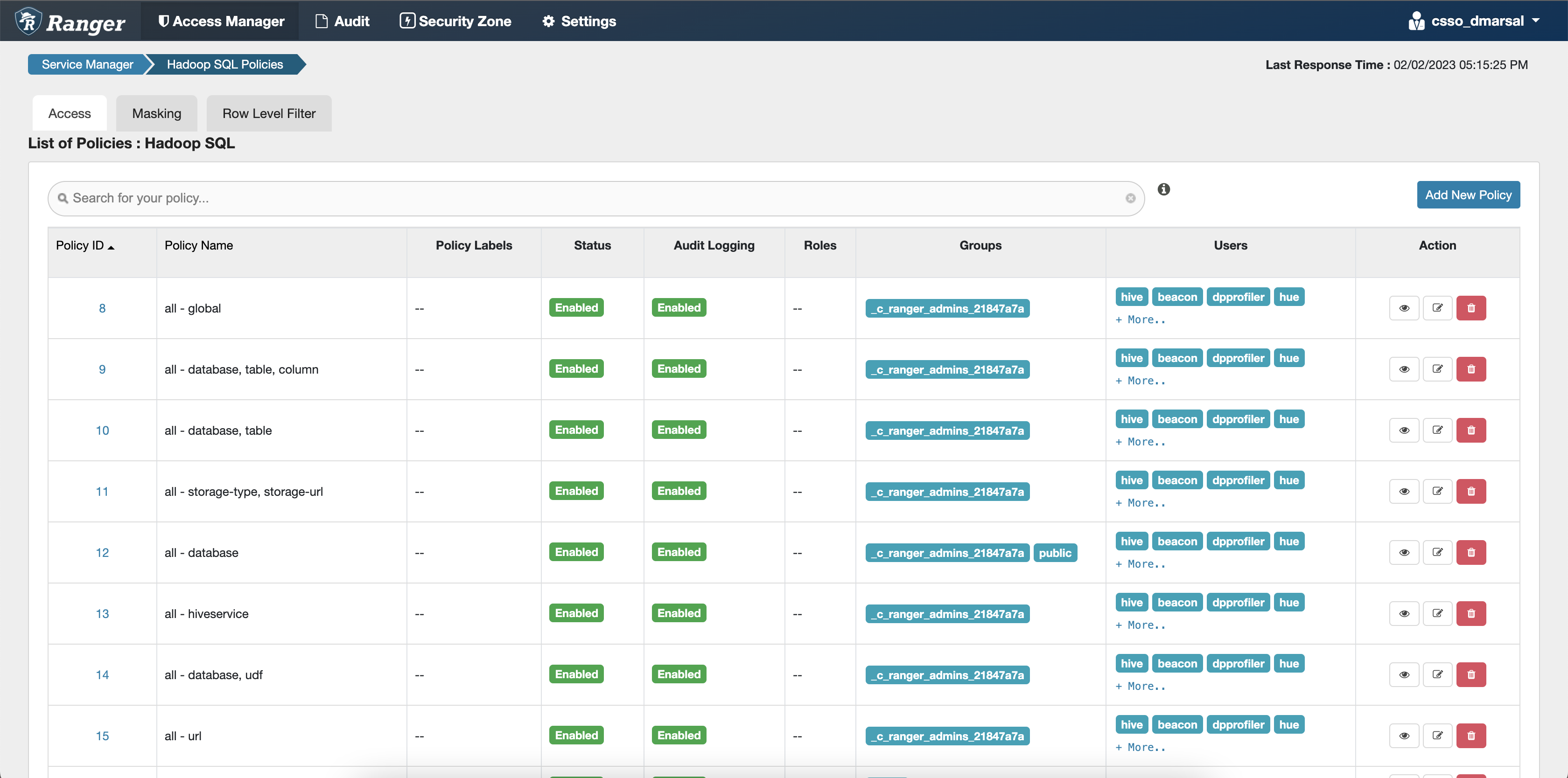

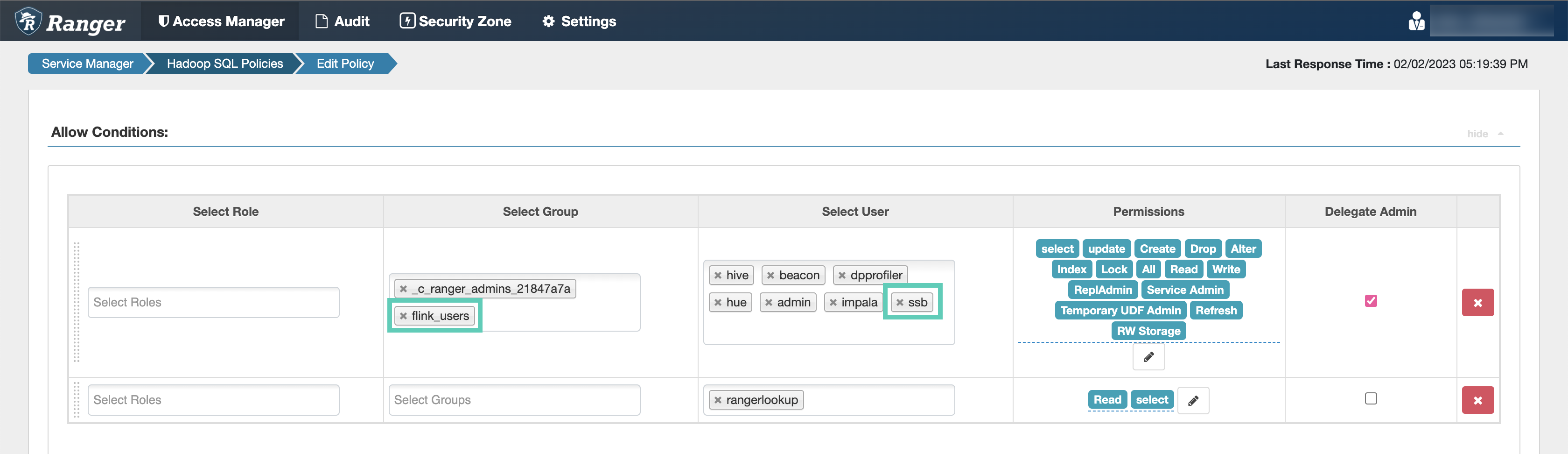

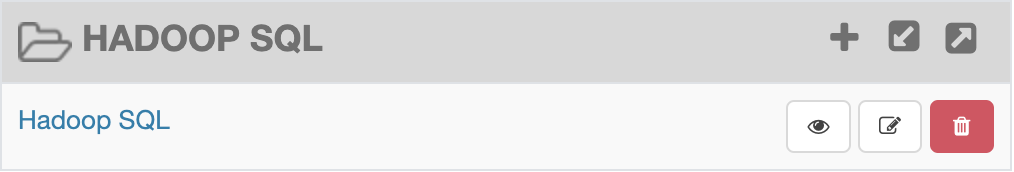

Configuring Hive policies

After accessing the Ranger Admin Web UI, add the workload username or user groups and the SSB service user to the following Hadoop SQL policies to be able to use Flink and SSB with Hive:

- all - global: Provides global access to users.

- all - database, table, column: Provides access to all databases, tables, and columns for users.

- all - database, table: Provides access to all databases and tables for users.

- all - database: Provides access to all databases for users.

- all - hiveservice: Provides hiveservice access to users.

- all - database, udf: Provides database and udf access to users.

- all - url: Provides url access to users.

The ssb service user is added to the policies of the Hive service and the created Operational Database clusters.

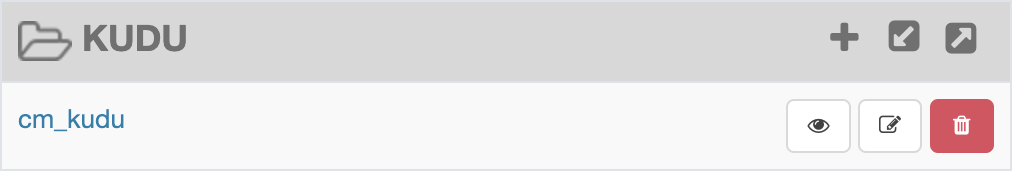

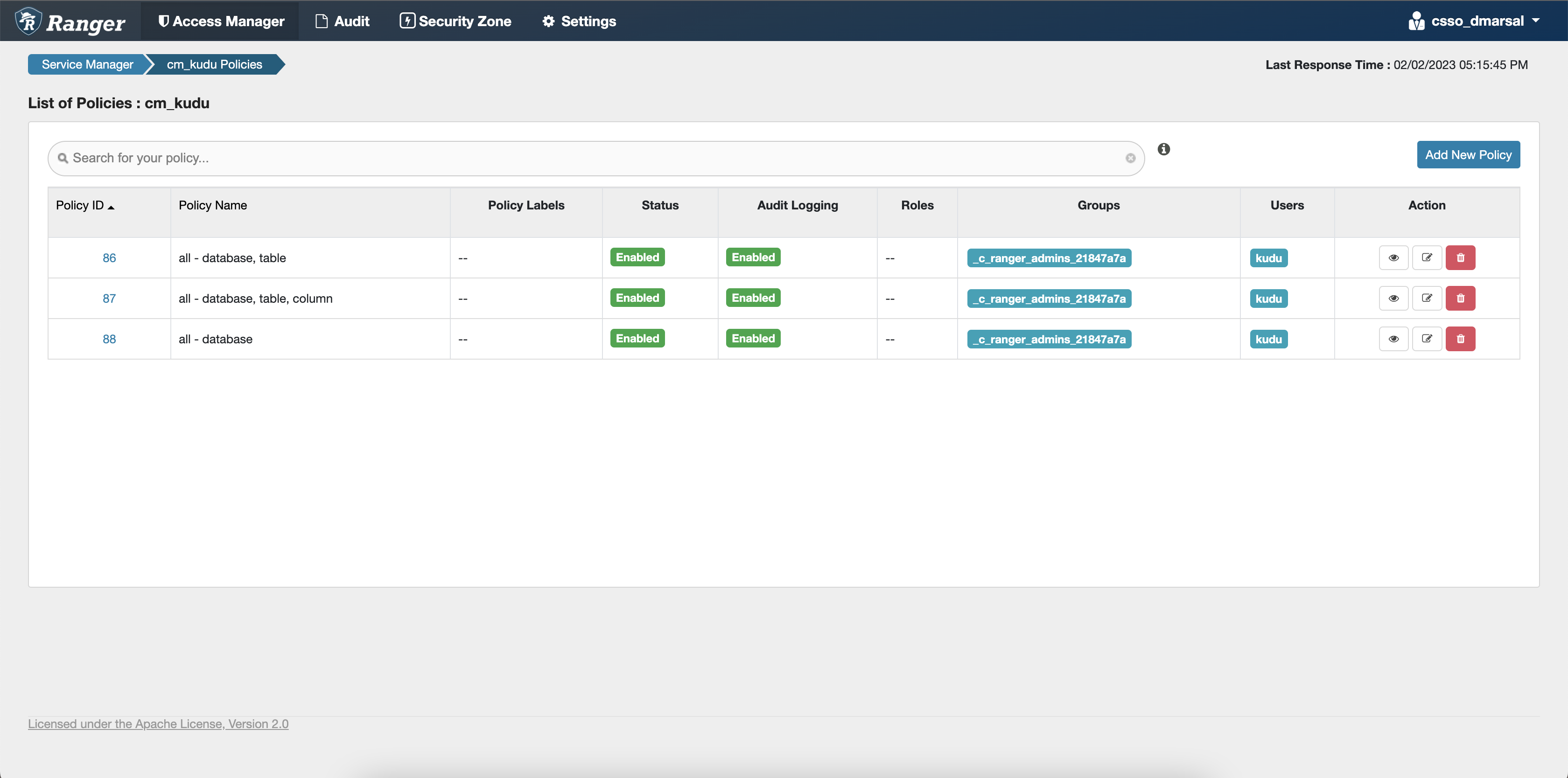

Configuring Kudu policies

After accessing the Ranger Admin Web UI, add the workload username or user groups and the SSB service user to the following Kudu policies to be able to use Flink and SSB:

- all - database, table: Provides access to all databases and tables for users.

- all - database, table, column: Provides access to all databases, tables, and columns for users.

- all - database: Provides access to all databases for users.

The ssb service user is added to the policies of the Kudu service and the created Real-Time Data Mart clusters.