Start the data flow

When your flow is ready, you can begin ingesting data into Azure Data Lake Storage folders. Learn how to start your ADLS ingest data flow.

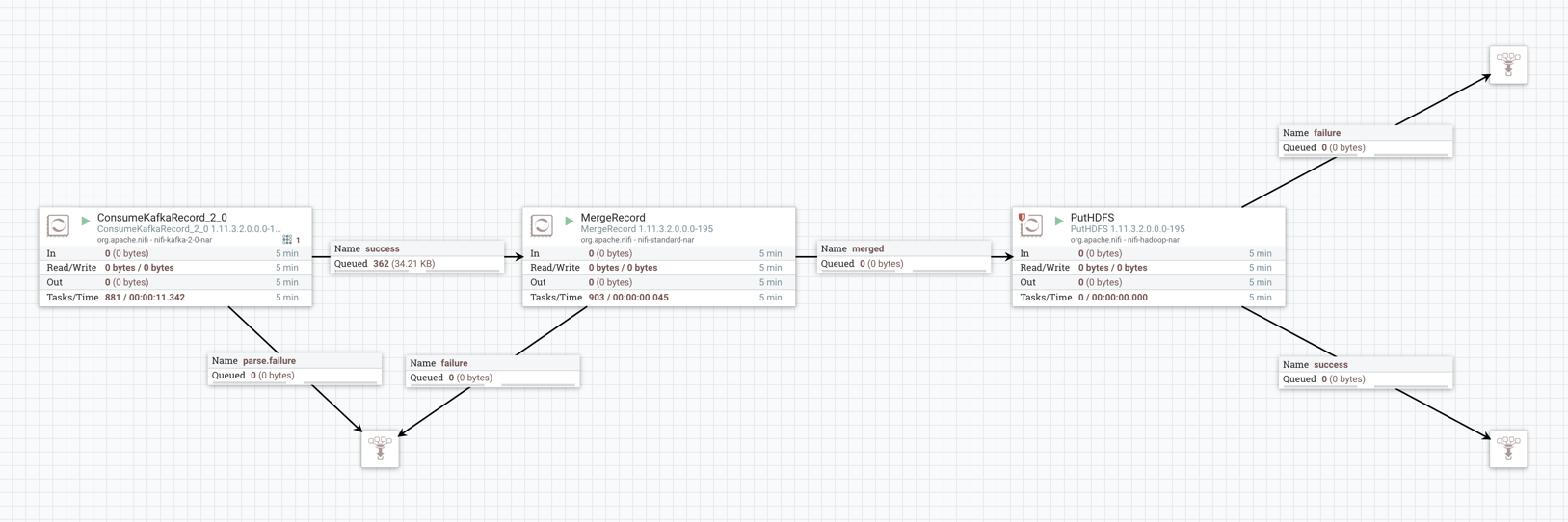

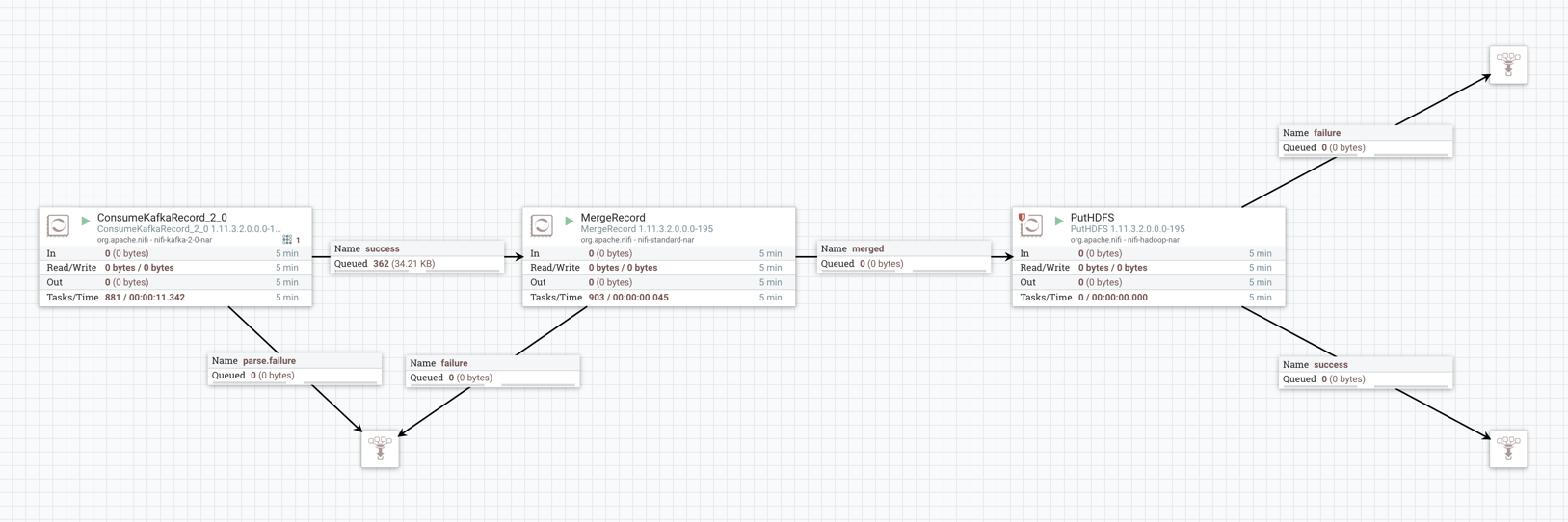

This example data flow has been created using the PutHDFS processor.
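
If you prefer to start the flow from a script rather than from the NiFi canvas, the following is a minimal sketch that uses the NiFi REST API to set a process group to the RUNNING state. The base URL, the process group ID, and the bearer token are placeholders that are not part of this example flow, and how you authenticate depends on how your cluster is secured, so treat this as an illustration under those assumptions rather than a definitive procedure.

```python
import json
import urllib.request


def build_start_request(base_url, process_group_id, token=None):
    """Build a PUT request asking NiFi to schedule a process group as RUNNING.

    base_url, process_group_id, and token are hypothetical values that
    you must replace with the ones from your own environment.
    """
    url = f"{base_url.rstrip('/')}/nifi-api/flow/process-groups/{process_group_id}"
    # The request body names the process group and the target state.
    body = json.dumps({"id": process_group_id, "state": "RUNNING"}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token:
        # Assumes token-based authentication; adjust for your setup.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, data=body, headers=headers, method="PUT")


def start_process_group(base_url, process_group_id, token=None):
    """Send the request and return NiFi's JSON response as a dict."""
    request = build_start_request(base_url, process_group_id, token)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `start_process_group("https://nifi.example.com:8443", "<process-group-id>", token="<token>")` would start every eligible component in that process group, including the PutHDFS processor. Building the request is kept separate from sending it so that you can inspect the URL and payload before anything reaches the cluster.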
