Introduction to HWC execution modes

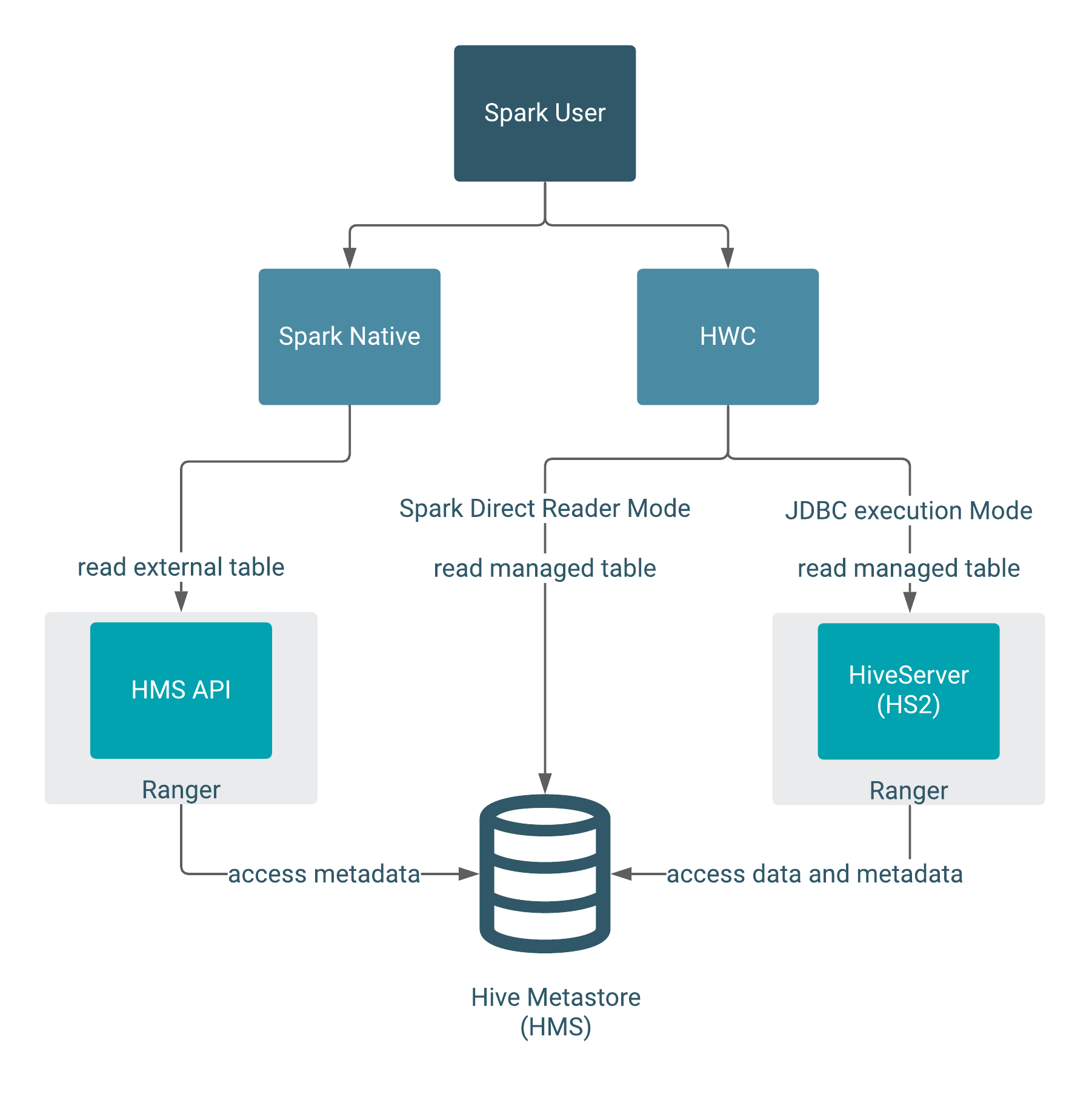

A comparison of the execution modes helps you choose an HWC configuration. The diagrams show how the configuration affects the query authorization process and your security. This topic also identifies which configuration provides fine-grained access control, such as column masking.

| Capabilities | JDBC mode | Spark Direct Reader mode |
|---|---|---|
| Ranger integration (fine-grained access control) | ✓ | N/A |
| Hive ACID reads | ✓ | ✓ |
| Workloads handled | Small datasets | ETL without fine-grained access control |

- Spark Direct Reader mode: Connects to Hive Metastore (HMS)

- JDBC execution mode: Connects to HiveServer (HS2)
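As a rough illustration of how the two connection targets above translate into configuration, the following Python sketch builds Spark configuration entries for each read mode. The property names (`spark.datasource.hive.warehouse.read.mode`, `spark.sql.hive.hiveserver2.jdbc.url`) and the mode values (`DIRECT_READER_V1`, `JDBC_CLUSTER`) are assumptions based on commonly documented HWC settings and are not stated in this topic, so verify them against your HWC version. The helper names and the example URL are hypothetical.

```python
# Property names and mode values are assumptions; confirm them for your
# HWC release before use.
READ_MODE_KEY = "spark.datasource.hive.warehouse.read.mode"
HS2_URL_KEY = "spark.sql.hive.hiveserver2.jdbc.url"

# Service each execution mode connects to, as listed above.
MODE_ENDPOINT = {
    "DIRECT_READER_V1": "Hive Metastore (HMS)",
    "JDBC_CLUSTER": "HiveServer (HS2)",
}


def build_read_conf(mode, hs2_jdbc_url=None):
    """Return Spark conf entries for the given HWC read mode (hypothetical helper)."""
    if mode not in MODE_ENDPOINT:
        raise ValueError(f"unknown read mode: {mode}")
    conf = {READ_MODE_KEY: mode}
    if mode == "JDBC_CLUSTER":
        # JDBC mode sends queries through HiveServer, so it needs the HS2 URL.
        if not hs2_jdbc_url:
            raise ValueError("JDBC mode requires a HiveServer JDBC URL")
        conf[HS2_URL_KEY] = hs2_jdbc_url
    return conf


def to_submit_args(conf):
    """Render conf entries as spark-submit style --conf arguments."""
    args = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # Placeholder host; replace with your own HiveServer address.
    jdbc_conf = build_read_conf(
        "JDBC_CLUSTER", "jdbc:hive2://hs2.example.com:10000/default"
    )
    print(" ".join(to_submit_args(jdbc_conf)))
```

Keeping the mode-to-endpoint mapping in one place makes it harder to select JDBC mode without also supplying the HiveServer URL it depends on.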

The read execution mode determines the type of query authorization for reads. Ranger authorizes access to Hive tables from Spark through HiveServer (HS2) or the Hive metastore API (HMS API).

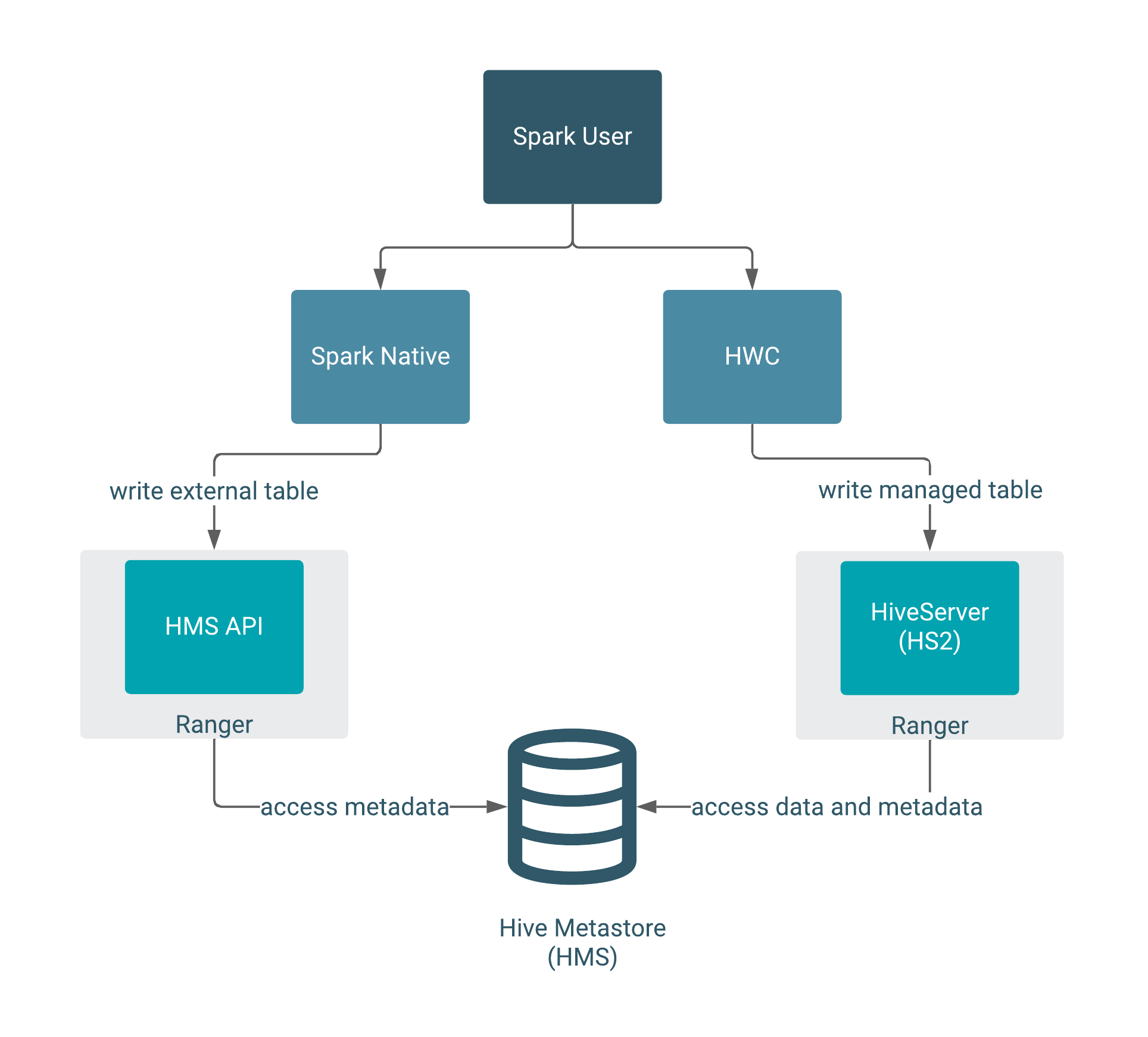

To write ACID managed tables from Spark to Hive, use HWC. To write external tables from Spark to Hive, use native Spark.
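The write rule above can be captured in a small lookup, for example in a job launcher that decides which writer to use. This is only a sketch: the function name and the `"managed"`/`"external"` labels are hypothetical and not part of HWC.

```python
def choose_write_path(table_type):
    """Map a Hive table type to the write path this topic recommends."""
    paths = {
        "managed": "HWC",            # ACID managed tables are written through HWC
        "external": "native Spark",  # external tables are written with native Spark
    }
    key = table_type.strip().lower()
    if key not in paths:
        raise ValueError(f"unknown table type: {table_type!r}")
    return paths[key]


if __name__ == "__main__":
    for table_type in ("managed", "external"):
        print(table_type, "->", choose_write_path(table_type))
```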

The following diagram shows the typical read authorization process:

The following diagram shows the typical write authorization process:

You need to use HWC to read or write managed tables from Spark. Spark Direct Reader mode does not support writing to managed tables. Managed table queries go through HiveServer, which is integrated with Ranger. External table queries go through the HMS API, which is also integrated with Ranger.

In Spark Direct Reader mode, SparkSQL queries read managed table metadata directly from the HMS, but only if you have permission to access files on the file system.

If you do not use HWC, the Hive metastore (HMS) API, integrated with Ranger, authorizes external table access. HMS API-Ranger integration enforces the Ranger Hive ACL in this case. When you use HWC, queries such as DROP TABLE affect file system data as well as metadata in HMS.

Managed tables

A Spark job impersonates the end user when attempting to access an Apache Hive managed table. As an end user, you do not have permission to access the secure, managed files in the Hive warehouse. Managed tables have default file system permissions that disallow end user access, including Spark user access.

As an administrator, you set permissions in Ranger to allow access to the managed tables in JDBC mode. You can fine-tune Ranger to protect specific data. For example, you can mask data in certain columns, or set up tag-based access control.

In Spark Direct Reader mode, you cannot use Ranger. You must set read access to the file system location for managed tables. You must have Read and Execute permissions on the Hive warehouse location (hive.metastore.warehouse.dir).
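One way to sanity-check the Read and Execute requirement is to inspect the permission string that a directory listing reports for the warehouse location. The sketch below is a simplified, hypothetical helper: it assumes a POSIX-style string such as `drwxr-x---` and looks only at the basic permission bits, so it ignores ACL entries and any other policy that may also apply on your file system.

```python
def has_read_execute(mode_string, who="other"):
    """Return True if the owner, group, or other class has both r and x.

    mode_string is a POSIX-style listing such as 'drwxr-x---'. A trailing
    '+' (ACL marker) is tolerated, but ACL entries are not evaluated.
    """
    offsets = {"owner": 1, "group": 4, "other": 7}
    if who not in offsets:
        raise ValueError(f"who must be one of {sorted(offsets)}")
    if len(mode_string) < 10:
        raise ValueError(f"not a permission string: {mode_string!r}")
    start = offsets[who]
    triplet = mode_string[start:start + 3]
    # Lowercase s or t in the third position still implies execute is set.
    return triplet[0] == "r" and triplet[2] in ("x", "s", "t")


if __name__ == "__main__":
    listing = "drwxr-x---"  # example value, not taken from a real cluster
    for who in ("owner", "group", "other"):
        print(who, has_read_execute(listing, who))
```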

External tables

Ranger authorization of external table reads and writes is supported. You need to configure a few properties in Cloudera Manager for authorization of external table writes. You must be granted file system permissions on external table files to allow Spark direct access to the actual table data instead of just the table metadata. For example, to purge actual data you need access to the file system.

Spark Direct Reader mode vs JDBC mode

Because Spark allows users to run arbitrary code, fine-grained access control, such as row-level filtering or column-level masking, is not possible within Spark itself. This limitation extends to data read in Spark Direct Reader mode.

To restrict data access at a fine-grained level, consider using Ranger and HWC in JDBC execution mode if your datasets are small. If you do not require fine-grained access, consider using HWC Spark Direct Reader mode. For example, use Spark Direct Reader mode for ETL use cases. Spark Direct Reader mode is the recommended read mode for production. Using HWC is the recommended write mode for production.
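The guidance above amounts to a short decision rule, sketched below in Python. The function name, its parameters, and the wording of the notes are hypothetical. The case of a large dataset that needs fine-grained control is not covered by this topic, so the sketch only flags it.

```python
def choose_read_mode(needs_fine_grained_access, dataset_is_small):
    """Suggest an HWC read mode and a short note, following this topic's guidance."""
    if not needs_fine_grained_access:
        # No masking or row filtering required: ETL-style reads.
        return ("Spark Direct Reader", "Suited to ETL without fine-grained access control.")
    if dataset_is_small:
        return ("JDBC", "Ranger policies are enforced through HiveServer.")
    # Only JDBC mode integrates with Ranger, but the guidance targets small datasets.
    return ("JDBC", "Fine-grained control requires JDBC mode; this guidance covers small datasets only.")


if __name__ == "__main__":
    for fine_grained, small in ((False, False), (True, True), (True, False)):
        mode, note = choose_read_mode(fine_grained, small)
        print(f"fine_grained={fine_grained}, small={small}: {mode} ({note})")
```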