Known Issues in Hue

Learn about the known issues in Hue, the impact or changes to the functionality, and the workaround.

- CDPD-40354: Uploading large files to WebHDFS from Hue secured using Knox fails

- When you upload files larger than 300 MB to WebHDFS using Hue File Browser from a Hue instance that is secured using Knox, the upload fails and you see the following error on the Hue web interface: 502 bad gateway nginx error. This error occurs only when you access the Hue instance from the Knox Gateway. Also, you may not find any exceptions in Hue or Knox service logs.

This issue is caused because the value of the addExpect100Continue property is set to true. Therefore, streaming large files causes an out-of-memory error in Knox.

- Hue uses the unsafe-inline directive in its Content Security Policy (CSP) header

- The Hue 4 web interface uses the unsafe-inline directive in its CSP header. As a result, the application server does not set the CSP header in its HTTP responses, and therefore does not benefit from the additional protection against potential cross-site scripting issues and other modern application vulnerabilities that a properly configured CSP may provide. This could leave the application vulnerable.

- Downloading Impala query results containing special characters in CSV format fails with ASCII codec error

- In CDP, Hue is compatible with Python 2.7.x, but the Tablib library for Hue has been upgraded from 0.10.x to 0.14.x, which is generally used with the Python 3 release. If you try to download Impala query results having special characters in the result set in a CSV format, then the download may fail with the ASCII unicode decode error.
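The class of error described here can be reproduced outside Hue. The following is a minimal sketch, not Hue or Tablib code: the `try_decode` helper and the sample value are illustrative assumptions. It only shows that bytes containing a non-ASCII character cannot be decoded with the ASCII codec, while the same bytes decode cleanly as UTF-8.

```python
def try_decode(raw, codec):
    """Decode raw bytes with the given codec; return (ok, text_or_error_message)."""
    try:
        return True, raw.decode(codec)
    except UnicodeDecodeError as exc:
        return False, str(exc)


# UTF-8 bytes for "cafe" with an acute accent on the final letter,
# standing in for one cell of a query result (illustrative value).
cell = b"caf\xc3\xa9"

ascii_ok, ascii_result = try_decode(cell, "ascii")
utf8_ok, utf8_result = try_decode(cell, "utf-8")

print(ascii_ok)  # False: byte 0xc3 is outside the ASCII range
print(utf8_ok)   # True: the same bytes are valid UTF-8
```

If a result set contains only ASCII characters, both calls succeed, which is consistent with the download failing only when special characters are present.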

- Impala SELECT table query fails with UTF-8 codec error

- Hue cannot handle columns containing non-UTF8 data. As a result, you may see the following error while querying tables from the Impala editor in Hue: 'utf8' codec can't decode byte 0x91 in position 6: invalid start byte.

- Psycopg2 library needed for PostgreSQL-backed Hue when on RHEL 8 or Ubuntu 20 platforms

- You may see a warning on the Host Inspector Results page stating that a compatible version of the Psycopg2 library is missing on your host if you have installed CDP 7.1.7 on RHEL 8 or Ubuntu 20 platforms and if you are using PostgreSQL as the backend database for Hue. This is because RHEL 8 and Ubuntu 20 contain Python 3 by default and Hue does not support Python 3.
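To see what the warning refers to on a given host, you can check whether Psycopg2 is importable by the Python interpreter that Hue uses. The following is a minimal sketch; the `module_version` helper is an illustrative name, not part of Hue or Cloudera Manager. It is written to run on both Python 2.7 and Python 3, and the result is only meaningful when you run it with the interpreter that Hue itself uses rather than the system default.

```python
def module_version(name):
    """Return the module's __version__, 'unknown' if it has none, or None if it cannot be imported."""
    try:
        module = __import__(name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    version = module_version("psycopg2")
    if version is None:
        print("psycopg2 is not importable by this interpreter")
    else:
        print("psycopg2 version: " + version)
```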

- Impala editor fails silently after SAML SSO session times out

- When you run a query from an Impala editor in Hue, the Impala editor may silently fail without displaying an error message. As a result, you may not see any action on the screen after submitting your query. This happens if Hue is configured with SAML authentication and you run a query from a browser session that has remained open for a period longer than the SSO maximum session time set by the SAML Identity Provider.

- Connection failed error when accessing the Search app (Solr) from Hue

- If you are using Solr with Hue to generate interactive dashboards and for indexing data, and if you have deployed two Solr services on your cluster and selected the second one as a dependency for Hue, then Cloudera Manager assigns the hostname of the first Solr service and the port number of the second Solr service, generating an incorrect Solr URL in the search section of the hue.ini file. As a result, you may see a “Connection failed” error when you try to access the Search app from the Hue web UI.
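One way to spot this mismatch is to compare the host and port in the generated Solr URL against the Solr service that Hue is meant to use. The sketch below makes several assumptions: that the property in the search section is named `solr_url`, that the hostnames and port shown are placeholders, and that only the `[search]` fragment is parsed (a complete hue.ini uses nested sections that the standard `configparser` module is not designed for).

```python
from configparser import ConfigParser
from urllib.parse import urlsplit


def solr_endpoint(ini_text):
    """Return (hostname, port) from the solr_url property in the [search] section."""
    parser = ConfigParser()
    parser.read_string(ini_text)
    url = urlsplit(parser.get("search", "solr_url"))
    return url.hostname, url.port


def matches_service(ini_text, expected_host, expected_port):
    """True if the configured Solr URL points at the expected host and port."""
    return solr_endpoint(ini_text) == (expected_host, expected_port)


# Hypothetical fragment: hostname of the first Solr service combined with
# the port of the second one, as described in this issue.
SAMPLE = """
[search]
solr_url=http://solr-1.example.com:8985/solr/
"""

if __name__ == "__main__":
    print(solr_endpoint(SAMPLE))
    print(matches_service(SAMPLE, "solr-2.example.com", 8985))
```

With the sample fragment, the check reports a mismatch because the hostname belongs to the first service while the port belongs to the second.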

- Invalid S3 URI error while accessing S3 bucket

- The Hue Load Balancer merges the double slashes (//) in the S3 URI into a single slash (/) so that the URI prefix "/filebrowser/view=S3A://" is changed to "/filebrowser/view=S3A:/". This results in an error when you try to access the S3 buckets from the Hue File Browser through port 8889. The Hue web UI displays the following error: “Unknown error occurred”. The Hue server logs record the “ValueError: Invalid S3 URI: S3A” error.

- Error while rerunning Oozie workflow

- You may see an error such as the following while rerunning an already executed and finished Oozie workflow through the Hue web interface: E0504: App directory [hdfs:/cdh/user/hue/oozie/workspaces/hue-oozie-1571929263.84] does not exist.

- Python-psycopg2 package version 2.8.4 not compatible with Hue

- Ubuntu 18.04 provides python-psycopg2 package version 2.8.4, but it is not compatible with Hue because of a bug in the Django framework.

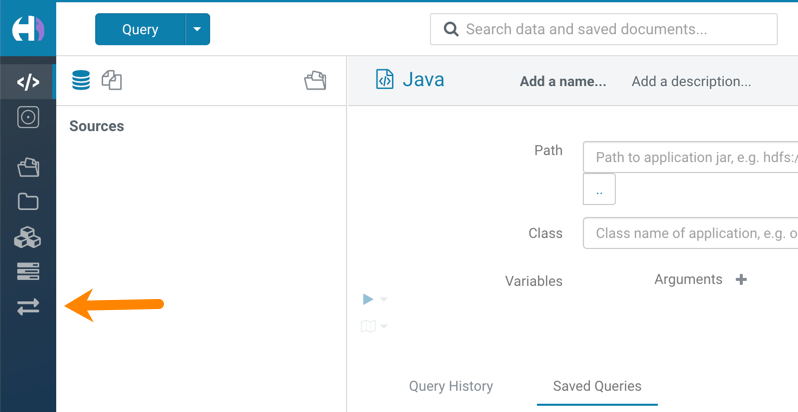

- Hue Importer is not supported in the Data Engineering template

- When you create a Data Hub cluster using the Data Engineering template, the Importer application is not supported in Hue.

- Hue limitation after upgrading from CDH to CDP Private Cloud Base

- The hive.server2.parallel.ops.in.session configuration property changes from TRUE to FALSE after upgrading from CDH to CDP Private Cloud Base. Current versions of Hue are compatible with this property change; however, if you still would like to use an earlier version of Hue that was not compatible with this property being FALSE and shared a single JDBC connection to issue queries concurrently, the connection will no longer work after upgrading.

- Unsupported feature: Importing and exporting Oozie workflows across clusters and between different CDH versions is not supported

- You can export Oozie workflows, schedules, and bundles from Hue and import them only within the same cluster if the cluster is unchanged. You can migrate bundle and coordinator jobs with their workflows only if their arguments have not changed between the old and the new cluster. Examples of such arguments are hostnames, NameNode and Resource Manager names, YARN queue names, and all the other parameters defined in the workflow.xml and job.properties files.

Using the import-export feature to migrate data between clusters is not recommended. To migrate data between different versions of CDH, for example, from CDH 5 to CDP 7, you must take a dump of the Hue database on the old cluster, restore it on the new cluster, and set up the database in the new environment. Also, the authentication method on the old and the new cluster should be the same because the Oozie workflows are tied to a user ID, and the exact user ID needs to be present in the new environment so that when a user logs into Hue, they can access their respective workflows.

- PySpark and SparkSQL are not supported with Livy in Hue

- Hue does not support configuring and using PySpark and SparkSQL with Livy in CDP Private Cloud Base.

Technical Service Bulletins

- TSB 2021-487: Cloudera Hue is vulnerable to Cross-Site Scripting attacks

- Multiple Cross-Site Scripting (XSS) vulnerabilities have been found in Cloudera Hue. They allow JavaScript code injection and execution in the application context.

- CVE-2021-29994 - The Add Description field in the Table schema browser does not sanitize user inputs as expected.

- CVE-2021-32480 - Default Home direct button in Filebrowser is also susceptible to XSS attack.

- CVE-2021-32481 - The Error snippet dialog of the Hue UI does not sanitize user inputs.

- Knowledge article

- For the latest update on this issue see the corresponding Knowledge article: TSB 2021-487: Cloudera Hue is vulnerable to Cross-Site Scripting attacks (CVE-2021-29994, CVE-2021-32480, CVE-2021-32481)