Running HDFS lineage commands

To support HDFS lineage extraction, you must execute the lineage shell script commands.

Running the -put command:

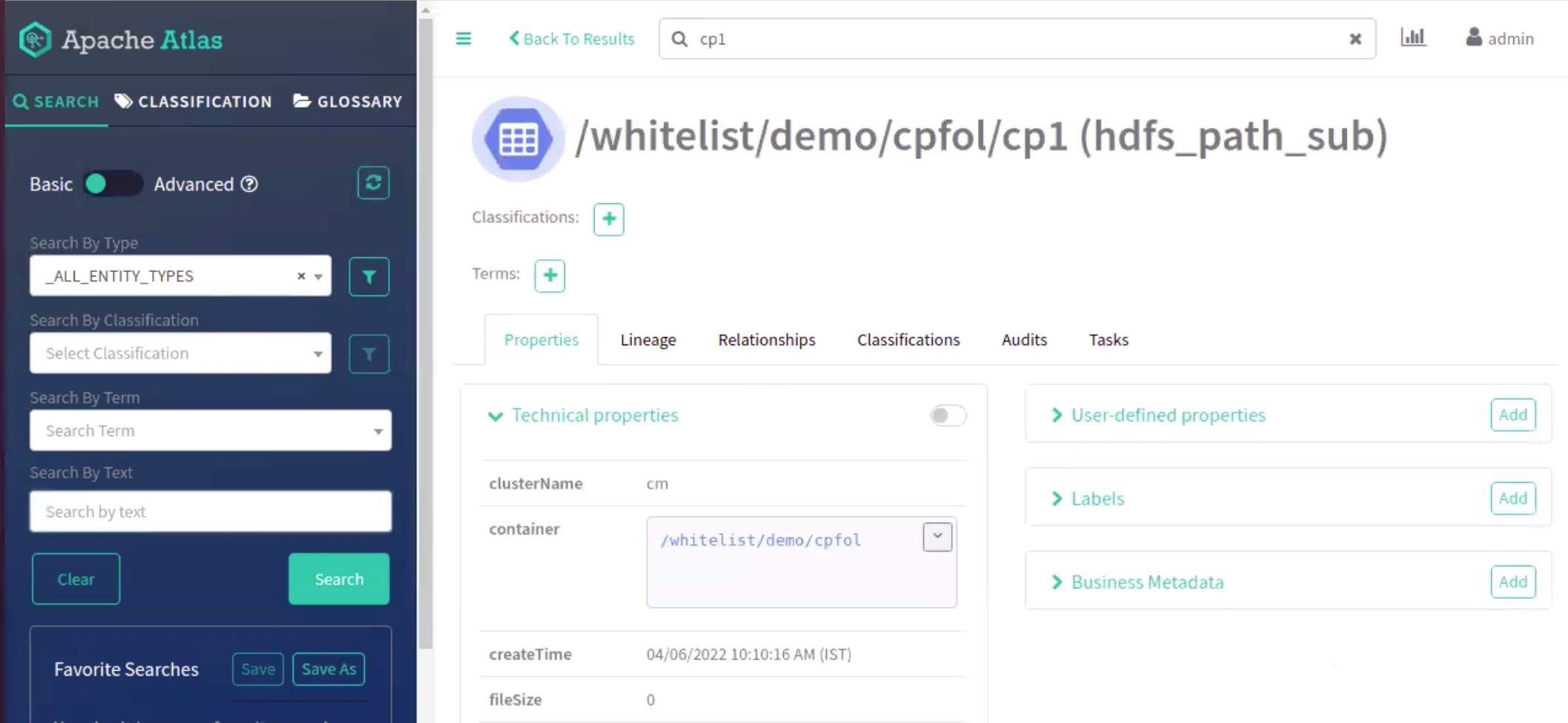

./hdfs-lineage.sh -put ~/csvfolder/ /tmp/whitelist/demo/cpfol/cp1

Log file for HDFS Extraction is /var/log/atlas/hdfs-extractor/log

Hdfs dfs -put /root/csvfolder/ /tmp/whitelist/demo/cpfol/cp1 command was successful

HDFS Meta Data extracted successfully!!!
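The two success messages in the output above lend themselves to a scripted check. The following is a minimal sketch, assuming the script prints these messages to standard output exactly as shown here; the function name `lineage_ok` is hypothetical and is not part of hdfs-lineage.sh.

```shell
# Hypothetical helper: reads hdfs-lineage.sh output on stdin and succeeds only
# if both success messages shown in this section are present.
lineage_ok() {
  # Capture everything the script printed.
  _out=$(cat)
  # The underlying HDFS command must have succeeded...
  printf '%s\n' "$_out" | grep -q 'command was successful' || return 1
  # ...and the metadata must have been extracted for Atlas.
  printf '%s\n' "$_out" | grep -q 'HDFS Meta Data extracted successfully' || return 1
}

# Usage (assumes the messages are written to stdout):
#   ./hdfs-lineage.sh -put ~/csvfolder/ /tmp/whitelist/demo/cpfol/cp1 | lineage_ok \
#     && echo "lineage recorded"
```

If the check fails, the log file named in the output (/var/log/atlas/hdfs-extractor/log) is the natural place to look next.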

You must verify that the specified folder appears in Atlas.

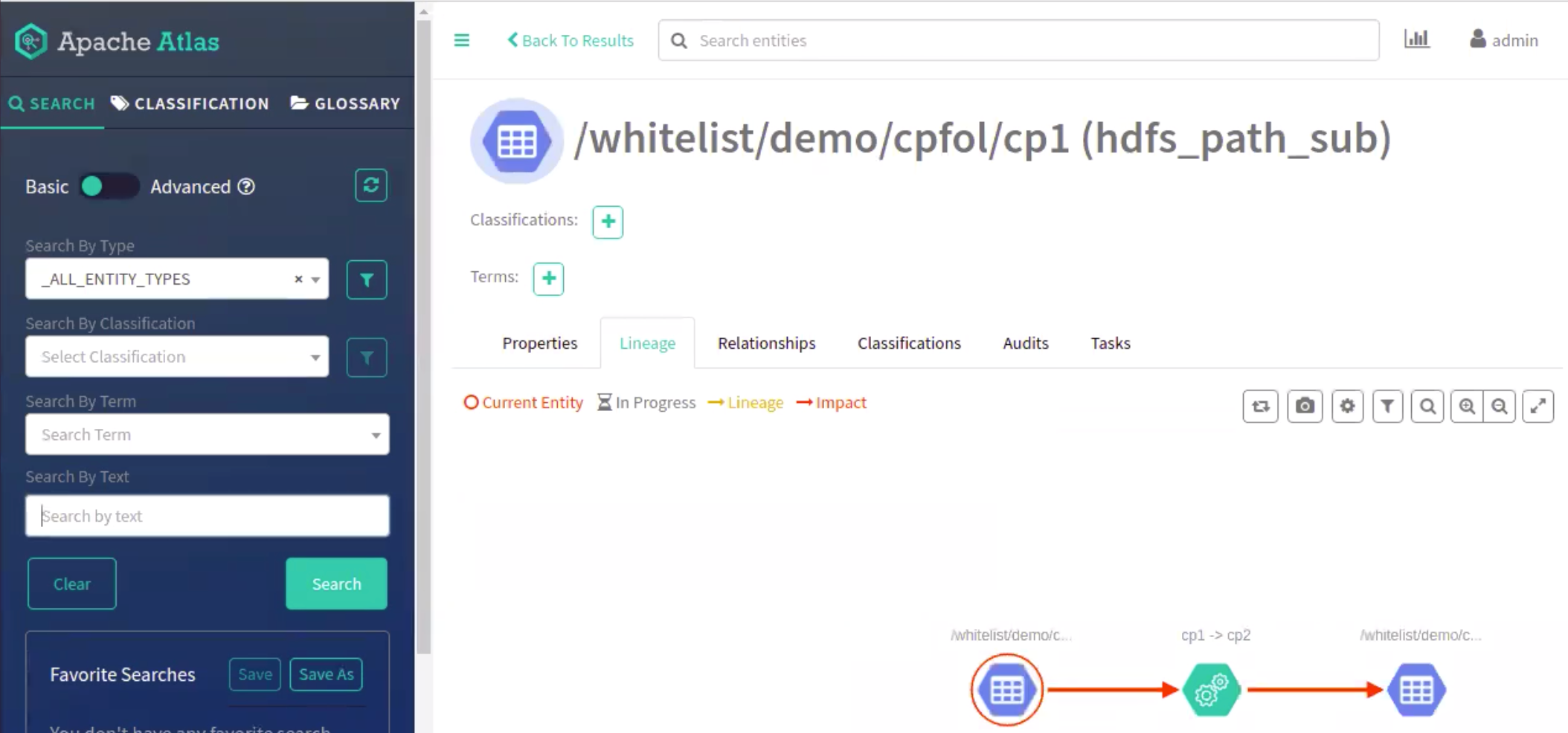

Running the -cp command:

./hdfs-lineage.sh -cp /tmp/whitelist/demo/cpfol/cp1 /tmp/whitelist/demo/cpfol/cp2

Log file for HDFS Extraction is /var/log/atlas/hdfs-extractor/log

Hdfs dfs -cp /tmp/whitelist/demo/cpfol/cp1 /tmp/whitelist/demo/cpfol/cp2 command was successful

HDFS Meta Data extracted successfully!!!

You must verify that the contents of the folder are copied to the other folder.

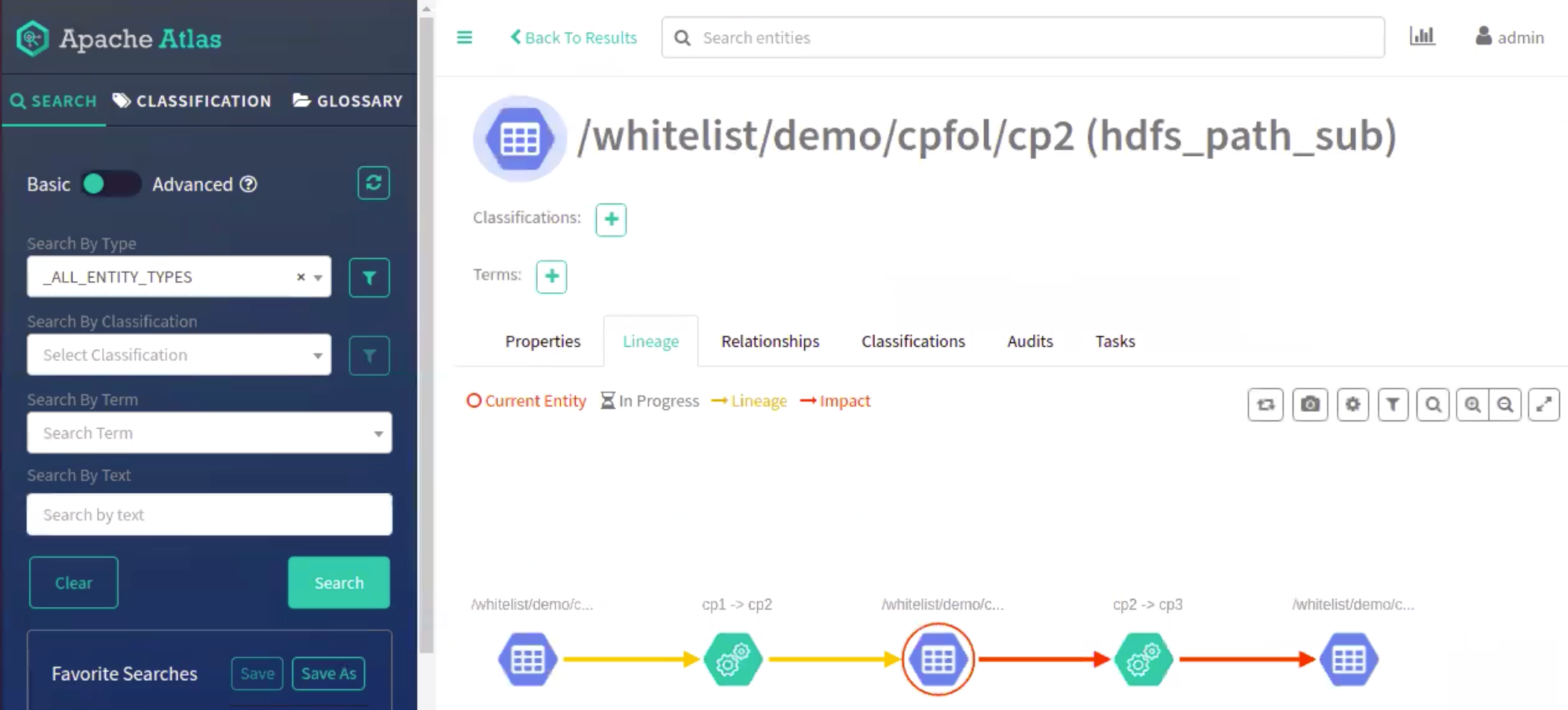

Similarly, you can copy the contents of the copied folder to another folder.

./hdfs-lineage.sh -cp /tmp/whitelist/demo/cpfol/cp2 /tmp/whitelist/demo/cpfol/cp3

Log file for HDFS Extraction is /var/log/atlas/hdfs-extractor/log

Hdfs dfs -cp /tmp/whitelist/demo/cpfol/cp2 /tmp/whitelist/demo/cpfol/cp3 command was successful

HDFS Meta Data extracted successfully!!!
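The chain of copies above (cp1 to cp2, then cp2 to cp3) follows a simple pattern that can be sketched as a loop. The helper below is hypothetical: it only prints the hdfs-lineage.sh commands rather than running them, and it assumes the folders are named cp1, cp2, and so on under a common base path, as in this example.

```shell
# Hypothetical helper: prints the -cp commands that copy <base>/cp1 to
# <base>/cp2, <base>/cp2 to <base>/cp3, and so on up to <base>/cp<last>.
print_copy_chain() {
  base=$1   # common parent folder in HDFS
  last=$2   # number of the final folder in the chain
  i=1
  while [ "$i" -lt "$last" ]; do
    next=$((i + 1))
    echo "./hdfs-lineage.sh -cp $base/cp$i $base/cp$next"
    i=$next
  done
}

# Usage: review the generated commands before running them.
#   print_copy_chain /tmp/whitelist/demo/cpfol 3
```

Printing the commands first keeps the sketch safe to try; each printed line matches a command shown in this section.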

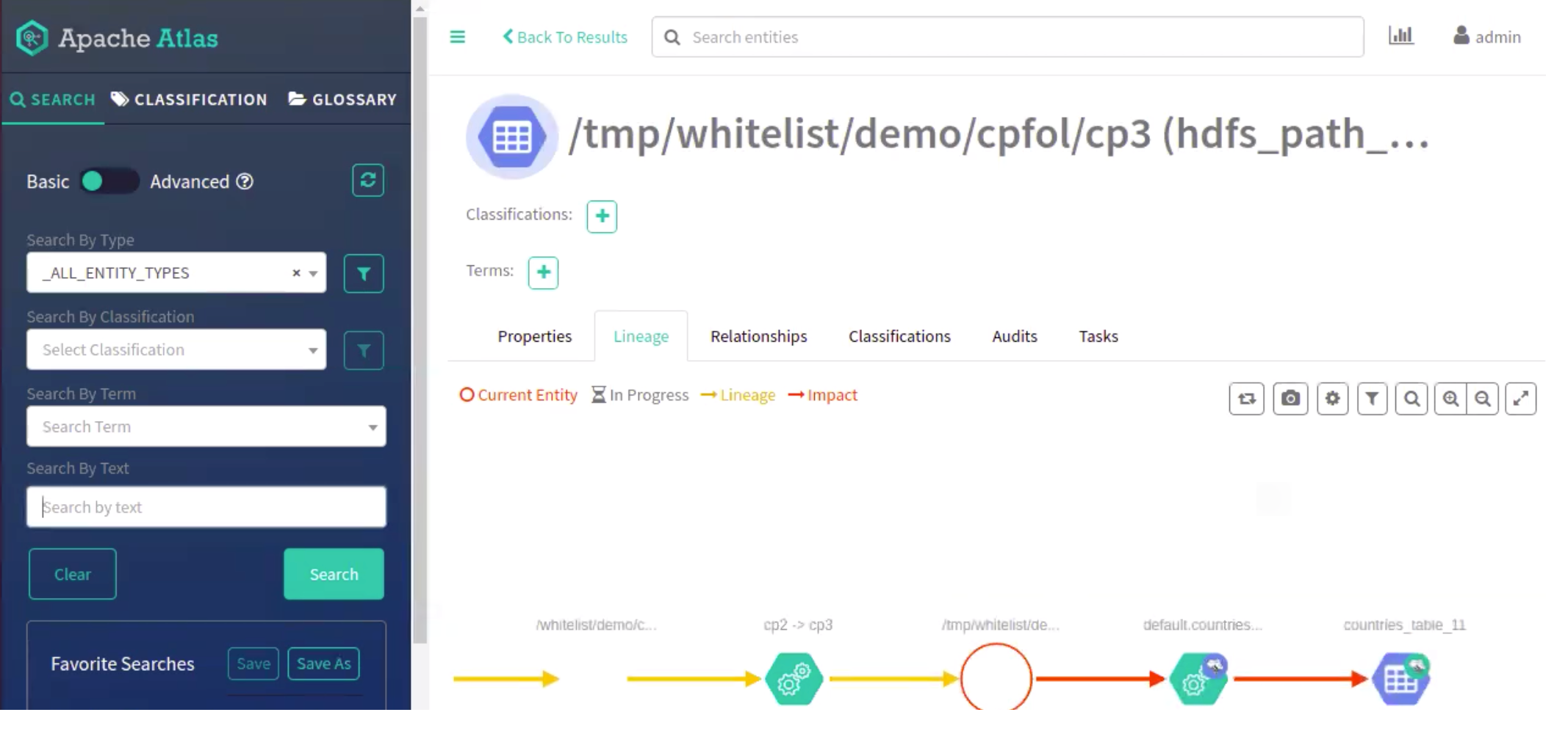

You must create an external Hive table on top of the HDFS location. For example, the following statement creates a Hive table on top of the HDFS location cp3:

create external table if not exists countries_table_11 (
CountryID int, CountryName string, Capital string, Population string)
comment 'List of Countries'
row format delimited
fields terminated by ','
stored as textfile
location '/tmp/whitelist/demo/cpfol/cp3';

Running the -mv command:

./hdfs-lineage.sh -put ~/csvfolder/ /tmp/whitelist/demo/mvfol/mv1

Log file for HDFS Extraction is /var/log/atlas/hdfs-extractor/log

Hdfs dfs -put /root/csvfolder/ /tmp/whitelist/demo/mvfol/mv1 command was successful

HDFS Meta Data extracted successfully!!!

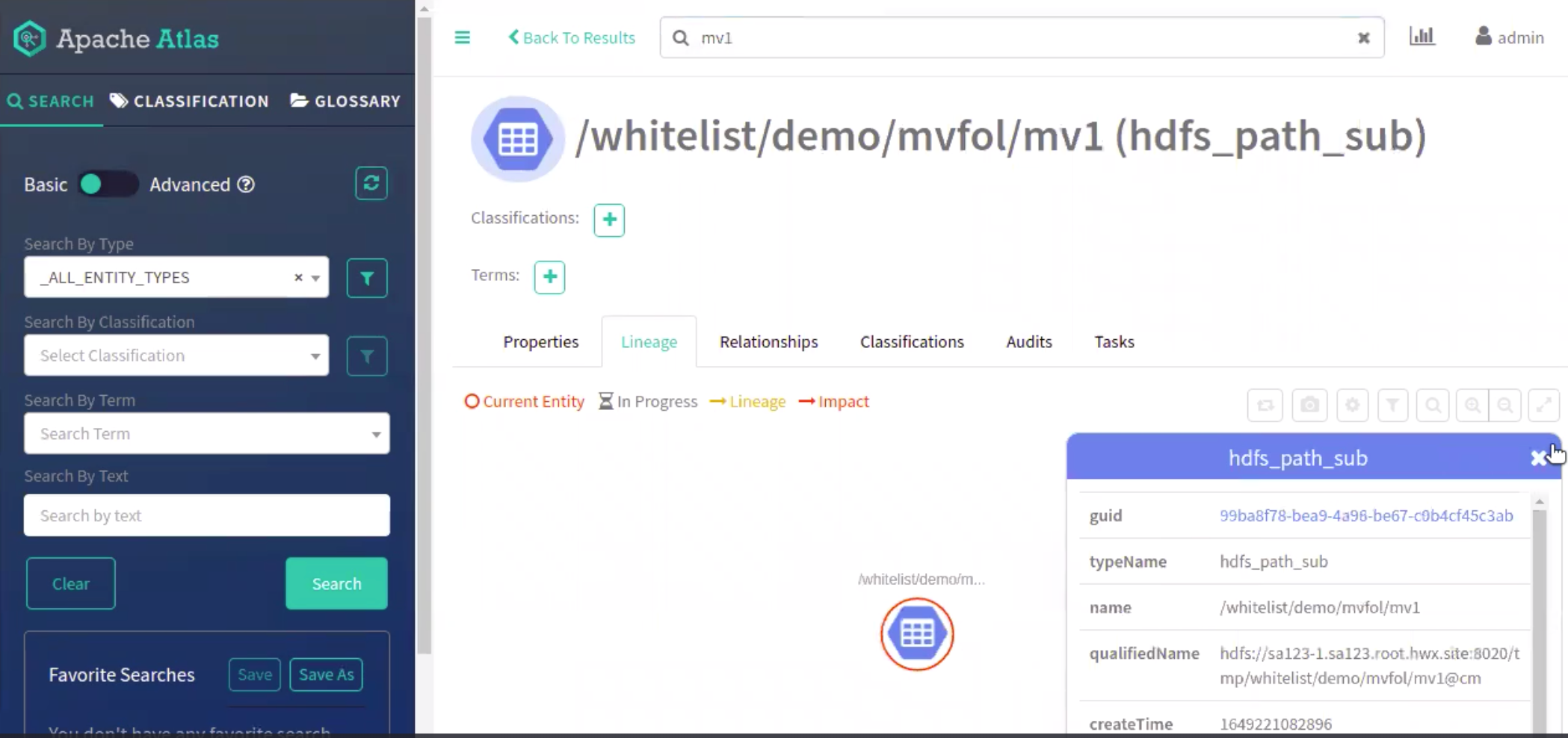

A single node is generated in the Atlas UI.

You can move the contents of the folder mv1 to mv2:

./hdfs-lineage.sh -mv /tmp/whitelist/demo/mvfol/mv1 /tmp/whitelist/demo/mvfol/mv2

Log file for HDFS Extraction is /var/log/atlas/hdfs-extractor/log

Hdfs dfs -mv /tmp/whitelist/demo/mvfol/mv1 /tmp/whitelist/demo/mvfol/mv2 command was successful

HDFS Meta Data extracted successfully!!!

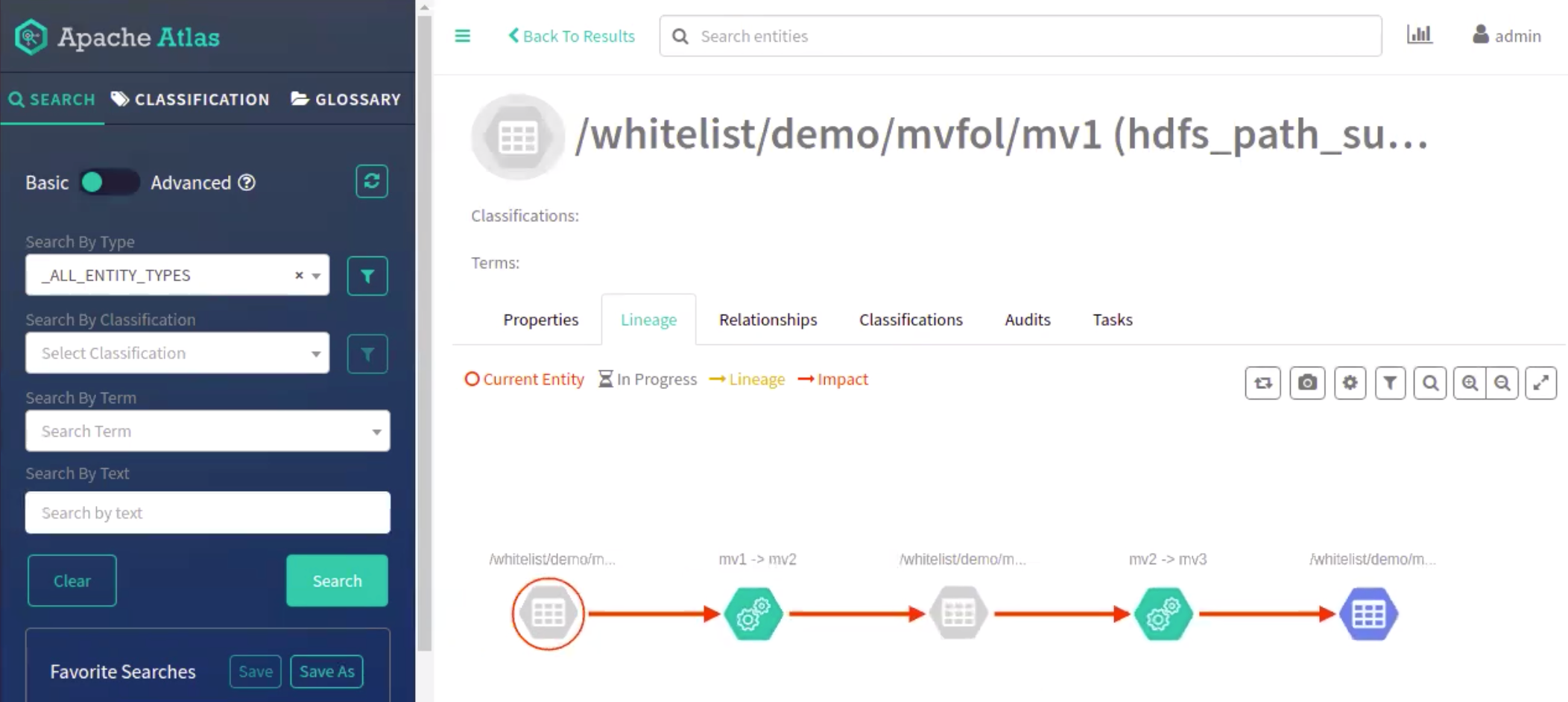

In the Atlas UI, you can see that the contents of mv1 are moved to mv2 and that the old entity is deleted (grayed out in the image). The same happens for every subsequent operation: the previously created entity is deleted.
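The deleted entity can also be looked up outside the UI. The sketch below builds a URL for the Atlas v2 basic search endpoint with `excludeDeletedEntities=false`, so that deleted entities are included in the results. It rests on several assumptions: that the extracted folders are stored as entities of type `hdfs_path`, that the host and port shown are placeholders for your Atlas server, and that the query is a simple name needing no URL encoding. The function name `atlas_search_url` is hypothetical.

```shell
# Hypothetical helper: builds an Atlas v2 basic-search URL that also returns
# deleted entities, so a moved-away folder such as mv1 would still be listed.
atlas_search_url() {
  atlas_base=$1   # placeholder, for example http://atlas-host:21000
  query=$2        # simple free-text query, for example a folder name
  # typeName=hdfs_path is an assumption about how the folders are modeled.
  echo "$atlas_base/api/atlas/v2/search/basic?typeName=hdfs_path&query=$query&excludeDeletedEntities=false"
}

# Usage (host and credentials are placeholders):
#   curl -s -u admin:password "$(atlas_search_url http://atlas-host:21000 mv1)"
```

In the JSON response, each entity carries a status field, which is where a deleted entity would be distinguishable from an active one.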

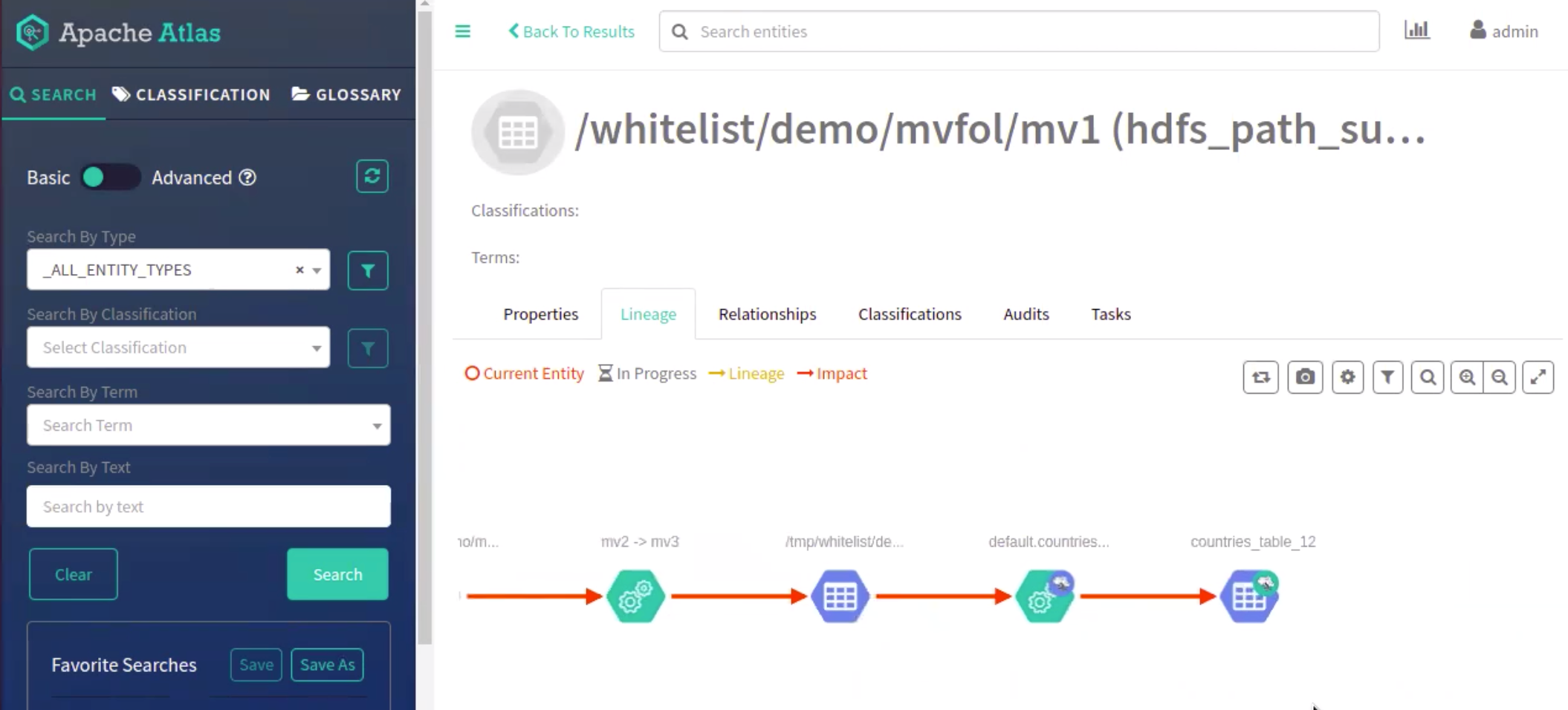

You must create an external Hive table on top of the HDFS location. For example, the following statement creates a Hive table on top of the HDFS location mv3:

create external table if not exists countries_table_12 (
CountryID int, CountryName string, Capital string, Population string)
comment 'List of Countries'
row format delimited
fields terminated by ','
stored as textfile
location '/tmp/whitelist/demo/mvfol/mv3';
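The two table definitions in this section differ only in the table name and the HDFS location. As a minimal sketch, a hypothetical helper (`countries_ddl`, not part of any Cloudera tooling) can print the statement for a given name and location so that it can be reviewed or passed to a Hive client.

```shell
# Hypothetical helper: prints the external-table statement used in this
# section for a given table name ($1) and HDFS location ($2).
countries_ddl() {
  cat <<EOF
create external table if not exists $1 (
CountryID int, CountryName string, Capital string, Population string)
comment 'List of Countries'
row format delimited
fields terminated by ','
stored as textfile
location '$2';
EOF
}

# Usage (JDBC_URL is a placeholder for your HiveServer2 connection string):
#   beeline -u "$JDBC_URL" -e "$(countries_ddl countries_table_12 /tmp/whitelist/demo/mvfol/mv3)"
```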

Running the -rm command:

Create a sample file in HDFS:

./hdfs-lineage.sh -put ~/sample.txt /tmp/whitelist/rmtest.txt

Log file for HDFS Extraction is /var/log/atlas/hdfs-extractor/log

Hdfs dfs -put /root/sample.txt /tmp/whitelist/rmtest.txt command was successful

HDFS Meta Data extracted successfully!!!

Use the rm command to delete the rmtest.txt file:

./hdfs-lineage.sh -rm -skipTrash /tmp/whitelist/rmtest.txt

Log file for HDFS Extraction is /var/log/atlas/hdfs-extractor/log

Hdfs dfs -rm -skipTrash /tmp/whitelist/rmtest.txt command was successful

HDFS Meta Data extracted successfully!!!
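To confirm on the HDFS side that the file is gone, the standard `hdfs dfs -test -e` check can be wrapped in a small function. This is a sketch only: the function name `hdfs_path_gone` and the `HDFS_CMD` override variable are hypothetical, the latter added so the logic can be exercised without a cluster.

```shell
# Hypothetical helper: succeeds when the given HDFS path does not exist.
# HDFS_CMD is a hypothetical override; it defaults to the real hdfs client.
hdfs_path_gone() {
  # `hdfs dfs -test -e <path>` exits 0 when the path exists.
  if ${HDFS_CMD:-hdfs} dfs -test -e "$1"; then
    return 1
  fi
  # Note: a non-zero exit can also mean the cluster was unreachable.
  return 0
}

# Usage:
#   hdfs_path_gone /tmp/whitelist/rmtest.txt && echo "rmtest.txt was deleted"
```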

To delete a folder and its contents, add the -r option:

./hdfs-lineage.sh -rm -r -skipTrash /tmp/whitelist/demo/delfol