Developing a dataflow for Stateless NiFi

Learn about the recommended process of building a dataflow that you can deploy with the Stateless NiFi Sink or Source connectors. This process involves building and designing a parameterized dataflow within a process group and then downloading the dataflow as a flow definition.

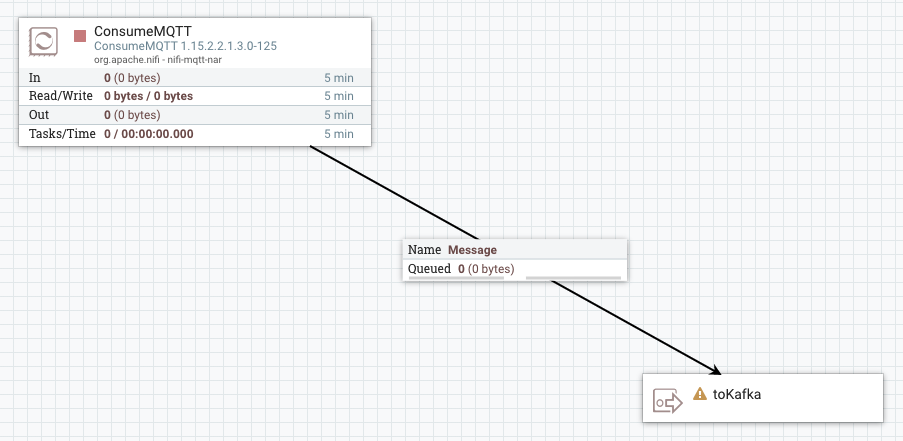

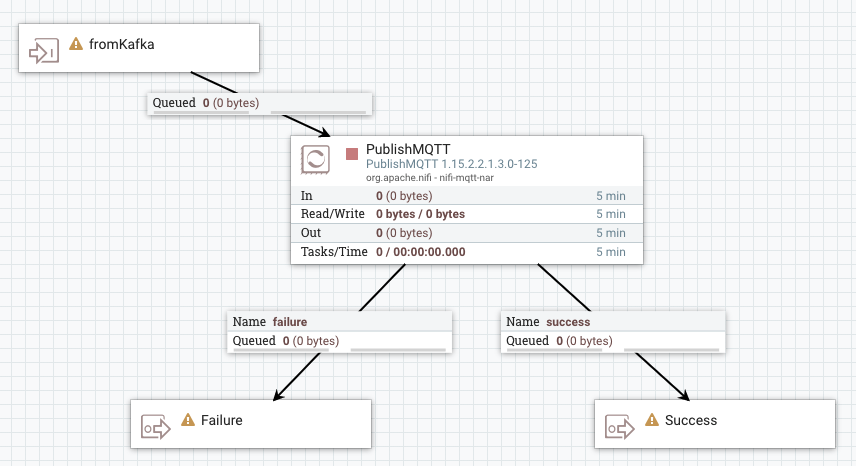

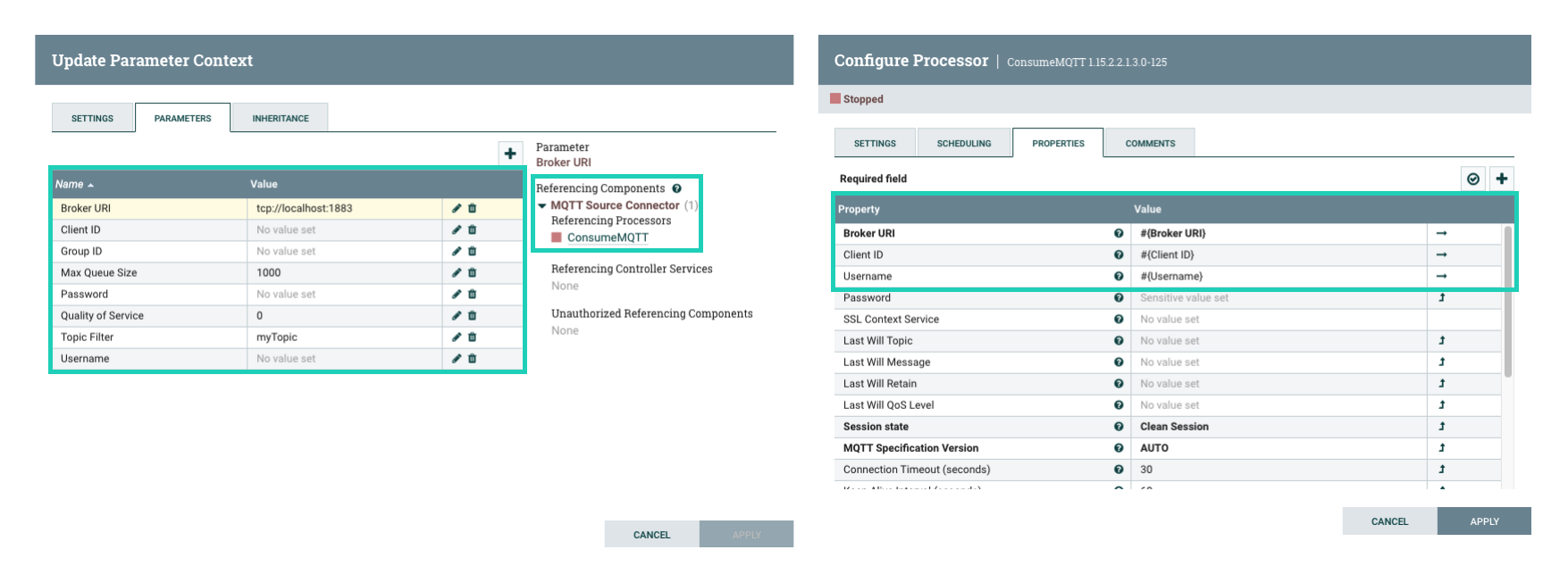

The general steps for building a dataflow are identical for both source and sink connector flows. For ease of understanding a dataflow example for a simple MQTT Source and MQTT Sink connector is provided without going into details (what processors to use, what parameters and properties to set, what relationships to define and so on).

- Ensure that you reviewed Dataflow development best practices for Stateless NiFi.

- You have access to a running instance of NiFi. The NiFi instance does not need to run on a cluster. A standalone instance running on any machine, like your own computer, is sufficient.

- Ensure that the version of your NiFi instance matches the version of the Stateless NiFi

plugin used by Kafka Connect on your cluster.

You can look up the Stateless NiFi plugin version either by using the Streams Messaging Manager UI, or by logging into a Kafka Connect host and checking the Connect plugin directory. In addition, ensure that you note down the plugin version. You will need to manually edit the flow definition JSON and replace the NiFi version with the plugin version.

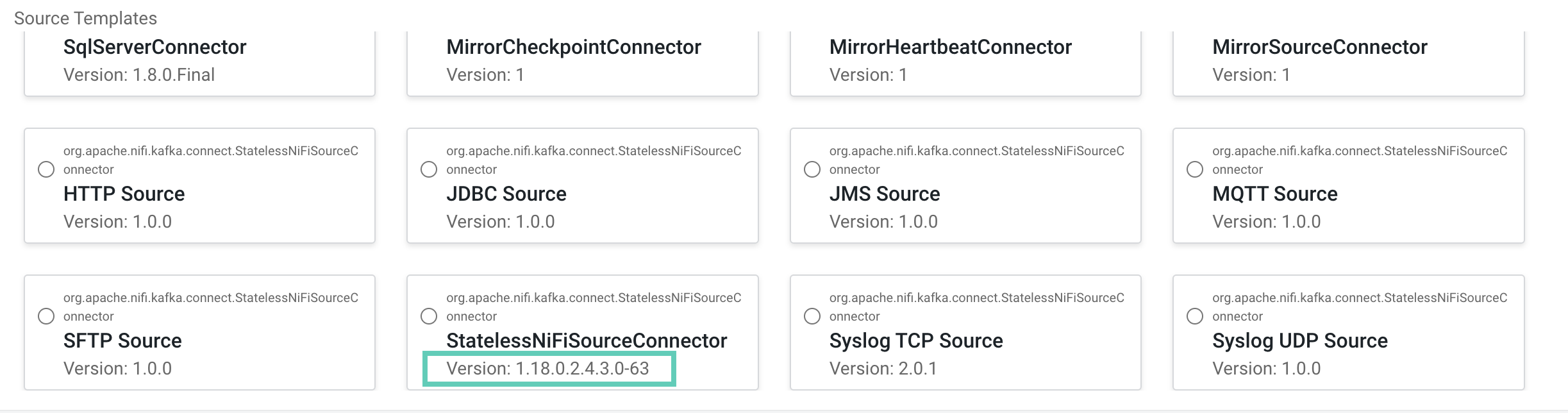

- Access the Streams Messaging Manager UI, and click

Connect in the navigation sidebar.

- Click the

New Connector option.

- Locate the StatelessNiFiSourceConnector or

StatelessNiFiSinkConnector cards. The version is located

on the card.

The version is made up of multiple digits. The first three represent the NiFi version. For example, if the version on the card is

1.18.0.2.4.3.0-63, then you must NiFi1.18.0to build your flow.

- Using SSH, log in to one of your Kafka Connect hosts.

- Navigate to the directory where your Kafka Connect plugins are located.

The default directory is /var/lib/kafka. If you are using a custom plugin directory, go to and search for the Plugin Path property. This property specifies the directory where Kafka Connect plugins are stored.

- List the contents of the directory and look for a

nifi-kafka-connector-[***VERSION***]

entry.

The version is made up of multiple digits. The first three represent the NiFi version. For example, if the version in the name of the entry is

1.18.0.2.4.3.0-63, then you must use NiFi1.18.0to build your flow.

- Access the Streams Messaging Manager UI, and click