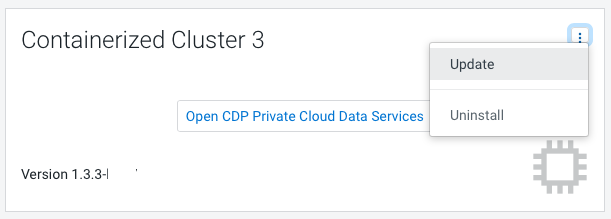

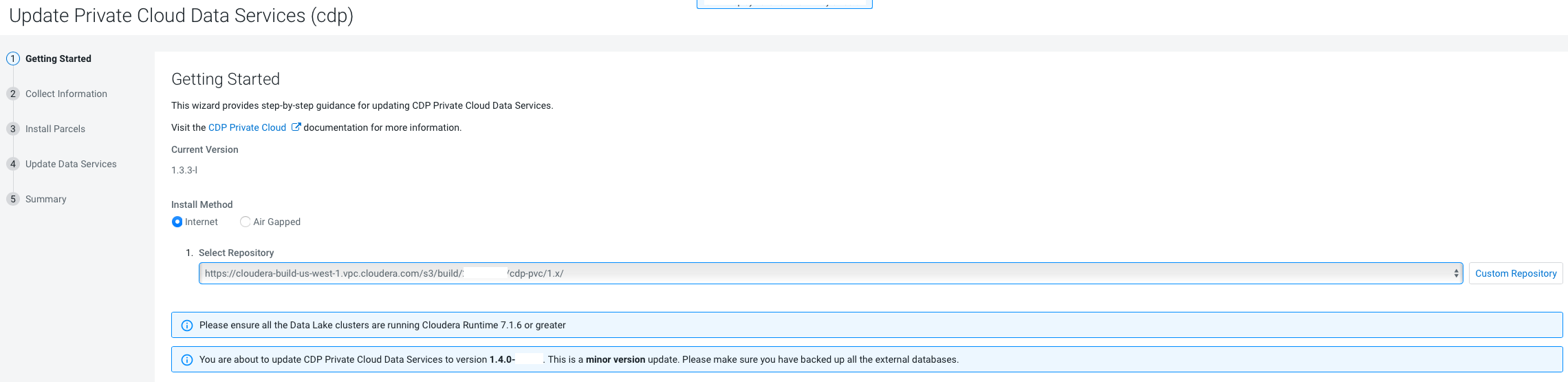

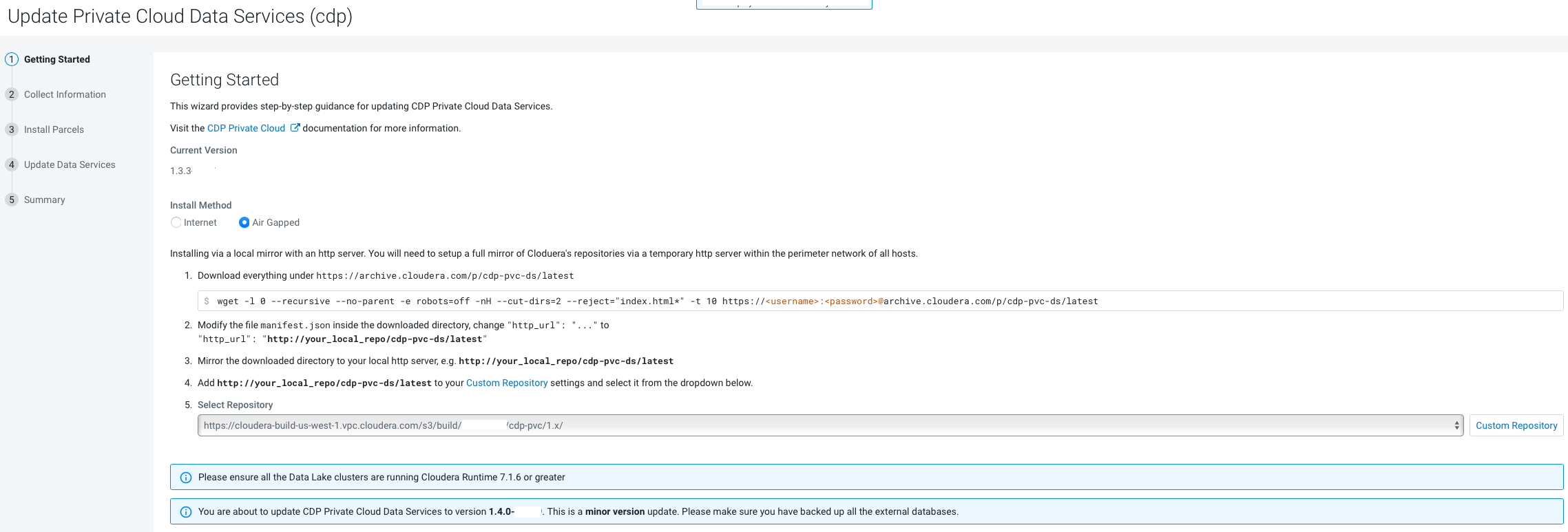

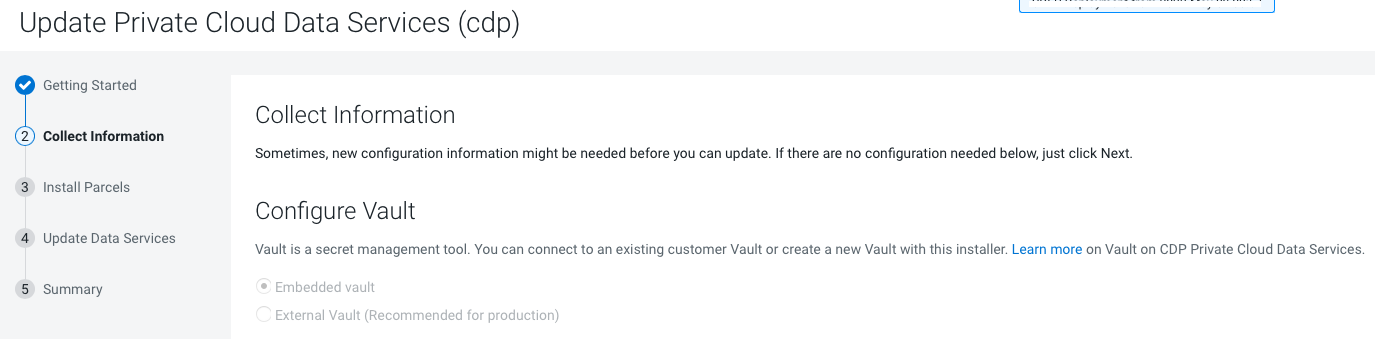

Update from 1.3.3 to 1.4.0/1.4.0-H1

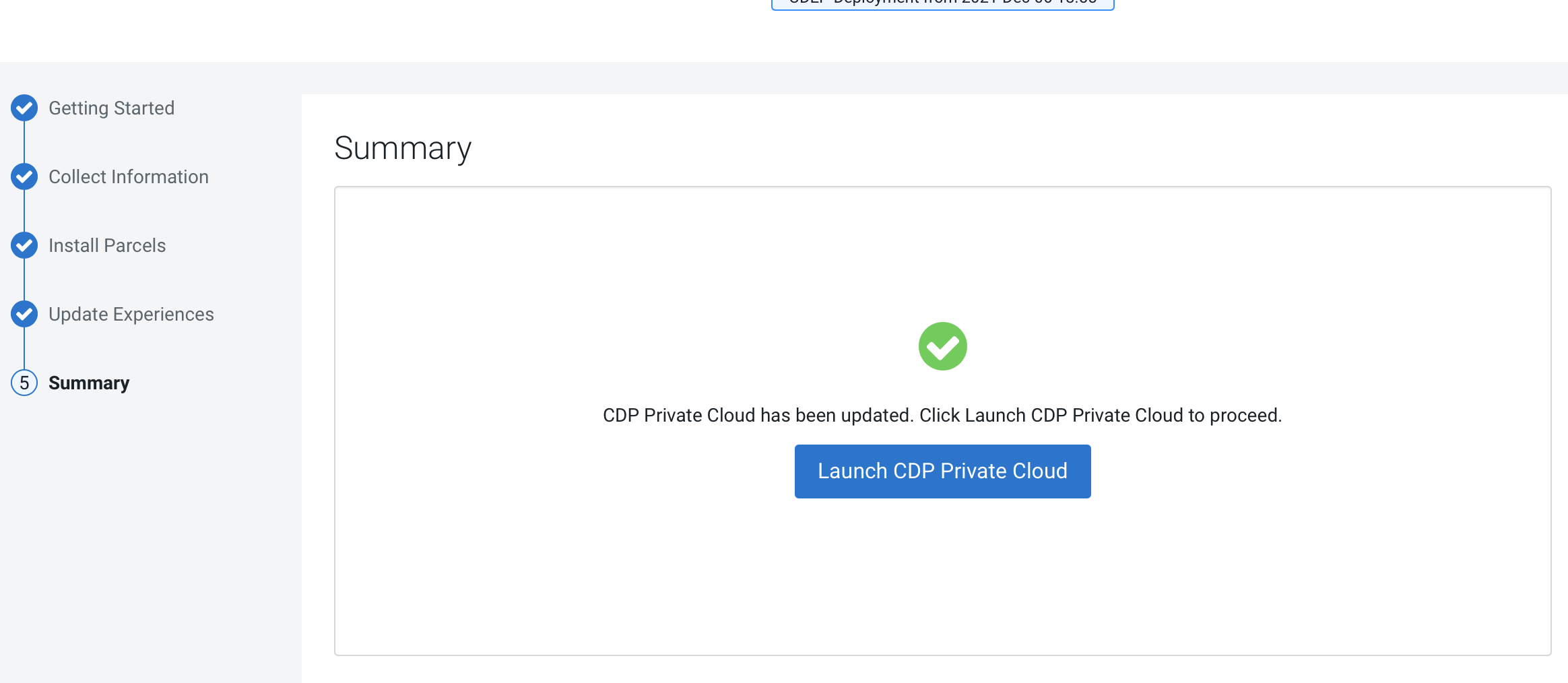

You can update your existing CDP Private Cloud Data Services 1.3.3 to 1.4.0 or 1.4.0-H1 without requiring an uninstall.

- If you see a Longhorn Health Test message about a degraded Longhorn volume, wait for the cluster repair to complete.

- After the upgrade, the version of YuniKorn may not match the version that should be used with Private Cloud version 1.3.4. The YuniKorn version should be 0.10.4-b25. If this version is not deployed, do the following to correct it:

- Log in to any node with access to the ECS cluster using ssh. The user must have the correct administration privileges to execute these commands.

- Run the following command to find the YuniKorn scheduler pod:

`kubectl get pods -n yunikorn | grep yunikorn-scheduler`

The first value on the line is the scheduler pod ID. Copy that text and use it in the following command to describe the pod:

`kubectl describe pod yunikorn-scheduler-<ID> -n yunikorn | grep "Image:"`

A completed upgrade for 1.3.4 shows the image version:

`docker-private.infra.cloudera.com/cloudera/cloudera-scheduler:0.10.4-b25`

The correct version is 0.10.4-b25. If it still shows an older version, such as 0.10.3-b10, the upgrade has failed. In case of a failed upgrade, you must manually upgrade to the correct version. Continue with the remaining steps.

- Scale the YuniKorn deployment down to 0:

`kubectl scale deployment yunikorn-scheduler -n yunikorn --replicas=0`

- Wait until all pods are terminated. You can check this by listing the pods in the yunikorn namespace:

`kubectl get pods -n yunikorn`

- Edit the deployment to update the version:

`kubectl edit deployment yunikorn-scheduler -n yunikorn`

- Replace all references to the old version with the new version. There should be three references in the deployment: the `ADMISSION_CONTROLLER_IMAGE_TAG` value and two `image:` lines. For example, replace 0.10.3-b10 with 0.10.4-b25.

- Save the changes.

- Scale the deployment back up:

`kubectl scale deployment yunikorn-scheduler -n yunikorn --replicas=1`
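The version check and manual correction above can be scripted. The following Bash sketch assumes that `kubectl` is configured for the ECS cluster, that the scheduler is the `yunikorn-scheduler` deployment in the `yunikorn` namespace (as in the commands above), and that the old tag is `0.10.3-b10`; all function names are hypothetical. Instead of the interactive `kubectl edit`, it pipes the deployment YAML through `sed` and back into `kubectl replace`, which replaces every occurrence of the old tag in the same way.

```bash
#!/usr/bin/env bash
# Sketch only: check the YuniKorn scheduler image tag and, if needed,
# replace the old tag with the expected one. Function names are hypothetical.

NS="yunikorn"
DEPLOY="yunikorn-scheduler"
EXPECTED_TAG="0.10.4-b25"
OLD_TAG="0.10.3-b10"   # example old tag from the text; use the tag you actually see

# Read `kubectl describe pod` output on stdin and print the tag
# (the text after the last ':') of every "Image:" line.
image_tags() {
  grep 'Image:' | awk '{print $2}' | sed 's/.*://'
}

# Return 0 only if at least one tag is read on stdin and all tags equal $1.
all_tags_match() {
  local expected="$1" tag found=0
  while read -r tag; do
    [ -z "$tag" ] && continue
    found=1
    [ "$tag" = "$expected" ] || return 1
  done
  [ "$found" -eq 1 ]
}

# Replace every literal occurrence of $1 with $2 in stdin.
# Dots are escaped so that sed does not treat them as wildcards.
replace_tag() {
  local old_re
  old_re=$(printf '%s' "$1" | sed 's/[.]/\\./g')
  sed "s/${old_re}/$2/g"
}

# Check the running scheduler pod; if the tag is wrong, scale down,
# rewrite the deployment, and scale back up.
fix_yunikorn_version() {
  local pod
  pod=$(kubectl get pods -n "$NS" --no-headers | awk '/^yunikorn-scheduler/ {print $1; exit}')
  if [ -n "$pod" ] && kubectl describe pod "$pod" -n "$NS" | image_tags | all_tags_match "$EXPECTED_TAG"; then
    echo "YuniKorn already runs ${EXPECTED_TAG}; nothing to do."
    return 0
  fi

  kubectl scale deployment "$DEPLOY" -n "$NS" --replicas=0
  # Wait until no scheduler pods (including Terminating ones) are listed.
  while kubectl get pods -n "$NS" --no-headers 2>/dev/null | grep -q '^yunikorn-scheduler'; do
    sleep 5
  done

  # Non-interactive equivalent of the edit step: replace the old tag everywhere.
  kubectl get deployment "$DEPLOY" -n "$NS" -o yaml \
    | replace_tag "$OLD_TAG" "$EXPECTED_TAG" \
    | kubectl replace -f -

  kubectl scale deployment "$DEPLOY" -n "$NS" --replicas=1
}
```

After sourcing the file in a shell on a node with cluster access, run `fix_yunikorn_version`, then repeat the `kubectl describe pod ... | grep "Image:"` check to confirm the new tag.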

- If the upgrade stalls, do the following:

- Check the status of all pods by running the following command on the ECS server node:

`kubectl get pods --all-namespaces`

- If any pods are stuck in the "Terminating" state, force terminate them with the following command:

`kubectl delete pods <NAME OF THE POD> -n <NAMESPACE> --grace-period=0 --force`

If the upgrade still does not resume, continue with the remaining steps.

- In the Cloudera Manager Admin Console, go to the ECS service and click .

The Longhorn dashboard opens.

- Check the "In Progress" section of the dashboard to see whether any volumes are stuck in the attaching or detaching state. If a volume is in that state, reboot its host.

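Parts of the stalled-upgrade checks above can be scripted. This Bash sketch assumes `kubectl` access from the ECS server node, and `terminating_pods` relies on STATUS being the fourth column of `kubectl get pods --all-namespaces --no-headers`. The Longhorn helper goes beyond this page and is an assumption: it expects Longhorn volumes to be `volumes.longhorn.io` objects in the `longhorn-system` namespace with `.status.state` and `.status.currentNodeID` fields, which may differ in your ECS version; the Longhorn dashboard remains the documented check. All function names are hypothetical, and rebooting a host is left as a manual step.

```bash
#!/usr/bin/env bash
# Sketch only: helpers for a stalled upgrade. Function names are hypothetical.

# Print "<namespace> <pod>" for each row of
# `kubectl get pods --all-namespaces --no-headers` (stdin) whose STATUS is Terminating.
terminating_pods() {
  awk '$4 == "Terminating" { print $1, $2 }'
}

# Force delete every pod that is currently stuck in Terminating.
force_delete_terminating() {
  local ns pod
  kubectl get pods --all-namespaces --no-headers \
    | terminating_pods \
    | while read -r ns pod; do
        echo "Force deleting ${pod} in ${ns}"
        kubectl delete pods "$pod" -n "$ns" --grace-period=0 --force
      done
}

# Keep only "<volume> <state> <node>" lines (stdin) whose state is
# attaching or detaching.
stuck_volumes() {
  awk '$2 == "attaching" || $2 == "detaching"'
}

# ASSUMPTION: Longhorn volumes are volumes.longhorn.io objects in longhorn-system
# with .status.state and .status.currentNodeID; verify against your ECS version.
list_stuck_longhorn_volumes() {
  kubectl get volumes.longhorn.io -n longhorn-system \
    -o jsonpath='{range .items[*]}{.metadata.name} {.status.state} {.status.currentNodeID}{"\n"}{end}' \
    | stuck_volumes
}
```

Review the output of `kubectl get pods --all-namespaces` before calling `force_delete_terminating`, because it force deletes every pod reported as Terminating in every namespace.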

- If the upgrade fails or keeps retrying, do the following:

- Open the ECS Web UI (Kubernetes Dashboard):

- In the Cloudera Manager Admin Console, go to the ECS service.

- Click .

- If you see an error message similar to the following for the alertmanager pod after the upgrade, perform the steps below. If the error is for another pod, skip to the step for other pods below.

`Warning FailedAttachVolume 2s (x5 over 20s) attachdetach-controller AttachVolume.Attach failed for volume "pvc-6b2bc988-cbdf-4b4a-a005-dee7a1b26cf5" : rpc error: code = DeadlineExceeded desc = volume pvc-6b2bc988-cbdf-4b4a-a005-dee7a1b26cf5 failed to attach to node ecs-bcrgq6-3.vpc.myco.com`

- Restart the pod with the error message:

`kubectl delete pod <pod name and number as shown in the error message> -n <namespace>`

The pod will restart.

- If the pod still reports the error, log in to one of the ECS hosts and run the following command to delete the PVC:

`kubectl delete pvc storage-volume-monitoring-prometheus-alertmanager-<number> -n <namespace>`

- Restart the pod with the error message:

`kubectl delete pod <pod name and number as shown in the error message> -n <namespace>`

The pod will restart. There are two instances of alertmanager, cdp-release-prometheus-alertmanager-0 and cdp-release-prometheus-alertmanager-1. Run the following command, using the instance of the pod with the error message, to restart the pod:

`kubectl delete pod cdp-release-prometheus-alertmanager-<number> -n <namespace>`


- If the same error occurs for a pod other than alertmanager that runs in a deployment rather than a statefulset (for example, prometheus or grafana), save the PVC before deleting it, and re-create it after it has terminated:

- Log in to one of the ECS hosts and run the following command to save the PVC:

`kubectl get pvc <pvc name> -n <namespace> -o yaml > mybackup.yaml`


- Run the following command to re-create the PVC:

`kubectl apply -f mybackup.yaml -n <namespace>`

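The alertmanager and PVC steps for a failed upgrade can be wrapped in small functions. This Bash sketch only reuses the pod and PVC names and commands given on this page; the namespace is not stated here, so you must pass it yourself, and all function names are hypothetical. One detail is an addition to the page: the PVC is deleted with `--wait=false`, because Kubernetes keeps a PVC in Terminating until no pod uses it, so a plain `kubectl delete pvc` would block before the pod restart.

```bash
#!/usr/bin/env bash
# Sketch only: wraps the alertmanager and PVC commands from the steps above.
# Function names are hypothetical; the namespace must be supplied by you.

# Names used on this page for alertmanager instance <n> (0 or 1).
alertmanager_pod() { printf 'cdp-release-prometheus-alertmanager-%s\n' "$1"; }
alertmanager_pvc() { printf 'storage-volume-monitoring-prometheus-alertmanager-%s\n' "$1"; }

# Restart alertmanager instance <n> in namespace <ns> by deleting its pod.
restart_alertmanager() {
  local ns="$1" n="$2"
  kubectl delete pod "$(alertmanager_pod "$n")" -n "$ns"
}

# Delete the PVC of alertmanager instance <n>, then restart the pod.
# --wait=false: the PVC stays in Terminating until the pod using it is gone,
# so waiting here would block before the pod restart.
reset_alertmanager_volume() {
  local ns="$1" n="$2"
  kubectl delete pvc "$(alertmanager_pvc "$n")" -n "$ns" --wait=false
  restart_alertmanager "$ns" "$n"
}

# For pods in a deployment: save a PVC definition to a file before deleting it.
backup_pvc() {
  local ns="$1" pvc="$2" file="$3"
  kubectl get pvc "$pvc" -n "$ns" -o yaml > "$file"
}

# Re-create the PVC from the saved file after the old PVC has terminated.
restore_pvc() {
  local ns="$1" file="$2"
  kubectl apply -f "$file" -n "$ns"
}
```

For example, `reset_alertmanager_volume <namespace> 1` corresponds to deleting the PVC and restarting cdp-release-prometheus-alertmanager-1 as described above.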