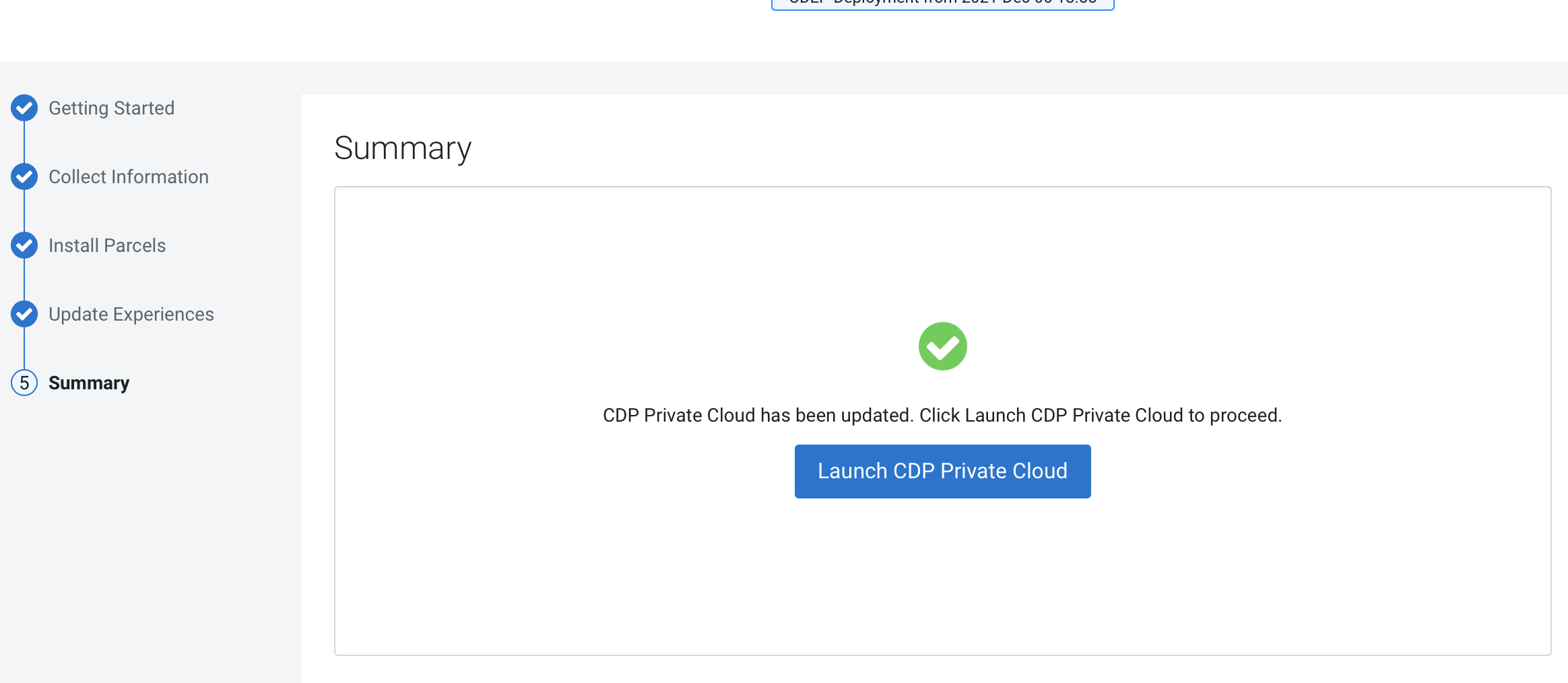

Update from 1.4.0-H1 or 1.4.1 to 1.5.0 (ECS)

You can update your existing CDP Private Cloud Data Services 1.4.0-H1 or 1.4.1 to 1.5.0 without performing an uninstall.

- Run the following commands on the ECS server hosts:

      TOLERATION='{"spec": { "template": {"spec": { "tolerations": [{ "effect": "NoSchedule","key": "node-role.kubernetes.io/control-plane","operator": "Exists" }]}}}}'
      kubectl patch deployment/yunikorn-admission-controller -n yunikorn -p "$TOLERATION"
      kubectl patch deployment/yunikorn-scheduler -n yunikorn -p "$TOLERATION"

- If you upgrade the Embedded Container Service (ECS) version while the CDE service is enabled, the Jobs page fails to launch in the old CDE virtual cluster. Before you upgrade, back up the CDE jobs in the CDE virtual cluster, and then delete the CDE service and the CDE virtual cluster. Restore them after the upgrade. For more information about backing up and restoring CDE jobs, see Backing up and restoring CDE jobs.
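The toleration patch from the first step above can be wrapped in a small function so that both YuniKorn deployments receive the same patch. This is only a sketch: the function name `patch_yunikorn_tolerations` and the `KUBECTL` override variable are hypothetical helpers introduced here for illustration and are not part of the product.

```shell
# Sketch only: apply the control-plane toleration to both YuniKorn deployments.
# KUBECTL is a hypothetical override (an assumption, not from the product docs)
# that allows a dry run, for example KUBECTL=echo.
KUBECTL="${KUBECTL:-kubectl}"

# Same patch document as in the documented commands.
TOLERATION='{"spec": { "template": {"spec": { "tolerations": [{ "effect": "NoSchedule","key": "node-role.kubernetes.io/control-plane","operator": "Exists" }]}}}}'

patch_yunikorn_tolerations() {
  # Patch each deployment in turn and stop at the first failure.
  for deployment in yunikorn-admission-controller yunikorn-scheduler; do
    "$KUBECTL" patch "deployment/${deployment}" -n yunikorn -p "$TOLERATION" || return 1
  done
}
```

Setting `KUBECTL=echo` before calling `patch_yunikorn_tolerations` prints the two commands instead of running them, which makes it possible to review them first.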

- If the upgrade stalls, do the following:

  - Check the status of all pods by running the following command on the ECS server node:

        kubectl get pods --all-namespaces

  - If any pods are stuck in the “Terminating” state, force terminate each pod by using the following command:

        kubectl delete pods <NAME OF THE POD> -n <NAMESPACE> --grace-period=0 --force

    If the upgrade still does not resume, continue with the remaining steps.

  - In the Cloudera Manager Admin Console, go to the ECS service and click .

    The Longhorn dashboard opens.

  - Check the "In Progress" section of the dashboard to see whether any volumes are stuck in the attaching/detaching state. If a volume is in that state, reboot its host.
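When many pods are involved, picking the stuck ones out of the pod listing by eye is error-prone. The following sketch filters the listing for pods in the Terminating state and prints the matching force-delete commands for review. The function names `list_terminating_pods` and `print_force_delete_commands` are hypothetical helpers, and the sketch assumes the usual column order of `kubectl get pods --all-namespaces --no-headers` (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE).

```shell
# Print "<namespace> <pod>" for every pod whose STATUS column is Terminating.
# Expects the output of `kubectl get pods --all-namespaces --no-headers` on stdin.
list_terminating_pods() {
  awk '$4 == "Terminating" { print $1, $2 }'
}

# Turn "<namespace> <pod>" lines into the documented force-delete commands.
# The commands are only printed, not executed, so they can be reviewed first.
print_force_delete_commands() {
  while read -r namespace pod; do
    printf 'kubectl delete pods %s -n %s --grace-period=0 --force\n' "$pod" "$namespace"
  done
}
```

A possible invocation on the ECS server node is `kubectl get pods --all-namespaces --no-headers | list_terminating_pods | print_force_delete_commands`. Printing rather than executing is a deliberate choice here, because a forced delete skips graceful shutdown.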


- You may see the following error message during the Upgrade Cluster > Reapplying all settings > kubectl-patch step:

      kubectl rollout status deployment/rke2-ingress-nginx-controller -n kube-system --timeout=5m
      error: timed out waiting for the condition

  If you see this error, do the following:

  - Check whether all the Kubernetes nodes are ready for scheduling. Run the following command from the ECS Server node:

        kubectl get nodes

    You will see output similar to the following:

        NAME      STATUS                     ROLES                       AGE    VERSION
        <node1>   Ready,SchedulingDisabled   control-plane,etcd,master   103m   v1.21.11+rke2r1
        <node2>   Ready                      <none>                      101m   v1.21.11+rke2r1
        <node3>   Ready                      <none>                      101m   v1.21.11+rke2r1
        <node4>   Ready                      <none>                      101m   v1.21.11+rke2r1

  - Run the following command from the ECS Server node for the node showing a status of SchedulingDisabled:

        kubectl uncordon <node1>

    You will see output similar to the following:

        node/<node1> uncordoned

  - Scale down and scale up the rke2-ingress-nginx-controller pod by running the following command on the ECS Server node:

        kubectl delete pod rke2-ingress-nginx-controller-<pod number> -n kube-system

  - Resume the upgrade.
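The checks above rely on reading the node and pod listings. As a rough aid, the following sketch extracts the cordoned node names and the ingress controller pod names from those listings. Both function names are hypothetical helpers introduced for illustration, and the sketch assumes the column layout shown in the sample `kubectl get nodes` output (NAME first, STATUS second).

```shell
# Print the names of nodes whose STATUS column includes SchedulingDisabled.
# Expects the output of `kubectl get nodes --no-headers` on stdin.
list_cordoned_nodes() {
  awk '$2 ~ /SchedulingDisabled/ { print $1 }'
}

# Print the names of the rke2-ingress-nginx-controller pods.
# Expects the output of `kubectl get pods -n kube-system --no-headers` on stdin.
list_ingress_controller_pods() {
  awk '$1 ~ /^rke2-ingress-nginx-controller-/ { print $1 }'
}
```

For example, `kubectl get nodes --no-headers | list_cordoned_nodes` prints the nodes that still need `kubectl uncordon`, and `kubectl get pods -n kube-system --no-headers | list_ingress_controller_pods` prints the full pod names to pass to `kubectl delete pod`.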


- If you specified a custom certificate, select the ECS cluster in Cloudera Manager, then select Actions > Update Ingress Controller. This command copies the cert.pem and key.pem files from the Cloudera Manager server host to the ECS Management Console host.
