Deploying the NiFi flows

Learn about the steps to deploy flows (custom flow definitions or ReadyFlows) so that you can start running your NiFi flows with Cloudera DataFlow.

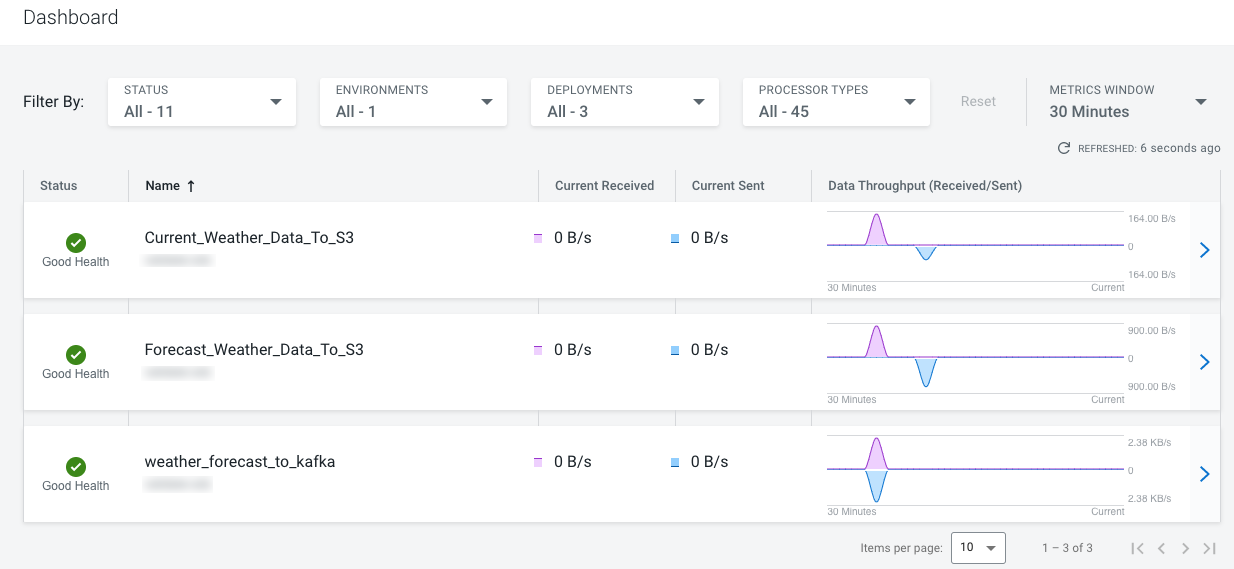

Now that you have imported the custom NiFi flow definition and added the ReadyFlow to the catalog, you can deploy your Business Intelligence at Scale data flows. You must deploy the following three flows:

- weather_forecast_to_kafka: This flow gets both current and forecast weather data and sends it to the corresponding Kafka topics.

- Current_Weather_Data_To_S3: This deployment moves current weather data from the Kafka topic into an S3 bucket.

- Forecast_Weather_Data_To_S3: This deployment moves forecast weather data from the Kafka topic into an S3 bucket.

- Log in to the CDP web interface.

- To create the custom flow deployment "weather_forecast_to_kafka", perform the following steps:

- Go to DataFlow > Catalog and click the custom NiFi flow definition that you imported earlier.

The version information about the flow is displayed.

- Click Deploy →.

- Select your CDP environment from the Target Environment drop-down menu on the New Deployment dialog and click Continue.

- Provide a unique name for your deployment on the Overview page.

For the Business Intelligence at Scale pattern, specify weather_forecast_to_kafka.

- Accept the default settings on the NiFi Configuration page.

- Specify the following on the Parameters page:

| Field name | Description and value |
| --- | --- |
| CDP Workload User | Specify your CDP workload username. |
| CDP Workload User Password | Specify your CDP workload password. |
| Current Weather Topic Name | Specify weather. |
| Kafka Brokers | Provide a comma-separated list of the Kafka broker FQDNs that you collected from the Data Hub cluster earlier, in the following format: [***broker1.fqdn***]:9093,[***broker2.fqdn***]:9093,[***broker3.fqdn***]:9093 |
| Schema Name | Specify WeatherForecast. This must match the schema name that you specified in Schema Registry. |
| Schema Registry URL | Specify [***master-node-fqdn***]:7790/api/v1, using the master node FQDN that you collected earlier from the Data Hub cluster. |
| Topic Name | Specify weather_forecast. This must match the Kafka topics that you created in Streams Messaging Manager (SMM). |
| Weather Forecast Topic Name | Specify weather_forecast. |
| api.key | Specify the API key that you generated on the OpenWeather portal. |
| api.key.current | Specify the API key that you generated on the OpenWeather portal. |

- Optional: In KPIs, identify key performance indicators, the metrics to track those KPIs, and when and how to receive alerts about the KPI metrics tracking.

You can skip this step for this pattern.

- Accept the default settings on the Sizing & Scaling page for the Business Intelligence at Scale pattern.

For production use cases, you can specify the initial size of your flow deployment, whether to enable auto-scaling, and the maximum number of nodes in your cluster.

- Review the configuration summary and click Deploy.

You will be redirected to the Alerts tab in the detail view for the deployment where you can track its progress.
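
The Kafka Brokers and Schema Registry URL values in the Parameters step follow a fixed pattern, so they can be assembled from the host names you collected instead of being typed by hand. The following is a minimal sketch, not part of Cloudera DataFlow: the function names and the `example.com` host names are hypothetical, and the ports (9093 and 7790) are the ones shown in the parameter table.

```python
def kafka_brokers_value(broker_fqdns, port=9093):
    """Join broker host names into a comma-separated host:port list."""
    cleaned = [fqdn.strip() for fqdn in broker_fqdns if fqdn.strip()]
    if not cleaned:
        raise ValueError("at least one broker FQDN is required")
    return ",".join(f"{fqdn}:{port}" for fqdn in cleaned)


def schema_registry_url_value(master_fqdn, port=7790):
    """Build the host:port/api/v1 value for the Schema Registry URL field."""
    return f"{master_fqdn.strip()}:{port}/api/v1"


# Hypothetical host names; replace them with the FQDNs from your Data Hub cluster.
brokers = ["broker1.example.com", "broker2.example.com", "broker3.example.com"]
print(kafka_brokers_value(brokers))
print(schema_registry_url_value("master0.example.com"))
```

You can paste the printed strings into the Kafka Brokers and Schema Registry URL fields.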

- To create the "Current_Weather_Data_To_S3" deployment:

- Go to DataFlow > Catalog and click the "Kafka to S3 Avro" ReadyFlow definition that you added earlier.

The version information about the flow is displayed.

- Click Deploy →.

- Select your CDP environment from the Target Environment drop-down menu on the New Deployment dialog and click Continue.

- Provide a unique name for your deployment on the Overview page.

For the Business Intelligence at Scale pattern, specify Current_Weather_Data_To_S3.

- Accept the default settings on the NiFi Configuration page.

- Specify the following on the Parameters page:

| Field name | Description and value |
| --- | --- |
| CDP Workload User | Specify your CDP workload username. |
| CDP Workload User Password | Specify your CDP workload password. |
| CSV Delimiter | Specify , (a comma). |
| Data Input Format | Specify JSON. Enter this value in all caps. |
| Kafka Broker Endpoint | Provide a comma-separated list of the Kafka broker FQDNs that you collected from the Data Hub cluster earlier, in the following format: [***broker1.fqdn***]:9093,[***broker2.fqdn***]:9093,[***broker3.fqdn***]:9093 |
| Kafka Consumer Group ID | Specify WeatherConsumer. |
| Kafka Source Topic | Specify weather. |
| S3 Path | Specify the path to your S3 bucket up to the root. |
| S3 Bucket | Specify the name of your S3 bucket. |
| Schema Name | Specify WeatherCurrent. This must match the schema name that you specified in Schema Registry. |
| Schema Registry Hostname | Specify the [***master-node-fqdn***] that you collected earlier from the Data Hub cluster. |

- Optional: In KPIs, identify key performance indicators, the metrics to track those KPIs, and when and how to receive alerts about the KPI metrics tracking.

You can skip this step for this pattern.

- Accept the default settings on the Sizing & Scaling page for the Business Intelligence at Scale pattern.

For production use cases, you can specify the initial size of your flow deployment, whether to enable auto-scaling, and the maximum number of nodes in your cluster.

- Review the configuration summary and click Deploy.

You will be redirected to the Alerts tab in the detail view for the deployment where you can track its progress.
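
Before you type the Parameters values into the deployment wizard, it can help to check them offline. The following is a minimal sketch under stated assumptions: `check_parameters` is a hypothetical helper rather than a Cloudera DataFlow API, it treats every field in the parameter table as required, and the user, password, host, bucket, and path values are placeholders. It catches two mistakes: a Data Input Format that is not in all caps, and broker entries that are not in host:port form.

```python
import re

# Field names as they appear in the Parameters table for this deployment.
REQUIRED_FIELDS = [
    "CDP Workload User",
    "CDP Workload User Password",
    "CSV Delimiter",
    "Data Input Format",
    "Kafka Broker Endpoint",
    "Kafka Consumer Group ID",
    "Kafka Source Topic",
    "S3 Path",
    "S3 Bucket",
    "Schema Name",
    "Schema Registry Hostname",
]

# One broker entry: a host name without spaces, commas, or colons, then :port.
BROKER_ENTRY = re.compile(r"^[^\s,:]+:\d+$")


def check_parameters(params):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not params.get(field):
            problems.append(f"{field}: missing value")
    data_format = params.get("Data Input Format", "")
    if data_format and data_format != data_format.upper():
        problems.append("Data Input Format: enter the value in all caps")
    endpoint = params.get("Kafka Broker Endpoint", "")
    if endpoint:
        for entry in endpoint.split(","):
            if not BROKER_ENTRY.match(entry.strip()):
                problems.append(f"Kafka Broker Endpoint: {entry!r} is not host:port")
    return problems


# Placeholder values (hypothetical); the lowercase "json" is a deliberate mistake.
current_weather = {
    "CDP Workload User": "my_workload_user",
    "CDP Workload User Password": "example-password",
    "CSV Delimiter": ",",
    "Data Input Format": "json",
    "Kafka Broker Endpoint": "broker1.example.com:9093,broker2.example.com:9093",
    "Kafka Consumer Group ID": "WeatherConsumer",
    "Kafka Source Topic": "weather",
    "S3 Path": "weather/current",
    "S3 Bucket": "my-example-bucket",
    "Schema Name": "WeatherCurrent",
    "Schema Registry Hostname": "master0.example.com",
}

print(check_parameters(current_weather))
print(check_parameters({**current_weather, "Data Input Format": "JSON"}))
```

The first call reports the lowercase format value. The second call, with the value corrected to JSON, reports no problems.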

- To create the "Forecast_Weather_Data_To_S3" deployment:

- Go to DataFlow > Catalog and click the "Kafka to S3 Avro" ReadyFlow definition that you added earlier.

The version information about the flow is displayed.

- Click Deploy →.

- Select your CDP environment from the Target Environment drop-down menu on the New Deployment dialog and click Continue.

- Provide a unique name for your deployment on the Overview page.

For the Business Intelligence at Scale pattern, specify Forecast_Weather_Data_To_S3.

- Accept the default settings on the NiFi Configuration page.

- Specify the following on the Parameters page:

| Field name | Description and value |
| --- | --- |
| CDP Workload User | Specify your CDP workload username. |
| CDP Workload User Password | Specify your CDP workload password. |
| CSV Delimiter | Specify , (a comma). |
| Data Input Format | Specify JSON. Enter this value in all caps. |
| Kafka Broker Endpoint | Provide a comma-separated list of the Kafka broker FQDNs that you collected from the Data Hub cluster earlier, in the following format: [***broker1.fqdn***]:9093,[***broker2.fqdn***]:9093,[***broker3.fqdn***]:9093 |
| Kafka Consumer Group ID | Specify ForecastConsumer. |
| Kafka Source Topic | Specify weather_forecast. |
| S3 Path | Specify the path to your S3 bucket up to the root. |
| S3 Bucket | Specify the name of your S3 bucket. |
| Schema Name | Specify WeatherForecast. This must match the schema name that you specified in Schema Registry. |
| Schema Registry Hostname | Specify the [***master-node-fqdn***] that you collected earlier from the Data Hub cluster. |

- Optional: In KPIs, identify key performance indicators, the metrics to track those KPIs, and when and how to receive alerts about the KPI metrics tracking.

You can skip this step for this pattern.

- Accept the default settings on the Sizing & Scaling page for the Business Intelligence at Scale pattern.

For production use cases, you can specify the initial size of your flow deployment, whether to enable auto-scaling, and the maximum number of nodes in your cluster.

- Review the configuration summary and click Deploy.

You will be redirected to the Alerts tab in the detail view for the deployment where you can track its progress.
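
The two Kafka to S3 Avro deployments use almost the same parameters, so it is easy to carry a value over from one to the other by mistake. The following minimal sketch lists the fields whose values differ between the two parameter tables. The `differing_fields` helper is hypothetical, and the dictionaries hold only the values that the tables spell out literally; the environment-specific fields (user, password, brokers, S3 location, Schema Registry host) are left out.

```python
# Literal values from the two Parameters tables in this procedure.
current_weather = {
    "CSV Delimiter": ",",
    "Data Input Format": "JSON",
    "Kafka Consumer Group ID": "WeatherConsumer",
    "Kafka Source Topic": "weather",
    "Schema Name": "WeatherCurrent",
}

forecast_weather = {
    "CSV Delimiter": ",",
    "Data Input Format": "JSON",
    "Kafka Consumer Group ID": "ForecastConsumer",
    "Kafka Source Topic": "weather_forecast",
    "Schema Name": "WeatherForecast",
}


def differing_fields(first, second):
    """Map each field whose value differs to its (first, second) pair of values."""
    return {
        field: (first.get(field), second.get(field))
        for field in sorted(set(first) | set(second))
        if first.get(field) != second.get(field)
    }


for field, (current, forecast) in differing_fields(current_weather, forecast_weather).items():
    print(f"{field}: {current} / {forecast}")
```

Only the consumer group ID, the source topic, and the schema name differ between the two deployments.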
