Concept of tables in SSB

The core abstraction for Streaming SQL is a Table, which represents both the inputs and the outputs of queries. SQL Stream Builder (SSB) tables extend the tables used in Flink SQL to give users more flexibility. When creating tables in SSB, you can add them manually, import them automatically, or create them using Flink SQL, depending on the connector you want to use.

A Table is a logical definition of a data source that includes the location and connection parameters, a schema, and any required context-specific configuration parameters. In most cases, tables can be used for both reading and writing data. You can create and manage tables manually, or have them loaded automatically from one of the catalogs specified in the Data Providers section.

In SELECT queries, the FROM clause defines the table sources, which can be multiple tables at the same time in the case of JOIN or more complex queries.
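As a minimal sketch of a query that reads from more than one table source, the following joins two tables in the FROM clause. The `airplanes` table and its `icao`, `lat`, and `lon` columns come from the example in this section; the `airlines` table and its `airline_name` column are hypothetical and only serve as an illustration.

```sql
-- Two table sources in one query.
-- airlines and airline_name are assumed names, not part of the original example.
SELECT a.icao, a.lat, a.lon, l.airline_name
FROM airplanes AS a          -- first table source
JOIN airlines AS l           -- second table source (hypothetical)
  ON a.icao = l.icao;
```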

When you execute a query, the results go to the table you specify in the INSERT INTO statement in the SQL window. This allows you to create aggregations, filters, joins, and so on, and then route the results to another table. The schema of the results is the schema defined by the query that you ran.

    INSERT INTO air_traffic   -- the name of the table sink
    SELECT lat, lon
    FROM airplanes            -- the name of the table source
    WHERE icao <> 0;

Table types in SSB

- Kafka Tables

  - Apache Kafka tables represent data contained in a single Kafka topic in JSON, Avro, or CSV format. You can define them using the Streaming SQL Console wizard or create them from the predefined templates.

- Tables from Catalogs

  - SSB supports Kudu, Hive, and Schema Registry as catalog providers. After you register them using the Streaming SQL Console, their tables are automatically imported to SSB and can be used in the SQL window for computations.

- Flink Tables

  - Flink SQL tables are tables created with the standard CREATE TABLE syntax. This gives you full flexibility in defining new or derived tables and views. You can either add the statement directly to the SQL window or use one of the predefined DDL templates.

- Webhook Tables

  - Webhooks can only be used as tables to write results to. When you use a Webhook table, the result of your SQL query is sent to the specified webhook.
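To illustrate the Flink table type, here is a minimal sketch of a CREATE TABLE statement for a Kafka-backed table. It uses the option names of the open-source Flink Kafka connector. The column types, topic name, broker address, and consumer group are assumptions made for this example, and the predefined SSB templates may use different connector values or options.

```sql
-- Sketch of a Flink DDL table over a Kafka topic with JSON records.
-- All names and values below are placeholders, not SSB defaults.
CREATE TABLE airplanes (
  icao INT,        -- assumed numeric, since the earlier example compares it to 0
  lat  DOUBLE,
  lon  DOUBLE
) WITH (
  'connector' = 'kafka',
  'topic' = 'airplanes',                              -- hypothetical topic
  'properties.bootstrap.servers' = 'broker-1:9092',   -- hypothetical broker
  'properties.group.id' = 'ssb-example',              -- hypothetical consumer group
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'json'
);
```

Once a statement like this has been run, the table can be referenced by name in the FROM clause or as the target of INSERT INTO, like any other table.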

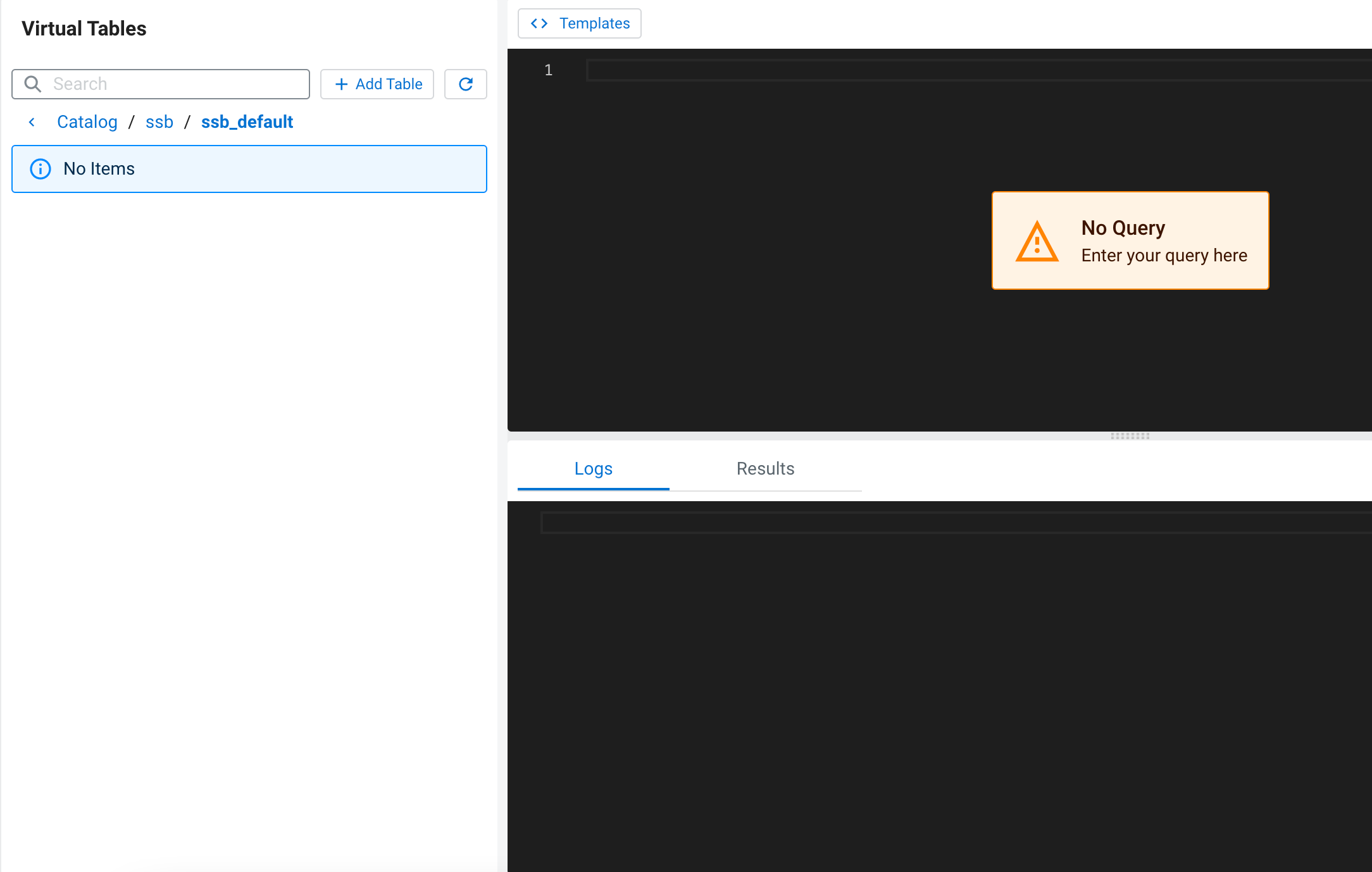

Table management on Console page

After creating your tables for the SQL jobs, you can review and manage them on the Console page next to the SQL Editor. The created tables are organized based on the teams a user is assigned to.

The + Add Table button can be used to create Apache Kafka and Webhook tables using an add table wizard. For any other table type supported by SQL Stream Builder (SSB), you can either manually add the CREATE TABLE statement to the SQL Editor or choose one of the predefined templates using the Template selector. After you select the template of a connector, the CREATE TABLE statement is loaded into the SQL Editor.

The created tables are listed in the left panel, regardless of whether they were added using the wizard or created with Flink DDL. The tables that appear depend on which team is selected as active for the user. You can use the search bar to find a specific table or to shorten the list by searching for a connector type.

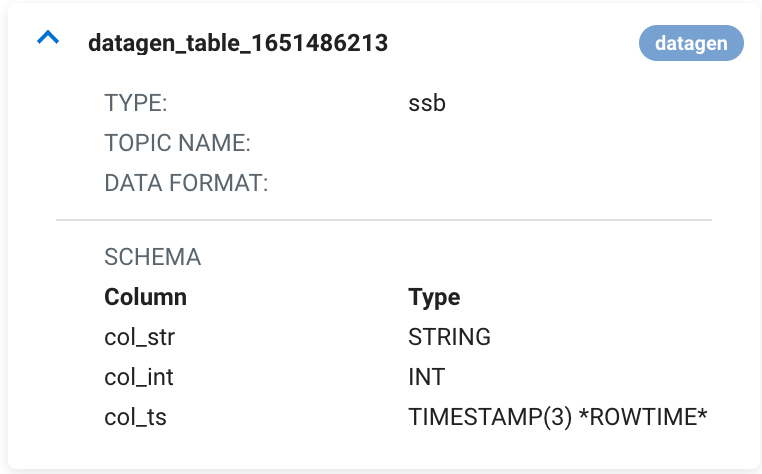

The details of a table can be seen by clicking on the arrow next to the name of the table.

When you hover over a table, whether it is expanded or not, the following buttons appear, which you can use to manage your tables:

- Show DDL - displays the DDL format of the table

  - You can use the copy button in the DDL window if you need to reuse the CREATE TABLE statement. You can also paste the DDL into the SQL Editor to edit the CREATE TABLE statement and create a new table with changed attributes.

- Paste in Editor - adds the name of the table to the SQL Editor

- Delete Table - removes the table from SQL Stream Builder