AWS Lambda triggers

Cloudera Data Flow Functions supports all AWS Lambda triggers. This section reviews the most commonly used triggers and shows examples of the FlowFile content that a triggering event generates. You can add triggers on the tab.

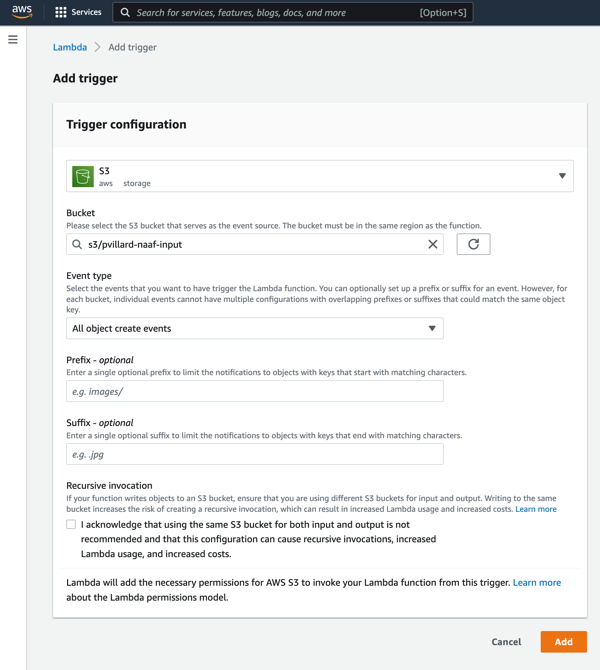

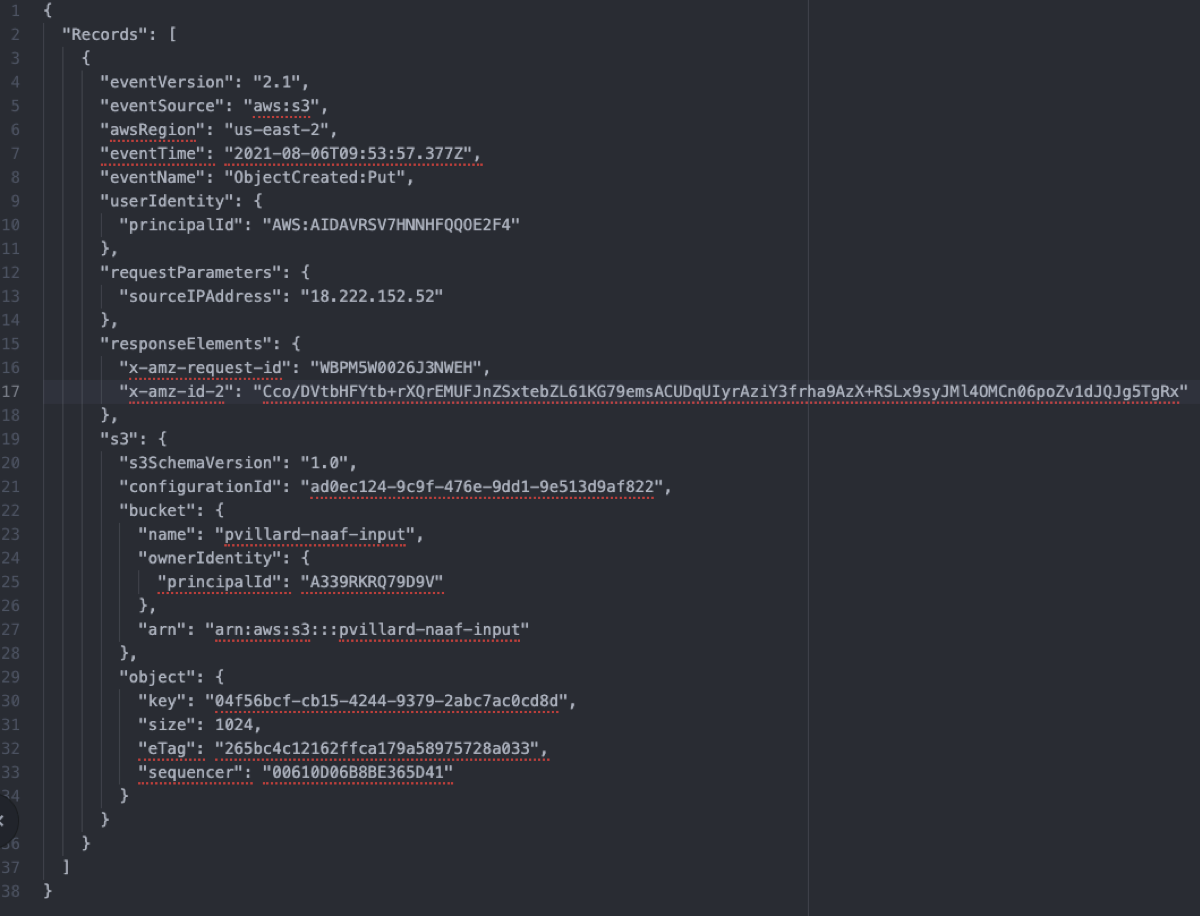

S3 Trigger

This trigger can be used, for example, to start your function whenever a file lands in AWS S3:

The FlowFile’s content would look like this:

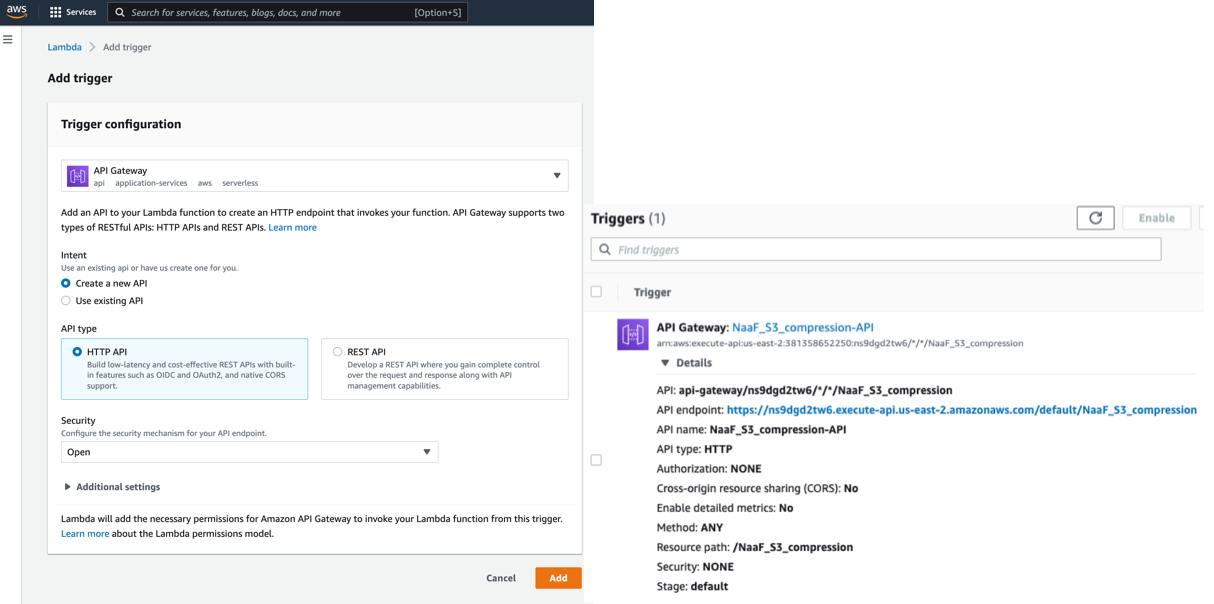

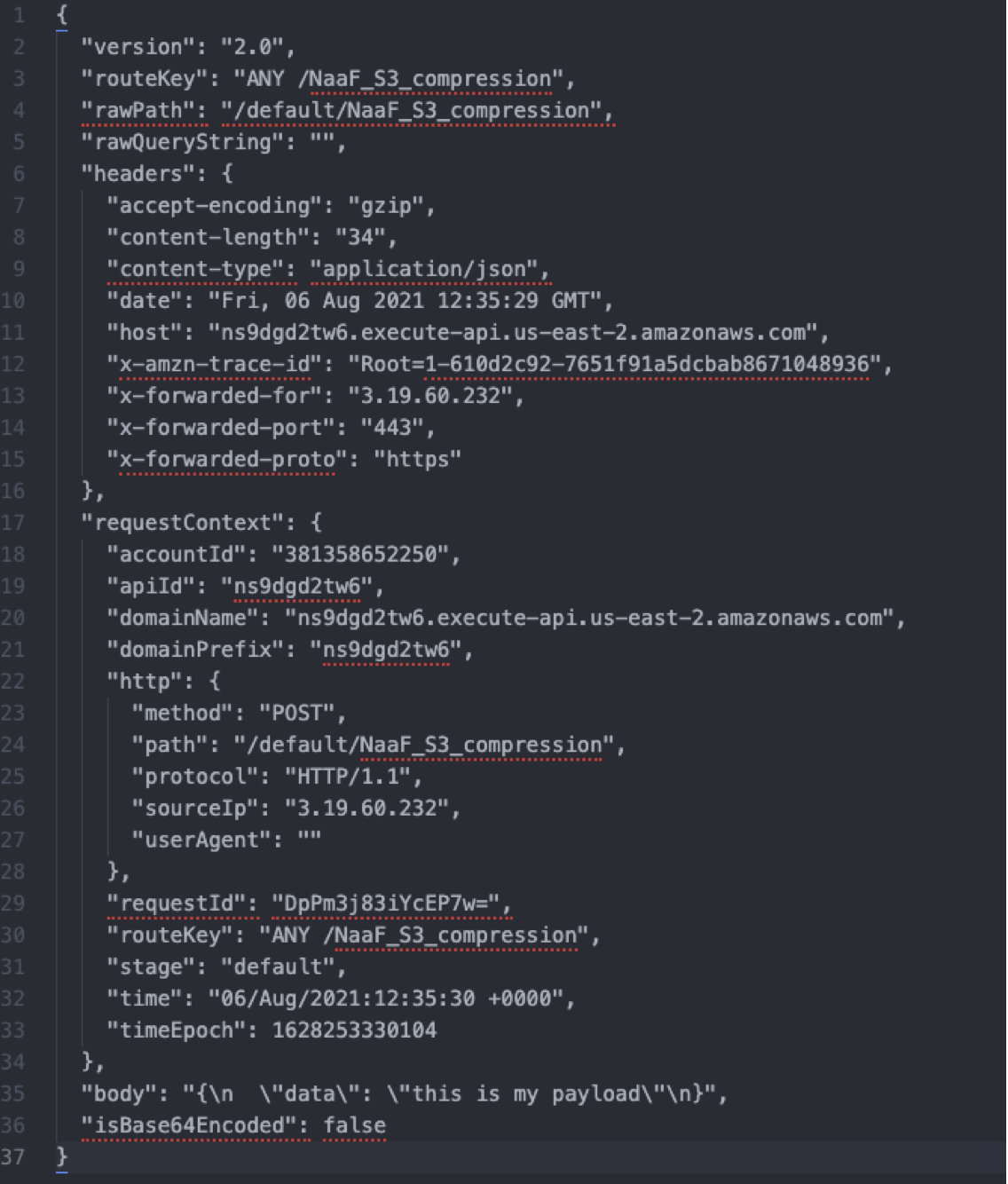
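
To make the payload easier to reason about, here is a minimal Python sketch that reads an S3 event and lists the objects it refers to. It is not part of the flow definition. It assumes the FlowFile content is the standard S3 event notification JSON documented by AWS, and the bucket name, key, and function name are hypothetical placeholders.

```python
import json
from urllib.parse import unquote_plus

# Hypothetical, trimmed-down S3 event notification (placeholder values).
SAMPLE_EVENT = """
{
  "Records": [
    {
      "eventSource": "aws:s3",
      "eventName": "ObjectCreated:Put",
      "s3": {
        "bucket": {"name": "example-bucket"},
        "object": {"key": "landing/my+file.csv", "size": 1024}
      }
    }
  ]
}
"""


def list_s3_objects(flowfile_content):
    """Return a (bucket, key) pair for every record in an S3 event."""
    event = json.loads(flowfile_content)
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in S3 notifications ("+" stands for a space).
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append((bucket, key))
    return results


print(list_s3_objects(SAMPLE_EVENT))
```

Note that the event only references the object; the file itself still has to be fetched from S3 by the flow.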

API Gateway Trigger

This trigger exposes an HTTPS endpoint so that clients can trigger the function with HTTP requests. This is particularly useful for building microservices. It can also be used to create an HTTPS gateway for ingesting data into Kafka, or to provide an HTTP gateway for many MiNiFi agents sending data into the cloud. See the next sections for more information about these use cases.

Configuration example:

Example of event generated:

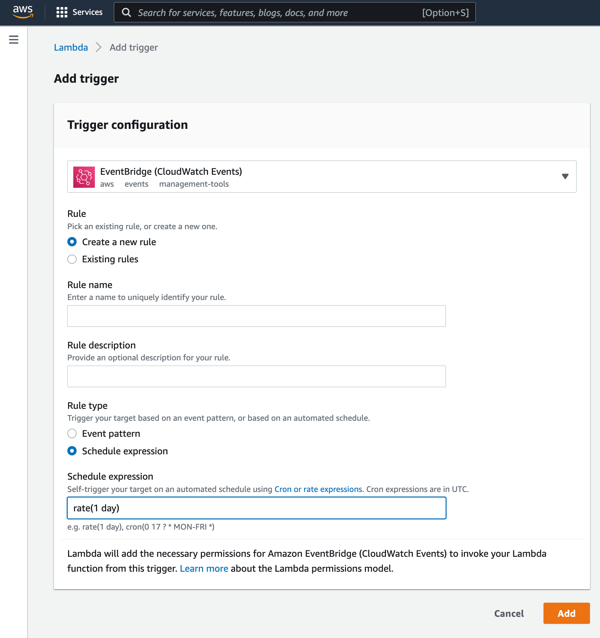
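
As an illustration of how the request payload travels inside the event, the following Python sketch extracts the request body. It assumes the proxy-style event that API Gateway passes to Lambda, where `body` holds the payload and `isBase64Encoded` tells whether it was Base64-encoded. The path, header, and JSON payload are hypothetical.

```python
import base64
import json

# Hypothetical API Gateway proxy event carrying a Base64-encoded JSON body.
SAMPLE_EVENT = json.dumps({
    "httpMethod": "POST",
    "path": "/ingest",
    "headers": {"Content-Type": "application/json"},
    "body": base64.b64encode(b'{"sensor": "a1", "value": 21.5}').decode("ascii"),
    "isBase64Encoded": True,
})


def request_body(flowfile_content):
    """Return the raw request body as bytes, decoding Base64 when flagged."""
    event = json.loads(flowfile_content)
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        return base64.b64decode(body)
    return body.encode("utf-8")


payload = json.loads(request_body(SAMPLE_EVENT))
print(payload["sensor"], payload["value"])
```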

EventBridge (CloudWatch Events) - CRON driven functions

This trigger can be used with rules that include CRON expressions so that your function runs on a schedule. You can use it, for example, to run a particular processing job once a day.

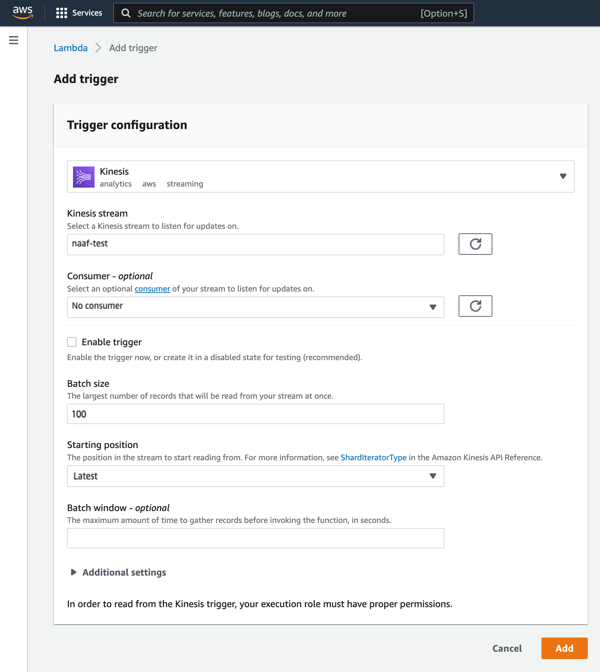

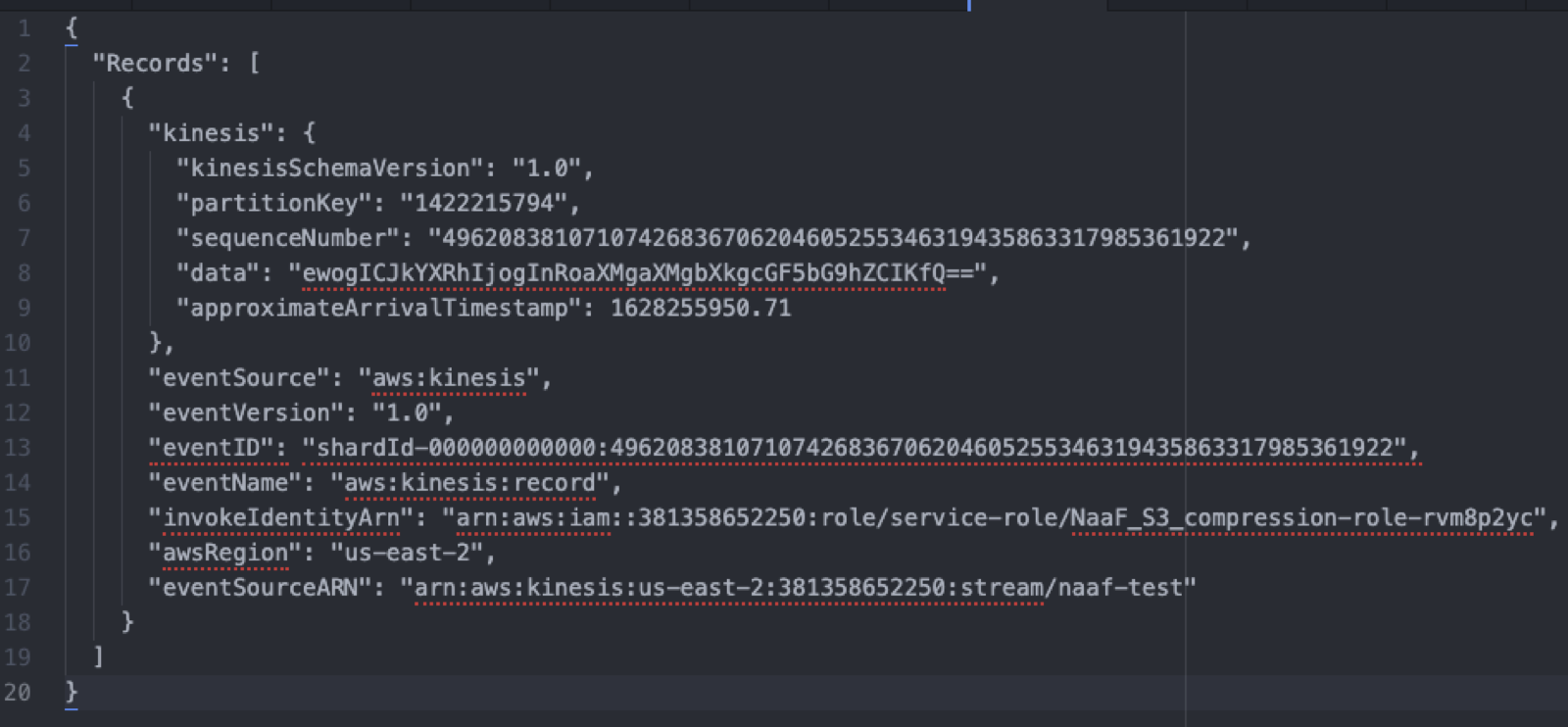
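
For a once-a-day schedule, an EventBridge rule could use an expression such as `cron(0 6 * * ? *)`, which fires at 06:00 UTC every day. The Python sketch below shows how the resulting event could be inspected. It assumes the standard EventBridge scheduled-event JSON; the account ID, rule name, and timestamp are hypothetical placeholders.

```python
import json
from datetime import datetime, timezone

# Hypothetical scheduled event as emitted by an EventBridge rule.
SAMPLE_EVENT = """
{
  "version": "0",
  "id": "00000000-0000-0000-0000-000000000000",
  "detail-type": "Scheduled Event",
  "source": "aws.events",
  "account": "123456789012",
  "time": "2024-01-01T06:00:00Z",
  "region": "us-east-1",
  "resources": ["arn:aws:events:us-east-1:123456789012:rule/daily-run"],
  "detail": {}
}
"""


def describe_scheduled_event(flowfile_content):
    """Return (rule_name, fired_at) for a scheduled event."""
    event = json.loads(flowfile_content)
    fired_at = datetime.strptime(event["time"], "%Y-%m-%dT%H:%M:%SZ")
    fired_at = fired_at.replace(tzinfo=timezone.utc)  # event times are in UTC
    # The rule name is the last segment of the rule ARN.
    rule_name = event["resources"][0].rsplit("/", 1)[-1]
    return rule_name, fired_at


rule_name, fired_at = describe_scheduled_event(SAMPLE_EVENT)
print(rule_name, fired_at.isoformat())
```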

Kinesis

This trigger can be used to process a stream of events using Cloudera Data Flow Functions. The trigger configuration could be:

The FlowFile’s content would look like this:

Note that the data associated with a particular record may be Base64-encoded. In this case, consider adding the Base64EncodeContent processor, with its Mode property set to Decode, to your flow definition to decode the content.

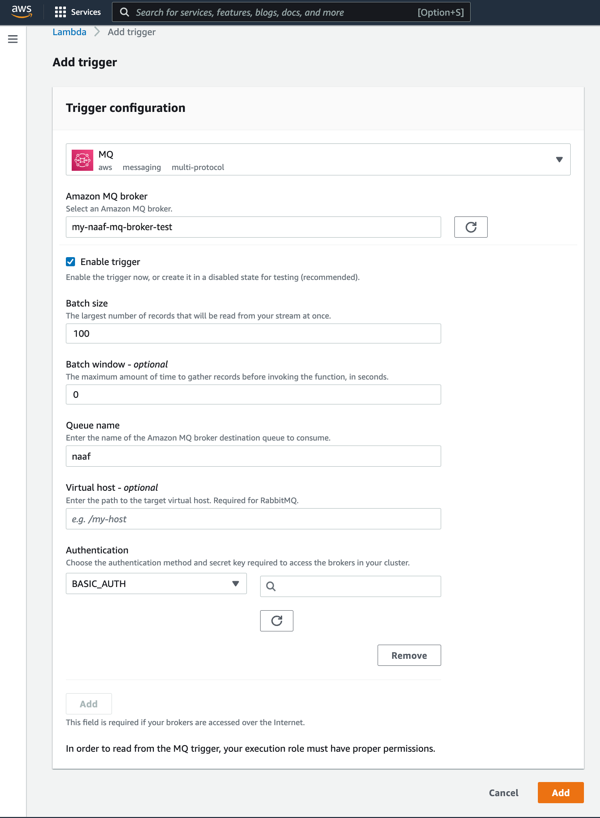

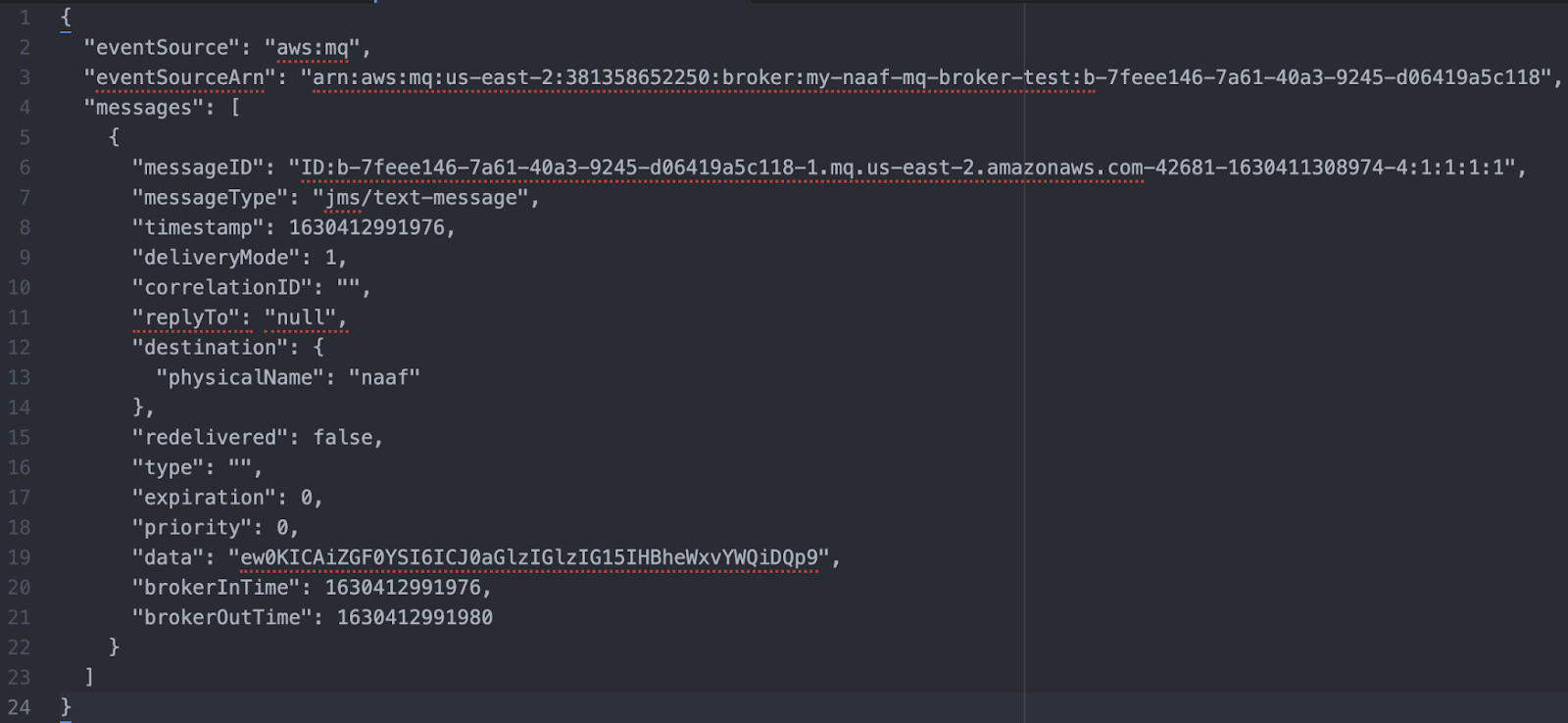
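
The decoding step can be illustrated outside NiFi with a short Python sketch. It assumes the standard Kinesis event layout, in which each entry of `Records` carries its payload Base64-encoded under `kinesis.data`. The partition key and payload are hypothetical.

```python
import base64
import json

# Hypothetical Kinesis event with a single record (placeholder values).
SAMPLE_EVENT = json.dumps({
    "Records": [
        {
            "eventSource": "aws:kinesis",
            "kinesis": {
                "partitionKey": "device-1",
                "sequenceNumber": "1",
                "data": base64.b64encode(b"hello from kinesis").decode("ascii"),
            },
        }
    ]
})


def decode_kinesis_records(flowfile_content):
    """Return (partition_key, decoded_bytes) for each record in the event."""
    event = json.loads(flowfile_content)
    return [
        (r["kinesis"]["partitionKey"], base64.b64decode(r["kinesis"]["data"]))
        for r in event.get("Records", [])
    ]


print(decode_kinesis_records(SAMPLE_EVENT))
```

Because one event can contain several records, a flow would typically split the `Records` array before decoding each payload.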

AWS MQ

This trigger can be used to trigger your Lambda based on events received in specific queues of AWS MQ. The trigger configuration may look like this:

The FlowFile’s content would look like this:

Note that the data associated with a particular message may be Base64-encoded. In this case, consider adding the Base64EncodeContent processor, with its Mode property set to Decode, to your flow definition to decode the content.

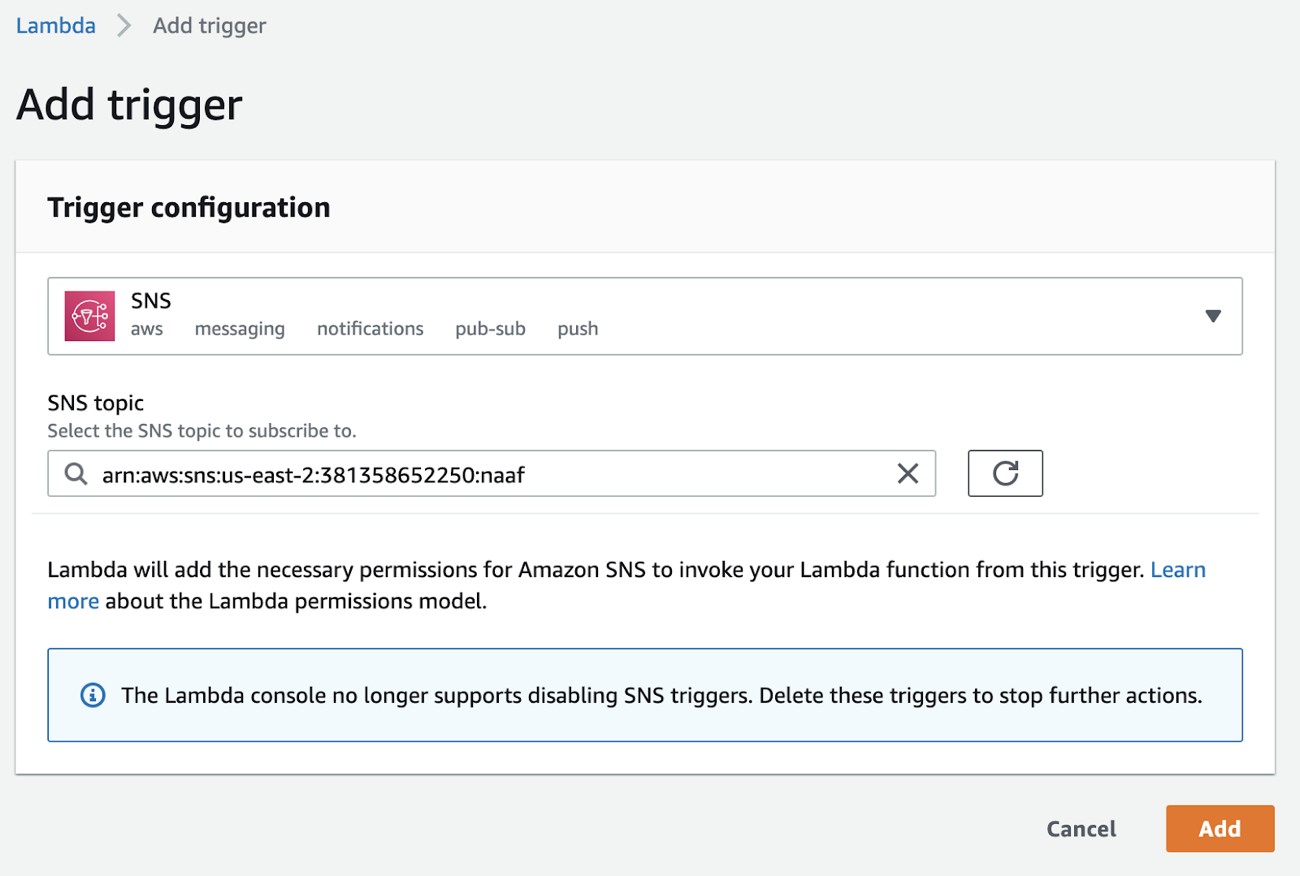

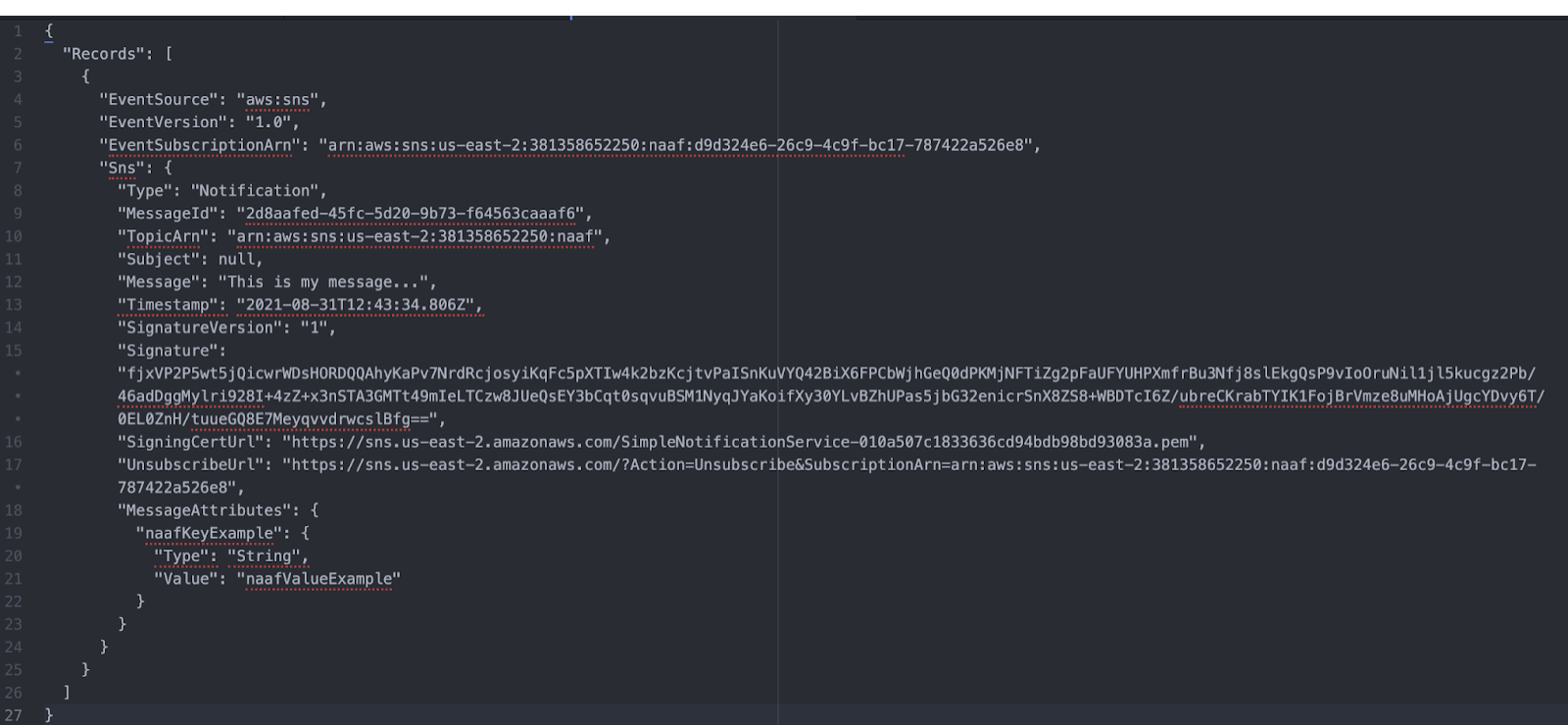
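
As a rough illustration, the Python sketch below decodes the messages of an Amazon MQ event. It assumes an ActiveMQ broker, whose events list messages under `messages` with a Base64-encoded `data` field; RabbitMQ brokers use a different layout that this sketch does not cover. The message ID, queue name, and payload are hypothetical.

```python
import base64
import json

# Hypothetical Amazon MQ (ActiveMQ) event with one text message.
SAMPLE_EVENT = json.dumps({
    "eventSource": "aws:mq",
    "messages": [
        {
            "messageID": "ID:example-1",
            "messageType": "jms/text-message",
            "destination": {"physicalName": "orders"},
            "data": base64.b64encode(b"order created").decode("ascii"),
        }
    ],
})


def decode_mq_messages(flowfile_content):
    """Map each message ID to its decoded UTF-8 text payload."""
    event = json.loads(flowfile_content)
    return {
        m["messageID"]: base64.b64decode(m["data"]).decode("utf-8")
        for m in event.get("messages", [])
    }


print(decode_mq_messages(SAMPLE_EVENT))
```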

AWS SNS

When using the AWS SNS trigger with a particular topic, the configuration may look like this:

The FlowFile’s content would look like this:

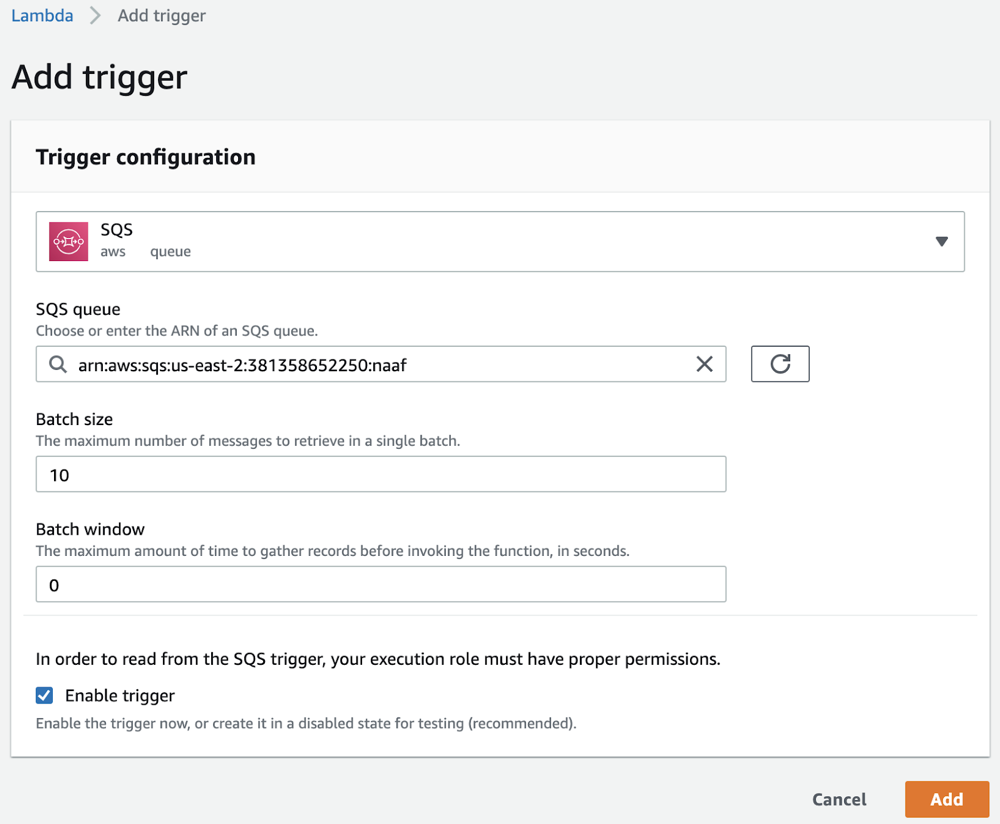

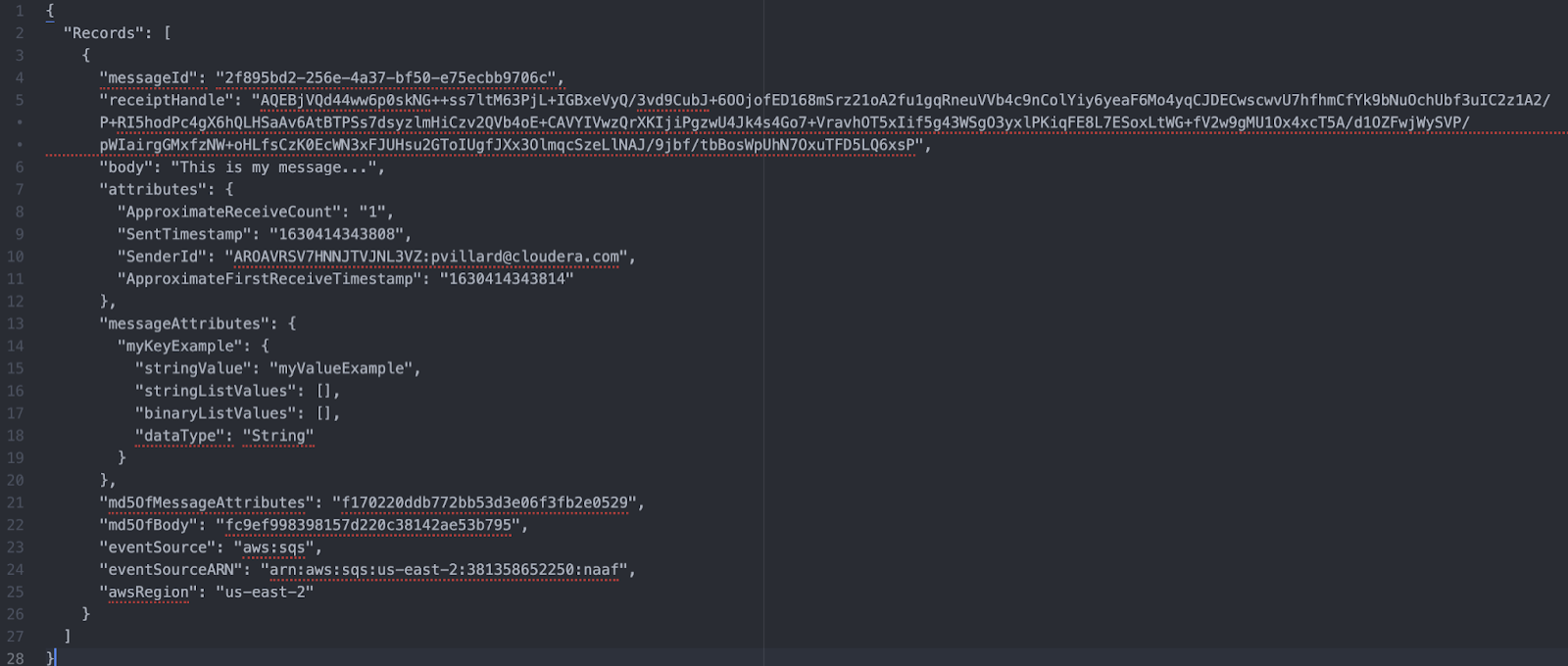
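
The Python sketch below shows one way to read the notification out of an SNS event. It assumes the standard SNS event layout, where each record holds the notification under `Sns` with `Subject` and `Message` fields. The topic ARN, subject, and message content are hypothetical, and parsing the message as JSON is only an illustration because publishers are free to send plain text.

```python
import json

# Hypothetical SNS event whose message happens to be a JSON document.
SAMPLE_EVENT = json.dumps({
    "Records": [
        {
            "EventSource": "aws:sns",
            "Sns": {
                "TopicArn": "arn:aws:sns:us-east-1:123456789012:example-topic",
                "Subject": "Nightly report",
                "Message": json.dumps({"status": "done", "rows": 42}),
            },
        }
    ]
})


def sns_messages(flowfile_content):
    """Return (subject, message) pairs, parsing the message as JSON when possible."""
    event = json.loads(flowfile_content)
    results = []
    for record in event.get("Records", []):
        sns = record["Sns"]
        try:
            message = json.loads(sns["Message"])
        except json.JSONDecodeError:
            message = sns["Message"]  # plain-text notification, keep as-is
        results.append((sns.get("Subject"), message))
    return results


print(sns_messages(SAMPLE_EVENT))
```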

AWS SQS

When using the AWS SQS trigger with a particular queue, the configuration may look like this:

The FlowFile’s content would look like this:

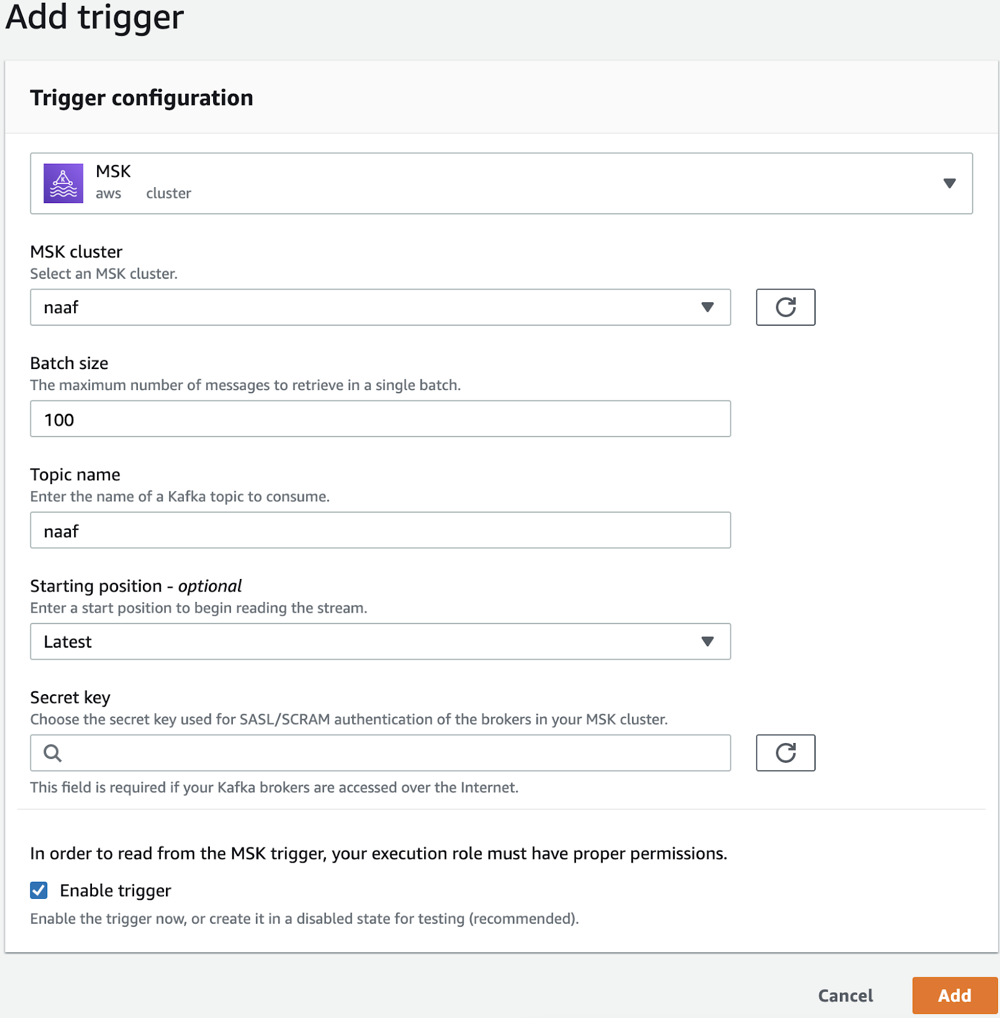

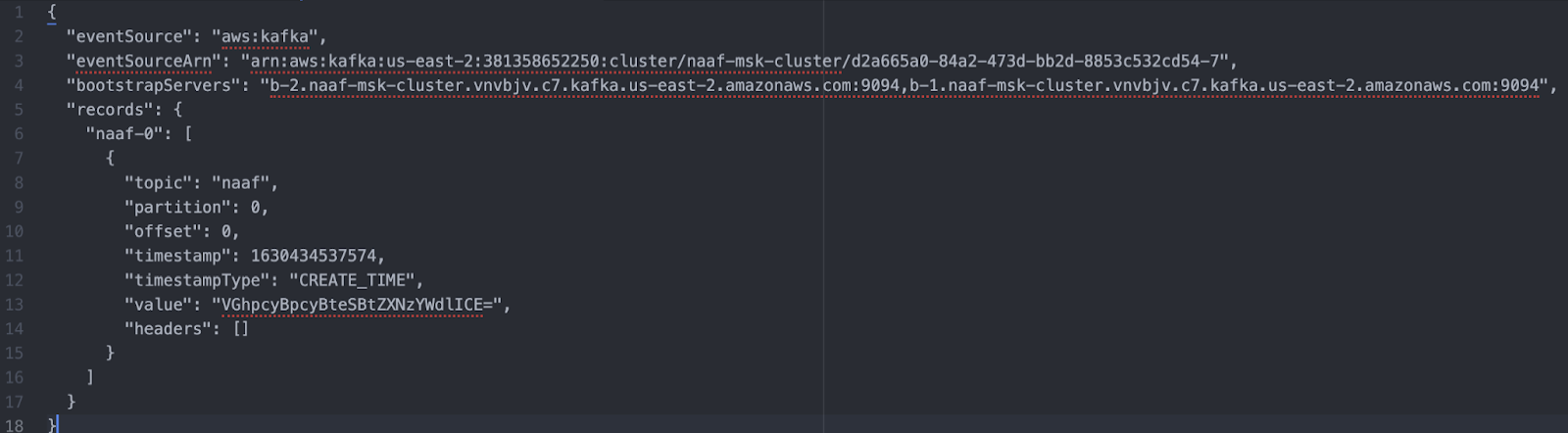
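
An SQS event can deliver several messages in one batch. The Python sketch below collects the message bodies and flags messages that have been received more than once. It assumes the standard SQS event layout, with `messageId`, `body`, and the `ApproximateReceiveCount` attribute on each record. The message IDs and bodies are hypothetical.

```python
import json

# Hypothetical SQS event with a batch of two messages.
SAMPLE_EVENT = json.dumps({
    "Records": [
        {
            "messageId": "msg-1",
            "body": "first",
            "attributes": {"ApproximateReceiveCount": "1"},
            "eventSource": "aws:sqs",
        },
        {
            "messageId": "msg-2",
            "body": "second",
            "attributes": {"ApproximateReceiveCount": "3"},
            "eventSource": "aws:sqs",
        },
    ]
})


def sqs_messages(flowfile_content):
    """Return (message_id, body, redelivered) for each message in the batch."""
    event = json.loads(flowfile_content)
    results = []
    for record in event.get("Records", []):
        # The receive count arrives as a string; above 1 means a redelivery.
        count = int(record.get("attributes", {}).get("ApproximateReceiveCount", "1"))
        results.append((record["messageId"], record["body"], count > 1))
    return results


print(sqs_messages(SAMPLE_EVENT))
```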

AWS MSK

When using the AWS MSK trigger with a particular Kafka cluster and topic, the configuration may look like this:

The FlowFile’s content would look like this:

Note that the data associated with a particular record (the value field in the FlowFile content) may be Base64-encoded. In this case, consider adding the Base64EncodeContent processor, with its Mode property set to Decode, to your flow definition to decode the content.
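
To show where the encoded value sits in the payload, here is a minimal Python sketch that walks an MSK event. It assumes the standard layout in which `records` maps each topic-partition (for example `example-topic-0`) to a list of records, each with a Base64-encoded `value`. The topic name, offset, and payload are hypothetical.

```python
import base64
import json

# Hypothetical MSK event with one record on partition 0 of "example-topic".
SAMPLE_EVENT = json.dumps({
    "eventSource": "aws:kafka",
    "records": {
        "example-topic-0": [
            {
                "topic": "example-topic",
                "partition": 0,
                "offset": 15,
                "value": base64.b64encode(b"hello from kafka").decode("ascii"),
            }
        ]
    },
})


def decode_msk_records(flowfile_content):
    """Return (topic, partition, offset, decoded_value) for every record."""
    event = json.loads(flowfile_content)
    decoded = []
    # Records are grouped by topic-partition; flatten the groups.
    for batch in event.get("records", {}).values():
        for record in batch:
            decoded.append((
                record["topic"],
                record["partition"],
                record["offset"],
                base64.b64decode(record["value"]),
            ))
    return decoded


print(decode_msk_records(SAMPLE_EVENT))
```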