Cloudera Search Tasks and Processes

For content to be searchable, it must exist in CDH and be indexed. Content can either already exist in CDH and be indexed on demand or it can be updated and indexed continuously. The first step towards making content searchable is to ensure it is ingested or stored in CDH.

Ingestion

Content can be moved to CDH using tools such as:

- Flume, a flexible, agent-based data ingestion framework.

- A copy utility such as distcp for HDFS.

- Sqoop, a structured data ingestion connector.

- fuse-dfs.

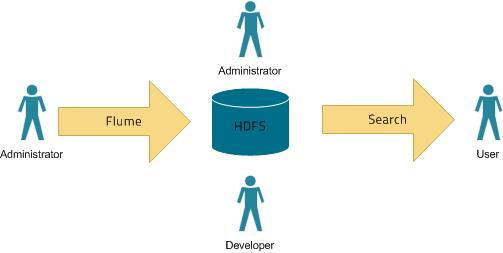

In a typical environment, administrators establish systems for search. For example, HDFS is established to provide storage; Flume or distcp are established for content ingestion. Once administrators establish these services, users can use ingestion technologies such as file copy utilities or Flume sinks.

Indexing

Content must be indexed before it can be searched. Indexing consists of the following steps:

- ETL steps: Extraction, Transformation, and Loading (ETL) is handled using existing engines or frameworks such as Apache Tika or Cloudera Morphlines.

  - Content and metadata extraction

  - Schema mapping

- Index creation: Indexes are created by Lucene.

  - Index creation

  - Index serialization
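
The steps listed above can be sketched in a few lines of Python. This is only an illustration of the flow (extraction, schema mapping, index creation, serialization), not how Tika, Morphlines, or Lucene implement it. The field names `text` and `size_i`, the `SCHEMA_MAP` table, and the sample records are assumptions made up for the example.

```python
import json
import re
from collections import defaultdict

def extract(raw: bytes) -> dict:
    """Extraction: decode the record and derive simple metadata."""
    text = raw.decode("utf-8", errors="replace")
    return {"body": text, "length": len(text)}

# Hypothetical mapping from extracted keys to search schema field names.
SCHEMA_MAP = {"body": "text", "length": "size_i"}

def map_to_schema(extracted: dict) -> dict:
    """Schema mapping: rename extracted keys to schema fields."""
    return {SCHEMA_MAP[k]: v for k, v in extracted.items() if k in SCHEMA_MAP}

def build_index(docs: dict) -> dict:
    """Index creation: map each lowercase token to the ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, doc in docs.items():
        for token in re.findall(r"\w+", doc["text"].lower()):
            index[token].add(doc_id)
    return {token: sorted(ids) for token, ids in index.items()}

records = {"doc1": b"Flume writes logs", "doc2": b"Solr indexes logs"}
docs = {doc_id: map_to_schema(extract(raw)) for doc_id, raw in records.items()}
index = build_index(docs)

# Index serialization: a real Lucene index uses its own on-disk format; JSON stands in here.
serialized = json.dumps(index, sort_keys=True)
print(index["logs"])  # ['doc1', 'doc2']
```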

Indexes are typically stored on a local file system. Lucene supports additional index writers and readers. One such index interface is HDFS-based and has been implemented as part of Apache Blur. This index interface has been integrated with Cloudera Search and modified to perform well with CDH-stored indexes. All index data in Cloudera Search is stored in HDFS and served from HDFS.

There are three ways to index content:

Batch indexing using MapReduce

To use MapReduce to index documents, documents are first written to HDFS. A MapReduce job can then be run on the content in HDFS, producing a Lucene index. The Lucene index is written to HDFS, and this index is subsequently used by search services to provide query results.

Batch indexing is most often used when bootstrapping a search cluster. The Map component of the MapReduce task parses input into indexable documents, and the Reduce component contains an embedded Solr server that indexes the documents produced by the Map. A MapReduce-based indexing job can also be configured to use all assigned resources on the cluster, with multiple reduce steps for intermediate indexing and merging operations; the last reduce step writes to the configured set of shard sets for the service. This makes the batch indexing process as scalable as MapReduce workloads.
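
The division of labor between Map and Reduce described above can be illustrated with a small, single-process sketch. It is a toy model only: a plain dictionary stands in for the embedded Solr server in each reducer, and the tab-separated input format, the `NUM_SHARDS` value, and the CRC-based partitioning are assumptions chosen for the example rather than the behavior of the actual indexing job.

```python
import zlib
from collections import defaultdict

NUM_SHARDS = 2  # assumed shard count for the example

def map_phase(lines):
    """Map: parse raw 'id<TAB>text' lines into (shard, document) pairs."""
    for line in lines:
        doc_id, text = line.split("\t", 1)
        # Deterministic partitioning so each document lands on exactly one shard.
        shard = zlib.crc32(doc_id.encode("utf-8")) % NUM_SHARDS
        yield shard, {"id": doc_id, "text": text}

def reduce_phase(pairs):
    """Reduce: build one small inverted index per shard."""
    shards = defaultdict(lambda: defaultdict(list))
    for shard, doc in pairs:
        for token in doc["text"].lower().split():
            if doc["id"] not in shards[shard][token]:
                shards[shard][token].append(doc["id"])
    return {shard: dict(index) for shard, index in shards.items()}

lines = ["a\tserver logs", "b\tapp logs", "c\tsolr query"]
shard_indexes = reduce_phase(map_phase(lines))

# A query has to consult every shard and merge the partial results.
found = sorted(d for index in shard_indexes.values() for d in index.get("logs", []))
print(found)  # ['a', 'b']
```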

Near Real Time (NRT) indexing using Flume

Flume events are typically collected and written to HDFS. While any Flume event could be written, logs are a common case.

Cloudera Search provides a Flume sink with the option to write events directly to the indexer. This sink provides a flexible, scalable, fault-tolerant, near-real-time (NRT) system for processing continuous streams of records and creating live-searchable, free-text search indexes. Typically, content ingested through the Flume sink can appear in search results within seconds, though this duration is tunable.

The Flume sink has been designed to meet the needs of identified use cases that rely on NRT availability. Data can flow from multiple sources through multiple Flume nodes. These nodes, which can be spread across a network, route this information to one or more Flume indexing sinks. Optionally, you can split the data flow, storing the data in HDFS while also writing it to be indexed by Lucene indexers on the cluster. In that scenario, data exists both as raw data and as indexed data in the same storage infrastructure. The indexing sink extracts relevant data, transforms the material, and loads the results to live Solr search servers. These Solr servers are then immediately ready to serve queries to end users or search applications.

This system is flexible and customizable, and it scales well because parsing is moved from the Solr server to the multiple Flume nodes that ingest new content.
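
The split data flow described above, where each event is both stored and indexed, can be pictured with the following sketch. It does not use Flume itself: `HdfsSink`, `IndexingSink`, `replicate`, and the `batch_size` setting are hypothetical stand-ins that only mimic the idea of sending every event to a storage sink and to an indexing sink that extracts, transforms, and loads.

```python
class HdfsSink:
    """Stand-in for a sink that stores raw events (HDFS in the real system)."""
    def __init__(self):
        self.stored = []

    def process(self, event: bytes) -> None:
        self.stored.append(event)


class IndexingSink:
    """Stand-in for an indexing sink: extract, transform, then load in batches."""
    def __init__(self, batch_size: int = 2):
        self.batch_size = batch_size  # hypothetical tuning knob
        self.pending = []
        self.indexed = []             # stands in for documents loaded into Solr

    def process(self, event: bytes) -> None:
        text = event.decode("utf-8", errors="replace").strip()  # extract
        doc = {"text": text, "length": len(text)}                # transform
        self.pending.append(doc)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        self.indexed.extend(self.pending)                        # load
        self.pending = []


def replicate(event: bytes, sinks) -> None:
    """Send the same event to every sink, so it is both stored and indexed."""
    for sink in sinks:
        sink.process(event)


hdfs, indexer = HdfsSink(), IndexingSink(batch_size=2)
for event in [b"login ok\n", b"disk full\n", b"logout\n"]:
    replicate(event, [hdfs, indexer])
indexer.flush()  # push out the final partial batch
print(len(hdfs.stored), len(indexer.indexed))  # 3 3
```

A smaller batch makes documents searchable sooner at the cost of more frequent loads, which is one way to picture the tunable delay between ingestion and searchability.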

Search includes parsers for a set of standard data formats including Avro, CSV, Text, HTML, XML, PDF, Word, and Excel. You can extend the system by adding custom parsers for other file or data formats in the form of Tika plug-ins. Any type of data can be indexed: a record is a byte array of any format, and parsers for any data format and any custom ETL logic can be established.

In addition, Cloudera Search comes with a simplifying Extract-Transform-Load framework called Cloudera Morphlines that can help adapt and pre-process data for indexing. This eliminates the need for specific parser deployments, replacing them with simple commands.

Cloudera Search has been designed to efficiently handle a variety of use cases.

- Search supports routing to multiple Solr collections as a way of making a single set of servers support multiple user groups (multi-tenancy).

- Search supports routing to multiple shards to improve scalability and reliability.

- Index servers can be either co-located with live Solr servers serving end user queries or they can be deployed on separate commodity hardware, for improved scalability and reliability.

- Indexing load can be spread across a large number of index servers for improved scalability, and indexing load can be replicated across multiple index servers for high availability.
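
The two kinds of routing in the list above can be illustrated as follows. This sketch is not Solr's routing algorithm: the tenant-to-collection table, the shard count, and the CRC-based hash are all assumptions used to show the idea that a collection is chosen per user group and a shard is chosen per document.

```python
import zlib
from typing import Tuple

# Hypothetical tenant-to-collection mapping and shard count.
COLLECTIONS = {"sales": "sales_logs", "ops": "ops_logs"}
SHARDS_PER_COLLECTION = 3

def route(tenant: str, doc_id: str) -> Tuple[str, int]:
    """Pick the collection by tenant (multi-tenancy) and the shard by document id."""
    collection = COLLECTIONS[tenant]
    shard = zlib.crc32(doc_id.encode("utf-8")) % SHARDS_PER_COLLECTION
    return collection, shard

collection, shard = route("sales", "order-1001")
print(collection)  # sales_logs
```

Because the shard is derived from the document id alone, the same document always goes to the same shard, so an update replaces the earlier copy instead of duplicating it.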

This is a flexible, scalable, highly available system that provides low-latency data acquisition and low-latency querying. Rather than replacing existing solutions, Search complements use cases based on batch analysis of HDFS data using MapReduce. In many use cases, data flows from the producer through Flume to both Solr and HDFS. In such a system, both NRT ingestion and batch analysis tools can be used.

NRT indexing using some other client that uses the NRT API

Documents written directly to HDFS by a third party can trigger indexing using the Solr REST API. This API can be used to complete a number of steps:

- Extract content from the document contained in HDFS where the document is referenced by a URL.

- Map the content to fields in the search schema.

- Create or update a Lucene index.

This could be useful if you do indexing as part of a larger workflow. For example, you might choose to trigger indexing from an Oozie workflow.
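
As a rough illustration of the last step in the list above (creating or updating the index), the sketch below builds an HTTP request for Solr's JSON update handler without sending it. The host, port, collection name `collection1`, and document fields are placeholders, and the content extraction and schema mapping steps are assumed to have already produced the document dictionaries.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

def build_update_request(base_url, collection, docs, commit=True):
    """Build (but do not send) a POST that adds or updates documents in a collection."""
    query = urlencode({"commit": "true" if commit else "false"})
    url = f"{base_url}/{collection}/update?{query}"
    body = json.dumps(docs).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Placeholder endpoint and document; adjust to the actual deployment.
req = build_update_request(
    "http://localhost:8983/solr",
    "collection1",
    [{"id": "doc1", "text": "hello"}],
)
print(req.full_url)  # http://localhost:8983/solr/collection1/update?commit=true
```

A workflow step, such as an Oozie action, could send a request like this with `urllib.request.urlopen(req)` once the file has landed in HDFS.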

Querying

Once data has been made available as an index, you can query it through the query API provided by the search service, either directly or through a third-party tool such as a command-line client or graphical interface. Cloudera Search provides a simple UI application that can be deployed with Hue, but it is just as easy to develop a custom application that fits your needs, based on the standard Solr API. Because Solr is the core of Cloudera Search, any application that works with Solr is compatible and can run as a search-serving application for Cloudera Search.
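
A custom client built on the standard Solr API might look roughly like the sketch below, which constructs a query URL for the `select` handler and reads the matching documents out of a JSON response. The host, collection name, and query are placeholders, and the response shown is a hand-written sample in Solr's usual JSON layout rather than output from a real server.

```python
import json
from urllib.parse import urlencode

def build_select_url(base_url, collection, query, rows=10):
    """Build a query URL for the select handler, asking for JSON output."""
    params = urlencode({"q": query, "wt": "json", "rows": rows})
    return f"{base_url}/{collection}/select?{params}"

def extract_docs(response_text):
    """Return the list of matching documents from a Solr JSON response."""
    payload = json.loads(response_text)
    return payload["response"]["docs"]

url = build_select_url("http://localhost:8983/solr", "collection1", "text:logs")

# Hand-written sample response for illustration only.
sample = '{"response": {"numFound": 1, "start": 0, "docs": [{"id": "doc1"}]}}'
print(url)
print(extract_docs(sample))  # [{'id': 'doc1'}]
```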
