Yunikorn Gang scheduling

Yunikorn Gang Scheduling is the default scheduling mechanism in Cloudera AI. Yunikorn schedules the workload pods when Quota Management is enabled.

Gang scheduling is a Yunikorn scheduling mechanism in which an application (a set of pods) is scheduled only when the cluster has all the resources needed to bring up every pod in the application. If those resources are not available, the application is not scheduled at all, which helps use cluster resources efficiently.
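The all-or-nothing rule can be illustrated with a minimal Python sketch. It is a simplified model, not Yunikorn's implementation: the names `PodRequest` and `can_schedule_gang` are hypothetical, and free resources are treated as a single pooled amount, whereas a real scheduler must also fit each pod onto a node.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PodRequest:
    """Resources requested by one pod (illustrative units: CPU cores and GiB)."""
    cpu: float
    memory_gib: float


def can_schedule_gang(pods: List[PodRequest], free_cpu: float, free_memory_gib: float) -> bool:
    """Return True only if every pod in the application fits at the same time.

    Simplification: free resources are one pooled amount, so per-node
    placement, which a real scheduler must handle, is ignored here.
    """
    total_cpu = sum(p.cpu for p in pods)
    total_memory = sum(p.memory_gib for p in pods)
    # All-or-nothing: either the whole set fits, or no pod is started.
    return total_cpu <= free_cpu and total_memory <= free_memory_gib


# One driver and two workers, each requesting 1 CPU and 2 GiB.
app = [PodRequest(cpu=1, memory_gib=2) for _ in range(3)]
print(can_schedule_gang(app, free_cpu=4, free_memory_gib=8))  # True: 3 CPU / 6 GiB fit
print(can_schedule_gang(app, free_cpu=2, free_memory_gib=8))  # False: no pod is scheduled
```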

Most workloads need only a single pod and work without any additional configuration in Cloudera AI. However, workloads that start additional worker pods need additional configuration of the Gang parameters to work optimally.

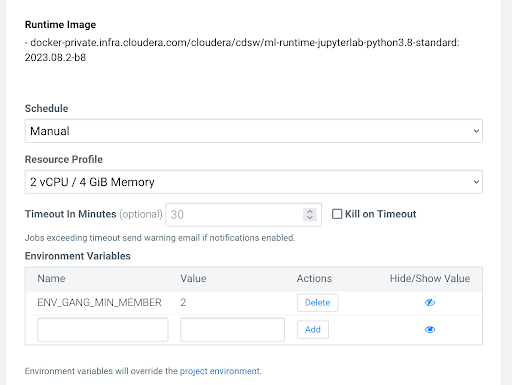

For example, if a Spark job creates additional executor pods, the default setting schedules only the driver pod with the Gang Scheduler, while the executor pods use regular scheduling (that is, they are not gang scheduled). This is not an optimal way to run such workloads. Specify the expected number of worker pods that the Spark driver pod will schedule by setting the following environment variable:

ENV_GANG_MIN_MEMBER - Sets the number of worker pods in addition to the driver pod.
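As a sketch of how the value can be derived, the hypothetical helper `gang_env_for_spark` below reads a Spark configuration and returns the matching environment variable. It assumes static executor allocation, where the number of executor pods is fixed by `spark.executor.instances`; with dynamic allocation the executor count changes at run time, so the helper refuses to guess.

```python
from typing import Dict


def gang_env_for_spark(spark_conf: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical helper: derive ENV_GANG_MIN_MEMBER from a Spark configuration.

    Assumes static allocation, where the number of executor pods is set
    explicitly by spark.executor.instances.
    """
    dynamic = spark_conf.get("spark.dynamicAllocation.enabled", "false")
    if dynamic.strip().lower() == "true":
        # Under dynamic allocation the executor count changes at run time.
        raise ValueError("executor count is not fixed when dynamic allocation is enabled")
    if "spark.executor.instances" not in spark_conf:
        raise KeyError("set spark.executor.instances explicitly")
    executors = int(spark_conf["spark.executor.instances"])
    # ENV_GANG_MIN_MEMBER counts the worker (executor) pods only, not the driver.
    return {"ENV_GANG_MIN_MEMBER": str(executors)}


print(gang_env_for_spark({"spark.executor.instances": "5"}))
# {'ENV_GANG_MIN_MEMBER': '5'}
```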

Model scheduling

For model workloads, Cloudera AI configures the same number of driver pods as the number of replicas configured for the model. Consequently, the model will not be deployed if the system cannot allocate the resources for all the configured replicas. Therefore, increase the resource quota or set the number of replicas to a value that the quota can accommodate.
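To pick a replica count the quota can hold, a rough calculation is enough. The helper `max_replicas_within_quota` below is a hypothetical illustration that assumes one pod per replica with identical requests and treats the quota as the only limit.

```python
import math


def max_replicas_within_quota(replica_cpu: float, replica_memory_gib: float,
                              quota_cpu: float, quota_memory_gib: float) -> int:
    """Largest replica count whose pods can all be allocated at the same time.

    Hypothetical helper. Assumes one pod per replica with identical requests
    and treats the quota as the only limit (node capacity is ignored).
    """
    by_cpu = math.floor(quota_cpu / replica_cpu)
    by_memory = math.floor(quota_memory_gib / replica_memory_gib)
    # The tighter of the two resources bounds the whole gang.
    return min(by_cpu, by_memory)


# Each replica requests 2 CPU and 4 GiB; the quota is 7 CPU and 16 GiB.
print(max_replicas_within_quota(2, 4, quota_cpu=7, quota_memory_gib=16))  # 3
```

In this example, configuring 4 replicas would need 8 CPU, which exceeds the quota, so none of the replicas would be scheduled.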

You can override this behavior by using the following environment variable:

ENV_DISABLE_GANG_SCHEDULER.

If this variable is set to true for a workload, Gang Scheduling is disabled for that workload only. This is not recommended.

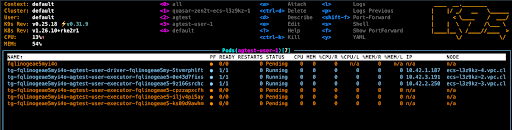

Placeholder pods

Yunikorn implements Gang Scheduling by creating placeholder pods that reserve all the resources the workload needs. For example, if you set the ENV_GANG_MIN_MEMBER variable to 5 and the system cannot allocate resources for all 6 placeholder pods (5 workers + 1 driver), the driver pod remains in the PENDING state.

In Cloudera AI, the scheduler keeps placeholder pods for 60 seconds by default. If the scheduler cannot allocate the resources within 60 seconds, the workload fails with a FAILED status and all of its pods are terminated.

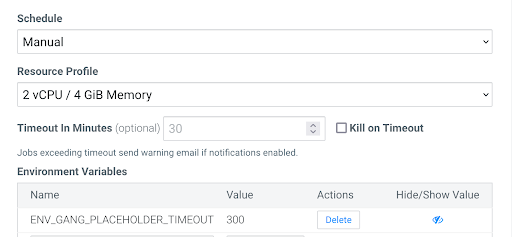

If needed, increase the placeholder timeout for a workload by setting the following environment variable:

ENV_GANG_PLACEHOLDER_TIMEOUT - Sets the number of seconds the placeholder pods wait for resources to be allocated before they are terminated.

For example, if ENV_GANG_PLACEHOLDER_TIMEOUT is set to 300, the placeholder pods are terminated after 300 seconds.
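The timeout behavior can be modeled as follows. This is an illustrative sketch, not Cloudera AI code: `placeholder_timeout` and `gang_outcome` are hypothetical names, `SCHEDULED` is an illustrative label, and treating an allocation that completes exactly at the timeout as successful is an assumption.

```python
from typing import Dict, Optional

# Default placeholder timeout in Cloudera AI, as stated in this section.
DEFAULT_PLACEHOLDER_TIMEOUT_S = 60


def placeholder_timeout(env: Dict[str, str]) -> int:
    """Return the timeout in seconds, using ENV_GANG_PLACEHOLDER_TIMEOUT if it is set."""
    return int(env.get("ENV_GANG_PLACEHOLDER_TIMEOUT", str(DEFAULT_PLACEHOLDER_TIMEOUT_S)))


def gang_outcome(seconds_until_all_allocated: Optional[float], timeout_s: int) -> str:
    """Illustrative model of the outcome of a gang-scheduled workload.

    seconds_until_all_allocated is None if the resources never become free.
    Counting an allocation at exactly timeout_s as successful is an assumption.
    """
    if seconds_until_all_allocated is not None and seconds_until_all_allocated <= timeout_s:
        return "SCHEDULED"  # illustrative label: all placeholders were allocated
    return "FAILED"  # the placeholders and the workload's pods are terminated


# Resources for the whole gang become free after 120 seconds.
print(gang_outcome(120, placeholder_timeout({})))  # FAILED (default 60 s)
print(gang_outcome(120, placeholder_timeout({"ENV_GANG_PLACEHOLDER_TIMEOUT": "300"})))  # SCHEDULED
```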

Configuring resources

By default, the executor resources are set to the same values as those of the driver. If the executor resources differ from those of the driver, configure them with the following environment variables:

- Set the executor CPU resources with ENV_GANG_CPU_REQUEST.
- Set the executor memory resources with ENV_GANG_MEMORY_REQUEST.
- Set the executor GPU resources with ENV_GANG_GPU_REQUEST.

Configure only the resources that differ from those of the driver. For example, if the executors need the same CPU and memory as the driver but a different number of GPUs, configure only the ENV_GANG_GPU_REQUEST variable.
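The following hypothetical sketch shows how these defaults combine into the total resources the placeholder pods reserve (one driver plus ENV_GANG_MIN_MEMBER executors). The helper names `executor_request` and `gang_total` are illustrative. The sketch assumes the override values are plain numbers in the same units as the driver values and that an unset ENV_GANG_MIN_MEMBER means no worker pods; check the accepted value formats in the Cloudera AI documentation.

```python
from typing import Dict

# Resource name -> variable that overrides the executor value (names from this section).
OVERRIDES = {
    "cpu": "ENV_GANG_CPU_REQUEST",
    "memory": "ENV_GANG_MEMORY_REQUEST",
    "gpu": "ENV_GANG_GPU_REQUEST",
}


def executor_request(driver: Dict[str, float], env: Dict[str, str]) -> Dict[str, float]:
    """Executor resources: the driver's values unless an override variable is set.

    Assumption: override values are plain numbers in the same units as the
    driver values (for example CPU cores and GiB).
    """
    return {res: float(env[var]) if var in env else driver[res]
            for res, var in OVERRIDES.items()}


def gang_total(driver: Dict[str, float], env: Dict[str, str]) -> Dict[str, float]:
    """Resources reserved by the placeholders: 1 driver + ENV_GANG_MIN_MEMBER executors.

    Assumption: an unset ENV_GANG_MIN_MEMBER means no worker pods.
    """
    workers = int(env.get("ENV_GANG_MIN_MEMBER", "0"))
    executor = executor_request(driver, env)
    return {res: driver[res] + workers * executor[res] for res in OVERRIDES}


# Executors match the driver's CPU and memory but each needs one GPU.
driver = {"cpu": 2, "memory": 8, "gpu": 0}
env = {"ENV_GANG_MIN_MEMBER": "5", "ENV_GANG_GPU_REQUEST": "1"}
print(gang_total(driver, env))  # {'cpu': 12, 'memory': 48, 'gpu': 5.0}
```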