Evaluating the generated dataset

After generating a dataset, it is essential to evaluate its quality to ensure that only the highest-quality data is retained. This can be achieved using the LLM-as-a-judge approach, which evaluates and scores prompts and completions, filtering out irrelevant or low-quality data.

- In the Cloudera console, click the Cloudera AI tile.

  The Cloudera AI Workbenches page displays.

- Click the name of the workbench.

  The workbench Home page displays.

- Click AI Studios.

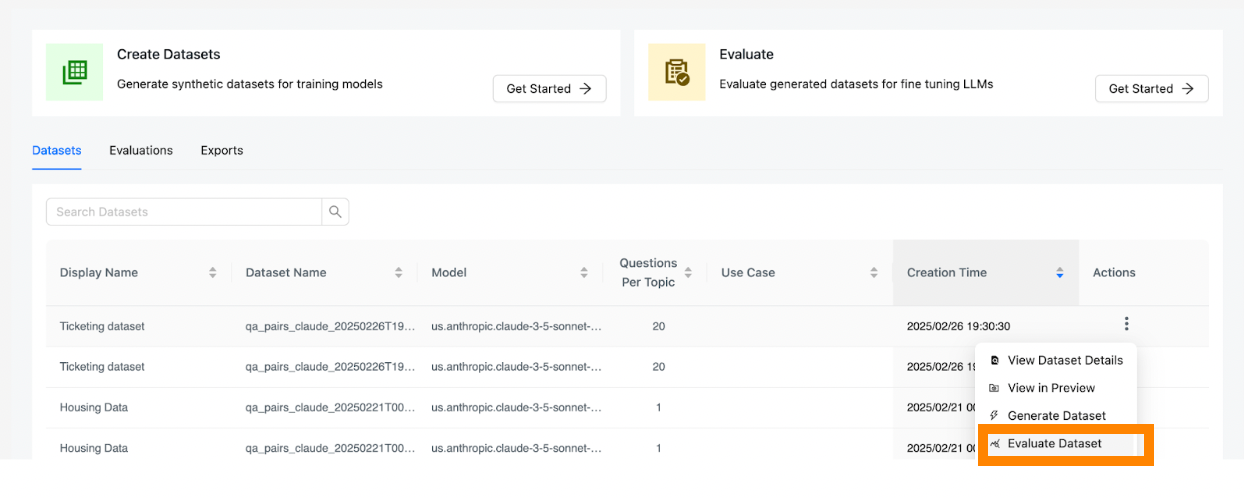

- On the Synthetic Data Studio page, locate the dataset you want to evaluate.

- Click next to the dataset and click Evaluate Dataset.

- Define a prompt to guide the LLM-as-a-judge on how to evaluate and score the dataset. Example prompt for evaluation:

Table 1.

| Field Name | Value |
| --- | --- |
| Evaluation Display name | Ticketing Dataset Evaluation |
| Prompt | You are given a user query for a ticketing support system and the system response, which is a keyword that is used to forward the user to the specific subsystem.<br><br>Evaluate whether the queries:<br>- Use professional, respectful language<br>- Avoid assumptions about demographics or identity<br>- Provide enough details to solve the problem<br><br>Evaluate whether the responses use only one of the following four keywords: cancel_ticket, customer_service, pay, report_payment_issue<br><br>Evaluate whether the solutions and responses are correctly matched based on the following definitions:<br>cancel_ticket means that the customer wants to cancel the ticket.<br>customer_service means that the customer wants to talk to customer service.<br>pay means that the customer wants to pay the bill.<br>report_payment_issue means that the customer is facing payment issues and wants to be forwarded to the billing department to resolve the issue.<br><br>Give a score of 1-5 based on the following instructions:<br>If the response does not match one of the four keywords, always give a score of 1.<br>Rate the quality of the queries and responses based on the instructions, giving a rating from 1 to 5. |
| Entries per seed | 5 |
| Temperature | 0 |
| TopK | 100 |
| Max Tokens | 2048 |

- After defining the prompt and parameters, click Evaluate to begin the evaluation process.

- Once the evaluation is complete, select the evaluation and click Preview to review the evaluated dataset. Each sample in the dataset will include fields for scoring and justification.

- Understand the evaluation output by reviewing the Justification and Score fields. The Justification field explains how the LLM scored each query and completion. The Score field is a numerical value (1–5) that can be used to filter data based on quality.

- Click Download to download the evaluated dataset for further analysis, or use it for additional processing or for fine-tuning your language model.
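
Once the evaluated dataset is downloaded, the Score field can be used to filter it outside the UI. The following is a minimal sketch, not the product's own tooling. It assumes the download is a JSON file containing a list of records, and that each record has `score`, `justification`, and `completion` keys. These key names, the file layout, and the helper function names are all hypothetical and may differ from the actual export, so adjust them to match your file. The keyword set is taken from the example ticketing prompt above.

```python
import json
from collections import Counter

# Assumption: the keyword set comes from the example ticketing prompt.
ALLOWED_KEYWORDS = {"cancel_ticket", "customer_service", "pay", "report_payment_issue"}


def load_records(path):
    """Load evaluated records from a JSON file (assumed to hold a list of dicts)."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def filter_by_score(records, min_score=4):
    """Keep records whose score is at least min_score.

    Records with a missing or non-numeric score are dropped.
    """
    kept = []
    for record in records:
        try:
            score = float(record["score"])
        except (KeyError, TypeError, ValueError):
            continue
        if score >= min_score:
            kept.append(record)
    return kept


def has_valid_keyword(record):
    """Check that the completion is exactly one of the allowed keywords."""
    completion = str(record.get("completion") or "").strip()
    return completion in ALLOWED_KEYWORDS


def score_distribution(records):
    """Count how many records received each score value."""
    return Counter(record.get("score") for record in records)


if __name__ == "__main__":
    # Illustrative in-memory records; replace with load_records("<your file>").
    sample = [
        {"completion": "pay", "score": 5, "justification": "Clear and correct."},
        {"completion": "refund", "score": 1, "justification": "Unknown keyword."},
    ]
    high_quality = [r for r in filter_by_score(sample) if has_valid_keyword(r)]
    print(len(high_quality), "of", len(sample), "records kept")
    print(score_distribution(sample))
```

The keyword check is a deterministic cross-check on top of the judge's score. It is only meaningful for datasets whose completions are expected to be one of a fixed set of labels, as in the ticketing example.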