You learn how to identify an error related to high partition workloads that require

configuration of your Hive Virtual Warehouse to run successfully. You might need to

configure your Virtual Warehouse to prevent these errors when inserting data into workloads

with a large number of partitions and columns.

To prevent an error when inserting data into a high partition workload, such as a

table having 5,000 partitions and 100 columns, configure the Hive Virtual Warehouse as

described in the steps below. The error message you might see when inserting data

into a high partition workload that is not configured properly looks something like

this:

ERROR : FAILED: Execution Error, return code 40000 from org.apache.hadoop.hive.ql.exec.MoveTask. MetaException(message:One or more instances could not be made persistent)

-

Try changing the size of your Hive Virtual

Warehouse.

For example, if you experience the high partition error in a small Virtual

Warehouse, try using a medium Virtual Warehouse and set min/max nodes to

40.

-

In the CDW UI, in Overview, select Virtual Warehouses,

click Options

,

and select Edit.

,

and select Edit.

-

Click .

-

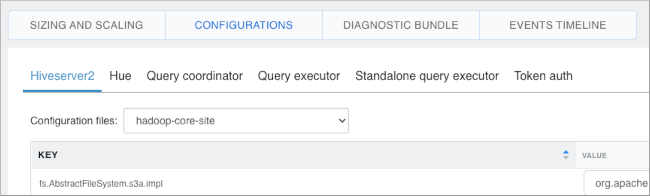

Tune HS2 parameters in the Virtual Warehouse.

For example, search for and set the following properties, or if the

property is not found, click + to add a custom configuration, and set it as

follows.

set hive.optimize.sort.dynamic.partition.threshold=0;

set hive.thrift.client.max.message.size=2147483647;

set hive.metastore.client.skip.columns.for.partitions=true;

set hive.stats.autogather=false;

set hive.stats.column.autogather=false;

set hive.msck.repair.batch.size=[***TABLE SCHEMA SIZE***];

-

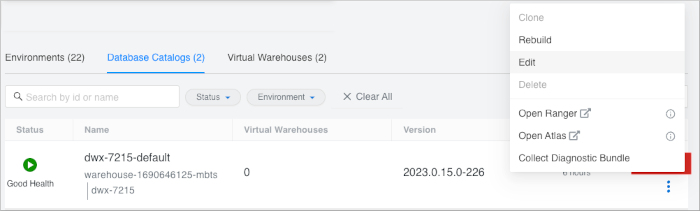

Navigate to , and select your Database Catalog.

-

Click Options

, and

select Edit.

, and

select Edit.

-

Tune Metastore parameters in the Database Catalog.

For example, click , search for and set the following properties, or if the property

is not found, click

+ to add a custom configuration, and

set it as follows.

hive.metastore.direct.sql.batch.size=[***TABLE SCHEMA SIZE***]

hive.txn.timeout=3600

hive.metastore.try.direct.sql=true

hive.metastore.try.direct.sql.ddl=true

,

and select

,

and select