Using Spark Hive Warehouse and HBase Connector Client .jar files with Livy

This section describes how to use Spark Hive Warehouse Connector (HWC) and HBase-Spark connector client .jar files with Livy. These steps are required to ensure token acquisition and avoid authentication errors.

To use Spark HWC and HBase-Spark client .jar files with Livy, complete the following steps:

- Copy the applicable HWC or HBase-Spark .jar files to the Livy server node and add these folders to the livy.file.local-dir-whitelist property in the livy.conf file.
- Add the required Hive and HBase configurations in the Spark client configuration folder:
  - Hive:
    - For Spark 2: /etc/spark/conf/hive-site.xml
    - For Spark 3: /etc/spark3/conf/hive-site.xml
  - HBase:
    - For Spark 2: /etc/spark/conf/hbase-site.xml
    - For Spark 3: /etc/spark3/conf/hbase-site.xml

  Or add the required configurations using the conf field in the session creation request. This is equivalent to using "--conf" in spark-submit.
- Reference these local .jar files in the session creation request using the file:/// URI format.
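The steps above can be sketched as a session creation request body. This is a minimal sketch, assuming the Livy REST API's POST /sessions endpoint and its kind, jars, and conf fields. The helper name build_session_request and the Spark property shown are hypothetical placeholders, not values from this document. The code only builds the JSON body and does not send it.

```python
import json


def build_session_request(jar_paths, spark_conf, kind="spark"):
    """Build the JSON body for a Livy session creation request.

    jar_paths  -- absolute paths of .jar files on the Livy server node
    spark_conf -- Spark properties, equivalent to --conf in spark-submit
    """
    for path in jar_paths:
        if not path.startswith("/"):
            raise ValueError(f"expected an absolute local path, got {path!r}")
    return {
        "kind": kind,
        # Local files are referenced with the file:/// URI format.
        "jars": ["file://" + path for path in jar_paths],
        "conf": dict(spark_conf),
    }


if __name__ == "__main__":
    body = build_session_request(
        ["/opt/cloudera/parcels/CDH-<version>/jars/"
         "hive-warehouse-connector-assembly-<version>.jar"],
        # Hypothetical placeholder; substitute the Hive or HBase settings you need.
        {"spark.example.placeholder": "value"},
    )
    print(json.dumps(body, indent=2))
```

Keeping the request construction separate from the HTTP call makes it easy to inspect the body before submitting it to the Livy server with whatever authenticated client your cluster requires.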

HWC Example

- In Cloudera Manager, go to the Livy service.

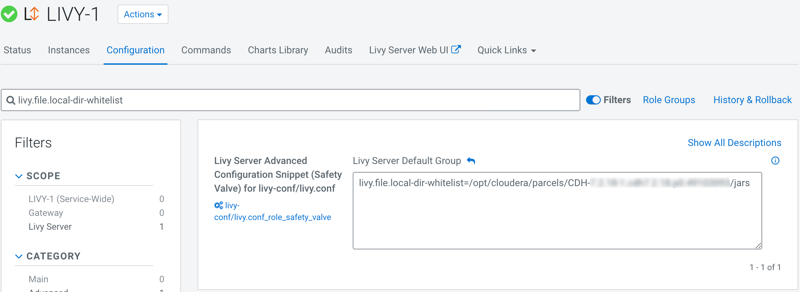

- Click the Configuration tab and search for the "Livy Server Advanced Configuration Snippet (Safety Valve) for livy-conf/livy.conf" property.

- Add the /opt/cloudera/parcels/CDH-<version>/jars folder to the livy.file.local-dir-whitelist property in the livy.conf file.

- Save the changes and restart the Livy service.

- Log in to the Zeppelin Server Web UI and restart the Livy interpreter.

- When running the Zeppelin Livy interpreter, reference the HWC .jar file as shown below.

  - For Spark 2:

        %livy2.conf
        livy.spark.jars file:///opt/cloudera/parcels/CDH-<version>/jars/hive-warehouse-connector-assembly-<version>.jar

  - For Spark 3:

        %livy2.conf
        livy.spark.jars file:///opt/cloudera/parcels/CDH-<version>/jars/hive-warehouse-connector-spark3-assembly-<version>.jar
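Because the .jar files must sit in a folder listed in livy.file.local-dir-whitelist, it can help to check a reference before adding it to the interpreter configuration. The following is a minimal sketch, not part of Livy or Zeppelin: the function name is_whitelisted is hypothetical, and the check is purely textual (it does not resolve symlinks or ".." segments, and it does not read livy.conf).

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def is_whitelisted(jar_uri, whitelist_dirs):
    """Return True if a file:/// URI points inside one of the given folders."""
    parsed = urlparse(jar_uri)
    if parsed.scheme != "file":
        # Only local file references are subject to the whitelist check here.
        return False
    jar_path = PurePosixPath(parsed.path)
    # A jar is accepted when a whitelisted folder is one of its ancestors.
    return any(PurePosixPath(d) in jar_path.parents for d in whitelist_dirs)


if __name__ == "__main__":
    whitelist = ["/opt/cloudera/parcels/CDH-<version>/jars"]
    uri = ("file:///opt/cloudera/parcels/CDH-<version>/jars/"
           "hive-warehouse-connector-assembly-<version>.jar")
    print(is_whitelisted(uri, whitelist))
```

Comparing path components rather than string prefixes avoids accepting a sibling folder whose name merely starts with a whitelisted folder name.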

HBase-Spark Connector Example

- When running the Zeppelin Livy interpreter, reference the following HBase .jar files as shown below. Note that some of these .jar files have 644/root permissions, so referencing them may throw an exception. If this happens, you may need to change the permissions of the applicable .jar files on the Livy node.

      %livy.conf
      livy.spark.jars file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-spark3-1.0.0.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-shaded-protobuf-4.1.4.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-shaded-miscellaneous-4.1.4.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-protocol-shaded-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-shaded-netty-4.1.4.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-shaded-client-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-shaded-mapreduce-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-common-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-server-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-client-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-protocol-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/hbase-mapreduce-2.4.17.7.2.18.0-566.jar, file:///opt/cloudera/parcels/CDH-<version>/jars/guava-32.1.3-jre.jar
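A long list like this is easy to mistype, and permission problems only surface at session start. The following is a minimal sketch of two helpers for preparing the list on the Livy node. The names spark_jars_value and describe_jars are hypothetical, and the readability check reflects only the user running the script, so it is most useful when run as the user the Livy server runs as.

```python
import os
import stat


def spark_jars_value(jar_paths):
    """Join absolute local paths into a comma-separated file:/// list."""
    return ",".join("file://" + path for path in jar_paths)


def describe_jars(jar_paths):
    """Return (path, octal mode, owner uid, readable by current user) per jar.

    Missing files are reported as (path, None, None, False).
    """
    report = []
    for path in jar_paths:
        try:
            info = os.stat(path)
        except FileNotFoundError:
            report.append((path, None, None, False))
            continue
        mode = oct(stat.S_IMODE(info.st_mode))
        report.append((path, mode, info.st_uid, os.access(path, os.R_OK)))
    return report


if __name__ == "__main__":
    # Replace with the HBase .jar paths that apply to your parcel version.
    jars = ["/opt/cloudera/parcels/CDH-<version>/jars/guava-32.1.3-jre.jar"]
    print("livy.spark.jars " + spark_jars_value(jars))
    for row in describe_jars(jars):
        print(row)
```

Any row that is missing or not readable points to a .jar file whose path or permissions should be reviewed before the interpreter is restarted.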