Use Spark actions with a custom Python executable

Spark 2 supports both PySpark and Java Spark applications. Learn how to use a custom Python executable in a given Spark action.

For PySpark, Spark 2 lets you designate a custom Python executable for your Spark application through the `spark.pyspark.python` Spark conf argument. For more details, see the Spark 2.4 documentation. Consequently, if you include the `spark.pyspark.python` Spark conf argument in your Oozie Spark action, Oozie uses the Python executable you specify when it runs the Spark action.
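A Spark conf argument is passed to an Oozie Spark action through the `<spark-opts>` element of the action definition. The following is a sketch of such an action, not a complete workflow. It assumes YARN cluster mode, a hypothetical application name (`my-pyspark-app`) and script path (`my_app.py`), `end` and `fail` nodes defined elsewhere in the workflow, and that the interpreter is installed at the same path on every node that may run the driver or executors:

```xml
<action name="spark_action">
    <spark xmlns="uri:oozie:spark-action:1.0">
        <resource-manager>${resourceManager}</resource-manager>
        <name-node>${nameNode}</name-node>
        <master>yarn</master>
        <mode>cluster</mode>
        <!-- Hypothetical application name -->
        <name>my-pyspark-app</name>
        <!-- Hypothetical path to the PySpark script; for PySpark the script goes in <jar> -->
        <jar>${nameNode}/user/${wf:user()}/apps/my_app.py</jar>
        <!-- Set the Spark conf directly; Oozie does not override a value set here -->
        <spark-opts>--conf spark.pyspark.python=/opt/python37-for-oozie/bin/python3</spark-opts>
    </spark>
    <!-- Transition targets assumed to be defined elsewhere in the workflow -->
    <ok to="end"/>
    <error to="fail"/>
</action>
```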

To simplify using a custom Python executable with Oozie's Spark action, you can use the `oozie.service.SparkConfigurationService.spark.pyspark.python` property. This property works like Spark's `spark.pyspark.python` conf argument: it lets you specify a custom Python executable, which Oozie then passes to the underlying Spark application it runs.

You can specify the `oozie.service.SparkConfigurationService.spark.pyspark.python` property at different levels: globally, per workflow, or for a single Spark action.

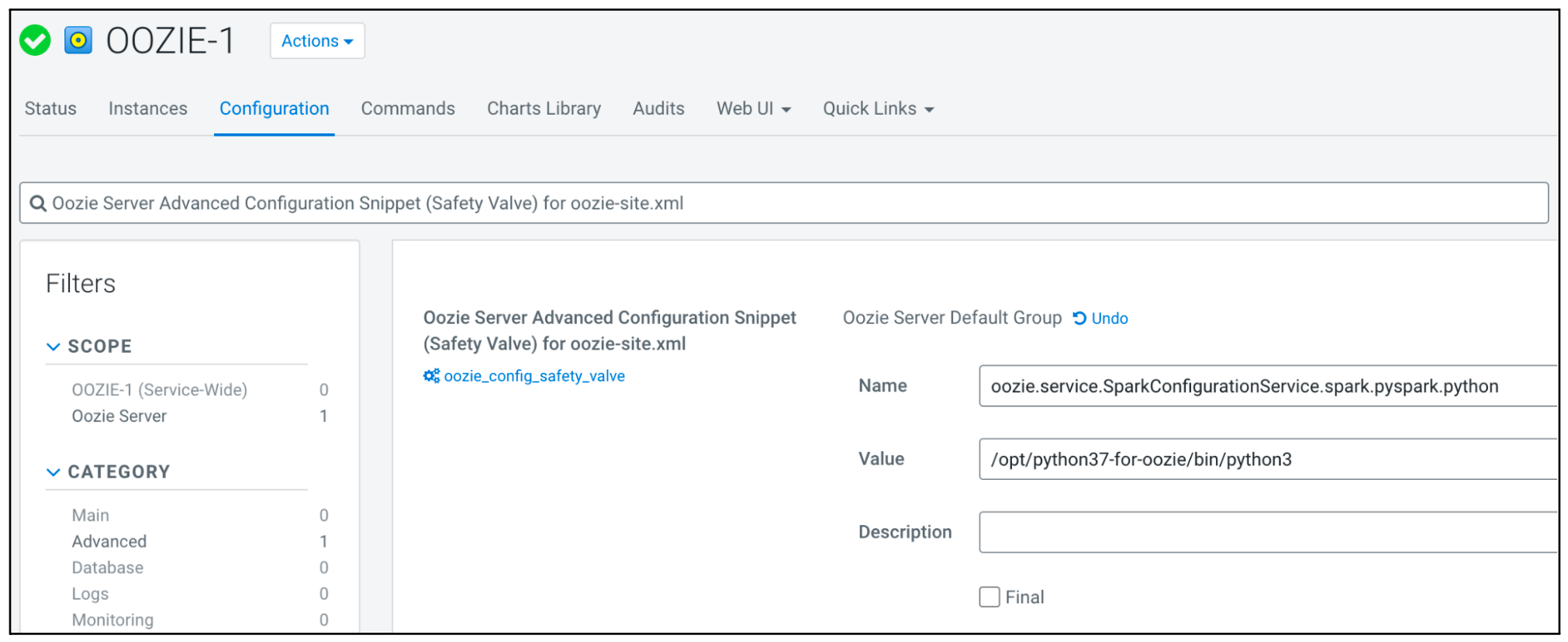

Setting Spark actions with a custom Python executable globally

- Navigate to Oozie's configuration page in Cloudera Manager.
- Search for Oozie Server Advanced Configuration Snippet (Safety Valve) for oozie-site.xml.
- Add a new property named `oozie.service.SparkConfigurationService.spark.pyspark.python`.
- Set its value to the path of your custom Python executable. For example, if you installed Python 3.7 to `/opt/python37-for-oozie`, set the value to `/opt/python37-for-oozie/bin/python3`.
- Save the changes.
- Allow Cloudera Manager some time to detect the changes.
- Redeploy Oozie.
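Cloudera Manager safety valves for XML configuration files can typically also be edited as raw XML. As a sketch, assuming the standard Hadoop-style `<property>` format used by `oozie-site.xml`, the property added in the steps above corresponds to the following entry:

```xml
<!-- Global default for every Oozie Spark action on this Oozie server -->
<property>
    <name>oozie.service.SparkConfigurationService.spark.pyspark.python</name>
    <value>/opt/python37-for-oozie/bin/python3</value>
</property>
```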

Setting Spark actions with a custom Python executable per workflow

```xml
<workflow-app name="spark_workflow" xmlns="uri:oozie:workflow:1.0">
    <global>
        <configuration>
            <property>
                <name>oozie.service.SparkConfigurationService.spark.pyspark.python</name>
                <value>/opt/python37-for-oozie/bin/python3</value>
            </property>
        </configuration>
    </global>
    <start to="spark_action"/>
    <action name="spark_action">
    ...
```

You can achieve the same workflow-level Python executable by setting the property in your `job.properties` file.

Setting Spark actions with a custom Python executable for a given Spark action only

```xml
<workflow-app name="spark_workflow" xmlns="uri:oozie:workflow:1.0">
    <start to="spark_action"/>
    <action name="spark_action">
        <spark xmlns="uri:oozie:spark-action:1.0">
            <resource-manager>${resourceManager}</resource-manager>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>oozie.service.SparkConfigurationService.spark.pyspark.python</name>
                    <value>/opt/python37-for-oozie/bin/python3</value>
                </property>
            </configuration>
            ...
```

Oozie applies the following order of precedence for this configuration:

- If you have already set `spark.pyspark.python` in the `<spark-opts>` tag of your action definition, Oozie does not override it.
- If you have configured the property at the action level, it takes precedence over the settings listed below, and those are ignored.
- If you have configured the property in the global configuration of the workflow, that value is used.
- If the property is not set in either of those locations, the value configured in your `job.properties` file is used.
- Lastly, the global safety-valve setting takes effect.
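To illustrate the precedence order, the following sketch sets the property both in the workflow's global configuration and on the action itself, so the action-level value wins for that action. The second interpreter path (`/opt/python38-for-oozie`), the application name, and the script path are hypothetical:

```xml
<workflow-app name="spark_workflow" xmlns="uri:oozie:workflow:1.0">
    <global>
        <configuration>
            <!-- Workflow-level value: used by Spark actions that do not set their own -->
            <property>
                <name>oozie.service.SparkConfigurationService.spark.pyspark.python</name>
                <value>/opt/python37-for-oozie/bin/python3</value>
            </property>
        </configuration>
    </global>
    <start to="spark_action"/>
    <action name="spark_action">
        <spark xmlns="uri:oozie:spark-action:1.0">
            <resource-manager>${resourceManager}</resource-manager>
            <name-node>${nameNode}</name-node>
            <configuration>
                <!-- Action-level value: overrides the global value for this action only -->
                <!-- Hypothetical interpreter path -->
                <property>
                    <name>oozie.service.SparkConfigurationService.spark.pyspark.python</name>
                    <value>/opt/python38-for-oozie/bin/python3</value>
                </property>
            </configuration>
            <master>yarn</master>
            <mode>cluster</mode>
            <!-- Hypothetical application name and script path -->
            <name>my-pyspark-app</name>
            <jar>${nameNode}/user/${wf:user()}/apps/my_app.py</jar>
        </spark>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail">
        <message>Spark action failed: [${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>
```

If `spark.pyspark.python` were also passed in `<spark-opts>`, that value would take precedence over both of these properties.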

If the `oozie.service.SparkConfigurationService.spark.pyspark.python` property is set in the safety valve to `/opt/python37-for-oozie/bin/python3`, but you want a workflow or a specific action to use the default Python executable configured for Spark 2, set the value of the property to `default` in that workflow or action. For example, at the workflow level:

```xml
<workflow-app name="spark_workflow" xmlns="uri:oozie:workflow:1.0">
    <global>
        <configuration>
            <property>
                <name>oozie.service.SparkConfigurationService.spark.pyspark.python</name>
                <value>default</value>
            </property>
        </configuration>
    </global>
    <start to="spark_action"/>
    <action name="spark_action">
    ...
```

With the value `default`, the `spark.pyspark.python` Spark conf is not set at all.
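The `default` value can also be applied to a single action rather than the whole workflow. A minimal sketch of that action-level variant, assuming a hypothetical application name (`my-pyspark-app`) and script path (`my_app.py`), and `end` and `fail` nodes defined elsewhere in the workflow:

```xml
<action name="spark_action">
    <spark xmlns="uri:oozie:spark-action:1.0">
        <resource-manager>${resourceManager}</resource-manager>
        <name-node>${nameNode}</name-node>
        <configuration>
            <!-- Use Spark 2's default Python executable for this action only, -->
            <!-- instead of the value configured in the safety valve -->
            <property>
                <name>oozie.service.SparkConfigurationService.spark.pyspark.python</name>
                <value>default</value>
            </property>
        </configuration>
        <master>yarn</master>
        <mode>cluster</mode>
        <!-- Hypothetical application name and script path -->
        <name>my-pyspark-app</name>
        <jar>${nameNode}/user/${wf:user()}/apps/my_app.py</jar>
    </spark>
    <ok to="end"/>
    <error to="fail"/>
</action>
```

Because the action-level setting takes precedence over the global and safety-valve values, other Spark actions in the same workflow keep using the safety-valve executable.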