Distributed applications take advantage of horizontally-scaled clusters by dividing computation tasks across nodes in a cluster. Storm offers this and additional finer-grained ways to increase the parallelism of a Storm topology:

Increase the number of workers

Increase the number of executors

Increase the number of tasks

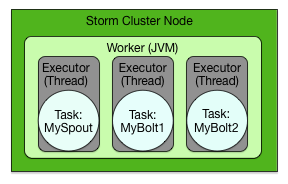

By default, Storm uses a parallelism factor of 1. Assuming a single-node Storm cluster, a parallelism factor of 1 means that one worker, or JVM, is assigned to execute the topology, and each component in the topology is assigned to a single executor. The following diagram illustrates this scenario. The topology defines a data flow with three tasks, a spout and two bolts.

![[Note]](../common/images/admon/note.png) | Note |

|---|---|

Hortonworks recommends that Storm developers store parallelism settings in a configuration file read by the topology at runtime rather than hard-coding the values passed to the Parallelism API. This topic describes and illustrates the use of the API, but developers can achieve the same effect by reading the parallelism values from a configuration file. |

Increasing Parallelism with Workers

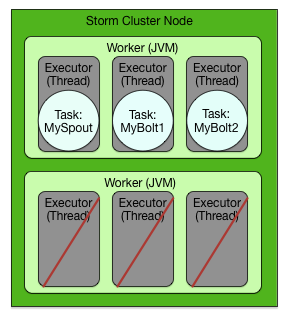

Storm developers can easily increase the number of workers assigned to execute a topology with the Config.setNumWorkers() method. This code assigns two workers to execute the topology, as the following figure illustrates.

... Config config = new Config(); config.setNumWorkers(2); ...

Adding new workers comes at a cost: additional overhead for a new JVM.

This example adds an additional worker without additional executors or tasks, but to take full advantage of this feature, Storm developers must add executors and tasks to the additional JVMs (described in the following examples).

Increasing Parallelism with Executors

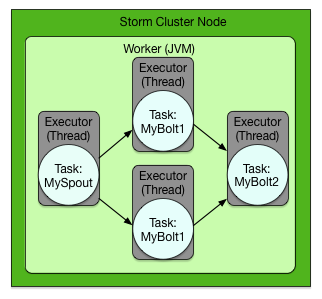

The parallelism API enables Storm developers to specify the number of executors for each worker with a parallelism hint, an optional third parameter to the setBolt() method. The following code sample sets this parameter for the MyBolt1 topology component.

... Config config = new Config(); TopologyBuilder builder = new TopologyBuilder(); builder.setSpout(MY_SPOUT_ID, mySpout); builder.setBolt(MY_BOLT1_ID, myBolt1, 2).shuffleGrouping(MY_SPOUT_ID); builder.setBolt(MY_BOLT2_ID, myBolt2).shuffleGrouping(MY_SPOUT_ID); ...

This code sample assigns two executors to the single, default worker for the specified topology component, MyBolt1, as the following figure illustrates.

The number of executors is set at the level of individual topology components, so adding executors affects the code for the specified spouts and bolts. This differs from adding workers, which affects only the configuration of the topology.

Increasing Parallelism with Tasks

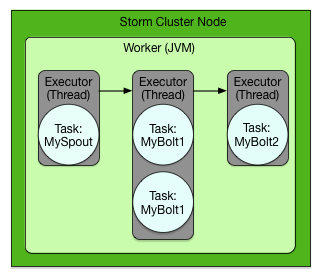

Finally, Storm developers can increase the number of tasks assigned to a single topology component, such as a spout or bolt. By default, Storm assigns a single task to each component, but developers can increase this number with the setNumTasks() method on the BoltDeclarer and SpoutDeclarer objects returned by the setBolt() and setSpout() methods.

... Config config = new Config(); TopologyBuilder builder = new TopologyBuilder(); builder.setSpout(MY_SPOUT_ID, mySpout); builder.setBolt(MY_BOLT1_ID, myBolt1).setNumTasks(2).shuffleGrouping(MY_SPOUT_ID); builder.setBolt(MY_BOLT1_ID, myBolt2).shuffleGrouping(MY_SPOUT_ID); ...

This code sample assigns two tasks to execute MyBolt1, as the following figure illustrates. This added parallelism might be appropriate for a bolt containing a large amount of data processing logic. However, adding tasks is like adding executors because the code for the corresponding spouts or bolts also changes.

Putting it All Together

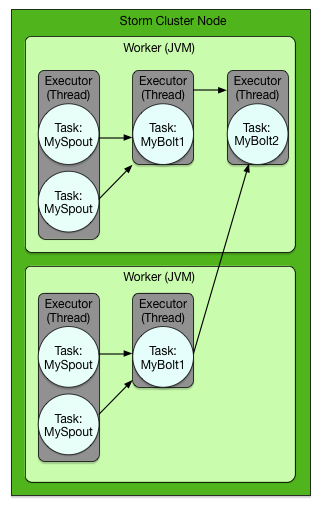

Storm developers can fine-tune the parallelism of their topologies by combining new workers, executors and tasks. The following code sample demonstrates all of the following:

Split processing of the MySpout component between four tasks in two separate executors across two workers

Split processing of the MyBolt1 component between two executors across two workers

Centralize processing of the MyBolt2 component in a single task in a single executor in a single worker on a single worker

... Config config = new Config(); config.setNumWorkers(2); TopologyBuilder builder = new TopologyBuilder(); builder.setSpout(MY_SPOUT_ID, mySpout, 2).setNumTasks(4); builder.setBolt(MY_BOLT1_ID, myBolt1, 2).setNumTasks(2).shuffleGrouping(MY_SPOUT_ID); builder.setBolt(MY_BOLT2_ID, myBolt2).shuffleGrouping(MY_SPOUT_ID); ...

The degree of parallelism depicted might be appropriate for the following topology requirements:

High-volume streaming data input

Moderate data processing logic

Low-volume topology output

See the Storm javadocs at http://storm.apache.org/apidocs for more information about the Storm API.