To install Spark manually, see Installing and Configuring Apache Spark in the Manual Installation Guide.

To install Spark on a Kerberized cluster, first read Installing Spark with Kerberos (the next topic in this Quick Start Guide).

The remainder of this section describes how to install Spark using Ambari. (For general information about installing HDP components using Ambari, see Adding a Service in the Ambari Documentation Suite.)

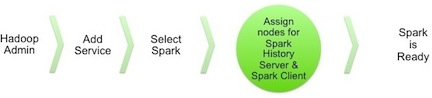

The following diagram shows the Spark installation process using Ambari.

To install Spark using Ambari, complete the following steps:

Choose the Ambari "Services" tab.

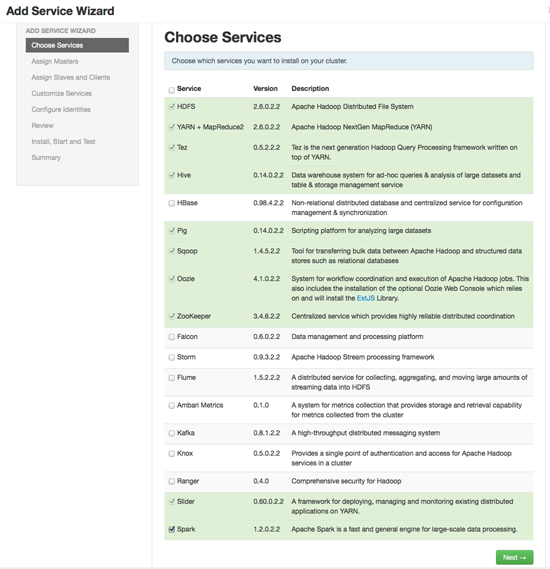

In the Ambari "Actions" pulldown menu, choose "Add Service." This will start the Add Service Wizard. You'll see the Choose Services screen.

Select "Spark", and click "Next" to continue.

(Starting with HDP 2.2.4, Ambari will install Spark version 1.2.1, not 1.2.0.2.2.)

Ambari will display a warning message. Confirm that your cluster is running HDP 2.2.4 or later, and then click "Proceed".

Note: You can reconfirm component versions in Step 6 before finalizing the upgrade.
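The version check in this step can also be scripted once you have the HDP version string in hand. The following is a minimal sketch, not part of Ambari: the helper names `parse_hdp_version` and `meets_minimum` are hypothetical, and the sample strings assume a dotted version followed by an optional build suffix (for example `2.2.4.2-2`). How you obtain the string from your cluster is left open.

```python
import re

# Minimum HDP release that this guide requires before adding Spark.
MINIMUM_HDP = (2, 2, 4)


def parse_hdp_version(text):
    """Return the leading dotted numeric part of a version string as a tuple of ints.

    Assumption: the string starts with digits separated by dots; anything
    after that (such as a "-2" build suffix) is ignored.
    """
    match = re.match(r"\s*(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"not a version string: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(text, minimum=MINIMUM_HDP):
    """Return True if the version is at least `minimum`.

    Only as many components as `minimum` has are compared; missing
    components are treated as zero.
    """
    version = parse_hdp_version(text)
    padded = (version + (0,) * len(minimum))[:len(minimum)]
    return padded >= minimum


if __name__ == "__main__":
    for candidate in ("2.2.4.2-2", "2.2.0.0-2041"):
        print(candidate, meets_minimum(candidate))
```

Comparing tuples of integers rather than raw strings avoids the usual pitfall where `"2.2.10"` sorts before `"2.2.4"`.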

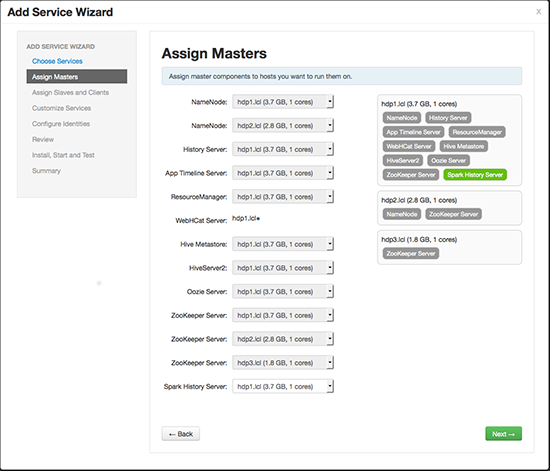

On the Assign Masters screen, choose a node for the Spark History Server.

Click "Next" to continue.

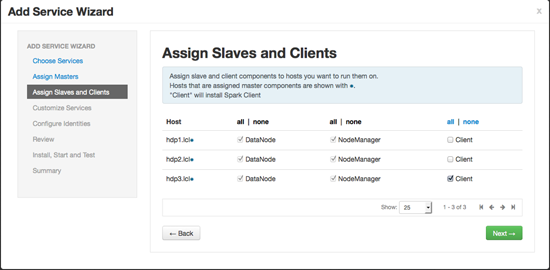

On the Assign Slaves and Clients screen, specify the machine(s) that will run Spark clients.

Click "Next" to continue.

On the Customize Services screen there are no properties that must be specified. We recommend that you use default values for your initial configuration. Click "Next" to continue. (When you are ready to customize your Spark configuration, see Apache Spark 1.2.1 properties.)

Ambari will display the Review screen.

Important: On the Review screen, make sure all HDP components are version 2.2.4 or later.

Click "Deploy" to continue.

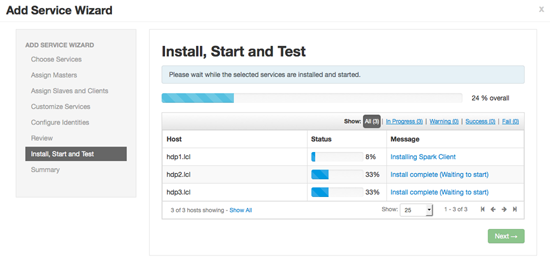

Ambari will display the Install, Start and Test screen. The status bar and messages will indicate progress.

When finished, Ambari will present a summary of results. Click "Complete" to finish installing Spark.
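If you prefer to confirm the result outside the wizard, the Ambari REST API exposes the state of each service. The sketch below is illustrative only: the host, port, cluster name, and credentials are placeholders you must supply, and the function names are hypothetical. It assumes the service is registered under the name `SPARK` and that the response carries a `ServiceInfo/state` field.

```python
import base64
import json
import urllib.request


def spark_service_url(ambari_base, cluster_name):
    """Build the REST URL for the Spark service resource of one cluster."""
    base = ambari_base.rstrip("/")
    return f"{base}/api/v1/clusters/{cluster_name}/services/SPARK?fields=ServiceInfo/state"


def spark_state(payload):
    """Extract the service state (for example "STARTED") from a decoded response.

    Returns None if the expected fields are missing.
    """
    return payload.get("ServiceInfo", {}).get("state")


def fetch_spark_state(ambari_base, cluster_name, user, password):
    """Query Ambari with HTTP basic authentication and return the Spark state.

    Requires network access to the Ambari server; all arguments are
    placeholders for values from your own environment.
    """
    request = urllib.request.Request(spark_service_url(ambari_base, cluster_name))
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request) as response:
        return spark_state(json.load(response))


if __name__ == "__main__":
    # Offline demonstration with a hand-written sample payload.
    sample = {"ServiceInfo": {"service_name": "SPARK", "state": "STARTED"}}
    print(spark_state(sample))
```

Because this only reads the service state, it is consistent with letting Ambari remain the sole manager of the cluster configuration.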

Caution: Ambari will create and edit several configuration files. Do not edit these files directly if you configure and manage your cluster using Ambari.