Understanding Data Lake Security

The general consensus in nearly every industry is that data is an essential new driver of competitive advantage. Hadoop plays a critical role in the modern data architecture by providing low-cost, large-scale data storage and processing. A successful Hadoop journey typically starts with data architecture optimization or new advanced analytic applications, either of which leads to the formation of a Data Lake. As new and existing types of data flow into the Data Lake from sources such as machine sensors, server logs, and clickstreams, it serves as a central repository based on shared Hadoop services that power deep organizational insights across a broad and diverse set of data.

The need to protect the Data Lake with comprehensive security is clear. As large and growing volumes of diverse data are channeled into the Data Lake, it will store vital and often highly sensitive business data. However, the external ecosystem of data and operational systems feeding the Data Lake is highly dynamic and can introduce new security threats on a regular basis. Users across multiple business units can access the Data Lake freely and refine, explore, and enrich its data at will, using methods of their own choosing, further increasing the risk of a breach. Any breach of this enterprise-wide data can be catastrophic: privacy violations, regulatory infractions, or the compromise of vital corporate intelligence. To prevent damage to the company’s business, customers, finances, and reputation, IT leaders must ensure that their Data Lake meets the same high standards of security as any legacy data environment.

Only as Secure as the Weakest Link

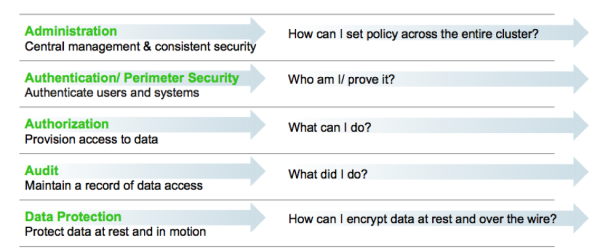

Piecemeal protections are no more effective for a Data Lake than they would be in a traditional repository. Hortonworks firmly believes that effective Hadoop security depends on a holistic approach. Our framework for comprehensive security revolves around five pillars: administration, authentication/perimeter security, authorization, audit, and data protection.

Requirements for Enterprise-Grade Security

Security administrators must address questions and provide enterprise-grade coverage across each of these areas as they design the infrastructure to secure data in Hadoop. If any of these pillars is vulnerable, it will become a risk vector built into the very fabric of the company’s Big Data environment. In this light, your Hadoop security strategy must address all five pillars, with a consistent implementation approach to ensure their effectiveness.
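The "weakest link" idea can be made concrete with a small coverage check. The following is only a minimal sketch, assuming a hand-maintained inventory that records, for each component in the stack, which of the five pillars it covers. The component names, the `example_stack` data, and the helper functions are hypothetical and are not part of any Hortonworks or Hadoop API.

```python
from enum import Enum


class Pillar(Enum):
    """The five pillars named in the text."""
    ADMINISTRATION = "administration"
    AUTHENTICATION = "authentication/perimeter security"
    AUTHORIZATION = "authorization"
    AUDIT = "audit"
    DATA_PROTECTION = "data protection"


def uncovered_pillars(coverage):
    """Return the pillars a component does not cover, in declaration order."""
    return [p for p in Pillar if not coverage.get(p, False)]


def weakest_links(stack):
    """Map each component name to its uncovered pillars, omitting fully covered ones."""
    gaps = {}
    for name, coverage in stack.items():
        missing = uncovered_pillars(coverage)
        if missing:
            gaps[name] = missing
    return gaps


# Hypothetical inventory: names and values are illustrative assumptions only.
example_stack = {
    "storage": {p: True for p in Pillar},
    "sql_engine": {
        Pillar.ADMINISTRATION: True,
        Pillar.AUTHENTICATION: True,
        Pillar.AUTHORIZATION: True,
        Pillar.AUDIT: False,
        Pillar.DATA_PROTECTION: True,
    },
}

if __name__ == "__main__":
    for component, missing in weakest_links(example_stack).items():
        print(component, "is missing:", ", ".join(p.value for p in missing))
```

In this sketch a single uncovered pillar on any one component is enough to flag it, which mirrors the point that a gap in one area undermines the whole environment.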

Needless to say, you can’t achieve comprehensive protection across the Hadoop stack by using a hodgepodge of point solutions. Security must be an integral part of the platform on which your Data Lake is built. This bottom-up approach makes it possible to enforce and manage security across the stack through a central point of administration, thereby preventing gaps and inconsistencies. This approach is especially important for Hadoop implementations, where new applications or data engines are always on the horizon in the form of new open source projects, a dynamic scenario that can quickly exacerbate any vulnerability.

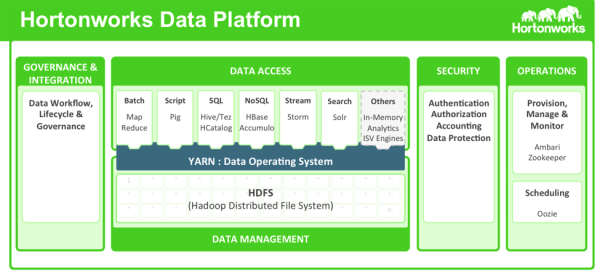

Hortonworks helps customers maintain high levels of protection for enterprise data by building centralized security administration and management into the infrastructure of the Hortonworks Data Platform (HDP). HDP provides an enterprise-ready data platform with rich capabilities spanning security, governance, and operations. HDP includes powerful data security functionality that works across component technologies and integrates with preexisting EDW, RDBMS, and MPP systems. By implementing security at the platform level, Hortonworks ensures that security is consistently administered to all of the applications across the stack, and simplifies the process of adding or removing Hadoop applications.

The Hortonworks Data Platform