Chapter 2. Introducing the Cloud Storage Connectors

When deploying HDP clusters on AWS or Azure cloud IaaS, you can take advantage of the

native integration with the cloud object storage services available on each of the cloud

platforms: Amazon S3 on AWS, and ADLS and

WASB on Azure. This integration is via cloud storage connectors included with HDP.

Their primary function is to help you connect to, access, and work with data the cloud storage

services. These connectors are not a replacement for HDFS and cannot be used as a replacement

for HDFS defaultFS.

The cloud connectors allow you to seamlessly access and work with data stored in Amazon S3, Azure ADLS and Azure WASB storage services, including, but not limited to, the following use cases:

Collect data for analysis and then load it into Hadoop ecosystem applications such as Hive or Spark directly from cloud storage services.

Persist data to cloud storage services for use outside of HDP clusters.

Copy data stored in cloud storage services to HDFS for analysis and then copy back to the cloud when done.

Share data between multiple HDP clusters – and between various external non-HDP systems – by pointing at the same data sets in the cloud object stores.

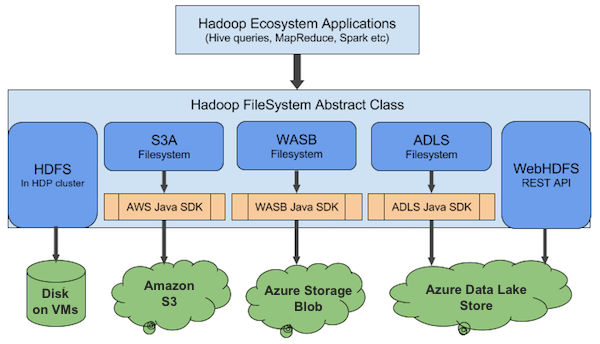

The S3A, ADLS and WASB connectors are implemented as individual Hadoop modules. The libraries and their dependencies are automatically placed on the classpath.

Figure 2.1. HDP Cloud Storage Connector Architecture

Amazon S3 is an object store. The S3A connector implements the Hadoop filesystem interface using WWS Java SDK to access the web service, and provides Hadoop applications with a filesystem view of the buckets. Applications can seamlessly manipulate data stored in Amazon S3 buckets with an URL starting with the s3a:// prefix.

Azure WASB is an object store with a flat name architecture (flat name space). The WASB connector implements the Hadoop filesystem interface using WASB Java SDK to access the web service, and provides Hadoop applications with a filesystem view of the blobs. Applications can seamlessly manipulate data stored in WASB with an URL starting with the wasb:// prefix.

Azure ADLS is a WebHDFS-compatible hierarchical file system. Applications can access the data in ADLS directly using WebHDFS REST API. Meanwhile, the ADLS connector implements the Hadoop filesystem interface using ADLS Java SDK to access the web service. Applications can manipulate data stored in ADLS with the URL starting with the adl:// prefix.

Table 2.1. Cloud Storage Connectors

| Cloud Storage Service | Connector Description | URL Prefix |

|---|---|---|

| Amazon Simple Storage Service (S3) | The S3A connector enables reading and writing files stored in the Amazon S3 object store. | s3a:// |

| Azure Data Lake Store (ADLS) | The ADLS connector enables reading and writing files stored in the ADLS file system. | adl:// |

| Windows Azure Storage Blob (WASB) | The WASB connector enables reading and writing both block blobs and page blobs from and to WASB object store. | wasb:// |

The cluster's default filesystem HDFS is defined in the configuration property

fs.defaultFS in core-site.xml. As a result, when running FS shell

commands or DistCp against HDFS, you can but do not need to specify the hdfs:// URL

prefix:

hadoop distcp hdfs://source-folder s3a://destination-bucket

hadoop distcp /source-folder s3a://destination-bucket

When working with the cloud using cloud URIs do not change

the value of fs.defaultFS to use a cloud storage connector as the filesystem for

HDFS. This is not recommended or supported. Instead, when

working with data stored in S3, ADLS, or WASB, use a fully qualified URL for that connector.

To get started with your chosen cloud storage service, refer to: