Installing Spark Using Ambari

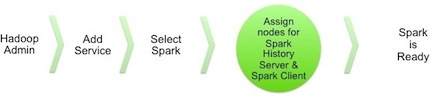

The following diagram shows the Spark installation process using Ambari. Before you install Spark using Ambari, refer to Adding a Service in the Ambari Operations Guide for background information about how to install Hortonworks Data Platform (HDP) components using Ambari.

Caution: During the installation process, Ambari creates and edits several configuration files. If you configure and manage your cluster using Ambari, do not edit these files during or after installation. Instead, use the Ambari web UI to revise configuration settings.

To install Spark using Ambari, complete the following steps.

Click the Ambari "Services" tab.

In the Ambari "Actions" menu, select "Add Service."

This starts the Add Service wizard, displaying the Choose Services page. Some of the services are enabled by default.
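Before starting the wizard, it can help to confirm which Spark services the cluster already runs. The following Python sketch assumes Ambari's REST API is reachable on the Ambari Server's default port 8080, and that the `/api/v1/clusters/<cluster>/services` endpoint returns items carrying a `ServiceInfo.service_name` field such as `SPARK` or `SPARK2`. The host name, cluster name, sample response, and helper functions are all hypothetical. The sketch only builds the URL and parses a response body, so you can pair it with any HTTP client.

```python
import json

# Assumption: Ambari Server listens on its default HTTP port.
AMBARI_PORT = 8080


def services_url(ambari_host: str, cluster: str, port: int = AMBARI_PORT) -> str:
    """Build the Ambari REST URL that lists the services in a cluster."""
    return f"http://{ambari_host}:{port}/api/v1/clusters/{cluster}/services"


def installed_services(response_body: str) -> set:
    """Return the service names found in a /services JSON response."""
    data = json.loads(response_body)
    return {item["ServiceInfo"]["service_name"] for item in data.get("items", [])}


def missing_spark_services(response_body: str, wanted=("SPARK", "SPARK2")) -> list:
    """List the requested Spark services that are not installed yet."""
    installed = installed_services(response_body)
    return [name for name in wanted if name not in installed]


# Hypothetical response body for a cluster that already runs HDFS and Spark2.
sample = json.dumps({"items": [
    {"ServiceInfo": {"service_name": "HDFS"}},
    {"ServiceInfo": {"service_name": "SPARK2"}},
]})
print(services_url("ambari.example.com", "mycluster"))
print(missing_spark_services(sample))  # ['SPARK']
```

In this example only `SPARK` would still need to be selected on the Choose Services page.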

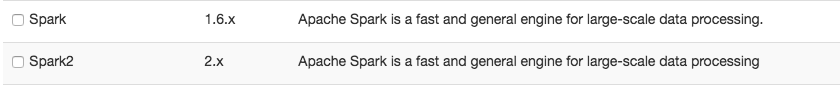

Scroll through the alphabetical list of services on the Choose Services page, and select "Spark", "Spark2", or both.

Click "Next" to continue.

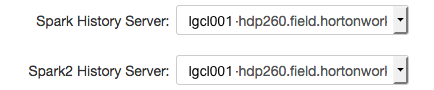

On the Assign Masters page, review the node assignment for the Spark History Server or Spark2 History Server, depending on which Spark versions you are installing. Modify the node assignment if desired, and click "Next":
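After deployment, the node you assign here serves the History Server web UI and its REST API. This Python sketch builds the REST API's `/api/v1/applications` URL for a chosen node. The port numbers are an assumption based on common HDP defaults (18080 for Spark, 18081 for Spark2); the authoritative value is the `spark.history.ui.port` property shown in Ambari for your cluster. The host name and helper are hypothetical.

```python
# Assumption: HDP default History Server ports; check spark.history.ui.port
# in Ambari for the values actually used on your cluster.
HISTORY_SERVER_PORTS = {"SPARK": 18080, "SPARK2": 18081}


def history_server_api(host: str, service: str = "SPARK2") -> str:
    """Return the applications endpoint of a Spark History Server REST API."""
    if service not in HISTORY_SERVER_PORTS:
        raise ValueError(f"unknown Spark service: {service!r}")
    port = HISTORY_SERVER_PORTS[service]
    return f"http://{host}:{port}/api/v1/applications"


# Hypothetical host chosen on the Assign Masters page.
print(history_server_api("master1.example.com"))
```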

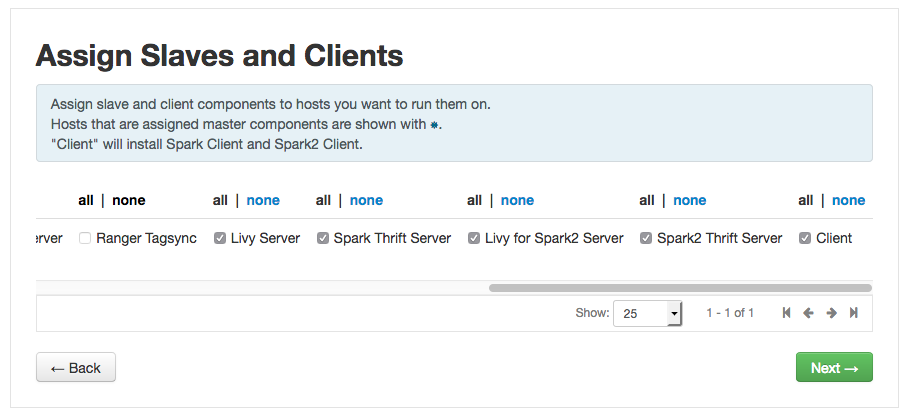

On the Assign Slaves and Clients page:

Scroll to the right and choose the "Client" nodes that you want to run Spark clients. These are the nodes from which Spark jobs can be submitted to YARN.
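From a client node, jobs are typically submitted to YARN with `spark-submit`. The Python sketch below builds such a command using the standard `--master yarn`, `--deploy-mode`, and `--queue` options. The application file, its arguments, and the queue name are hypothetical, and the helper function is illustrative rather than part of HDP.

```python
import shlex


def spark_submit_args(app, app_args=(), queue="default", deploy_mode="cluster"):
    """Build an argument list for submitting an application to YARN."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    return [
        "spark-submit",
        "--master", "yarn",
        "--deploy-mode", deploy_mode,
        "--queue", queue,
        app,
        *app_args,  # arguments passed through to the application itself
    ]


# Hypothetical application and queue names.
args = spark_submit_args("my_job.py", ["--date", "2017-01-01"], queue="analytics")
print(shlex.join(args))
# On a client node you could run it with subprocess.run(args, check=True).
```

Building an argument list, rather than a single shell string, avoids quoting problems if you later pass it to `subprocess.run`.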

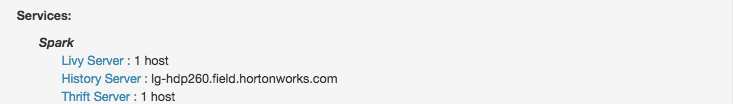

To install the optional Livy server, which provides security and user impersonation features, check the "Livy Server" box for the desired node on the Assign Slaves and Clients page, for each version of Spark you are deploying.
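User impersonation in Livy works through its REST API: a batch submitted with `POST /batches` can carry a `proxyUser` field naming the end user that the job should run as. The Python sketch below only builds the URL and JSON body for such a request. It assumes Livy's upstream default port 8998; the port that Ambari configures for your Livy server may differ. The jar path, class name, and user are hypothetical.

```python
import json

# Assumption: 8998 is Livy's upstream default port; the port Ambari
# configures for your Livy server may differ.
LIVY_PORT = 8998


def livy_batch_request(host, file, proxy_user, class_name=None, args=None, port=LIVY_PORT):
    """Return (url, json_body) for a Livy POST /batches submission."""
    payload = {"file": file, "proxyUser": proxy_user}  # proxyUser enables impersonation
    if class_name is not None:
        payload["className"] = class_name
    if args:
        payload["args"] = list(args)
    return f"http://{host}:{port}/batches", json.dumps(payload)


# Hypothetical jar path, main class, and end user to impersonate.
url, body = livy_batch_request(
    "livy1.example.com", "hdfs:///apps/wordcount.jar", "alice",
    class_name="com.example.WordCount", args=["/data/in"],
)
print(url)
print(body)
```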

To install the optional Spark Thrift server now for ODBC or JDBC access, review the Spark Thrift Server node assignments on the Assign Slaves and Clients page and assign one or two nodes to it, as needed for each version of Spark you are deploying. (To install the Thrift server later, see Installing the Spark Thrift Server after Deploying Spark.)

Deploying the Thrift server on multiple nodes increases its scalability. When choosing the number of nodes, consider the cluster capacity allocated to Spark.
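With more than one Thrift server node, clients benefit only if their connections are spread across the nodes. One simple client-side approach, sketched here in Python, cycles JDBC URLs round-robin; this is not a load balancer built into the Thrift server. The port numbers are an assumption based on common HDP defaults (10015 for Spark, 10016 for Spark2); the actual value is the `hive.server2.thrift.port` setting shown in Ambari. The node names are hypothetical.

```python
from itertools import cycle

# Assumption: HDP default Thrift server ports; confirm hive.server2.thrift.port
# in Ambari for your cluster.
THRIFT_PORTS = {"SPARK": 10015, "SPARK2": 10016}


def thrift_jdbc_url(host, service="SPARK2", database="default"):
    """Return a HiveServer2-style JDBC URL for one Spark Thrift server."""
    return f"jdbc:hive2://{host}:{THRIFT_PORTS[service]}/{database}"


def round_robin_urls(hosts, service="SPARK2"):
    """Cycle endlessly through the JDBC URLs of every Thrift server node."""
    return cycle([thrift_jdbc_url(h, service) for h in hosts])


# Hypothetical Thrift server nodes assigned in the wizard.
urls = round_robin_urls(["thrift1.example.com", "thrift2.example.com"])
for _ in range(3):
    print(next(urls))
```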

Click "Next" to continue.

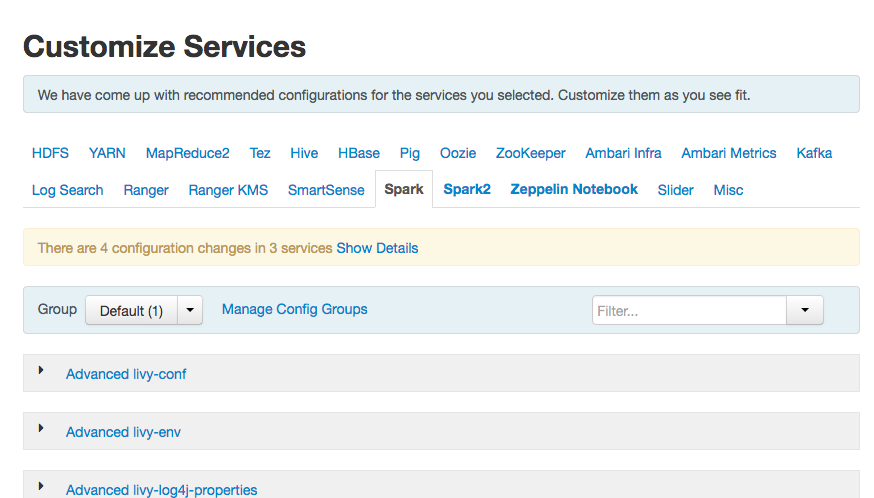

Unless you are installing the Spark Thrift server now, use the default values displayed on the Customize Services page. Note that there are two tabs: one for Spark settings and one for Spark2 settings.

If you are installing the Spark Thrift server at this time, complete the following steps:

Click the "Spark" or "Spark2" tab on the Customize Services page, depending on which version of Spark you are installing.

Navigate to the "Advanced spark-thrift-sparkconf" group (for Spark2, "Advanced spark2-thrift-sparkconf").

Set the `spark.yarn.queue` value to the name of the YARN queue that you want to use.

Click "Next" to continue.
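The queue setting above is a single property inside the Thrift server's configuration type. If you ever script this change instead of using the wizard, it should still go through Ambari (per the caution above), for example as a `desired_config` body sent with a PUT to `/api/v1/clusters/<cluster>`. This Python sketch builds such a body. Because Ambari stores each configuration version as a complete property set, the sketch copies the existing properties and changes only `spark.yarn.queue`. The config type names are an assumption matching the group names in the UI; the current properties, queue name, and version tag are hypothetical.

```python
# Assumption: config type names as they appear in Ambari for Spark and Spark2.
THRIFT_CONFIG_TYPES = {
    "SPARK": "spark-thrift-sparkconf",
    "SPARK2": "spark2-thrift-sparkconf",
}


def thrift_queue_config(existing, queue, tag, service="SPARK2"):
    """Return an Ambari desired_config body that sets spark.yarn.queue.

    Each configuration version is a complete property set, so the existing
    properties are copied and only the queue value is changed.
    """
    properties = dict(existing)  # copy so the caller's dict is untouched
    properties["spark.yarn.queue"] = queue
    return {
        "Clusters": {
            "desired_config": {
                "type": THRIFT_CONFIG_TYPES[service],
                "tag": tag,
                "properties": properties,
            }
        }
    }


# Hypothetical current properties, queue name, and version tag.
current = {"spark.yarn.queue": "default", "spark.executor.memory": "1g"}
body = thrift_queue_config(current, "thrift", tag="version2")
print(body["Clusters"]["desired_config"]["properties"])
```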

If Kerberos is enabled on the cluster, review the principal and keytab settings on the Configure Identities page, modify them if desired, and then click "Next".
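When reviewing principals, it helps to remember their general layout: `primary[/instance]@REALM`. Service principals often use the Hadoop `_HOST` placeholder as the instance, which is replaced with each node's fully qualified domain name at runtime, while headless principals have no instance part. This Python sketch splits a principal into its parts; the example principals and realm are hypothetical, not the exact values Ambari will propose for your cluster.

```python
import re

# primary[/instance]@REALM, the general Kerberos principal layout.
PRINCIPAL_RE = re.compile(r"^(?P<primary>[^/@]+)(?:/(?P<instance>[^/@]+))?@(?P<realm>[^/@]+)$")


def parse_principal(principal):
    """Split a principal into (primary, instance or None, realm)."""
    match = PRINCIPAL_RE.match(principal)
    if match is None:
        raise ValueError(f"not a valid principal: {principal!r}")
    return match.group("primary"), match.group("instance"), match.group("realm")


def uses_host_placeholder(principal):
    """True if the instance part is the Hadoop _HOST placeholder."""
    return parse_principal(principal)[1] == "_HOST"


# Hypothetical principals like those shown on the Configure Identities page.
print(parse_principal("livy/_HOST@EXAMPLE.COM"))       # ('livy', '_HOST', 'EXAMPLE.COM')
print(parse_principal("spark-mycluster@EXAMPLE.COM"))  # ('spark-mycluster', None, 'EXAMPLE.COM')
```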

When the wizard displays the Review page, ensure that all HDP components correspond to HDP 2.6.0 or later. Scroll down and check the node assignments for the selected services.
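The "2.6.0 or later" check has to compare version numbers numerically: compared as plain strings, "2.10" would sort before "2.6". This Python sketch compares versions in the form HDP uses (for example `2.6.0.3-8`), ignoring the build suffix after the hyphen. The sample version strings are illustrative, and the helpers are hypothetical.

```python
def parse_version(version):
    """Turn '2.6.0.3-8' into (2, 6, 0, 3); the build suffix after '-' is ignored."""
    core = version.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def meets_minimum(version, minimum="2.6.0"):
    """Compare numerically, padding the shorter version with zeros."""
    a, b = parse_version(version), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


for v in ["2.5.3.0-37", "2.6.0.3-8", "2.6.5.0-292"]:
    print(v, meets_minimum(v))  # False, then True, then True
```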

Click "Deploy" to begin installation.

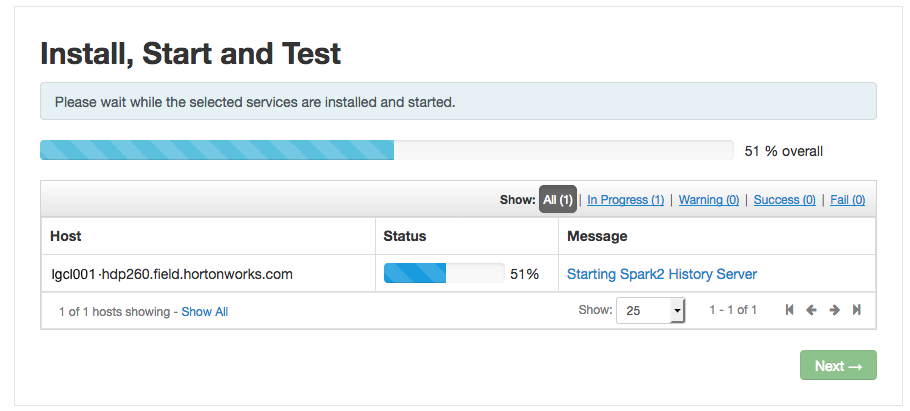

When Ambari displays the Install, Start and Test page, monitor the status bar and messages for progress updates.
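The wizard's status bar reflects an Ambari request. If you prefer to monitor from a script, Ambari reports each request at `/api/v1/clusters/<cluster>/requests/<id>` with a `Requests.request_status` value and a `progress_percent` value. This Python sketch classifies such a response. The set of terminal status values is an assumption based on the statuses Ambari commonly reports, and the sample response is hypothetical.

```python
import json

# Assumption: terminal status values reported by Ambari for a request.
SUCCEEDED = {"COMPLETED"}
FAILED = {"FAILED", "ABORTED", "TIMEDOUT"}


def request_state(response_body):
    """Classify an Ambari request as 'done', 'failed', or 'running'."""
    request = json.loads(response_body)["Requests"]
    status = request["request_status"]
    progress = float(request.get("progress_percent", 0.0))
    if status in SUCCEEDED:
        return "done", progress
    if status in FAILED:
        return "failed", progress
    return "running", progress  # e.g. PENDING or IN_PROGRESS


# Hypothetical response while the installation is still running.
body = json.dumps({"Requests": {"request_status": "IN_PROGRESS", "progress_percent": 42.5}})
print(request_state(body))  # ('running', 42.5)
```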

When the wizard presents a summary of results, click "Complete" to finish installing Spark.