Install Spark using Ambari

Use the following steps to install Apache Spark on an Ambari-managed cluster.

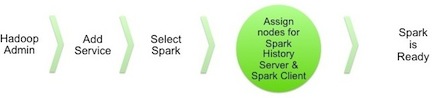

The following diagram shows the Spark installation process using Ambari. Before you install Spark using Ambari, refer to "Adding a Service" in the Ambari Managing and Monitoring a Cluster guide for background information about how to install Hortonworks Data Platform (HDP) components using Ambari.

| Caution | During the installation process, Ambari creates and edits several configuration files. If you configure and manage your cluster using Ambari, do not edit these files during or after installation. Instead, use the Ambari web UI to revise configuration settings. |
|---|---|
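
Because configuration files should not be edited by hand, one way to confirm that Spark was installed is a read-only query against the Ambari REST API. The following is a minimal sketch, not part of the documented procedure. It assumes the Ambari server listens on its default port 8080 over HTTP, that the service is registered under the name `SPARK`, and that the host name, cluster name, and credentials you pass in are your own. The function names are hypothetical helpers introduced for illustration.

```python
import base64
import json
import urllib.request


def service_url(ambari_host, cluster_name, service_name="SPARK", port=8080):
    """Build the Ambari REST URL for one service in one cluster.

    Assumption: plain HTTP on port 8080; adjust for HTTPS or a custom port.
    """
    return (
        f"http://{ambari_host}:{port}"
        f"/api/v1/clusters/{cluster_name}/services/{service_name}"
    )


def service_state(response_body):
    """Extract ServiceInfo.state from the JSON text of a service response."""
    return json.loads(response_body)["ServiceInfo"]["state"]


def fetch_service_state(ambari_host, cluster_name, user, password):
    """Send a read-only GET to Ambari and return the Spark service state."""
    request = urllib.request.Request(service_url(ambari_host, cluster_name))
    # Ambari accepts HTTP Basic authentication for API requests.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request) as response:
        return service_state(response.read().decode())
```

Calling `fetch_service_state` with a placeholder host such as `"ambari.example.com"`, your cluster name, and valid credentials returns a state string such as `INSTALLED` or `STARTED`. The request only reads state, so it does not conflict with the caution above about changing settings outside the Ambari web UI.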