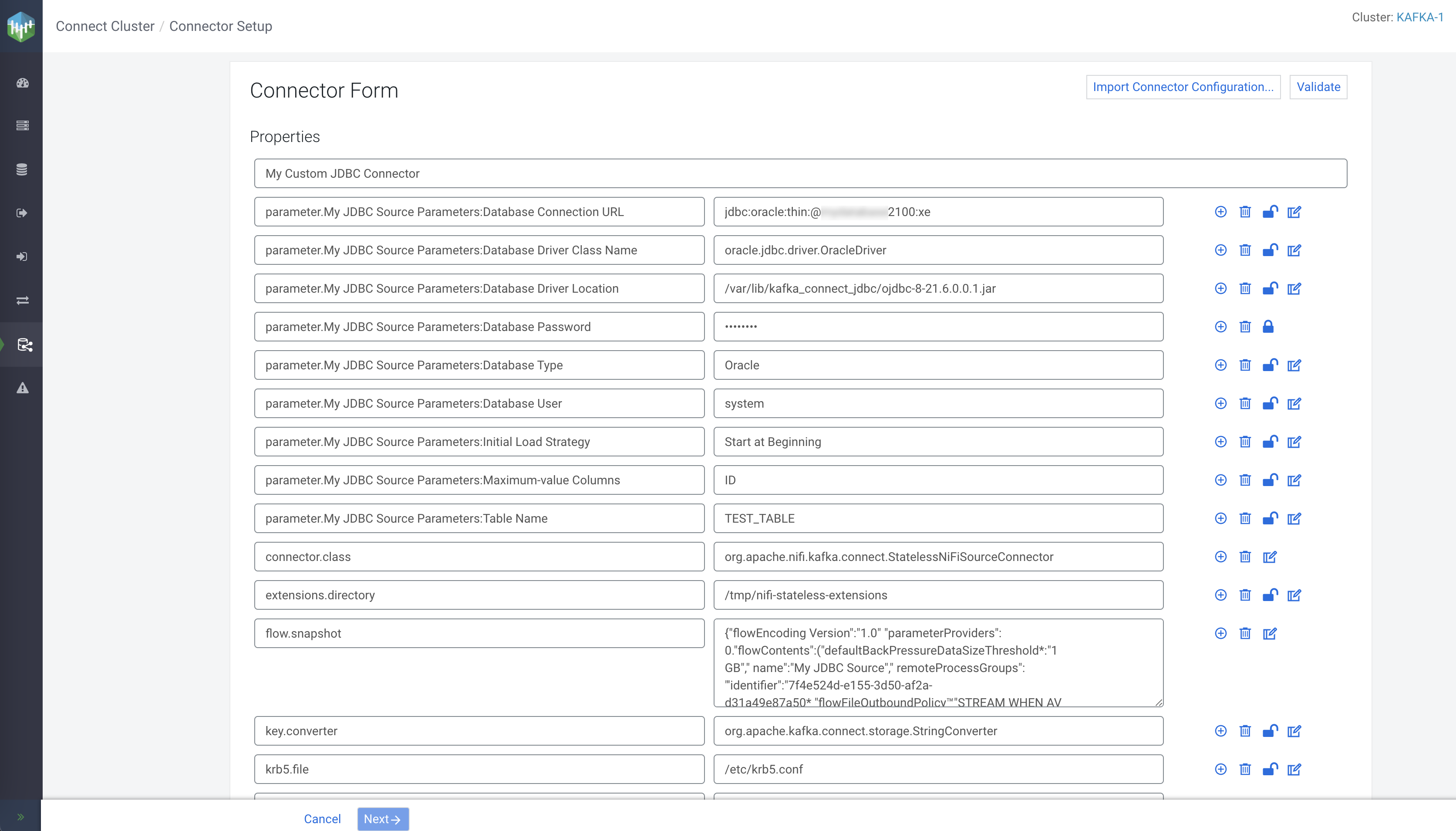

Tutorial: developing and deploying a JDBC Source dataflow in Kafka Connect using Stateless NiFi

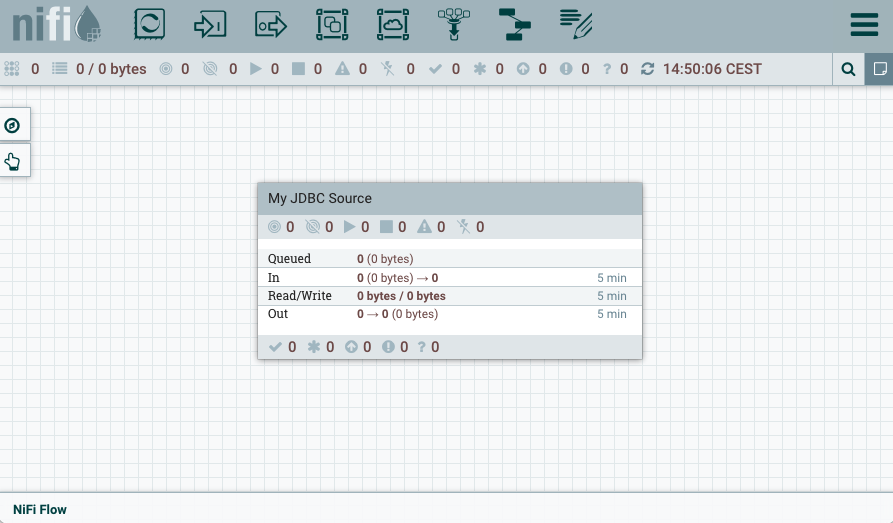

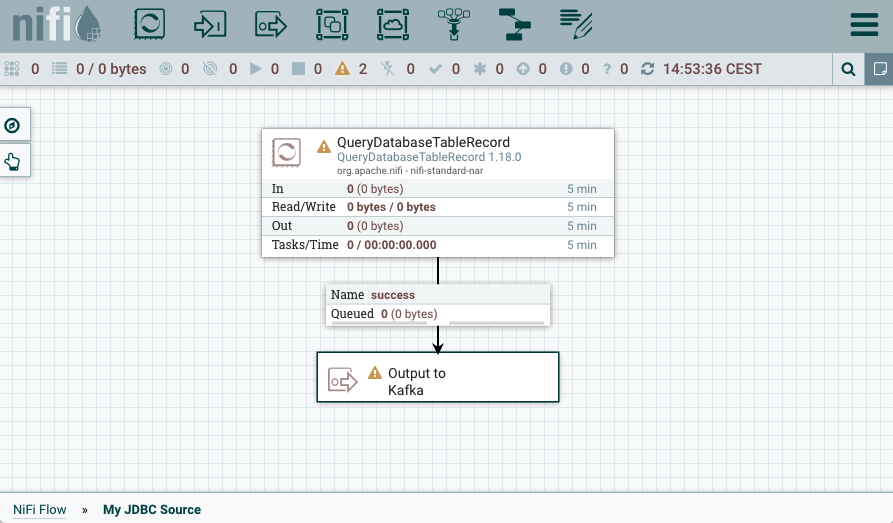

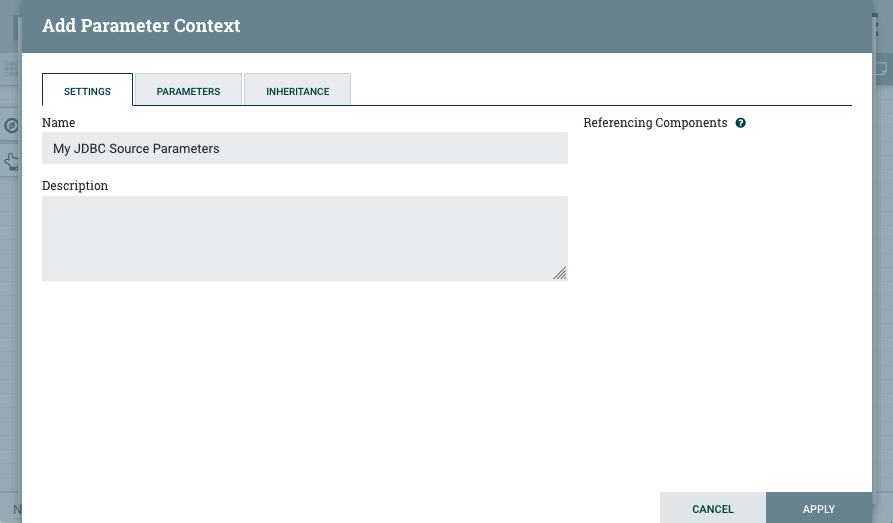

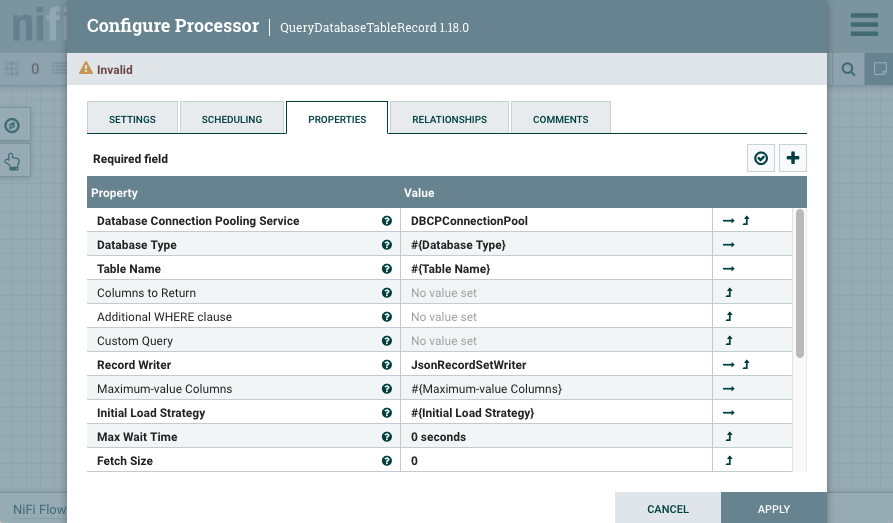

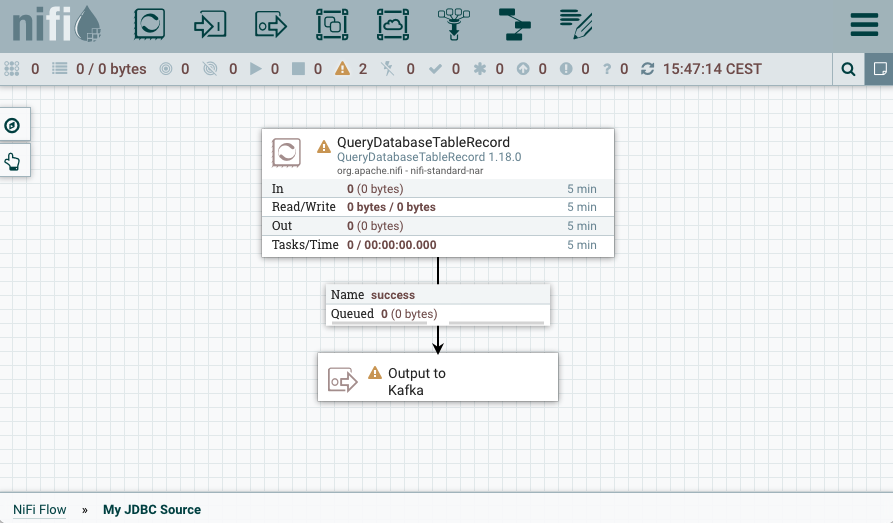

A step-by-step tutorial that walks you through creating a JDBC Source dataflow and deploying it as a Kafka Connect connector using the Stateless NiFi Source connector. The connector/dataflow presented in this tutorial reads records from an Oracle database table and forwards them to Kafka in JSON format.

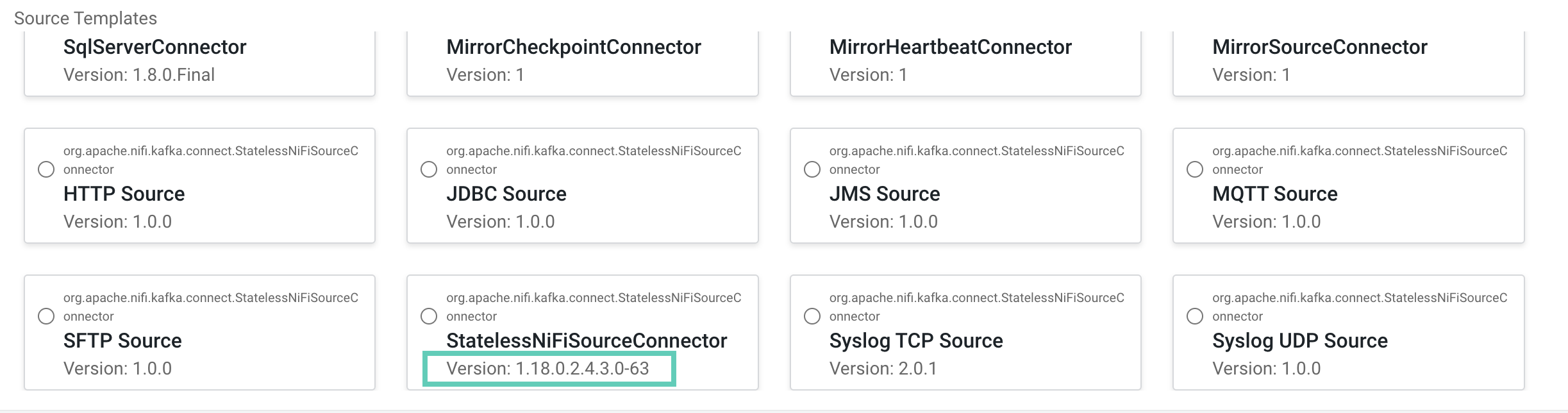

- Look up the Stateless NiFi plugin version either by using the Streams Messaging Manager (SMM) UI, or by logging into a Kafka Connect host and checking the Connect plugin directory.

  - Access the SMM UI, and click Connect in the navigation sidebar.

  - Click the New Connector option.

  - Locate the StatelessNiFiSourceConnector or StatelessNiFiSinkConnector cards. The version is located on the card.

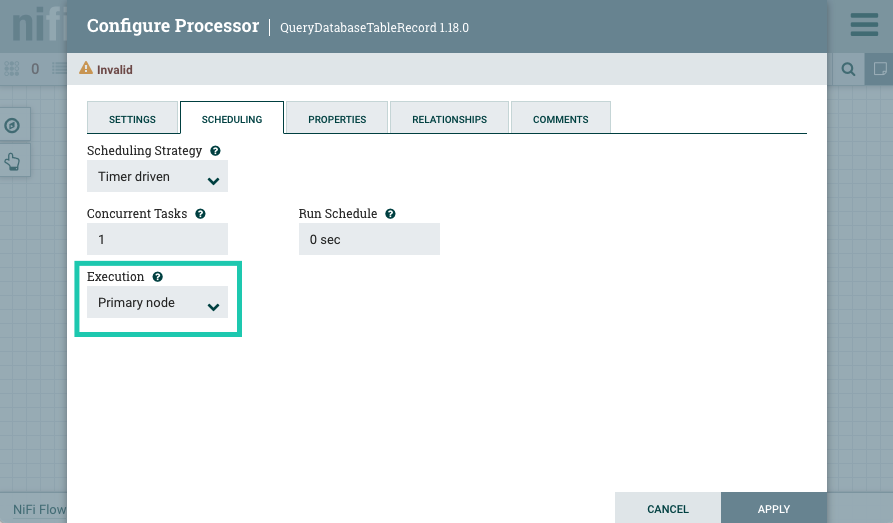

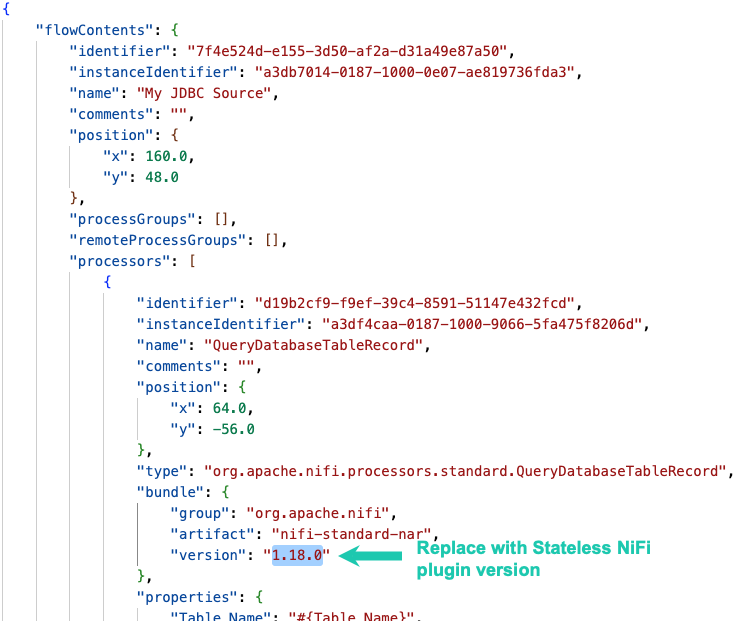

The version is made up of multiple digits. The first three represent the NiFi version. For example, if the version on the card is 1.18.0.2.4.3.0-63, then you should use NiFi 1.18.0 to build your flow.
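The version rule above can be sketched as a small shell helper. This is a minimal illustration rather than part of the product: the function name `nifi_version_from_plugin` is hypothetical, and it assumes that the plugin version always begins with three dot-separated NiFi version components, as in the example.

```shell
#!/bin/sh
# Hypothetical helper: print the NiFi version embedded in a
# Stateless NiFi plugin version string.
# Assumption: the first three dot-separated fields are the NiFi version.
nifi_version_from_plugin() {
    printf '%s\n' "$1" | cut -d. -f1-3
}

nifi_version_from_plugin "1.18.0.2.4.3.0-63"   # prints 1.18.0
```

The printed value is the NiFi release to download for building the flow.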

- Download and start NiFi. You can download NiFi from https://archive.apache.org/dist/nifi/. This example uses NiFi 1.18.0 (nifi-1.18.0-bin.zip).

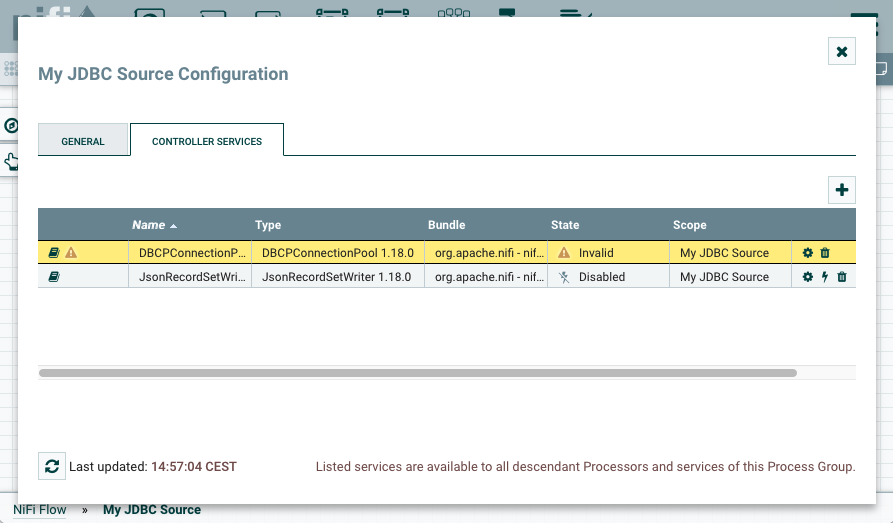

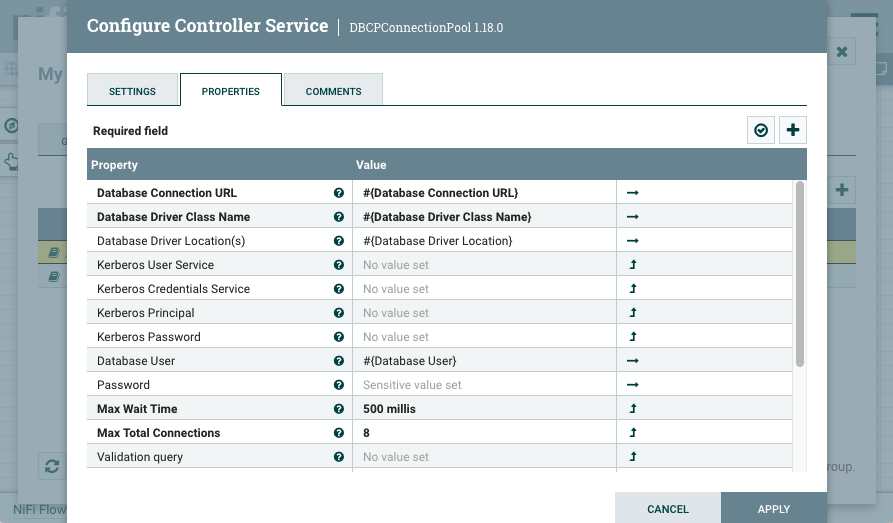

- The connector/dataflow developed in this tutorial requires the Oracle JDBC driver to function. Ensure that the driver JAR is deployed and available on every Kafka Connect host under the same path with correct file permissions. Note down the location where you deploy the driver; you will need to set the location as a property value during connector deployment. For example:

      cp ./ojdbc8-[***VERSION***].jar /var/lib/kafka_connect_jdbc/oracle-connector-java.jar
      chmod 644 /var/lib/kafka_connect_jdbc/oracle-connector-java.jar
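Because the driver must be present under the same path on every Kafka Connect host, it can help to verify the deployment before creating the connector. The following is a minimal sketch that assumes the example path used in the copy command above. The function name `check_driver_jar` is hypothetical, and it only checks that the file exists, is non-empty, and is readable by the user running the check, which may differ from the user that runs Kafka Connect.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the driver JAR is a regular,
# non-empty file that the current user can read.
check_driver_jar() {
    jar_path="$1"
    if [ -f "$jar_path" ] && [ -s "$jar_path" ] && [ -r "$jar_path" ]; then
        echo "OK: $jar_path"
    else
        echo "MISSING OR UNREADABLE: $jar_path" >&2
        return 1
    fi
}

# Example path taken from the copy command above.
# Run this on each Kafka Connect host.
check_driver_jar /var/lib/kafka_connect_jdbc/oracle-connector-java.jar || true
```

A non-zero return value on any host indicates that the driver still needs to be copied there or that its permissions need adjusting.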

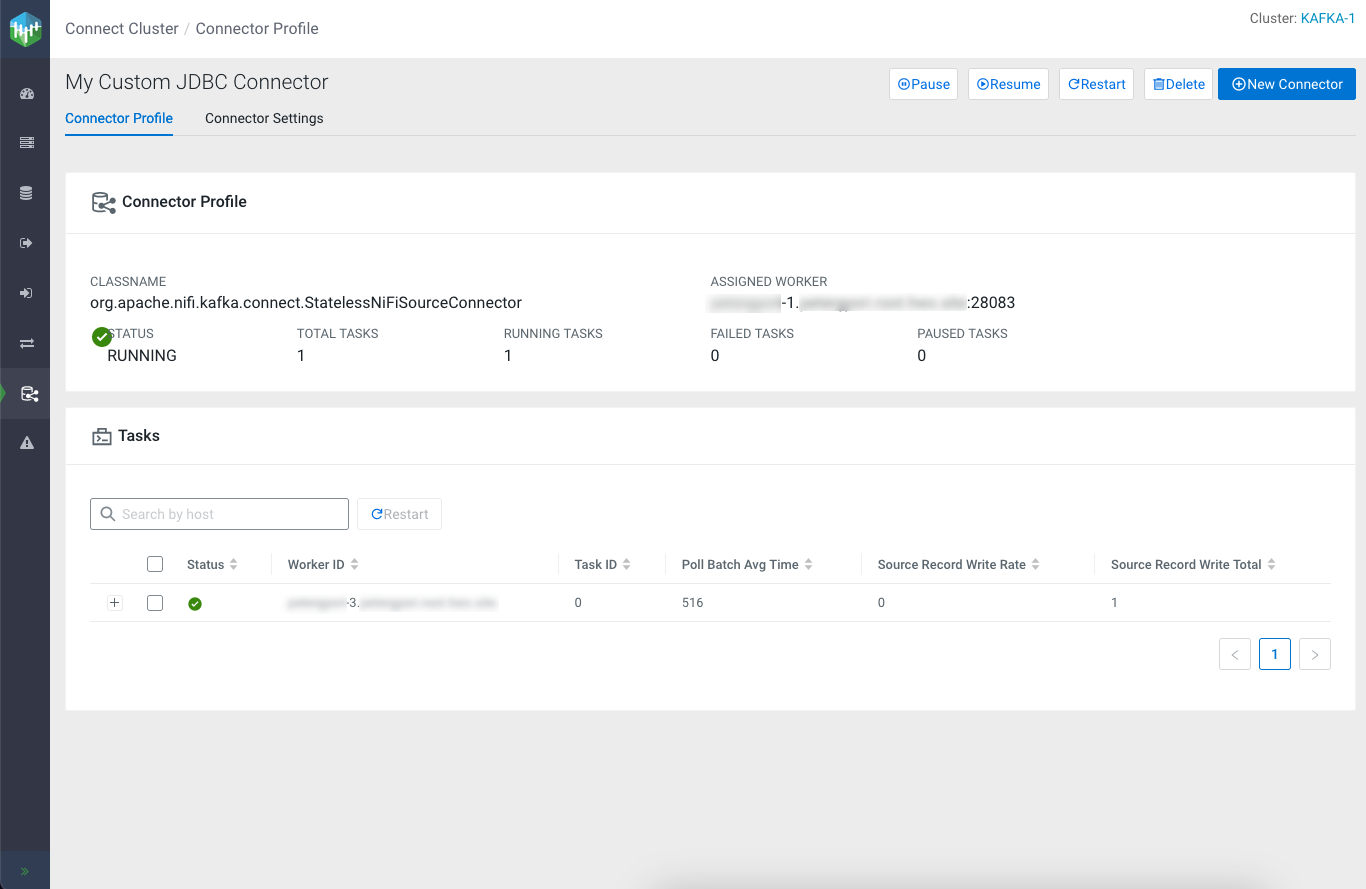

Connector Profile page of the connector. On this page you can view various details regarding the connector. Most importantly, you can view the connector's status and which workers the connector and its task were assigned to.

In this particular case, the connector was assigned to the worker running on cluster host 1, whereas the task was assigned to the worker running on cluster host 3. This means that dataflow log entries will be present in the Kafka Connect log file on host 3.

In addition to monitoring the connector, you can also check the contents of the topic that the connector is writing to. This can be done in SMM by navigating to the
