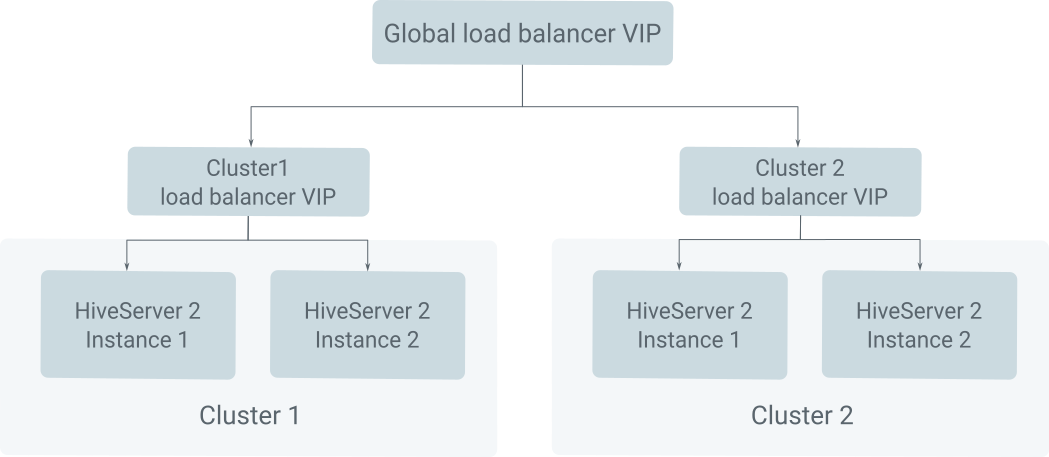

Achieving cross-cluster availability through Hive Load Balancer failover

Learn how you can manage user authentication in Hive using Kerberos, LDAP, and Knox with global and cluster-level load balancers. Also understand keytab management for multiple clusters and switching from one cluster to another using global load balancers during a primary cluster failure.

In Cloudera Manager, you can set up the Hive Load Balancer for each cluster either with an external load balancer or with ZooKeeper. When you configure an external load balancer, Cloudera Manager manages the backend configuration: it adds a Hive service principal for the load balancer hostname to the keytab file, in addition to the service principals for the individual HiveServer2 instance hostnames.

For seamless failover between two clusters, the Hive keytab on each cluster must include a service principal for the global load balancer hostname, in addition to the service principal for the cluster-level load balancer. The setup described below keeps Hive highly available and lets clients transition smoothly during a failover.

Load Balancer Details

Global Load Balancer: [***GLOBAL LOAD BALANCER VIP HOSTNAME***].example.com

Cluster1 Load Balancer: [***CLUSTER1 LOAD BALANCER VIP HOSTNAME***].example.com

Cluster2 Load Balancer: [***CLUSTER2 LOAD BALANCER VIP HOSTNAME***].example.com

Ensuring High Availability with Kerberos

- The Cluster 1 Hive service principal keytab should include:
  - hive/[***GLOBAL LOAD BALANCER VIP HOSTNAME***].example.com
  - hive/[***CLUSTER1 LOAD BALANCER VIP HOSTNAME***].example.com
  - hive/hive-[***HIVESERVER2 HOSTNAME***].example.com
- The Cluster 2 Hive service principal keytab should include:
  - hive/[***GLOBAL LOAD BALANCER VIP HOSTNAME***].example.com
  - hive/[***CLUSTER2 LOAD BALANCER VIP HOSTNAME***].example.com
  - hive/hive-[***HIVESERVER2 HOSTNAME***].example.com
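The keytab requirements above can be checked mechanically. The following is a minimal sketch, not a Cloudera tool: the function names and the hostnames in the usage example are hypothetical, and it assumes you have already collected the principal names from the keytab (for example, by reading the output of `klist -kt`).

```python
def expected_principals(global_lb, cluster_lb, hs2_hosts):
    """Return the Hive service principals a cluster's keytab should contain.

    The list covers the global load balancer, the cluster-level load
    balancer, and every individual HiveServer2 host, in that order.
    """
    hosts = [global_lb, cluster_lb, *hs2_hosts]
    return [f"hive/{host}" for host in hosts]


def missing_principals(expected, keytab_entries):
    """Return the expected principals that are absent from the keytab.

    Keytab entries usually carry a realm suffix (hive/host@REALM), so the
    realm is stripped before comparing.
    """
    present = {entry.split("@", 1)[0] for entry in keytab_entries}
    return [principal for principal in expected if principal not in present]


if __name__ == "__main__":
    # Hypothetical hostnames and realm, for illustration only.
    expected = expected_principals(
        "global-lb.example.com",
        "cluster1-lb.example.com",
        ["hive-node1.example.com"],
    )
    keytab = [
        "hive/cluster1-lb.example.com@EXAMPLE.COM",
        "hive/hive-node1.example.com@EXAMPLE.COM",
    ]
    # The global load balancer principal is missing in this sample keytab.
    print(missing_principals(expected, keytab))
```

Running the same check against each cluster with its own cluster-level load balancer hostname shows whether the global load balancer principal is present on both sides before you rely on failover.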

Enabling High Availability with LDAP

Kerberos authentication requires the cluster administrator to manage keytab files for service principals. However, users can authenticate by using LDAP as the authentication backend across both clusters without customizing keytab files.

To use LDAP, set AuthMech=3 in the JDBC connection string and authenticate with your LDAP user account. For example:

jdbc:hive2://<HS2 hostname>.example.com:10000/default;SSL=1;SSLTrustStore=<path>/cm-auto-global_truststore.jks;SSLTrustStorePwd=xxxx;AuthMech=3;UID=XXX;PWD=XXXX
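If you generate connection strings in scripts, a small helper keeps the LDAP settings consistent. This is a sketch only: the function name `ldap_jdbc_url` and the values in the usage example are hypothetical, and the setting names are the ones shown in the example URL above.

```python
def ldap_jdbc_url(host, truststore, truststore_pwd, uid, pwd,
                  port=10000, database="default"):
    """Build a Hive JDBC URL that authenticates with LDAP (AuthMech=3).

    The settings mirror the example connection string: SSL is enabled and
    the truststore location and password are passed explicitly.
    """
    settings = {
        "SSL": "1",
        "SSLTrustStore": truststore,
        "SSLTrustStorePwd": truststore_pwd,
        "AuthMech": "3",  # 3 selects user name and password (LDAP)
        "UID": uid,
        "PWD": pwd,
    }
    joined = ";".join(f"{key}={value}" for key, value in settings.items())
    return f"jdbc:hive2://{host}:{port}/{database};{joined}"


if __name__ == "__main__":
    # Hypothetical values; in practice read credentials from a secure
    # source instead of hard-coding them.
    print(ldap_jdbc_url(
        host="global-lb.example.com",
        truststore="/path/to/cm-auto-global_truststore.jks",
        truststore_pwd="changeit",
        uid="alice",
        pwd="secret",
    ))
```

Because LDAP authentication does not depend on the hostname in a service principal, the same helper can target either cluster or the global load balancer by changing only `host`.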

Enabling High Availability with Knox front end

jdbc:hive2://<knox-host>:8443/;ssl=true;transportMode=http;httpPath=gateway/cdp-proxy-api/hive;sslTrustStore=/<path to JKS>/bin/certs/gateway-client-trust.jks;
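A Knox connection string is easy to get subtly wrong because it relies on several settings at once. The sketch below splits a Hive JDBC URL into its settings and reports which of the settings shown in the example above are missing or different. The function names and the sample URL are hypothetical, and the check compares keys and values exactly, which is a simplification.

```python
def parse_hive_jdbc_url(url):
    """Split a Hive JDBC URL into its host part and a dict of settings."""
    prefix = "jdbc:hive2://"
    if not url.startswith(prefix):
        raise ValueError("not a Hive JDBC URL: " + url)
    # Everything before the first ';' is host:port/database.
    target, _, rest = url[len(prefix):].partition(";")
    settings = {}
    for part in rest.split(";"):
        if not part:
            continue  # tolerate a trailing ';'
        key, _, value = part.partition("=")
        settings[key] = value
    return target, settings


def knox_url_problems(url):
    """Return a list of problems found in a Knox-style Hive JDBC URL."""
    _, settings = parse_hive_jdbc_url(url)
    problems = []
    # Values taken from the example connection string.
    for key, wanted in {"ssl": "true", "transportMode": "http"}.items():
        if settings.get(key) != wanted:
            problems.append(f"{key} should be {wanted}")
    for key in ("httpPath", "sslTrustStore"):
        if not settings.get(key):
            problems.append(f"{key} is missing")
    return problems


if __name__ == "__main__":
    # Hypothetical host and truststore path.
    sample = (
        "jdbc:hive2://knox.example.com:8443/;ssl=true;transportMode=http;"
        "httpPath=gateway/cdp-proxy-api/hive;"
        "sslTrustStore=/opt/certs/gateway-client-trust.jks;"
    )
    print(knox_url_problems(sample))
```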