Creating jobs in Cloudera Data Engineering

A job in Cloudera Data Engineering consists of defined configurations and resources (including application code). Jobs can be run on demand or scheduled.

In Cloudera Data Engineering, jobs are associated with virtual clusters. Before you can create a job, you must create a virtual cluster that can run it. For more information, see Creating virtual clusters.

- In the Cloudera console, click the Data Engineering tile. The Cloudera Data Engineering Home page displays.

- In the left navigation menu, click Jobs. The Jobs page is displayed.

-

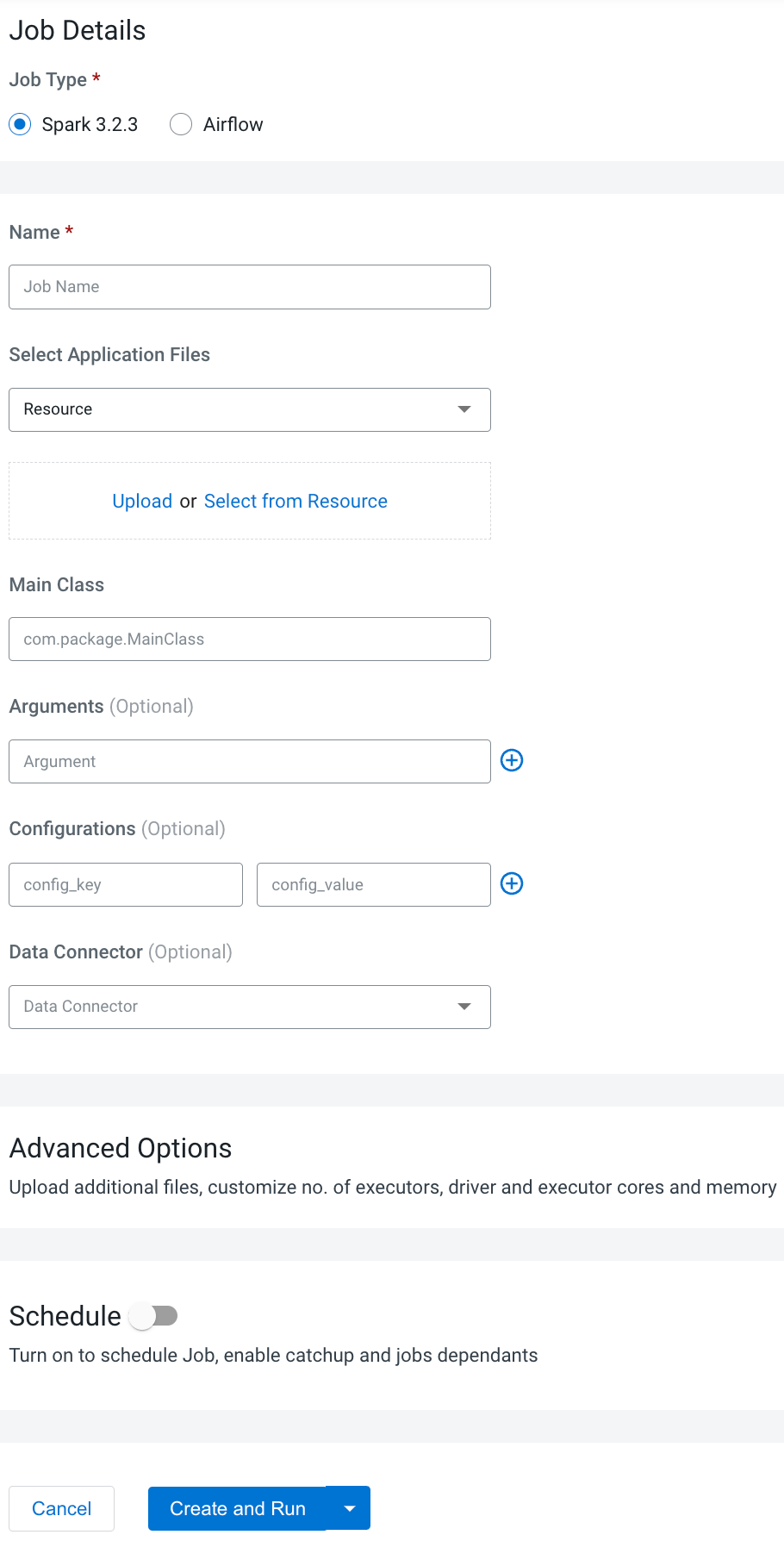

Click Create Job. The Job Details page is

displayed.

- Provide the Job Details:

- Select Spark for the job type. If you are creating the job from the Home page, select the virtual cluster where you want to create the job.

- Specify the Name.

- Select File or URL for your application file, and provide or specify the file. You can upload a new file or select a file from an existing resource. If you select the URL option and specify an Amazon S3 URL, add the following configuration to the job:

config_key: spark.hadoop.fs.s3a.delegation.token.binding

config_value: org.apache.knox.gateway.cloud.idbroker.s3a.IDBDelegationTokenBinding

- If your application code is a JAR file, specify the Main Class.

- Specify arguments if required. You can click the Add Argument button to add multiple command arguments as necessary.

- Enter configurations if needed. You can add multiple configuration parameters as key-value pairs in the text box as necessary.

- Optional: Select the name of the data connector from the Data Connector drop-down list. The UI displays the storage information that is internally overwritten.

- If your application code is a Python file, select the Python Version, and optionally select a Python Environment.

- Click Advanced Configurations to display more customizations, such as additional files, initial executors, executor range, driver and executor cores, and memory.

By default, the executor range is set to match the range of CPU cores configured for the virtual cluster. This improves resource utilization and efficiency by allowing jobs to scale up to the maximum virtual cluster resources available, without manually tuning and optimizing the number of executors per job.

GPU Acceleration (Technical Preview): You can accelerate your Spark jobs using GPUs. Select the Enable GPU Accelerations checkbox to enable GPU acceleration, and configure selectors and tolerations if you want to run the job on specific GPU nodes. When the job is created and run, it requests GPU resources.

- Click Schedule to display scheduling options. You can schedule the application to run periodically using the Basic controls or by specifying a Cron Expression.

- Click Alerts and provide the email address to receive alerts. Click + to add more email addresses. Optionally, you can select when you want to receive email alerts: for job failures, for missed job service-level agreements, or for both.

- If you provided a schedule, click Schedule to create the job. If you did not specify a schedule, and you do not want the job to run immediately, click the drop-down arrow on Create and Run and select Create. Otherwise, click Create and Run to run the job immediately.
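Two decisions in the steps above are easy to get wrong: adding the delegation token binding when the application file is an Amazon S3 URL, and choosing the button that creates the job. The following Python sketch models both, purely as an illustration. It does not call any Cloudera Data Engineering API. The function names `build_spark_conf` and `final_action` are hypothetical, and the set of URL schemes treated as S3 is an assumption. Only the configuration key and value come from the steps above.

```python
from urllib.parse import urlparse

# Key and value copied from the Amazon S3 URL step above.
S3_BINDING_KEY = "spark.hadoop.fs.s3a.delegation.token.binding"
S3_BINDING_VALUE = (
    "org.apache.knox.gateway.cloud.idbroker.s3a.IDBDelegationTokenBinding"
)

# Assumption: URLs with these schemes are treated as Amazon S3 locations.
S3_SCHEMES = {"s3", "s3a", "s3n"}


def build_spark_conf(application_file, extra_conf=None):
    """Return the job configuration as key-value pairs.

    Hypothetical helper: adds the delegation token binding when the
    application file is given as an S3 URL, and keeps any pairs the
    caller already supplied.
    """
    conf = dict(extra_conf or {})
    if urlparse(application_file).scheme.lower() in S3_SCHEMES:
        # setdefault keeps a value the caller set explicitly.
        conf.setdefault(S3_BINDING_KEY, S3_BINDING_VALUE)
    return conf


def final_action(has_schedule, run_immediately):
    """Mirror the last step: name the button that creates the job."""
    if has_schedule:
        return "Schedule"
    return "Create and Run" if run_immediately else "Create"
```

For example, `build_spark_conf("s3a://my-bucket/jobs/app.py")` returns a dictionary containing the binding pair, while an uploaded file name such as `app.py` leaves the configuration unchanged. The bucket and file names here are placeholders.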