Using GPUs for Cloudera Data Science Workbench Workloads

A GPU is a specialized processor that can accelerate highly parallelized, computationally intensive workloads. Because of their computational power, GPUs are particularly well suited to deep learning workloads. Ideally, CPUs and GPUs should be used in tandem for data engineering and data science workloads. A typical machine learning workflow involves data preparation, model training, model scoring, and model fitting. You can use existing general-purpose CPUs for each stage of the workflow, and optionally accelerate the math-intensive steps by selectively applying special-purpose GPUs. For example, GPUs allow you to accelerate model fitting using frameworks such as TensorFlow, PyTorch, Keras, MXNet, and Microsoft Cognitive Toolkit (CNTK).

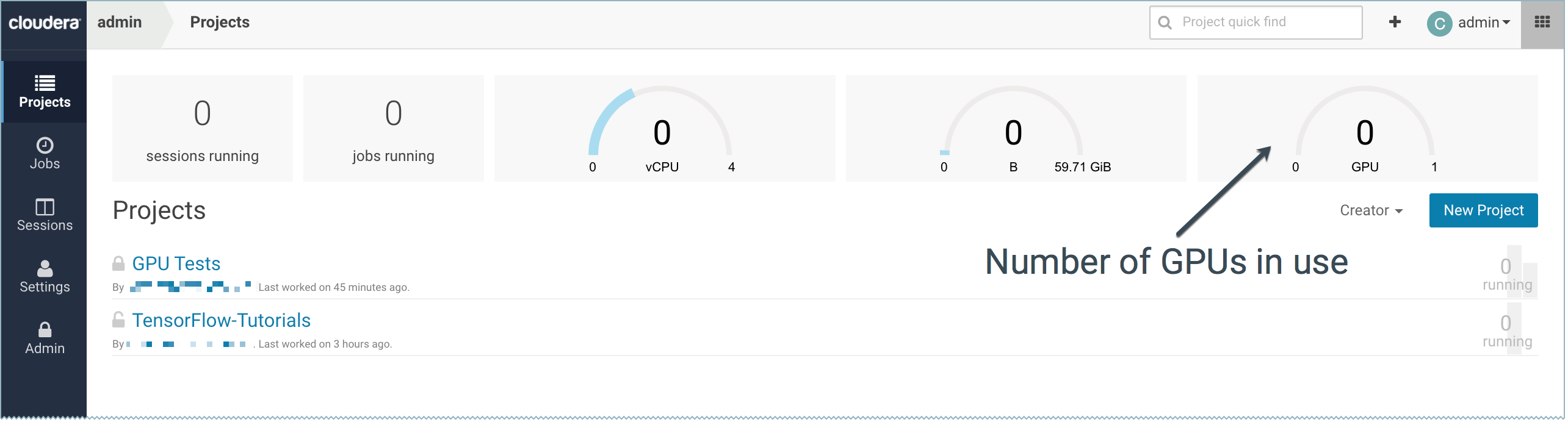

By enabling GPU support, data scientists can share GPU resources available on Cloudera Data Science Workbench nodes. Users can request a specific number of GPU instances, up to the total number available on a node, which are then allocated to the running session or job for the duration of the run. Projects can use isolated versions of libraries, and even different CUDA and cuDNN versions, via Cloudera Data Science Workbench's extensible engine feature.

Key Points to Note

- Cloudera Data Science Workbench only supports CUDA-enabled NVIDIA GPU cards.

- Cloudera Data Science Workbench does not support heterogeneous GPU hardware in a single deployment.

- Cloudera Data Science Workbench does not include an engine image that supports NVIDIA libraries. Create your own custom CUDA-capable engine image using the instructions described in this topic.

- Cloudera Data Science Workbench also does not install or configure the NVIDIA drivers on the Cloudera Data Science Workbench gateway nodes. These depend on your GPU hardware and will have to be installed by your system administrator. The steps provided in this topic are generic guidelines that will help you evaluate your setup.

- The instructions described in this topic require Internet access. If you have an airgapped deployment, you will be required to manually download and load the resources onto your nodes.

- For a list of known issues associated with this feature, refer to Known Issues - GPU Support.

Enabling Cloudera Data Science Workbench to use GPUs

To enable GPU usage on Cloudera Data Science Workbench, perform the following steps to provision the Cloudera Data Science Workbench gateway hosts and install Cloudera Data Science Workbench on these hosts. As noted in the following instructions, certain steps must be repeated on all gateway nodes that have GPU hardware installed on them.

Set Up the Operating System and Kernel

Use the following commands to update and reboot your system.

    sudo yum update -y
    sudo reboot

Install the Development Tools and kernel-devel packages.

    sudo yum groupinstall -y "Development tools"
    sudo yum install -y kernel-devel-`uname -r`

Perform these steps on all nodes with GPU hardware installed on them.

Install the NVIDIA Driver on GPU Nodes

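Download the NVIDIA driver installer that matches your GPU card, then run it. Replace <driver_version> with the version you downloaded.

    wget http://us.download.nvidia.com/.../NVIDIA-Linux-x86_64-<driver_version>.run
    export NVIDIA_DRIVER_VERSION=<driver_version>
    chmod 755 ./NVIDIA-Linux-x86_64-$NVIDIA_DRIVER_VERSION.run
    ./NVIDIA-Linux-x86_64-$NVIDIA_DRIVER_VERSION.run -asq

After the installer finishes, run the following command to verify that the driver is installed and can see the GPUs.

    /usr/bin/nvidia-smi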

Perform this step on all nodes with GPU hardware installed on them.
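When the driver has to be checked on several GPU nodes, the nvidia-smi check can be scripted. The following is a minimal Python 3 sketch, not part of Cloudera Data Science Workbench: the function names parse_gpu_query and query_gpus are hypothetical, and the script assumes nvidia-smi is on the PATH and supports the --query-gpu and --format=csv,noheader options.

```python
import shutil
import subprocess


def parse_gpu_query(csv_text):
    """Parse 'name, driver_version' CSV lines into a list of dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        # Split on the first comma only: "<gpu name>, <driver version>".
        name, driver = [field.strip() for field in line.split(",", 1)]
        gpus.append({"name": name, "driver_version": driver})
    return gpus


def query_gpus():
    """Return the GPUs reported by nvidia-smi, or [] if it cannot be run."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version",
         "--format=csv,noheader"],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_query(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No GPUs reported; check the driver installation.")
    for gpu in gpus:
        print(gpu["name"], gpu["driver_version"])
```

An empty result on a node that has GPU hardware suggests that the driver installation did not complete on that node.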

Enable Docker NVIDIA Volumes on GPU Nodes

To enable Docker containers to use the GPUs, the previously installed NVIDIA driver libraries must be consolidated in a single directory named after the <driver_version>, and mounted into the containers. This is done using the nvidia-docker package, which is a thin wrapper around the Docker CLI and a Docker plugin.

- Download and install nvidia-docker. Use a version that is suitable for your deployment.

    wget https://github.com/NVIDIA/nvidia-docker/releases/download/v1.0.1/nvidia-docker-1.0.1-1.x86_64.rpm
    sudo yum install -y nvidia-docker-1.0.1-1.x86_64.rpm

- Start the necessary services and plugins:

    systemctl start nvidia-docker
    systemctl enable nvidia-docker

- Run a small container to create the Docker volume structure:

sudo nvidia-docker run --rm nvidia/cuda:8.0 nvidia-smi

- Verify that the /var/lib/nvidia-docker/volumes/nvidia_driver/$NVIDIA_DRIVER_VERSION/ directory was created.

- Use the following Docker command to verify that Cloudera Data Science Workbench can access the GPU.

    sudo docker run --net host \
        --device=/dev/nvidiactl \
        --device=/dev/nvidia-uvm \
        --device=/dev/nvidia0 \
        -v /var/lib/nvidia-docker/volumes/nvidia_driver/$NVIDIA_DRIVER_VERSION/:/usr/local/nvidia/ \
        -it nvidia/cuda:8.0 \
        /usr/local/nvidia/bin/nvidia-smi

  On a multi-GPU machine, the output of this command will show exactly one GPU. This is because the sample Docker container was run with only one device (/dev/nvidia0).
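To repeat the verification with more than one GPU, each additional device has to be passed with its own --device flag. The following Python 3 sketch only assembles the command line. The helper name docker_gpu_test_command is hypothetical, and the sketch assumes that additional GPUs appear as /dev/nvidia1, /dev/nvidia2, and so on, and that NVIDIA_DRIVER_VERSION is exported as in the earlier step.

```python
import os
import shlex

# Directory created by nvidia-docker in the previous steps.
DRIVER_VOLUME_ROOT = "/var/lib/nvidia-docker/volumes/nvidia_driver"


def docker_gpu_test_command(driver_version, gpu_indices=(0,),
                            image="nvidia/cuda:8.0"):
    """Build the 'docker run' argument list for the given GPU indices."""
    volume = "{}/{}/".format(DRIVER_VOLUME_ROOT, driver_version)
    cmd = ["sudo", "docker", "run", "--net", "host",
           "--device=/dev/nvidiactl", "--device=/dev/nvidia-uvm"]
    # One --device flag per GPU that the container should see.
    cmd += ["--device=/dev/nvidia{}".format(i) for i in gpu_indices]
    cmd += ["-v", "{}:/usr/local/nvidia/".format(volume),
            "-it", image, "/usr/local/nvidia/bin/nvidia-smi"]
    return cmd


if __name__ == "__main__":
    # Falls back to the placeholder if the variable is not exported.
    version = os.environ.get("NVIDIA_DRIVER_VERSION", "<driver_version>")
    command = docker_gpu_test_command(version, gpu_indices=(0, 1))
    print(" ".join(shlex.quote(arg) for arg in command))
```

The script prints the command instead of running it, so it can be reviewed before it is executed on a GPU node.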

Enable GPU Support in Cloudera Data Science Workbench

Minimum Required Cloudera Manager Role: Cluster Administrator

Depending on your deployment, use one of the following sets of steps to enable Cloudera Data Science Workbench to identify the GPUs installed:

CSD Deployments

- Go to the CDSW service in Cloudera Manager. Click Configuration. Set the following parameters as directed in the following table.

Enable GPU Support

Use the checkbox to enable GPU support for Cloudera Data Science Workbench workloads. When this property is enabled on a node that is equipped with GPU hardware, the GPU(s) will be available for use by Cloudera Data Science Workbench.

NVIDIA GPU Driver Library Path

Complete path to the NVIDIA driver libraries. In this example, the path is /var/lib/nvidia-docker/volumes/nvidia_driver/$NVIDIA_DRIVER_VERSION/

- Restart the CDSW service in Cloudera Manager.

- Test whether Cloudera Data Science Workbench is detecting GPUs.

RPM Deployments

- Set the following parameters in /etc/cdsw/config/cdsw.conf on all Cloudera Data Science Workbench nodes. You must make sure that cdsw.conf is consistent across all nodes, irrespective of whether they have GPU hardware installed on them.

NVIDIA_GPU_ENABLE

Set this property to true to enable GPU support for Cloudera Data Science Workbench workloads. When this property is enabled on a node that is equipped with GPU hardware, the GPU(s) will be available for use by Cloudera Data Science Workbench.

NVIDIA_LIBRARY_PATH

Complete path to the NVIDIA driver libraries. In this example, the path is "/var/lib/nvidia-docker/volumes/nvidia_driver/$NVIDIA_DRIVER_VERSION/"

- On the master node, run the following command to restart Cloudera Data Science Workbench.

cdsw restart

If you modified cdsw.conf on a worker node, run the following commands to make sure the changes go into effect:

    cdsw reset
    cdsw join

- Use the following section to test whether Cloudera Data Science Workbench can now detect GPUs.
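Because cdsw.conf must be consistent across all nodes, it can help to check the two GPU properties on each node before restarting. The following Python 3 sketch is illustrative only. It assumes that cdsw.conf uses shell-style KEY=VALUE lines, and the function names parse_conf and gpu_config_problems are hypothetical.

```python
CONF_PATH = "/etc/cdsw/config/cdsw.conf"


def parse_conf(text):
    """Parse shell-style KEY=VALUE lines, ignoring comments and blanks."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop surrounding quotes, if any, from the value.
        settings[key.strip()] = value.strip().strip('"').strip("'")
    return settings


def gpu_config_problems(settings):
    """Return a list of problems with the GPU-related properties."""
    problems = []
    if settings.get("NVIDIA_GPU_ENABLE", "").lower() != "true":
        problems.append("NVIDIA_GPU_ENABLE is not set to true")
    if not settings.get("NVIDIA_LIBRARY_PATH"):
        problems.append("NVIDIA_LIBRARY_PATH is empty or missing")
    return problems


if __name__ == "__main__":
    with open(CONF_PATH) as conf_file:
        found = gpu_config_problems(parse_conf(conf_file.read()))
    print("\n".join(found) if found else "GPU properties look complete.")
```

The sketch checks only that the properties are present. It does not verify that the library path exists on the node.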

Test whether Cloudera Data Science Workbench can detect GPUs

Once Cloudera Data Science Workbench has successfully restarted, it can detect the GPUs available on its hosts, provided that the NVIDIA drivers have been installed on those hosts. Run the following command; its output should indicate whether nodes with GPUs are present.

cdsw status

Create a Custom CUDA-capable Engine Image

The base Ubuntu 16.04 engine image (docker.repository.cloudera.com/cdsw/engine:2) that ships with Cloudera Data Science Workbench will need to be extended with CUDA libraries to make it possible to use GPUs in jobs and sessions.

The following sample Dockerfile illustrates an engine on top of which machine learning frameworks such as TensorFlow and PyTorch can be used. This Dockerfile uses a deep learning library from NVIDIA called NVIDIA CUDA Deep Neural Network (cuDNN). Check the documentation of the machine learning framework you intend to use to find out which version of cuDNN it requires. As an example, TensorFlow uses CUDA 8.0 and requires cuDNN 6.0.

The following sample Dockerfile uses NVIDIA's official Dockerfiles for CUDA and cuDNN images.

cuda.Dockerfile

    FROM docker.repository.cloudera.com/cdsw/engine:2

    RUN NVIDIA_GPGKEY_SUM=d1be581509378368edeec8c1eb2958702feedf3bc3d17011adbf24efacce4ab5 && \
        NVIDIA_GPGKEY_FPR=ae09fe4bbd223a84b2ccfce3f60f4b3d7fa2af80 && \
        apt-key adv --fetch-keys http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/7fa2af80.pub && \
        apt-key adv --export --no-emit-version -a $NVIDIA_GPGKEY_FPR | tail -n +5 > cudasign.pub && \
        echo "$NVIDIA_GPGKEY_SUM cudasign.pub" | sha256sum -c --strict - && rm cudasign.pub && \
        echo "deb http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64 /" > /etc/apt/sources.list.d/cuda.list

    ENV CUDA_VERSION 8.0.61
    LABEL com.nvidia.cuda.version="${CUDA_VERSION}"

    ENV CUDA_PKG_VERSION 8-0=$CUDA_VERSION-1
    RUN apt-get update && apt-get install -y --no-install-recommends \
        cuda-nvrtc-$CUDA_PKG_VERSION \
        cuda-nvgraph-$CUDA_PKG_VERSION \
        cuda-cusolver-$CUDA_PKG_VERSION \
        cuda-cublas-8-0=8.0.61.2-1 \
        cuda-cufft-$CUDA_PKG_VERSION \
        cuda-curand-$CUDA_PKG_VERSION \
        cuda-cusparse-$CUDA_PKG_VERSION \
        cuda-npp-$CUDA_PKG_VERSION \
        cuda-cudart-$CUDA_PKG_VERSION && \
        ln -s cuda-8.0 /usr/local/cuda && \
        rm -rf /var/lib/apt/lists/*

    RUN echo "/usr/local/cuda/lib64" >> /etc/ld.so.conf.d/cuda.conf && \
        ldconfig

    RUN echo "/usr/local/nvidia/lib" >> /etc/ld.so.conf.d/nvidia.conf && \
        echo "/usr/local/nvidia/lib64" >> /etc/ld.so.conf.d/nvidia.conf

    ENV PATH /usr/local/nvidia/bin:/usr/local/cuda/bin:${PATH}
    ENV LD_LIBRARY_PATH /usr/local/nvidia/lib:/usr/local/nvidia/lib64

    RUN echo "deb http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1604/x86_64 /" > /etc/apt/sources.list.d/nvidia-ml.list

    ENV CUDNN_VERSION 6.0.21
    LABEL com.nvidia.cudnn.version="${CUDNN_VERSION}"

    RUN apt-get update && apt-get install -y --no-install-recommends \
        libcudnn6=$CUDNN_VERSION-1+cuda8.0 && \
        rm -rf /var/lib/apt/lists/*

Use the following command to build a custom engine image out of cuda.Dockerfile:

    docker build --network host -t <company-registry>/cdsw-cuda:2 . -f cuda.Dockerfile

Push this new engine image to a public Docker registry so that it can be made available for Cloudera Data Science Workbench workloads. For example:

docker push <company-registry>/cdsw-cuda:2
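Before handing the image to users, it may be worth confirming from inside a container that the dynamic loader can find the CUDA and cuDNN libraries installed by the Dockerfile. The following Python 3 sketch uses only the standard ctypes module. The function names are hypothetical, and the version decoding assumes the major*1000 + minor*100 + patch encoding used by the cuDNN 6 generation, so it would need adjusting for releases that encode the version differently.

```python
import ctypes
import ctypes.util


def find_gpu_libraries(names=("cudart", "cublas", "cudnn")):
    """Map each library name to what the loader finds, or None."""
    return {name: ctypes.util.find_library(name) for name in names}


def decode_cudnn_version(version_number):
    """Split a cuDNN version integer such as 6021 into (6, 0, 21)."""
    major, rest = divmod(version_number, 1000)
    minor, patch = divmod(rest, 100)
    return major, minor, patch


def cudnn_version():
    """Return the cuDNN version as a tuple, or None if it is not found."""
    library = ctypes.util.find_library("cudnn")
    if library is None:
        return None
    lib = ctypes.CDLL(library)
    # cudnnGetVersion() takes no arguments and returns a size_t.
    lib.cudnnGetVersion.restype = ctypes.c_size_t
    return decode_cudnn_version(lib.cudnnGetVersion())


if __name__ == "__main__":
    for name, location in find_gpu_libraries().items():
        print(name, "->", location or "not found")
    print("cuDNN version:", cudnn_version())
```

A library reported as not found usually points to a missing package or a missing ldconfig entry in the image.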

Allocate GPUs for Sessions and Jobs

- Sign in to Cloudera Data Science Workbench as a site administrator.

- Click Admin.

- Go to the Engines tab.

- From the Maximum GPUs per Session/Job dropdown, select the maximum number of GPUs that can be used by an engine.

- Under Engine Images, add the custom CUDA-capable engine image created in the previous step. This whitelists the image and allows project administrators to use the engine in their jobs and sessions.

- Click Update.

- Navigate to the project's Overview page.

- Click Settings.

- Go to the Engines tab.

- Under Engine Image, select the CUDA-capable engine image from the dropdown.

Example: TensorFlow

This example walks you through a simple TensorFlow workload that uses GPUs.

- Open the workbench console and start a Python engine.

- Install TensorFlow.

  Python 2:

      !pip install tensorflow-gpu

  Python 3:

      !pip3 install tensorflow-gpu

- Restart the session. This requirement of a session restart after installation is a known issue specific to TensorFlow.

- Create a new file with the following sample code. The code first performs a multiplication operation and prints the session output, which should mention the GPU that was used for the computation. The latter half of the example prints a list of all available GPUs for this engine.

      import tensorflow as tf
      from tensorflow.python.client import device_lib

      a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')
      b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')
      c = tf.matmul(a, b)

      # Creates a session with log_device_placement set to True.
      sess = tf.Session(config=tf.ConfigProto(log_device_placement=True))

      # Runs the operation.
      print(sess.run(c))

      # Prints a list of GPUs available.
      def get_available_gpus():
          local_device_protos = device_lib.list_local_devices()
          return [x.name for x in local_device_protos if x.device_type == 'GPU']

      # The parenthesized form works on both Python 2 and Python 3.
      print(get_available_gpus())
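The sample above uses the TensorFlow 1.x session API. As a hedged alternative for engines that carry a newer TensorFlow release, the following sketch lists the visible GPUs with tf.config.list_physical_devices when that function is available, and falls back to device_lib otherwise. The function name list_gpus is hypothetical, and the sketch returns an empty list if TensorFlow is not installed.

```python
def list_gpus():
    """Return the names of the GPUs TensorFlow can see, or []."""
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in this engine.
        return []
    config = getattr(tf, "config", None)
    if config is not None and hasattr(config, "list_physical_devices"):
        # Newer releases expose the device list directly.
        return [device.name for device in config.list_physical_devices("GPU")]
    # Older releases: same approach as the sample above.
    from tensorflow.python.client import device_lib
    return [device.name for device in device_lib.list_local_devices()
            if device.device_type == "GPU"]


if __name__ == "__main__":
    print(list_gpus())
```

An empty list in a session that was allocated a GPU suggests that the engine image or the installed TensorFlow package does not match the CUDA and cuDNN versions in use.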