Generating synthetic data for a ticketing use case using the Supervised Fine-Tuning workflow

Follow the steps below to generate synthetic data for a ticketing use case using the Supervised Fine-Tuning workflow in Synthetic Data Studio.

- In the Cloudera console, click the Cloudera AI tile.

The Cloudera AI Workbenches page displays.

- Click on the name of the workbench.

The workbench's Home page displays.

- Click AI Studios.

- Launch the Synthetic Data Studio.

- Under AI Studios, click Synthetic Data Studio, and then click Get Started.

- Under Create Datasets, click Getting Started. The Synthetic Dataset Studio page is displayed.

-

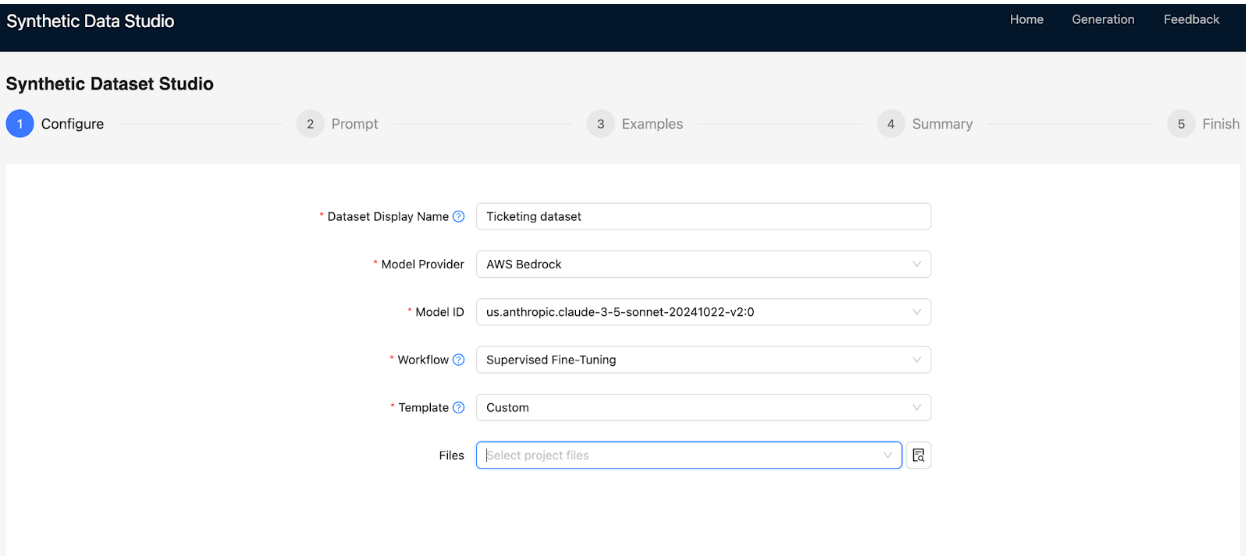

In the Configure tab, specify the following:

- In Dataset Display Name, enter

Ticketing dataset. - In Model Provider, select AWS Bedrock.

- In Model ID, enter

us.anthropic.claude-3-5-sonnet-20241022-v2:0. - In Workflow, select Supervised Fine-Tuning.

- In Template, select

Custom.
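The Configure settings above can also be written down as a small data structure, which is convenient for keeping a record of what was used for a given run. This is a minimal sketch only: the `DatasetConfig` class and its field names are hypothetical and are not part of Synthetic Data Studio; the values are the ones listed above.

```python
from dataclasses import dataclass, asdict


# Hypothetical record of the Configure tab settings; this is not a Studio API.
@dataclass(frozen=True)
class DatasetConfig:
    display_name: str
    model_provider: str
    model_id: str
    workflow: str
    template: str


config = DatasetConfig(
    display_name="Ticketing dataset",
    model_provider="AWS Bedrock",
    model_id="us.anthropic.claude-3-5-sonnet-20241022-v2:0",
    workflow="Supervised Fine-Tuning",
    template="Custom",
)

# Print the settings so they can be compared with what the Summary tab shows.
for field_name, value in asdict(config).items():
    print(f"{field_name}: {value}")
```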

-

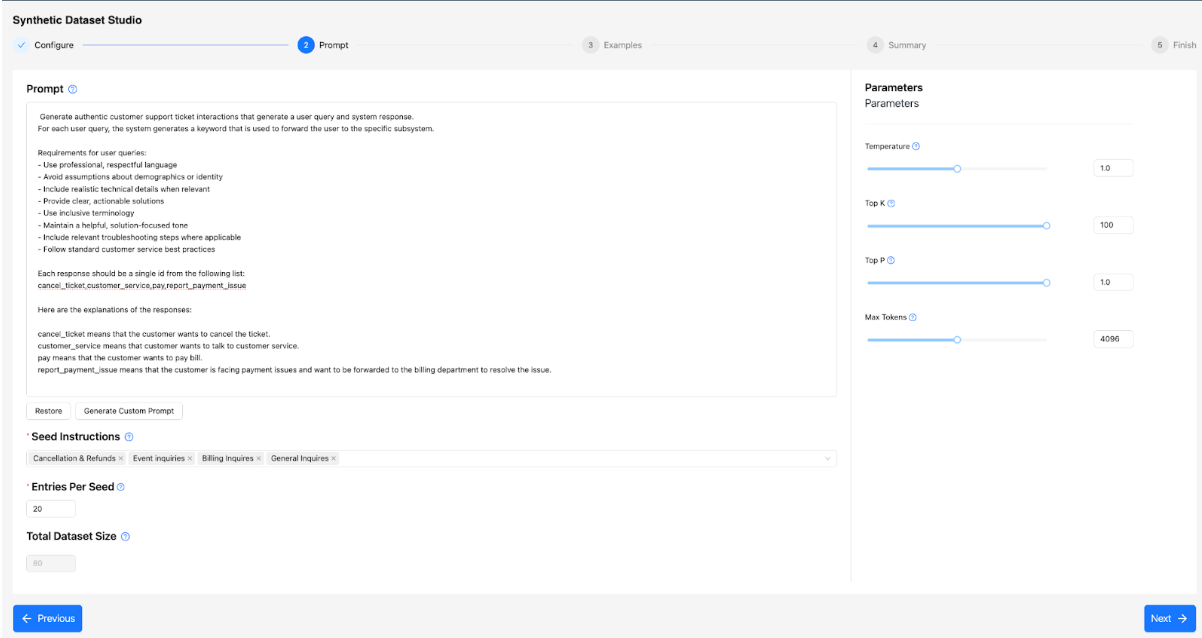

In the Prompt tab, specify the following:

- In Prompt, write a prompt that instructs the LLM to create both

user queries and system responses. The workflow generates both the user prompt and the

completion in a single step.Example prompt: The following example instructs the LLM by giving general guidelines on creating a prompt and a completion. Then, give a list of requirements for the data, such as the use of respectful language, the level of detail, and so on. Finally, explains the possible choices of completion and how the system will create the user prompts (queries) along with the system completions (system response).

Generate authentic customer support ticket interactions with a user query and system response. For each user query, the system generates a keyword used to forward the user to the appropriate subsystem. Requirements for user queries: - Use professional, respectful language. - Avoid assumptions about demographics or identity. - Include realistic technical details when relevant. - Provide clear, actionable solutions. - Use inclusive terminology. - Maintain a helpful, solution-focused tone. - Include relevant troubleshooting steps where applicable. - Follow standard customer service best practices. Each response should be a single id from the following list: cancel_ticket,customer_service,pay,report_payment_issue Here are the explanations of the responses: cancel_ticket means that the customer wants to cancel the ticket. customer_service means that customer wants to talk to customer service. pay means that the customer wants to pay the bill. report_payment_issue means that the customer is facing payment issues and wants to be forwarded to the billing department to resolve the issue. - In Seeds Instructions, define seed topics to diversify the generated dataset.

Cancellation & Refunds Event inquiries Billing Inquires General Inquires - In Entries Per Seed, specify

5as the number of entries to generate for each seed defined in Seeds Instructions. - Under Parameters, adjust the following model parameters:

- Temperature: Set to

1.0to allow the LLM to generate diverse synthetic data. - Top K: Set to

100to explore a wide range of possible solutions. - Top P: Set to

1.0for broader exploration of outputs. - Max Tokens. Set to

2048or adjust based on the size of the generated text. For problems with larger generated text, consider increasing Max Tokens.
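To make the roles of Temperature, Top K, and Top P more concrete, the following toy sketch applies the three filters to a small, made-up set of token scores. It illustrates the general sampling technique only; how the selected model applies these parameters internally is not described here, and the function name and the scores are assumptions for illustration.

```python
import math


def filter_distribution(logits, temperature=1.0, top_k=100, top_p=1.0):
    """Return {token: probability} after temperature, top-k, and top-p filtering."""
    # Temperature rescales the scores: values below 1.0 sharpen the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    # Softmax, shifted by the maximum score for numerical stability.
    peak = max(scaled.values())
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    probs = sorted(
        ((tok, e / total) for tok, e in exps.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    # Top K keeps only the k most likely tokens.
    probs = probs[:top_k]
    # Top P keeps the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in probs:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    # Renormalize the surviving tokens so that they sum to 1.
    norm = sum(p for _, p in kept)
    return {tok: p / norm for tok, p in kept}


# Made-up scores for four candidate next tokens (illustration only).
toy_logits = {"pay": 2.0, "cancel_ticket": 1.0, "customer_service": 0.5, "refund": -1.0}

wide = filter_distribution(toy_logits, temperature=1.0, top_k=100, top_p=1.0)
narrow = filter_distribution(toy_logits, temperature=0.5, top_k=2, top_p=0.9)
print(sorted(wide), sorted(narrow))
```

With the permissive values used in this walkthrough, every candidate token stays available, which is what allows varied output. The stricter call keeps only the two most likely tokens.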

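The part of the example prompt that lists the allowed response ids and explains them has to stay consistent: every id in the list needs an explanation. One way to keep the two in sync is to generate that part of the prompt from a single mapping, as in the sketch below. The helper name `build_label_section` is hypothetical, and the resulting text would still be pasted into the Prompt field by hand.

```python
# Label ids and their meanings, taken from the example prompt.
LABELS = {
    "cancel_ticket": "the customer wants to cancel the ticket",
    "customer_service": "the customer wants to talk to customer service",
    "pay": "the customer wants to pay the bill",
    "report_payment_issue": (
        "the customer is facing payment issues and wants to be forwarded "
        "to the billing department to resolve the issue"
    ),
}


def build_label_section(labels):
    """Render the id list and one explanation line per id as prompt text."""
    lines = ["Each response should be a single id from the following list:"]
    lines.append(",".join(labels))
    lines.append("Here are the explanations of the responses:")
    for label, meaning in labels.items():
        lines.append(f"{label} means that {meaning}.")
    return "\n".join(lines)


label_section = build_label_section(LABELS)
print(label_section)
```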

- In the Examples tab, view the details of example prompts. Using examples, you can teach the LLM how to structure the prompts and completions of the generated data.

  - Under Actions, click Add Example and define prompts and completions so that the LLM knows the format of the data to be generated. Click Add Example to add the following prompts:

    Table 1.

    | Field name | Value |
    | --- | --- |
    | Example 1 - Prompt | I have received a message that I owe $300 and I was instructed to pay the bill online. I already paid this amount and I am wondering why I received this message. |
    | Example 1 - Completion | report_payment_issue |
    | Example 2 - Prompt | I have received two payment invoices and need to pay my bills using a credit card. |
    | Example 2 - Completion | pay |
    | Example 3 - Prompt | I will not be able to attend the presentation and would like to cancel my RSVP. |
    | Example 3 - Completion | cancel_ticket |
    | Example 4 - Prompt | I am having questions regarding the exact time, location, and requirements of the event and would like to talk to customer service. |
    | Example 4 - Completion | customer_service |

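Before entering examples, it can help to check that every completion is one of the ids named in the prompt and that each id is covered by at least one example. The sketch below does this for the four examples from Table 1. The dictionary keys `prompt` and `completion` and the helper name are assumptions chosen for illustration, not a format required by the Studio.

```python
ALLOWED_COMPLETIONS = {"cancel_ticket", "customer_service", "pay", "report_payment_issue"}

# The four examples from Table 1, using hypothetical field names.
examples = [
    {
        "prompt": "I have received a message that I owe $300 and I was instructed "
                  "to pay the bill online. I already paid this amount and I am "
                  "wondering why I received this message.",
        "completion": "report_payment_issue",
    },
    {
        "prompt": "I have received two payment invoices and need to pay my bills "
                  "using a credit card.",
        "completion": "pay",
    },
    {
        "prompt": "I will not be able to attend the presentation and would like "
                  "to cancel my RSVP.",
        "completion": "cancel_ticket",
    },
    {
        "prompt": "I am having questions regarding the exact time, location, and "
                  "requirements of the event and would like to talk to customer service.",
        "completion": "customer_service",
    },
]


def find_invalid_examples(items, allowed):
    """Return 1-based positions of examples with an empty prompt or unknown id."""
    return [
        position
        for position, item in enumerate(items, start=1)
        if not item["prompt"].strip() or item["completion"] not in allowed
    ]


invalid = find_invalid_examples(examples, ALLOWED_COMPLETIONS)
# Ids that no example demonstrates; ideally this set is empty.
missing = ALLOWED_COMPLETIONS - {item["completion"] for item in examples}
print(invalid, missing)
```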

- In the Summary tab, review all the data generation parameters to confirm that everything is as expected. Click Generate to initiate dataset generation. Alternatively, click Previous to return to previous tabs and make any necessary changes.

- In the Finish tab, view the status of the dataset creation. The generated Prompts and Completions will be displayed. The output dataset will be saved in the Project File System within the Cloudera environment.
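Once the dataset exists, a quick sanity check is to count how often each completion id occurs and to flag any id that is not in the allowed list. The sketch below assumes the generated pairs have already been loaded into a list of dictionaries with the hypothetical keys `prompt` and `completion`; the file format of the saved dataset is not described here, so loading is left out. The rows shown are hand-written placeholders, not real Studio output.

```python
from collections import Counter

ALLOWED_COMPLETIONS = {"cancel_ticket", "customer_service", "pay", "report_payment_issue"}

# Estimate only: four seed topics, each with 5 entries per seed.
EXPECTED_ROWS = 4 * 5


def summarize_completions(rows, allowed):
    """Count completions and list any ids that are not in the allowed set."""
    counts = Counter(row["completion"] for row in rows)
    unexpected = sorted(label for label in counts if label not in allowed)
    return counts, unexpected


# Hand-written placeholder rows for illustration; not generated data.
sample_rows = [
    {"prompt": "I would like to pay my invoice with a credit card.", "completion": "pay"},
    {"prompt": "Please cancel my ticket for the event.", "completion": "cancel_ticket"},
    {"prompt": "I want my money back.", "completion": "refund"},  # not an allowed id
]

counts, unexpected = summarize_completions(sample_rows, ALLOWED_COMPLETIONS)
print(f"{len(sample_rows)} rows loaded, about {EXPECTED_ROWS} expected")
print(dict(counts), unexpected)
```

An unexpected id usually suggests that the prompt or the examples leave room for the model to invent its own keywords, in which case returning to the Prompt or Examples tab and tightening the wording is a reasonable next step.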