Set Up Data Lake Access

Cloudera AI can access data tables stored in an AWS or Microsoft Azure Data Lake. As a Cloudera AI Admin, follow this procedure to set up the necessary permissions.

The instructions apply to Data Lakes on both AWS and Microsoft Azure. Follow the instructions that apply to your environment.

Cloud Provider Setup

Make sure the prerequisites for AWS or Azure are satisfied (see the Related Topics for AWS environments and Azure environments). Then, create a Cloudera environment as follows.

- For environment logs, create an S3 bucket or ADLS Gen2 container.

- For environment storage, create an S3 bucket or ADLS Gen2 container.

- For AWS, create AWS policies for each S3 bucket, and create IAM roles (simple and instance profiles) for these policies.

- For Azure, create managed identities for each of the personas, and create roles to map the identities to the ADLS permissions.

For detailed information on S3 or ADLS, see Related information.
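As an illustration of the per-bucket AWS policies mentioned above, the following Python sketch builds a minimal IAM policy document for a single S3 bucket. The bucket name `example-env-logs`, the `logs` prefix, and the chosen set of actions are assumptions made for illustration only. The policies that Cloudera actually requires are described in the AWS environment prerequisites.

```python
import json


def build_bucket_policy(bucket: str, prefix: str = "") -> dict:
    """Return a minimal IAM policy document granting object access to one bucket.

    The action list is an illustrative assumption, not Cloudera's official policy.
    """
    cleaned = prefix.strip("/")
    object_path = f"{cleaned}/*" if cleaned else "*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Object-level actions apply to keys under the prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{object_path}",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and prefix, used only to show the output shape.
    print(json.dumps(build_bucket_policy("example-env-logs", "logs"), indent=2))
```

Generating one policy per bucket in this way keeps the logs bucket and the storage bucket under separate, narrowly scoped roles.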

Environment Setup

In Cloudera, set up paths for logs and native data access to the S3 bucket or ADLS Gen2 container.

In the Environment Creation wizard, set the following:

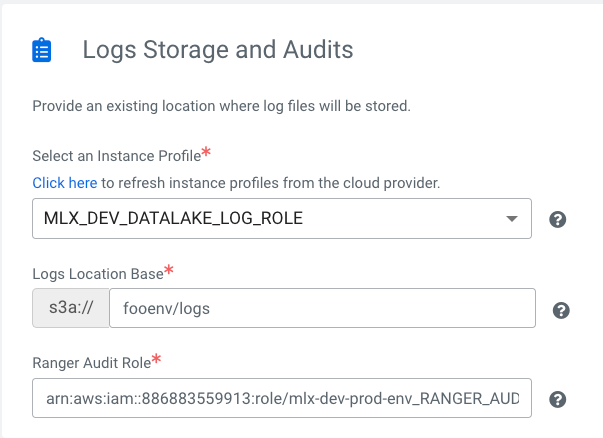

Logs Storage and Audits

- Instance Profile - The IAM role or Azure identity that is attached to the master node of the Data Lake cluster. The Instance Profile enables unauthenticated access to the S3 bucket or ADLS container for logs.

- Logs Location Base - The location in S3 or ADLS where environment logs are saved.

- Ranger Audit Role - The IAM role or Azure identity that has S3 or ADLS access to write Ranger audit events. Because Ranger uses Hadoop authentication, it accesses the S3 bucket or ADLS container through IDBroker rather than through Instance Profiles or Azure identities directly.
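Before entering a location base in the wizard, it can help to check that the value is well formed. The sketch below is a hypothetical helper, not part of any Cloudera tool. It assumes that S3 locations are written as `s3a://bucket/path` and ADLS Gen2 locations as `abfs://container@account.dfs.core.windows.net/path`; confirm the expected form for your environment in the related AWS and Azure topics.

```python
from urllib.parse import urlparse


def check_location_base(location: str) -> str:
    """Return "aws" or "azure" for a well-formed location base, else raise ValueError.

    The accepted URI forms are assumptions made for this sketch.
    """
    parsed = urlparse(location)
    # Assumed S3 form: s3a://<bucket>/<path>
    if parsed.scheme == "s3a" and parsed.netloc:
        return "aws"
    # Assumed ADLS Gen2 form: abfs://<container>@<account>.dfs.core.windows.net/<path>
    if parsed.scheme == "abfs" and "@" in parsed.netloc:
        container, _, account = parsed.netloc.partition("@")
        if container and account.endswith(".dfs.core.windows.net"):
            return "azure"
    raise ValueError(f"Unrecognized location base: {location!r}")


if __name__ == "__main__":
    # Hypothetical locations, used only for illustration.
    print(check_location_base("s3a://example-env-logs/logs"))
    print(check_location_base("abfs://logs@exampleaccount.dfs.core.windows.net/env"))
```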

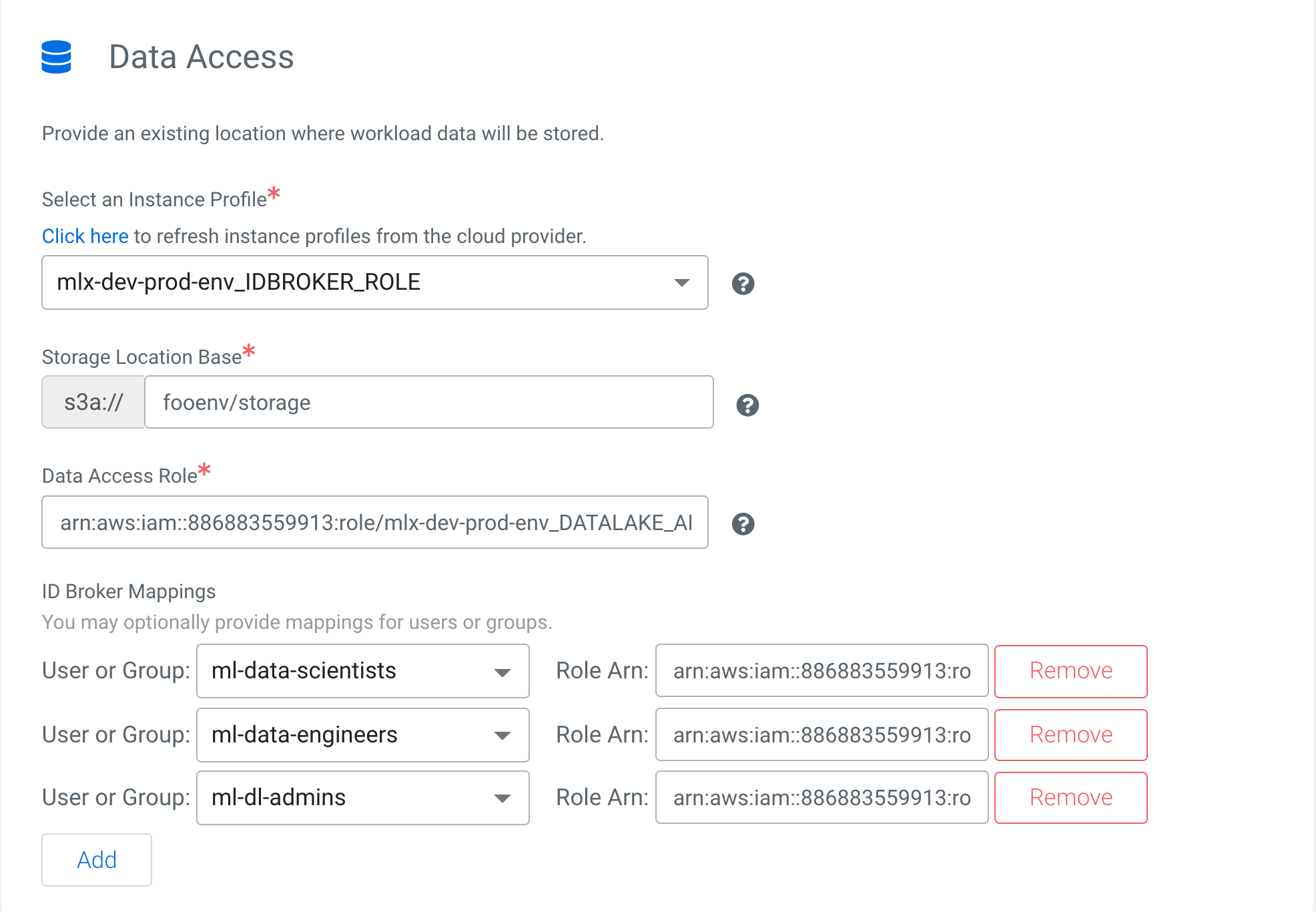

Data Access

- Instance Profile - The IAM role or Azure identity that is attached to the IDBroker node of the Data Lake cluster. IDBroker uses this profile to assume roles on behalf of users and get temporary credentials to access S3 buckets or ADLS containers.

- Storage Location Base - The S3 or ADLS location where data pertaining to the environment is saved.

- Data Access Role - The IAM role or Azure identity that has access to read or write environment data. For example, Hive creates external tables by default in Cloudera environments: the metadata is stored in the Hive Metastore (HMS) running in the Data Lake, and the data itself is stored in S3 or ADLS. Because Hive uses Hadoop authentication, it accesses S3 or ADLS through IDBroker rather than through Instance Profiles or Azure identities, and it uses the Data Access Role for storage access.

- ID Broker Mappings - These specify the mappings from Cloudera users or groups to the AWS IAM roles or Azure roles that have appropriate S3 or ADLS access. This setting enables IDBroker to get appropriate S3 or ADLS credentials for users based on the defined role mappings.
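Conceptually, the ID Broker Mappings act as a lookup from a Cloudera user or group to a cloud role. The following Python sketch models that lookup only. The user name, group name, role ARNs, and the rule that a direct user mapping wins over a group mapping are all assumptions made for illustration. The real IDBroker additionally exchanges the resolved role for temporary cloud credentials, which is not shown here.

```python
from typing import Dict, List, Optional

# Hypothetical mappings; the account ID and role names are placeholders.
USER_ROLES: Dict[str, str] = {
    "alice": "arn:aws:iam::123456789012:role/example-alice-role",
}
GROUP_ROLES: Dict[str, str] = {
    "ml-data-scientists": "arn:aws:iam::123456789012:role/example-datasci-role",
}


def resolve_role(user: str, groups: List[str]) -> Optional[str]:
    """Pick a cloud role for a user.

    Assumed order for this sketch: a direct user mapping first,
    then the first group that has a mapping, otherwise None.
    """
    if user in USER_ROLES:
        return USER_ROLES[user]
    for group in groups:
        if group in GROUP_ROLES:
            return GROUP_ROLES[group]
    return None


if __name__ == "__main__":
    print(resolve_role("bob", ["ml-data-scientists"]))
```

A user with no matching entry resolves to `None`, which corresponds to a user for whom IDBroker has no role to assume.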

This completes installation of the environment.

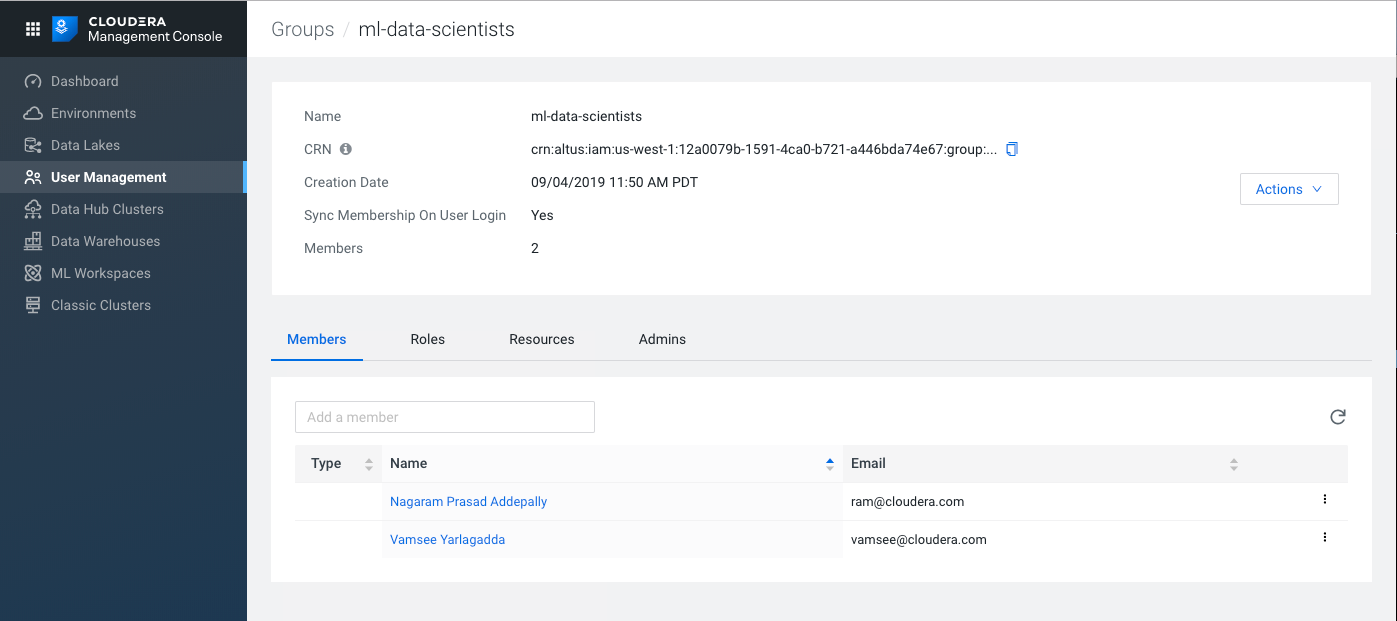

User Group Mappings

In Cloudera, you can assign users to groups to simplify permissions management. For example, you could create a group called ml-data-scientists, and assign two individual users to it, as shown here.

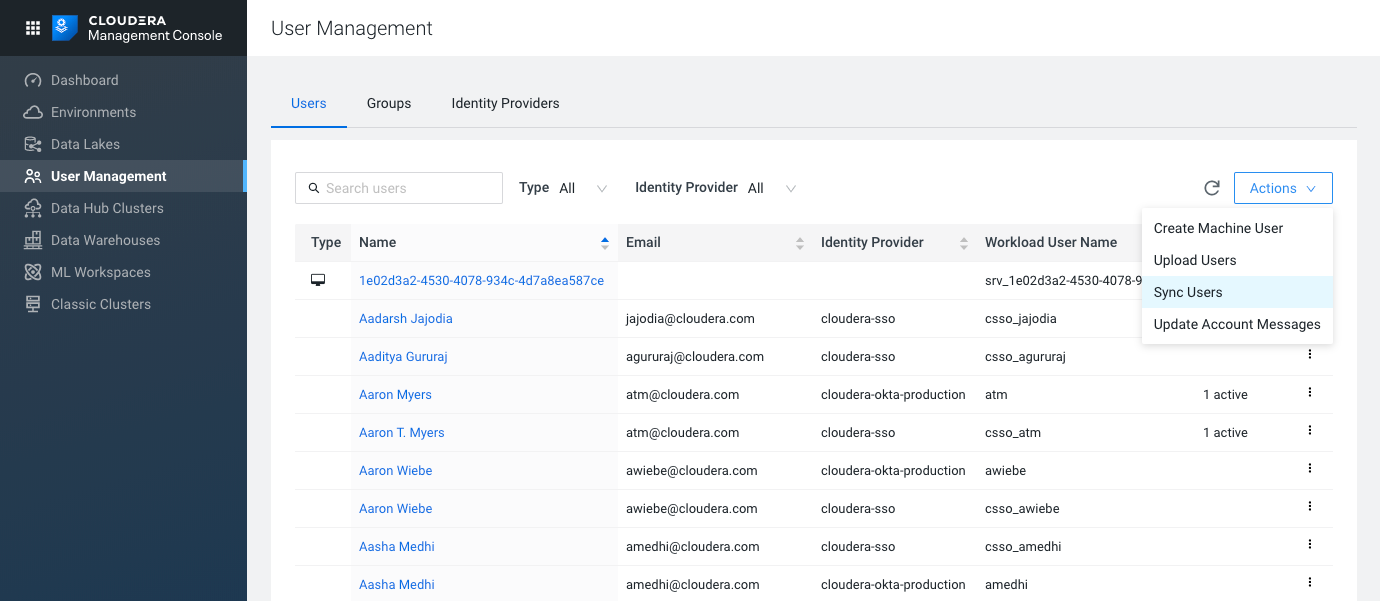

- Sync users

Whenever you make changes to user and group mappings, make sure to sync the mappings with the authentication layer. In User Management > Actions, click Sync Users, and select the environment.

IDBroker

IDBroker allows an authenticated and authorized user to exchange a set of credentials or a token for cloud vendor access tokens. To view or update the IDBroker mappings, go to Environments > Manage Access and click the IDBroker Mappings tab. Click Edit to edit or add mappings. When finished, synchronize the mappings to push the settings from Cloudera to the IDBroker instance running inside the Data Lake of the environment.

At this point, Cloudera resources can access the AWS S3 buckets or Azure ADLS storage.

Ranger

To get admin access to Ranger, users need the EnvironmentAdmin role, and that role must be synced with the environment.

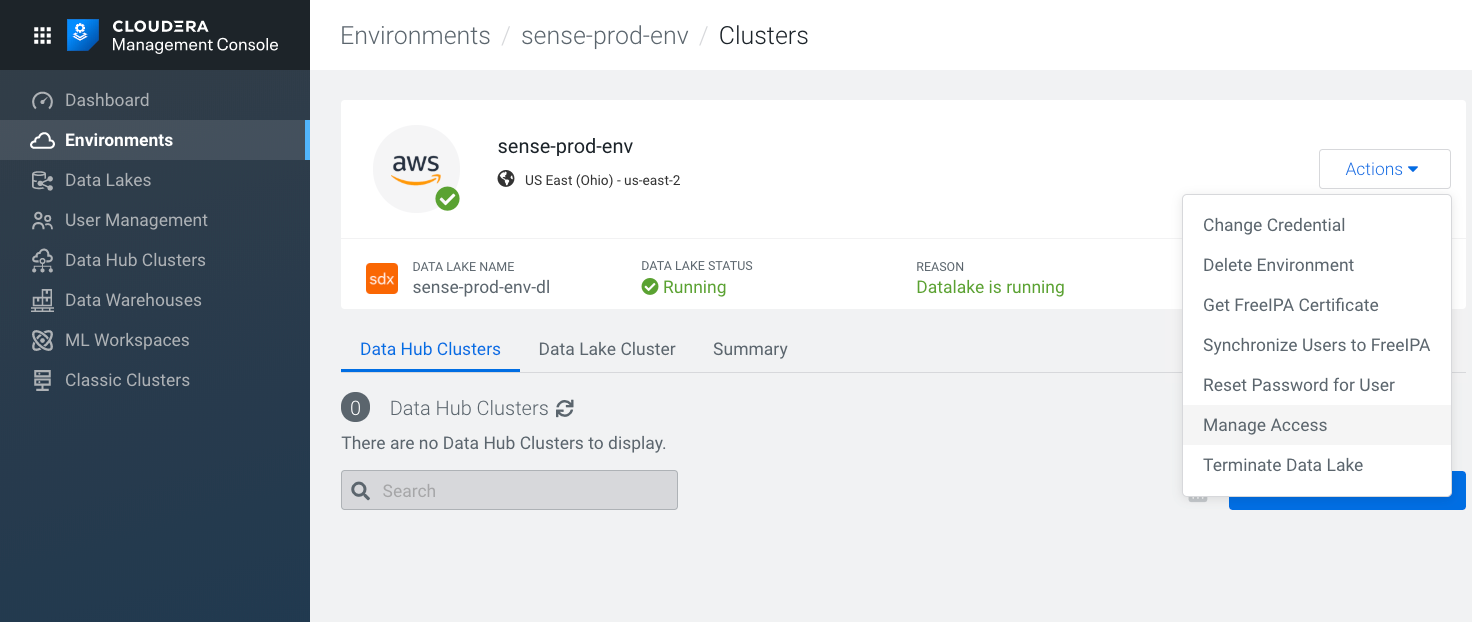

- Click Environments > Env > Actions > Manage Access > Add User.

- Select the EnvironmentAdmin resource role.

- Click Update Roles.

- On the Environments page for the environment, in Actions, select Synchronize Users to FreeIPA.

Update permissions in Ranger

- In Environments > Env > Data Lake Cluster, click Ranger.

- Select the Hadoop SQL service, and check that the users and groups have sufficient permissions to access databases, tables, columns, and URLs.

For example, a user can be part of these policies:

- all - database, table, column

- all - url

This completes all configuration needed for Cloudera AI to communicate with the Data Lake.

Cloudera AI User Setup

Now, Cloudera AI is able to communicate with the Data Lake. There are two steps to get the user ready to work.

- In Environments > Environment name > Actions > Manage Access > Add user, the Admin selects the MLUser resource role for the user.

- The user logs in to the workbench: in Cloudera AI Workbench > Workbench name, the user clicks Launch Workbench.

The user can now access the workbench.