Use case for Fine Tuning Studio - Event ticketing support

To see how simple it is to build and deploy a production-ready application with Fine Tuning Studio, walk through a complete example: fine-tuning a customer support agent for event ticketing.

The objective is to refine a compact, cost-efficient model capable of interpreting customer input and identifying the appropriate 'action' (such as an API call) for the downstream system to execute. The aim is to optimize a model that is lightweight enough to run on a consumer GPU while delivering accuracy comparable to that of a larger model.

- In the Cloudera console, click the Cloudera AI tile.

The Cloudera AI Workbenches page displays.

- Click the name of the workbench.

The workbench Home page displays.

- Click AI Studios and select Fine Tuning Studio.

The Fine Tuning Studio page is displayed.

- On the Fine Tuning Studio main page, select Resources in the top navigation bar, and click Import Datasets.

The Import Datasets page is displayed.

- Select Import Huggingface Dataset from the tab options and import the bitext/Bitext-events-ticketing-llm-chatbot-training-dataset dataset available on Hugging Face.

This dataset consists of paired examples of customer inputs and their corresponding intent or action outputs, covering a wide range of scenarios.

- Select Resources in the top navigation bar for Fine Tuning Studio and click Import Base Models.

The Import Base Models page is displayed.

- Select Import Huggingface Models from the tab options and import the bigscience/bloom-1b1 model from Hugging Face.

For details on how to import a model, see Importing models from Hugging Face (Technical Preview).

This model is imported to minimize the inference footprint. The goal is to train an adapter for the base model, improving its predictive performance on the specific dataset.

- Select Resources in the top navigation bar for Fine Tuning Studio and click Create Prompts.

The Create prompts page is displayed.

- Create the prompt to be used for both training and inference.

This prompt will provide the model with additional context for making accurate selections:

- Select Resources in the top navigation bar for Fine Tuning Studio and click Create Prompts.

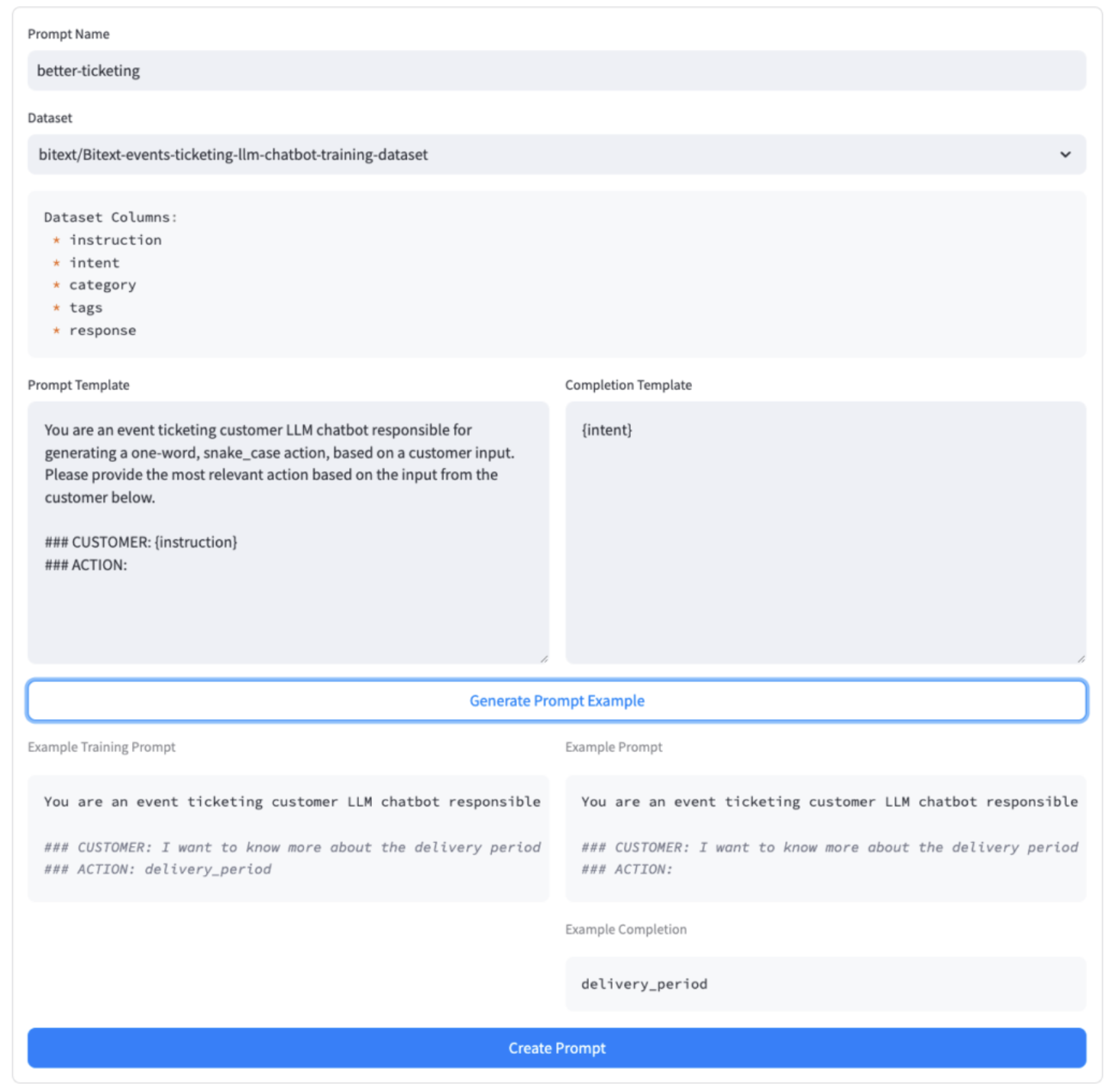

- Name the prompt better-ticketing.

- Use the bitext dataset as the base for its design.

- Build a prompt template based on the features available in the dataset, using the Create Prompts page.

- Once the prompt is created, test it against the dataset to ensure it functions as expected.

- After verifying that everything works correctly, select the Create Prompt button to activate the prompt for use across the tool.

Here is an example of the prompt template, which uses the instruction and intent fields from the dataset:

Prompt template:

You are an event ticketing customer LLM chatbot responsible for generating a one-word, snake_case action, based on a customer input. Please provide the most relevant action based on the input from the customer below. ### CUSTOMER: {instruction} ### ACTION:

Completion template:

{intent}

The completed form:

Figure 1. Completed form for Create prompt action
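To make the placeholders concrete, the following minimal Python sketch shows how one dataset row could be substituted into the prompt and completion templates. It only illustrates plain string substitution and is not how Fine Tuning Studio renders prompts internally. The helper name `render_example` is hypothetical, and the sample row reuses the refund request quoted later in this walkthrough.

```python
# Templates copied from the example above, kept on a single line.
PROMPT_TEMPLATE = (
    "You are an event ticketing customer LLM chatbot responsible for "
    "generating a one-word, snake_case action, based on a customer input. "
    "Please provide the most relevant action based on the input from the "
    "customer below. ### CUSTOMER: {instruction} ### ACTION:"
)
COMPLETION_TEMPLATE = "{intent}"


def render_example(row: dict) -> tuple[str, str]:
    """Fill both templates from one dataset row (hypothetical helper).

    The row is a dict of column name -> value; columns that the
    templates do not reference are ignored by str.format.
    """
    return PROMPT_TEMPLATE.format(**row), COMPLETION_TEMPLATE.format(**row)


# Sample row using the customer input and action quoted in this walkthrough.
row = {
    "instruction": "I have to get a refund, I need assistance.",
    "intent": "get_refund",
}
prompt, completion = render_example(row)
print(prompt)
print(completion)
```

During training, the rendered prompt is the model input and the rendered completion is the target. At inference time only the prompt is supplied, and the model is expected to generate the action after `### ACTION:`.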

- Select Experiments in the top navigation bar for Fine Tuning Studio and click Train a New Adapter.

With the dataset, model, and prompt selected, train a new adapter for the bloom-1b1 model to improve its ability to handle customer requests accurately.

Fill out the required fields: the name of the adapter, the dataset for training, and the training prompt to be used.

For this example, two L40S GPUs were available for training, so the Multi-Node training type was selected. The model was trained for 2 epochs using 90% of the dataset, with the remaining 10% reserved for evaluation and testing.
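Fine Tuning Studio performs the dataset split itself. The following Python sketch only illustrates what a 90/10 split means, under the assumption of a simple seeded shuffle. The function name `train_eval_split`, the seed, and the placeholder rows are all hypothetical and do not reflect the tool's implementation.

```python
import random


def train_eval_split(rows, train_fraction=0.9, seed=42):
    """Shuffle a copy of rows and split it into (train, eval) lists.

    Hypothetical helper: a fixed seed keeps the split reproducible,
    so the same held-out rows are used for every evaluation run.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Placeholder rows for illustration only, not real dataset content.
rows = [{"id": i} for i in range(1000)]
train_rows, eval_rows = train_eval_split(rows)
print(len(train_rows), len(eval_rows))
```

Holding out rows that the adapter never sees during training is what makes the later evaluation a fair measure of how well it generalizes.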

- Select Experiments in the top navigation bar for Fine Tuning Studio and click Monitor Training jobs.

This page allows you to track the status of the training job and provides a deep link to the Cloudera AI Job for viewing log outputs. Using two L40S GPUs, training for 2 epochs on the bitext dataset was completed in 10 minutes.

- Select Experiments in the top navigation bar for Fine Tuning Studio and click Local Adapter Comparison to check the performance of the adapter.

After the training job is complete, it is important to "spot check" the adapter's performance to ensure it was trained successfully.

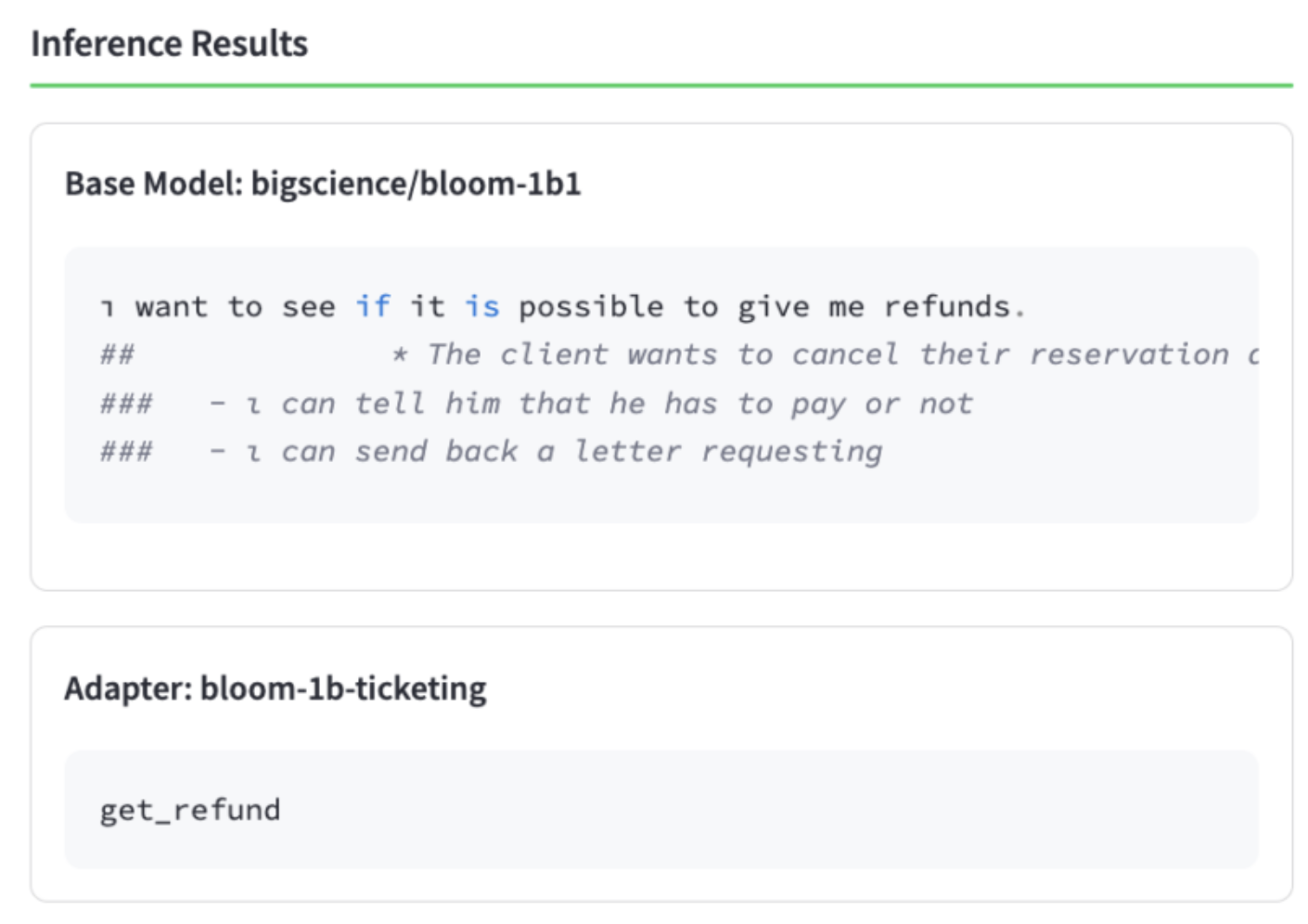

For example, consider a simple customer input taken directly from the bitext dataset: "I have to get a refund, I need assistance." The desired output action is get_refund. Comparing the output of the base model with that of the trained adapter shows that training significantly improved performance on this input.

Figure 2. Inference Results

- Select Experiments in the top navigation bar for Fine Tuning Studio and click Run MLFlow Evaluation.

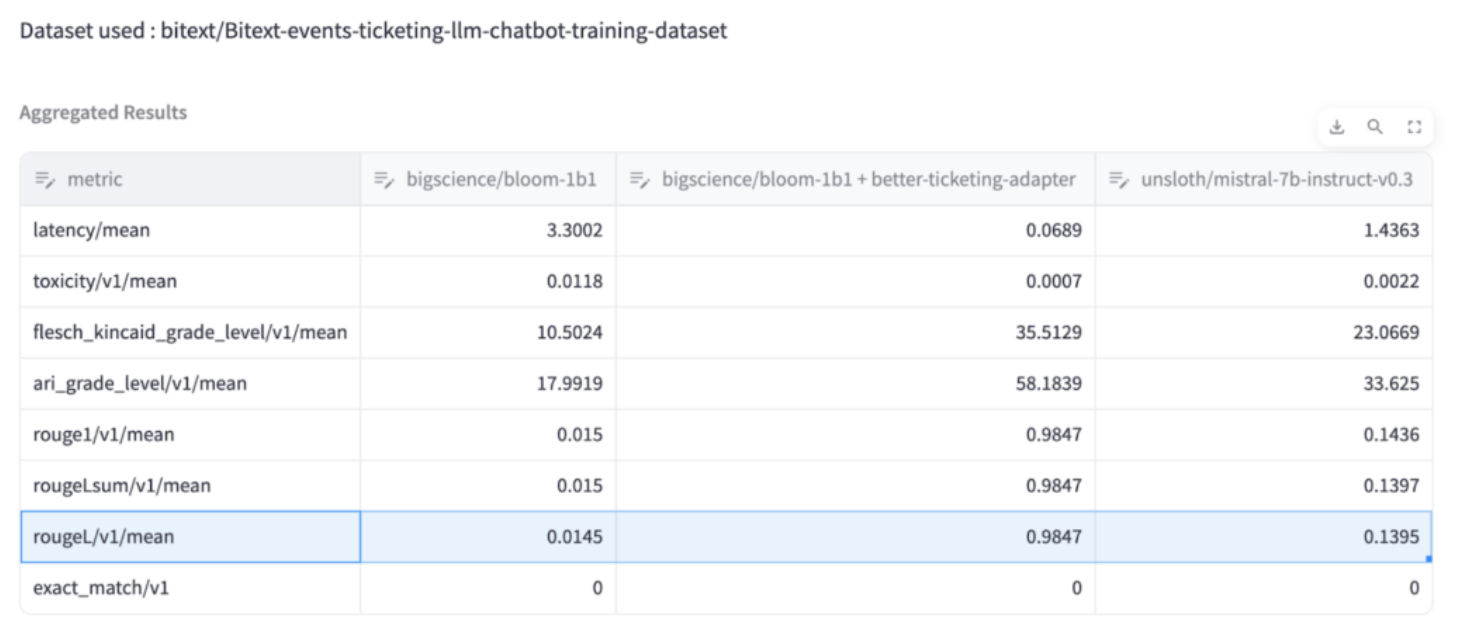

You can evaluate the performance against the "test" portion of the dataset. For this example, three configurations are compared: 1) the bigscience/bloom-1b1 base model, 2) the same base model with the newly trained better-ticketing adapter activated, and 3) a larger mistral-7b-instruct model.

As demonstrated, the rougeL metric (which scores the longest common subsequence shared by the prediction and the reference, making it a softer variant of exact match) for the 1B model adapter is significantly higher than the same metric for the 7B model that was not fine-tuned. This shows that an adapter trained for a smaller, cost-effective model can outperform a much larger model on this task.

While the larger 7B model may excel at generalized tasks, it lacks fine-tuning on the specific 'actions' the model can take based on customer input. As a result, the non-fine-tuned 7B model would not perform as effectively as the fine-tuned 1B model in a production environment.
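To give a feel for how rougeL differs from exact match, here is a minimal pure-Python sketch of a ROUGE-L F-measure based on the longest common subsequence (LCS) of tokens. It is an illustration, not the implementation used by the evaluation job. The tokenization (lowercasing and splitting on non-alphanumeric characters) is an assumption, and the predictions "refund" and "buy_ticket" are hypothetical strings chosen to show partial and zero credit.

```python
import re


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters (an assumption)."""
    return [t for t in re.split(r"[^a-z0-9]+", text.lower()) if t]


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, prediction):
    """ROUGE-L F-measure: harmonic mean of LCS precision and recall."""
    ref, pred = tokenize(reference), tokenize(prediction)
    if not ref or not pred:
        return 0.0
    lcs = lcs_length(ref, pred)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


# The reference action comes from the walkthrough; predictions are hypothetical.
print(rouge_l_f1("get_refund", "get_refund"))  # identical action
print(rouge_l_f1("get_refund", "refund"))      # partially overlapping action
print(rouge_l_f1("get_refund", "buy_ticket"))  # unrelated action
```

Unlike exact match, which would score the second prediction as a plain miss, an LCS-based score gives partial credit for overlapping tokens, so it separates near misses from unrelated outputs.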