Chapter 4. Schema Registry Overview

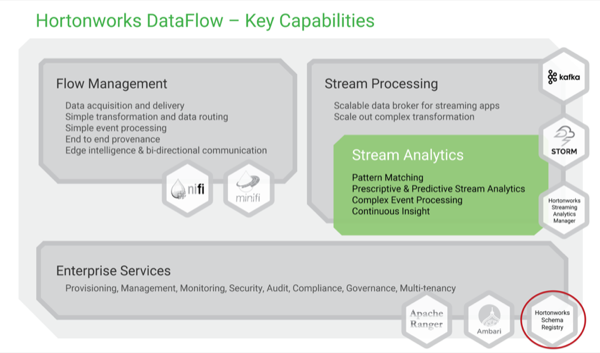

The Hortonworks DataFlow Platform (HDF) provides you with flow management, stream processing, and enterprise services so that you can collect, curate, analyze, and act on data in motion across on-premises data centers and cloud environments.

Hortonworks Schema Registry is part of the enterprise services that power the HDF platform.

Schema Registry provides a shared repository of schemas that allows applications and HDF components such as Apache NiFi, Apache Storm, Apache Kafka, and Streaming Analytics Manager to flexibly interact with each other.

Applications built using HDF often need a way to share metadata across three dimensions:

- Data format
- Schema
- Semantics or meaning of the data

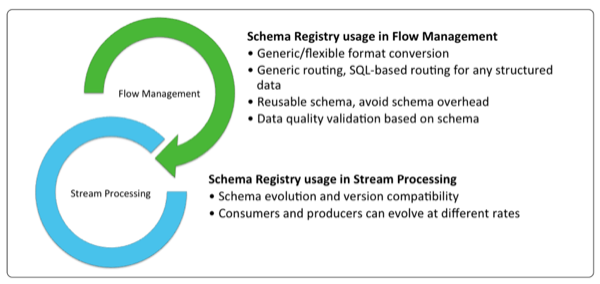

Schema Registry is designed to address the challenges of managing and sharing schemas among the components of HDF by providing the following:

- A centralized registry: Provides a reusable schema to avoid attaching a new schema to every piece of data
- Version management: Defines a relationship between schema versions so that consumers and producers can evolve at different rates
- Schema validation: Enables generic format conversion, generic routing, and data quality
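
The three capabilities above can be illustrated with a toy, in-memory sketch. This is not the Hortonworks Schema Registry API: the `ToyRegistry` class, its method names, the `truck_events` schema, and the compatibility rule (added fields must carry a default, shared fields keep their type) are all assumptions made for illustration only.

```python
# Hypothetical mapping from schema type names to Python types (illustration only).
PY_TYPES = {"string": str, "long": int, "double": float}


class ToyRegistry:
    """Toy in-memory registry; NOT the real Schema Registry client or server."""

    def __init__(self):
        # schema name -> list of versions; each version maps
        # field name -> (type name, has_default)
        self._versions = {}

    def register(self, name, fields):
        """Store a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        if history and not self._backward_compatible(history[-1], fields):
            raise ValueError(f"{name}: new version is not backward compatible")
        history.append(dict(fields))
        return len(history)

    def get(self, name, version):
        return self._versions[name][version - 1]

    @staticmethod
    def _backward_compatible(old, new):
        # Assumed rule: a reader on `new` can still read data written with `old`
        # if shared fields keep their type and every added field has a default.
        for fname, (ftype, has_default) in new.items():
            if fname in old:
                if old[fname][0] != ftype:
                    return False
            elif not has_default:
                return False
        return True

    def validate(self, name, version, record):
        """Check a record against one registered schema version."""
        schema = self.get(name, version)
        for fname, (ftype, has_default) in schema.items():
            if fname not in record:
                if not has_default:
                    return False
            elif not isinstance(record[fname], PY_TYPES[ftype]):
                return False
        return True


registry = ToyRegistry()

# Centralized registry: the schema is stored once and referenced by name and version.
v1 = registry.register("truck_events", {
    "driverId": ("long", False),
    "eventType": ("string", False),
})

# Version management: a compatible second version adds a field with a default.
v2 = registry.register("truck_events", {
    "driverId": ("long", False),
    "eventType": ("string", False),
    "speed": ("double", True),
})

# The message carries only a schema reference, not the schema itself.
message = {"schema": ("truck_events", v1),
           "payload": {"driverId": 11, "eventType": "Normal"}}

# Schema validation: any component can look up the schema and check the payload.
is_valid = registry.validate(*message["schema"], message["payload"])
```

In this sketch a producer still writing version 1 records and a consumer already reading version 2 can coexist, because the only added field has a default. A real registry would typically offer several compatibility policies rather than the single rule assumed here.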