Streaming Analytics Manager Personas

Four main modules within Streaming Analytics Manager offer services to four different personas in an organization:

| User Persona | Module | Module Features and Functionality |
|---|---|---|
| IT Engineer, Operations Engineer, Platform Engineer, Platform Operator | Stream Management | |
| Application Developer | Stream Builder | |
| Business Analyst, Data Analyst | Stream Insight Superset | |
| SDK Developer | Unified Streaming API | |

The following subsections describe responsibilities for each persona. For additional information, see the following chapters in this guide:

| Persona | Chapter Reference |
|---|---|
| IT Engineer, Operations Engineer, Platform Engineer, Platform Operator | Installing and Configuring Streaming Analytics Manager; Managing Stream Apps |
| Application Developer | Running the Sample App; Building an End-to-End Stream App |
| Business Analyst, Data Analyst | Creating Visualizations: Insight Slices |
| SDK Developer | Adding Custom Builder Components |

Platform Operator Persona

A platform operator typically manages the Streaming Analytics Manager platform and provisions various services and resources for the application development team. Common responsibilities of a platform operator include the following:

Installing and managing the Streaming Analytics Manager application platform.

Provisioning and providing access to services (for example, big data services like Kafka, Storm, HDFS, and HBase) for use by the development team when building stream apps.

Provisioning and providing access to environments such as development, testing, and production, for use by the development team when deploying stream apps.

Services, Service Pools and Environments

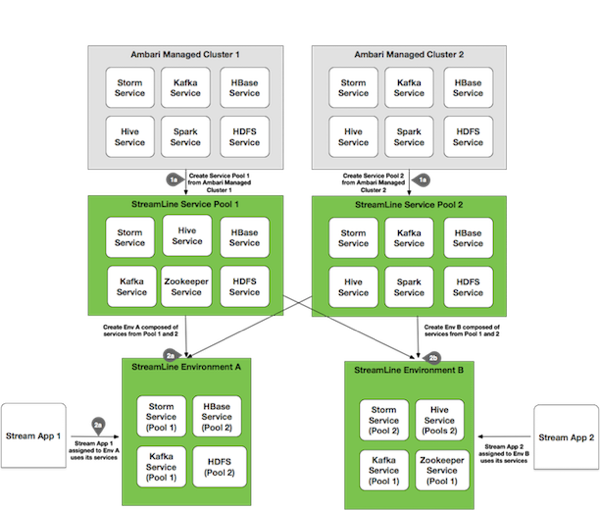

To perform these responsibilities, a platform operator works with three important abstractions in Streaming Analytics Manager:

Service is an entity that an application developer works with to build stream apps.

Examples of services include a Storm cluster that the stream app is deployed to, a Kafka cluster that the stream app uses to create streams, or an HBase cluster that the stream app writes to.

Service Pool is a set of services associated with an Ambari-managed cluster.

Environment is a named entity that represents a set of services chosen from different service pools.

A stream app is assigned to an environment, and the app can use only the services associated with that environment.
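The relationships among these three abstractions can be sketched in a few lines of Python. This is a minimal, hypothetical model, not Streaming Analytics Manager's own API (the platform operator manages these entities through the product itself): the class names, the cluster names, and the rule that an environment holds at most one service of each type are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Service:
    # Hypothetical model: one service type (e.g. "KAFKA") in one cluster.
    name: str
    cluster: str


@dataclass
class ServicePool:
    # Hypothetical model: the services of a single Ambari-managed cluster.
    cluster: str
    services: dict[str, Service] = field(default_factory=dict)

    def add(self, name: str) -> Service:
        service = Service(name, self.cluster)
        self.services[name] = service
        return service


@dataclass
class Environment:
    # Hypothetical model: a named selection of services drawn from one or more pools.
    name: str
    services: dict[str, Service] = field(default_factory=dict)

    def include(self, pool: ServicePool, service_name: str) -> None:
        # Assumption: at most one service of each type per environment.
        self.services[service_name] = pool.services[service_name]

    def resolve(self, service_name: str) -> Service:
        # A stream app may use only the services associated with its environment.
        if service_name not in self.services:
            raise LookupError(f"{service_name} is not available in environment {self.name!r}")
        return self.services[service_name]


# Hypothetical clusters and environment, for illustration only.
storm_pool = ServicePool("storm-cluster")
storm_pool.add("STORM")
data_pool = ServicePool("data-cluster")
data_pool.add("KAFKA")
data_pool.add("HBASE")

dev = Environment("dev")
dev.include(storm_pool, "STORM")
dev.include(data_pool, "KAFKA")

print(dev.resolve("STORM"))  # Service(name='STORM', cluster='storm-cluster')
try:
    dev.resolve("HBASE")  # HBase exists in data-cluster but was not added to "dev"
except LookupError as err:
    print(err)
```

Keying the environment by service name is what lets an app ask for "KAFKA" without knowing which cluster supplies it.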

The diagram at the start of this section illustrates these constructs.

The Service, Service Pool, and Environment abstractions provide the following benefits:

Simplicity and ease of use: An application developer can use the Service abstraction without focusing on configuration details. For example, to deploy a stream app to a Storm cluster, the developer simply selects the Storm service from the environment associated with the app, and the service abstracts all the configuration details and complexities.

Ease of propagating a stream app between environments: With Service as an abstraction, it is easy for the stream operator or application developer to move a stream app from one environment to another. They simply export the stream app and import it into a different environment.
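Why the Service abstraction makes moving an app between environments easy can be shown with a small sketch. It assumes, hypothetically, that an exported app records only the names of the services each component needs, and that importing it binds those names to the target environment's services. The JSON layout, component name, and broker addresses are invented for illustration and are not Streaming Analytics Manager's actual export format.

```python
import json


def export_app(name: str, components: list) -> str:
    # Hypothetical export: each component names the service it needs ("KAFKA"),
    # but carries no cluster-specific configuration.
    return json.dumps({"name": name, "components": components})


def import_app(exported: str, environment: dict) -> dict:
    # Rebind every component to the matching service of the target environment.
    app = json.loads(exported)
    for component in app["components"]:
        service = component["service"]
        if service not in environment:
            raise LookupError(f"target environment has no {service} service")
        component["config"] = environment[service]
    return app


# Hypothetical environments: same service names, different clusters.
dev_env = {"KAFKA": {"brokers": "kafka.dev.example.com:6667"}}
prod_env = {"KAFKA": {"brokers": "kafka.prod.example.com:6667"}}

exported = export_app("truck-alerts", [{"component": "kafka-source", "service": "KAFKA"}])
print(import_app(exported, dev_env)["components"][0]["config"]["brokers"])   # kafka.dev.example.com:6667
print(import_app(exported, prod_env)["components"][0]["config"]["brokers"])  # kafka.prod.example.com:6667
```

Because the exported app never stores cluster details, the same export can be imported into any environment that offers the services it names.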

More Information

See Managing Stream Apps for more information about creating and managing the Streaming Analytics Manager environment.

Application Developer Persona

The application developer uses the Stream Builder component to design, implement, deploy, and debug stream apps in Streaming Analytics Manager.

The following subsections describe component building blocks and schema requirements.


Stream Builder Component Building Blocks

Stream Builder offers several building blocks for stream apps: sources, processors, sinks, and custom components.

Sources

Source builder components are used to create data streams. Streaming Analytics Manager supports the following sources:

Kafka

Azure Event Hub

HDFS

Processors

Processor builder components are used to manipulate events in the stream.

The following table lists processors that are available with Streaming Analytics Manager.

| Processor Name | Description |
|---|---|
| Join | |
| Rule | |
| Aggregate | |
| Projection | |
| Branch | |
| PMML | |

Sinks

Sink builder components are used to send events to other systems.

Streaming Analytics Manager supports the following sinks:

Kafka

Druid

HDFS

HBase

Hive

JDBC

OpenTSDB

Notification (out-of-the-box support for Kafka, plus the ability to add custom notifications)

Cassandra

Solr
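The division of labor among sources, processors, and sinks can be sketched as a chain of Python generators. This is only a conceptual model under stated assumptions: in Streaming Analytics Manager, stream apps are assembled in Stream Builder rather than written this way, and the filtering processor, field names, and speed threshold below are hypothetical.

```python
from typing import Callable, Iterable, Iterator


def source(records: Iterable[dict]) -> Iterator[dict]:
    # Role of a source (e.g. a Kafka topic): it creates the stream of events.
    yield from records


def processor(events: Iterable[dict], keep: Callable[[dict], bool]) -> Iterator[dict]:
    # Role of a processor: it manipulates events; this hypothetical one only filters them.
    return (event for event in events if keep(event))


def sink(events: Iterable[dict], target: list) -> None:
    # Role of a sink: it sends events to another system (a plain list stands in here).
    for event in events:
        target.append(event)


# Hypothetical events and threshold, for illustration only.
delivered = []
raw = [{"driverId": 1, "speed": 55}, {"driverId": 2, "speed": 92}]
sink(processor(source(raw), lambda e: e["speed"] > 80), delivered)
print(delivered)  # [{'driverId': 2, 'speed': 92}]
```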

Custom Components

For more information about developing custom components, see SDK Developer Persona.

Schema Requirements

Unlike Apache NiFi (the flow management service of the HDF platform), Streaming Analytics Manager requires a schema for stream apps. More specifically, every Stream Builder component requires a schema to function.

The primary data stream source is Kafka, which uses the HDF Schema Registry.

The Stream Builder component for Apache Kafka is integrated with the Schema Registry. When you configure a Kafka source and supply a Kafka topic, Streaming Analytics Manager calls the Schema Registry. Using the Kafka topic as the key, Streaming Analytics Manager retrieves the schema. This schema is then displayed on the tile component, and is passed to downstream components.
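The lookup-and-propagate flow can be sketched as follows. A plain dictionary stands in for the Schema Registry, and the topic name, field names, and the field-selecting downstream component are hypothetical; the sketch does not use the real Schema Registry client. It shows the two steps described above: the source retrieves its schema with the Kafka topic as the key, and a downstream component can be configured only against the schema it receives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    name: str
    data_type: str


# Hypothetical in-memory stand-in for the Schema Registry, keyed by Kafka topic name.
REGISTRY = {
    "truck_events": (
        Field("driverId", "INTEGER"),
        Field("speed", "INTEGER"),
        Field("eventType", "STRING"),
    ),
}


def kafka_source_schema(topic: str) -> tuple:
    # The Kafka source retrieves its output schema using the topic as the key.
    if topic not in REGISTRY:
        raise LookupError(f"no schema registered for topic {topic!r}")
    return REGISTRY[topic]


def downstream_schema(input_schema: tuple, keep: list) -> tuple:
    # Hypothetical downstream component that passes on a subset of the fields
    # it received; its configuration is checked against the incoming schema.
    by_name = {f.name: f for f in input_schema}
    unknown = [name for name in keep if name not in by_name]
    if unknown:
        raise ValueError(f"fields not in input schema: {unknown}")
    return tuple(by_name[name] for name in keep)


source_schema = kafka_source_schema("truck_events")
next_schema = downstream_schema(source_schema, ["driverId", "speed"])
print([f.name for f in next_schema])  # ['driverId', 'speed']
```

Without a schema from the source, the downstream component would have nothing to validate its field selection against, which is why every component in the app depends on one.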

Analyst Persona

A business analyst uses the Streaming Analytics Manager Stream Insight module to build time-series and real-time analytics dashboards, charts, and graphs that provide rich, customizable visualizations of data.

Stream Insight Key Concepts

The following table describes key concepts of the Stream Insight module.

| Stream Insight Concept | Description |
|---|---|
| Analytics Engine | |
| Insight Data Source | |
| Insight Slice | |
| Dashboard | |

A business analyst can choose from more than 30 visualization types to gather insights on streaming data.

More Information

See the Gallery of Superset Visualizations for visualization examples.

SDK Developer Persona

Streaming Analytics Manager supports the development of custom functionality through the use of its SDK.

More Information