In Hadoop version 1, MapReduce was responsible for both data processing and cluster resource management. In Apache Hadoop version 2, cluster resource management has been moved from MapReduce into YARN. This lets other application engines run on YARN and Hadoop, and it also improves the performance of MapReduce.

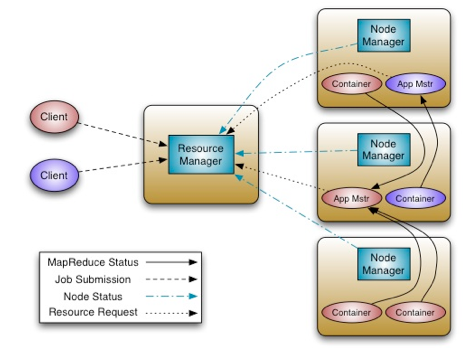

The fundamental idea of YARN is to split the two major responsibilities of the version 1 MapReduce Job Tracker and Task Tracker, scheduling jobs and scheduling tasks, into separate entities:

Resource Manager -- replaces the Job Tracker.

Application Master -- a new YARN component.

Node Manager -- replaces the Task Tracker.

Container running on a Node Manager -- replaces the MapReduce slots.

Resource Manager

In YARN, the Resource Manager is primarily a scheduler. It is strictly limited to arbitrating the available resources in the system among competing applications. It optimizes for cluster utilization (keeping all resources in use all the time) subject to constraints such as capacity guarantees, fairness, and SLAs. To support different policy constraints, the Resource Manager has a pluggable scheduler, so that different algorithms, such as capacity scheduling, can be used as necessary. The daemon runs as the “yarn” user.
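The pluggable-scheduler idea can be illustrated with a small toy model. This is only a sketch under stated assumptions, not YARN's scheduler API: `ToyResourceManager`, `fifo_policy`, `make_capacity_policy`, the queue names, and the memory figures are all hypothetical, and the capacity policy here is a strict per-queue cap rather than the behavior of YARN's actual capacity scheduler.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Request:
    app: str        # hypothetical application name
    queue: str      # hypothetical queue name
    memory_mb: int  # memory requested for one Container


# A policy receives the pending requests and the free memory,
# and returns the requests it decides to grant.
Policy = Callable[[List[Request], int], List[Request]]


def fifo_policy(pending: List[Request], free_mb: int) -> List[Request]:
    """Grant requests in arrival order until one does not fit."""
    granted = []
    for req in pending:
        if req.memory_mb > free_mb:
            break
        granted.append(req)
        free_mb -= req.memory_mb
    return granted


def make_capacity_policy(shares: Dict[str, float]) -> Policy:
    """Build a policy that caps each queue at its share of the free memory."""
    def policy(pending: List[Request], free_mb: int) -> List[Request]:
        budget = {queue: int(free_mb * share) for queue, share in shares.items()}
        granted = []
        for req in pending:
            if req.memory_mb <= budget.get(req.queue, 0):
                granted.append(req)
                budget[req.queue] -= req.memory_mb
        return granted
    return policy


class ToyResourceManager:
    """Arbitrates memory only; the scheduling policy is swapped in at construction."""

    def __init__(self, total_mb: int, policy: Policy):
        self.free_mb = total_mb
        self.policy = policy

    def schedule(self, pending: List[Request]) -> List[Request]:
        granted = self.policy(pending, self.free_mb)
        self.free_mb -= sum(req.memory_mb for req in granted)
        return granted


pending = [
    Request("etl", "prod", 4096),
    Request("adhoc", "dev", 4096),
    Request("scratch", "dev", 4096),
    Request("report", "prod", 4096),
]

fifo_rm = ToyResourceManager(12288, fifo_policy)
fifo_apps = [req.app for req in fifo_rm.schedule(pending)]

capacity_rm = ToyResourceManager(12288, make_capacity_policy({"prod": 0.75, "dev": 0.25}))
capacity_apps = [req.app for req in capacity_rm.schedule(pending)]

print(fifo_apps)      # ['etl', 'adhoc', 'scratch']
print(capacity_apps)  # ['etl', 'report']
```

With the same pending requests, the arrival-order policy lets the two `dev` requests crowd out `report`, while the capacity-style policy holds back memory for the `prod` queue. The Resource Manager class itself does not change between the two runs; only the policy does.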

Node Manager

The Node Manager is YARN’s per-node agent and takes care of the individual compute nodes in a Hadoop cluster. Its duties include staying in sync with the Resource Manager, life-cycle management of application Containers, monitoring the resource usage (memory, CPU) of individual Containers, monitoring node health, and managing logs and other auxiliary services that YARN applications can use. The Node Manager can launch Containers ranging from simple shell scripts to C, Java, or Python processes on Unix or Windows, or even full-fledged virtual machines (e.g., KVMs). The daemon runs as the “yarn” user.
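As a rough illustration of the per-node duties listed above, the following toy model tracks Containers, checks their memory usage, and builds a status report for the Resource Manager. It is a minimal sketch, not the Node Manager's real interface: `ToyNodeManager`, `ToyContainer`, the state names, and the report layout are hypothetical, and stopping a Container that exceeds its memory allocation is an assumed policy chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ToyContainer:
    container_id: str
    limit_mb: int           # memory granted to this Container
    used_mb: int = 0        # last observed memory usage
    state: str = "RUNNING"  # hypothetical state names


@dataclass
class ToyNodeManager:
    node_id: str
    healthy: bool = True
    containers: Dict[str, ToyContainer] = field(default_factory=dict)

    def launch(self, container_id: str, limit_mb: int) -> None:
        self.containers[container_id] = ToyContainer(container_id, limit_mb)

    def record_usage(self, container_id: str, used_mb: int) -> None:
        self.containers[container_id].used_mb = used_mb

    def monitor(self) -> None:
        """Stop any running Container that is over its allocation (assumed policy)."""
        for container in self.containers.values():
            if container.state == "RUNNING" and container.used_mb > container.limit_mb:
                container.state = "KILLED"

    def heartbeat(self) -> dict:
        """Build the status report this sketch would send to the Resource Manager."""
        self.monitor()
        return {
            "node": self.node_id,
            "healthy": self.healthy,
            "containers": {cid: c.state for cid, c in self.containers.items()},
        }


nm = ToyNodeManager("node-1")
nm.launch("c1", 1024)
nm.launch("c2", 2048)
nm.record_usage("c1", 1500)  # over its 1024 MB allocation
nm.record_usage("c2", 512)   # well within its 2048 MB allocation
report = nm.heartbeat()
print(report["containers"])  # {'c1': 'KILLED', 'c2': 'RUNNING'}
```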

Application Master

The Application Master is, in effect, an instance of a framework-specific library. It is responsible for negotiating appropriate resource Containers from the Resource Manager and for working with the Node Manager(s) to execute the Containers and monitor their status and resource consumption. The Application Master itself runs as a single Container that is monitored by the Resource Manager.

Container

A Container is a resource allocation, which is the successful result of the Resource Manager granting a specific Resource Request. A Container grants rights to an application to use a specific amount of resources (memory, CPU, etc.) on a specific host. In order to utilize Container resources, the Application Master must take the Container and present it to the Node Manager managing the host on which the Container was allocated. The Container allocation is verified in secure mode to ensure that Application Master(s) cannot fake allocations in the cluster.
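The grant-then-present flow, and why verification stops an Application Master from faking an allocation, can be sketched with a signed allocation. This is a toy scheme under stated assumptions and not YARN's actual token format: the shared `SECRET`, the field names, and the functions `rm_grant` and `nm_accept` are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical key known only to the Resource Manager and the Node Managers.
SECRET = b"cluster-shared-secret"


def sign(container_id: str, host: str, memory_mb: int) -> str:
    """Compute a signature over the fields that define the allocation."""
    message = f"{container_id}|{host}|{memory_mb}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def rm_grant(container_id: str, host: str, memory_mb: int) -> dict:
    """Resource Manager side: return an allocation together with its signature."""
    return {
        "id": container_id,
        "host": host,
        "memory_mb": memory_mb,
        "token": sign(container_id, host, memory_mb),
    }


def nm_accept(host: str, container: dict) -> bool:
    """Node Manager side: accept only an untampered allocation made for this host."""
    if container["host"] != host:
        return False
    expected = sign(container["id"], container["host"], container["memory_mb"])
    return hmac.compare_digest(expected, container["token"])


granted = rm_grant("container_01", "node-1", 2048)
forged = dict(granted, memory_mb=8192)  # an Application Master inflating its allocation

ok_granted = nm_accept("node-1", granted)
ok_forged = nm_accept("node-1", forged)
ok_wrong_host = nm_accept("node-2", granted)
print(ok_granted, ok_forged, ok_wrong_host)  # True False False
```

The Application Master only carries the allocation from the Resource Manager to the Node Manager. Because it does not hold the key, any change it makes to the allocation causes the Node Manager's check to fail.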

Job History Service

The Job History Service is a daemon that serves historical information about completed jobs. We recommend running it as a separate daemon. Because it saves job history information, it consumes considerable HDFS space. This daemon runs as the “mapred” user.