To deploy HDP on a single-node Windows Server machine:

At the host, complete all the prerequisites, as described earlier in Before You Begin.
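One of those prerequisites, a JAVA_HOME value that is set and contains no spaces, is easy to check before you start. The following is a minimal Python sketch of such a check, not part of the HDP tooling; the function name `check_java_home` is hypothetical and it assumes Python is available on the host.

```python
import os


def check_java_home(env=None):
    """Return a list of problems with JAVA_HOME; an empty list means it looks usable."""
    # Use the real process environment unless a mapping is passed in (handy for testing).
    env = os.environ if env is None else env
    java_home = env.get("JAVA_HOME", "").strip()
    problems = []
    if not java_home:
        problems.append("JAVA_HOME is not set")
    elif " " in java_home:
        # This guide asks for a Java install location without spaces in the path.
        problems.append("JAVA_HOME contains spaces: " + java_home)
    return problems


if __name__ == "__main__":
    for problem in check_java_home():
        print("WARNING:", problem)
```

The check only inspects the variable; it does not verify that a usable Java installation actually exists at that location.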

Note: Do not install Java in a location that has spaces in the path name. Before installation, set the JAVA_HOME environment variable.

Prepare the single-node machine.

Configure the firewall. HDP uses multiple ports for communication with clients and between service components. If your corporate policies require maintaining a per-server firewall, you must enable the ports listed in Configuring Ports. Use the following command to open these ports:

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=PORT_NUMBER

For example, the following command opens port 80 in the active Windows Firewall:

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=80

The following command opens all ports from 49152 to 65535 in the active Windows Firewall:

netsh advfirewall firewall add rule name=AllowRPCCommunication dir=in action=allow protocol=TCP localport=49152-65535

If your network security policies allow you to open all the ports, use the instructions at Microsoft TechNet to disable the Windows Firewall.
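If you need to open many ports, the netsh commands above all follow one pattern and can be generated rather than typed by hand. The following Python sketch only builds the command strings shown in this section; the helper name `firewall_rule_command` is hypothetical, and the ports in the demo are the two examples from the text, not the full list from Configuring Ports.

```python
def firewall_rule_command(ports, rule_name="AllowRPCCommunication"):
    """Build the netsh command used in this section for one port or port range.

    ports may be an int such as 80 or a string such as "49152-65535".
    """
    text = str(ports)
    parts = text.split("-")
    # Accept either a single port or a "low-high" range made of digits only.
    if len(parts) > 2 or not all(part.isdigit() for part in parts):
        raise ValueError("expected a port or a low-high range, got %r" % text)
    numbers = [int(part) for part in parts]
    if any(n < 1 or n > 65535 for n in numbers) or numbers != sorted(numbers):
        raise ValueError("port values out of range or reversed: %r" % text)
    return (
        "netsh advfirewall firewall add rule "
        "name=%s dir=in action=allow protocol=TCP localport=%s" % (rule_name, text)
    )


if __name__ == "__main__":
    # Example values taken from the text above.
    for ports in (80, "49152-65535"):
        print(firewall_rule_command(ports))
```

The script prints the commands instead of running them, so you can review them before pasting them into an elevated command prompt.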

Install and start HDP.

Download the HDP for Windows MSI file.

At the command prompt, enter:

runas /user:administrator "cmd /C msiexec /lv c:\hdplog.txt / i PATH_to_MSI_file MSIUSEREALADMINDETECTION=1"where

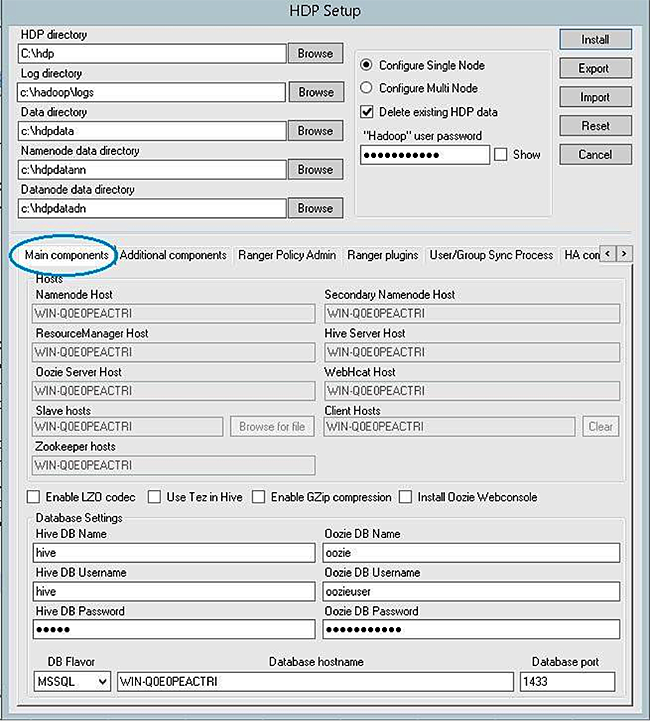

PATH_to_MSI_filematches the location of the downloaded MSI file. For example:runas /user:administrator "cmd /C msiexec /lv c:\hdplog.txt /i c:\ MSI_INSTALL\hdp-2.2.6.0.winpkg.msi MSIUSEREALADMINDETECTION=1"The HDP Setup window appears, pre-populated with the host name of the server and a set of default installation parameters. (The following image shows the

Main componentstab.)
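Because the msiexec call is nested inside the quoted runas argument, a stray space or quote in the command is an easy mistake to make. The following Python sketch assembles the same command line from an MSI path; the helper name `hdp_install_command` is hypothetical, and rejecting paths that contain spaces is a simplifying assumption made here to avoid nested quoting, not a documented installer requirement.

```python
def hdp_install_command(msi_path, log_path=r"c:\hdplog.txt"):
    """Return the runas/msiexec command line shown in this section for msi_path."""
    for label, value in (("MSI path", msi_path), ("log path", log_path)):
        # Assumption of this sketch: keep both paths free of spaces and quotes
        # so the inner command needs no additional escaping.
        if " " in value or '"' in value:
            raise ValueError("%s must not contain spaces or quotes: %r" % (label, value))
    inner = "cmd /C msiexec /lv %s /i %s MSIUSEREALADMINDETECTION=1" % (log_path, msi_path)
    return 'runas /user:administrator "%s"' % inner


if __name__ == "__main__":
    # Example path from the text above.
    print(hdp_install_command(r"c:\MSI_INSTALL\hdp-2.2.6.0.winpkg.msi"))
```

As with the firewall example, the sketch only prints the command; run the printed line at the command prompt yourself.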

Specify the following parameters in the HDP Setup window:

Mandatory parameters

Hadoop User Password: enter the password for the Hadoop super user (the administrative user). This password enables you to log in as the administrative user and perform administrative actions. Password requirements are controlled by Windows, and typically require that the password include a combination of uppercase and lowercase letters, digits, and special characters.

Hive and Oozie DB Names, Usernames, and Passwords: Set the DB (database) name, user name, and password for the Hive and Oozie metastores. You can use the boxes at the lower left of the HDP Setup window ("Hive DB Name", "Hive DB Username", etc.) to specify these parameters.

DB Flavor: To use the default embedded database for the single-node HDP installation, choose Derby. (The MSSQL option requires a Microsoft SQL Server database deployed in your environment and available for use by the metastore.)

Optional parameters

HDP Directory: The directory in which HDP will be installed. The default installation directory is c:\hdp.

Log Directory: The directory for the HDP service logs. The default location is c:\hadoop\logs.

Data Directory: The directory for user data for each HDP service. The default location is c:\hdpdata.

Delete Existing HDP Data: Selecting this checkbox removes all existing data from prior HDP installs. This ensures that HDFS starts with a formatted file system. For a single-node installation, it is recommended that you select this option to start with a freshly formatted HDFS.

Important: Before selecting "Delete Existing HDP Data", make sure that you want to delete all existing data from prior HDP installs.

Install HDP Additional Components: Select this checkbox to install ZooKeeper, Flume, Storm, Knox, or HBase as HDP services deployed to the single node server.

Note: When deploying HDP with LZO compression enabled, add the following three files (in the HDP for Windows Installation zip) to the directory that contains the HDP for Windows Installer and the cluster properties file:

hadoop-lzo-0.4.19.2.2.6.0-2060

gplcompression.dll

lzo2.dll
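Before starting an LZO-enabled install, you may want to confirm that the three files are actually in place. The following Python sketch lists whichever of them are missing from a given directory. The function name `missing_lzo_files` is hypothetical. File names are matched by prefix and without regard to case, on the assumption that the first name is printed in this note without its file extension.

```python
import os

# Names as listed in the note above; the first one has no extension there.
LZO_FILES = ("hadoop-lzo-0.4.19.2.2.6.0-2060", "gplcompression.dll", "lzo2.dll")


def missing_lzo_files(installer_dir, names=LZO_FILES):
    """Return the expected LZO files that are not present in installer_dir."""
    present = [entry.lower() for entry in os.listdir(installer_dir)]
    return [
        name
        for name in names
        # Prefix match so that an extension on the hadoop-lzo file is tolerated.
        if not any(entry.startswith(name.lower()) for entry in present)
    ]


if __name__ == "__main__":
    # Checks the current directory; pass the installer directory instead.
    for name in missing_lzo_files("."):
        print("Missing LZO file:", name)
```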

Click Install to install HDP.

The Export button on the HDP Setup window exports the configuration information for use in a CLI- or script-driven deployment. Clicking Export stops the installation and creates a clusterproperties.txt file that contains the configuration information specified in the fields of the HDP Setup window.

After you click Install, the HDP Setup window closes, and a progress indicator displays installer progress. The installation may take several minutes, and the time-remaining estimate may be inaccurate.

When installation is complete, a confirmation window displays.

Warning: If you are reinstalling HDP and wish to delete existing data, but you did not select the Delete Existing HDP Data checkbox, you must format the HDFS file system. Caution: Do not format the file system if you are upgrading an existing cluster and wish to preserve your data. Formatting will delete your existing HDP data. To format the HDFS file system and delete all existing HDP data, open the Hadoop Command Line shortcut on the Windows desktop and run the HDFS format command from there.

Start all HDP services on the single machine.

In a command prompt, navigate to the HDP install directory. (This is the "HDP directory" setting you specified in the HDP Setup window.) Enter:

%HADOOP_NODE%\start_local_hdp_services

Validating the Install

To verify that HDP services work as expected, run the provided smoke tests. These tests validate installed functionality by executing a set of tests for each HDP component.

Create a smoketest user directory in HDFS, if one does not already exist:

%HADOOP_HOME%\bin\hdfs dfs -mkdir -p /user/smoketest

%HADOOP_HOME%\bin\hdfs dfs -chown -R smoketest /user/smoketest

Run the provided smoke tests as the hadoop user:

runas /user:hadoop "cmd /K %HADOOP_HOME%\Run-SmokeTests.cmd"

(You could also create a smoketest user in HDFS as described in Appendix: Adding a Smoketest User, then run the tests as the smoketest user.)