What is Hadoop?

Hadoop is an open source framework for large-scale data processing. Hadoop enables companies to retain and make use of all the data they collect, performing complex analysis quickly and storing results securely over a number of distributed servers.

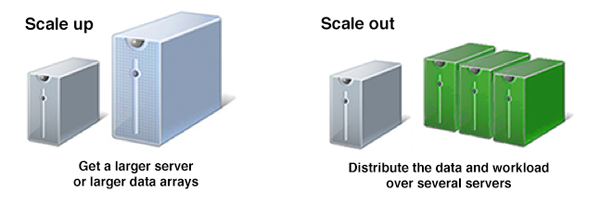

Traditional large-scale data processing was performed on a number of large computers. When demand increased, the enterprise would "scale up", replacing existing servers with larger servers or storage arrays. The advantage of this approach was that the increase in the size of the servers had no affect on the overall system architecture. The disadvantage was that scaling up was expensive.

Moreover, there's a limit on how much you can grow a system set up to process terabytes of information, and still keep the same system architecture. Sooner or later, when the amount of data collected becomes hundreds of terabytes or even petabytes, scaling up comes to a hard stop.

Scale-up strategies remain a good option for businesses that require intensive processing of data with strong internal cross-references and a need for transactional integrity. However, for growing enterprises that want to mine an ever-increasing flood of customer data, there is a better approach.

Using a Hadoop framework, large-scale data processing can respond to increased demand by "scaling out": if the data set doubles, you distribute processing over two servers; if the data set quadruples, you distribute processing over four servers. This eliminates the strategy of growing computing capacity by throwing more expensive hardware at the problem.

When individual hosts each possess a subset of the overall data set, the idea is for each host to work on their own portion of the final result independent of the others. In real life, it is likely that the hosts will need to communicate between each other, or that some pieces of data will be required by multiple hosts. This creates the potential for bottlenecks and increased risk of failure.

Therefore, designing a system where data processing is spread out over a number of servers means designing for self-healing distributed storage, fault-tolerant distributed computing, and abstraction for parallel processing. Since Hadoop is not actually a single product but a collection of several components, this design is generally accomplished through deployment of various components.

In addition to batch, interactive and real-time data access, the Hadoop "ecosystem" includes components that support critical enterprise requirements such as Security, Operations and Data Governance. To learn more about how critical enterprise requirements are implemented within the Hadoop ecosystem, see the next section in this guide, "Understanding the Hadoop Ecosystem."

For a look at a typical Hadoop cluster, see the final section in this guide, "Typical Hadoop Cluster."