Chapter 4. Query Optimization

This provides a checklist of common issues that you can use to diagnose query performance in Hive.

![[Tip]](../common/images/admon/tip.png) | Tip |

|---|---|

To view query execution in Hive, use the Ambari Hive View, which has a Visual Explain feature that presents a graphical representation of how the query executes. See the Ambari Views Guide. |

If a query is slow, check the following:

Are you getting the correct parallelism in the Mappers/Reducers?

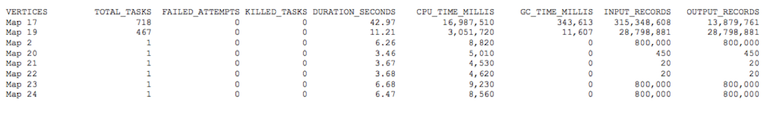

You can see the number of tasks running per vertex using Hive View or Tez View. You can then modify the parallelism to check if that helps.

If reducers do not have the correct parallelism, check hive.exec.reducers.bytes.per.reducer. You can change this to a smaller value to increase parallelism or change it to a larger value to decrease parallelism.

If mappers do not have the correct parallelism, you can modify tez.am.grouping.split-waves. This is set to 1.7 by default, which means that the number of tasks set for a vertex is equal to 1.7 of the available containers in the queue. Adjusting this to a lower value increases parallelism but allocates less resources per job.

Are you getting unusually high garbage collection times?

Sometimes garbage collection inside the Java Virtual Machine can take up a substantial portion of the total execution time. Check garbage collection time against the CPU time by either enabling hive.tez.exec.print.summary, or by checking the Tez UI:

If you see high garbage collection times, identify the operator that is causing it. Based on the operator that is causing the high garbage collection times, you can take the following actions:

Map join: A large hash table can cause high garbage collection and can negatively affect performance. For versions of HDP prior to 2.3, you can reduce hive.auto.convert.join.noconditionaltask.size to reduce the number of map joins, changing them into shuffle joins instead. However, this can decrease performance. Alternatively, you can increase the container size, still using map joins, but there will be more memory available to reduce the effects of garbage collection. In HDP 2.3 and later, map join operators support spilling if the hash table is too large. In this case, garbage collection time is not high, but the join spill of the larger hash table may impact the runtime performance.

Insert into ORC: If inserting into a table that has a large number of columns, try reducing hive.exec.orc.default.bagger.size to 64KB or increase the container size.

Insert into partitioned table: Inserting a large number of tasks into multiple partitions at the same time can create memory pressure. If this is the case, enable hive.optimize.sort.dynamic.partition. Do not enable this flag when inserting into a small number of partitions (less than 10) because this can slow query performance.

Are you getting a shuffle join and not a map join for smaller dimension tables?

hive.auto.convert.join.noconditionaltask.size determines whether a table is broadcasted or shuffled for a join. If the small table size is larger than hive.auto.convert.join.noconditonaltask.size a shuffle join is used. For accurate size accounting by the compiler, run

ANALYZE TABLE [table_name] COMPUTE STATISTICS for COLUMNS. Then enable hive.stats.fetch.column.stats. This enables the Hive physical optimizer to use more accurate per-column statistics instead of the uncompressed file size in HDFS.Are you getting an inefficient join order?

The cost-based optimizer (CBO) tries to generate the most efficient join order. For query plan optimization to work correctly, make sure that the columns that are involved in joins, filters, and aggregates have column statistics and that hive.cbo.enable is enabled. CBO does not support all operators, such as "sort by," scripts, and table functions. If your query contains these operators, rewrite the query to include only the supported operators.

If the CBO is still not generating the correct join order, rewrite the query using a Common Table Expression (CTE).