Chapter 2. Installing and Configuring Apache Atlas

Installing and Configuring Apache Atlas Using Ambari

To install Apache Atlas using Ambari, follow the procedure in Adding a Service to your Hadoop cluster in the Ambari User's Guide. On the Choose Services page, select the Atlas service. When you reach the Customize Services step in the Add Service wizard, set the following Atlas properties, then complete the remaining steps in the Add Service wizard. The Atlas user name and password are set to admin/admin by default.

Apache Atlas Prerequisites

Apache Atlas requires the following components:

Ambari Infra (which includes an internal HDP Solr Cloud instance) or an externally managed Solr Cloud instance.

HBase (used as the Atlas Metastore).

Kafka (provides a durable messaging bus).

Important: Ambari version 2.4.2, HDP-2.5.x, and Atlas version 0.7.x are the minimum supported versions.

Using Ambari-2.4.x to add or update any version of Atlas prior to 0.7.x (Atlas 0.7.x is included with HDP-2.5) is not supported.

Installation and usage of any version of Atlas prior to 0.7.x on any version of HDP prior to HDP-2.5 is not supported.

Versions of Atlas prior to Atlas 0.7.x (which is included in HDP-2.5) are not intended for production use. If you intend to use Atlas in production, we strongly recommend upgrading your HDP stack to HDP-2.5 and using Atlas 0.7.x (which is included in HDP-2.5.x).

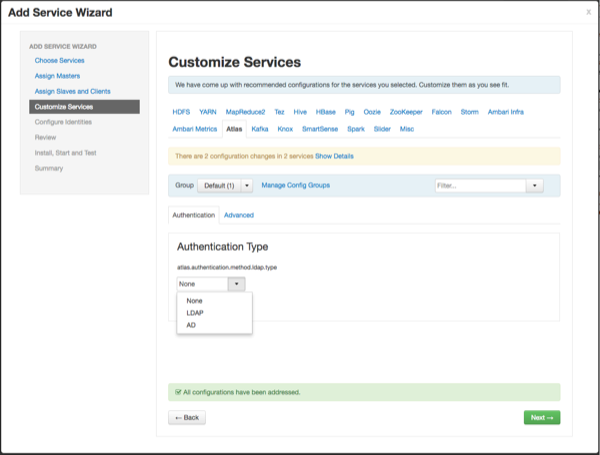

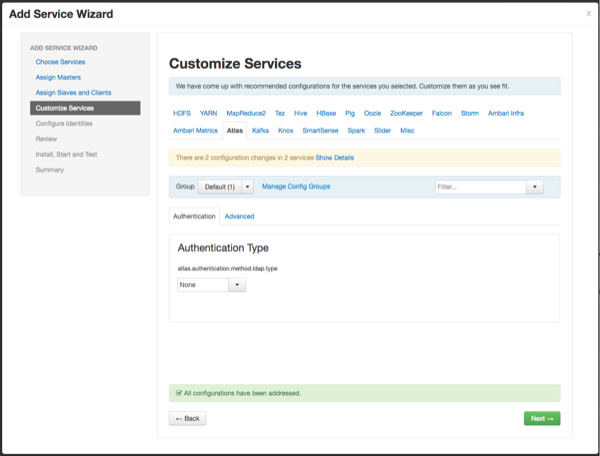

Authentication Settings

You can set the Authentication Type to None, LDAP, or AD. If authentication is set to None, file-based authentication is used.

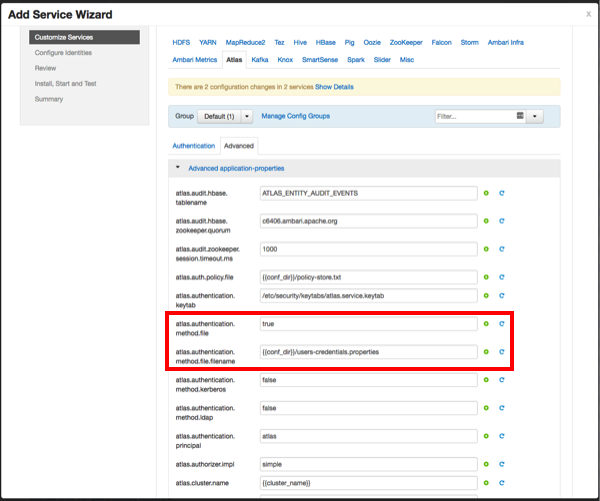

File-based Authentication

Select None to default to file-based authentication.

When file-based authentication is selected, the following properties are automatically set under Advanced application-properties on the Advanced tab.

Table 2.1. Apache Atlas File-based Configuration Settings

| Property | Value |
|---|---|
| atlas.authentication.method.file | true |
| atlas.authentication.method.file.filename | {{conf_dir}}/users-credentials.properties |

The users-credentials.properties file should have the following format:

username=group::sha256password

For example:

admin=ADMIN::e7cf3ef4f17c3999a94f2c6f612e8a888e5b1026878e4e19398b23bd38ec221a

The user group can be ADMIN, DATA_STEWARD, or DATA_SCIENTIST.

The password is stored as a SHA-256 hash, which can be generated using the UNIX sha256sum tool:

echo -n "Password" | sha256sum

The command prints the hash followed by a dash:

e7cf3ef4f17c3999a94f2c6f612e8a888e5b1026878e4e19398b23bd38ec221a -
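The hashing step can also be sketched in Python. This is a minimal illustration, not part of Atlas: the function name `credentials_line` and the `VALID_GROUPS` set are hypothetical helpers, and the only assumption about the file is the `username=group::sha256password` format described above.

```python
import hashlib

# Groups listed in this section; the set itself is a hypothetical helper.
VALID_GROUPS = {"ADMIN", "DATA_STEWARD", "DATA_SCIENTIST"}


def credentials_line(username: str, group: str, password: str) -> str:
    """Build one users-credentials.properties entry (hypothetical helper)."""
    if group not in VALID_GROUPS:
        raise ValueError(f"unknown group: {group}")
    # Hash the raw password bytes with no trailing newline,
    # which is what `echo -n "..." | sha256sum` hashes as well.
    digest = hashlib.sha256(password.encode("utf-8")).hexdigest()
    return f"{username}={group}::{digest}"


if __name__ == "__main__":
    print(credentials_line("admin", "ADMIN", "Password"))
```

The printed line can be pasted into users-credentials.properties. Avoid hard-coding real passwords in scripts; read them from a prompt or a protected file instead.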

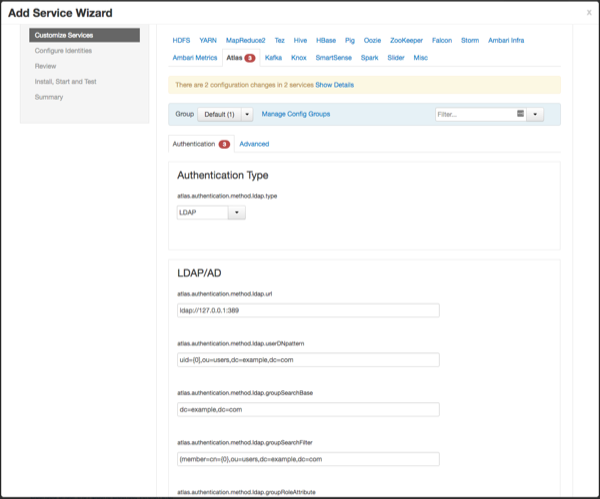

LDAP Authentication

To enable LDAP authentication, select LDAP, then set the following configuration properties.

Table 2.2. Apache Atlas LDAP Configuration Settings

| Property | Sample Values |
|---|---|
| atlas.authentication.method.ldap.url | ldap://127.0.0.1:389 |
| atlas.authentication.method.ldap.userDNpattern | uid={0},ou=users,dc=example,dc=com |
| atlas.authentication.method.ldap.groupSearchBase | dc=example,dc=com |
| atlas.authentication.method.ldap.groupSearchFilter | (member=cn={0},ou=users,dc=example,dc=com) |
| atlas.authentication.method.ldap.groupRoleAttribute | cn |
| atlas.authentication.method.ldap.base.dn | dc=example,dc=com |
| atlas.authentication.method.ldap.bind.dn | cn=Manager,dc=example,dc=com |
| atlas.authentication.method.ldap.bind.password | PassW0rd |
| atlas.authentication.method.ldap.referral | ignore |
| atlas.authentication.method.ldap.user.searchfilter | (uid={0}) |
| atlas.authentication.method.ldap.default.role | ROLE_USER |

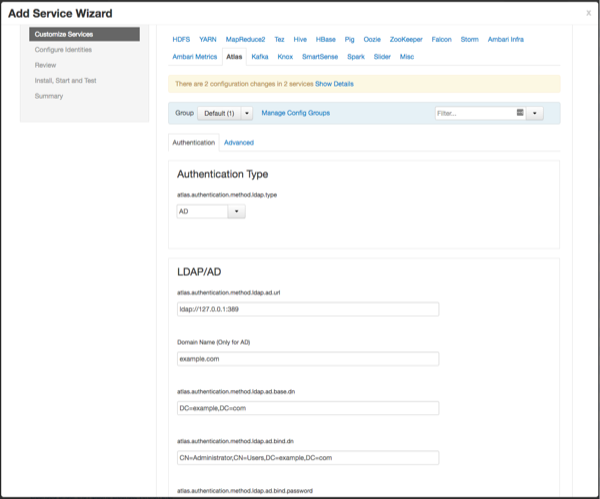
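The `{0}` token in the userDNpattern and user.searchfilter sample values is a placeholder. The sketch below assumes, as is conventional for this style of LDAP configuration, that it is replaced with the login name at authentication time. The `expand` function is a hypothetical illustration, not Atlas code, and it performs no LDAP escaping.

```python
def expand(template: str, username: str) -> str:
    """Substitute the login name for the {0} placeholder (illustration only)."""
    # Assumption: {0} stands for the user name typed at login.
    # No LDAP escaping is applied, so do not reuse this for real lookups.
    return template.replace("{0}", username)


# Sample values taken from the LDAP settings table.
user_dn_pattern = "uid={0},ou=users,dc=example,dc=com"
user_search_filter = "(uid={0})"

if __name__ == "__main__":
    print(expand(user_dn_pattern, "jdoe"))
    print(expand(user_search_filter, "jdoe"))
```

Expanding the sample values by hand like this is a quick way to check that the resulting DN matches an entry that actually exists in your directory.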

AD Authentication

To enable AD authentication, select AD, then set the following configuration properties.

Table 2.3. Apache Atlas AD Configuration Settings

| Property | Sample Values |
|---|---|
| atlas.authentication.method.ldap.ad.url | ldap://127.0.0.1:389 |
| Domain Name (Only for AD) | example.com |
| atlas.authentication.method.ldap.ad.base.dn | DC=example,DC=com |
| atlas.authentication.method.ldap.ad.bind.dn | CN=Administrator,CN=Users,DC=example,DC=com |
| atlas.authentication.method.ldap.ad.bind.password | PassW0rd |
| atlas.authentication.method.ldap.ad.referral | ignore |
| atlas.authentication.method.ldap.ad.user.searchfilter | (sAMAccountName={0}) |
| atlas.authentication.method.ldap.ad.default.role | ROLE_USER |

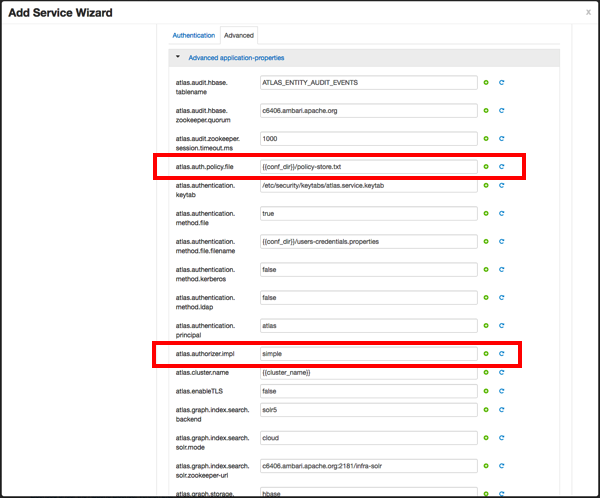

Authorization Settings

Two authorization methods are available for Atlas: Simple and Ranger.

Simple Authorization

The default setting is Simple, and the following properties are automatically set under Advanced application-properties on the Advanced tab.

Table 2.4. Apache Atlas Simple Authorization

| Property | Value |
|---|---|
| atlas.authorizer.impl | simple |
| atlas.auth.policy.file | {{conf_dir}}/policy-store.txt |

The policy-store.txt file has the following format:

Policy_Name;;User_Name:Operations_Allowed;;Group_Name:Operations_Allowed;;Resource_Type:Resource_Name

For example:

adminPolicy;;admin:rwud;;ROLE_ADMIN:rwud;;type:*,entity:*,operation:*,taxonomy:*,term:*

userReadPolicy;;readUser1:r,readUser2:r;;DATA_SCIENTIST:r;;type:*,entity:*,operation:*,taxonomy:*,term:*

userWritePolicy;;writeUser1:rwu,writeUser2:rwu;;BUSINESS_GROUP:rwu,DATA_STEWARD:rwud;;type:*,entity:*,operation:*,taxonomy:*,term:*

In this example, readUser1, readUser2, writeUser1, and writeUser2 are the user IDs, each with its corresponding access rights. The User_Name, Group_Name, and Operations_Allowed are comma-separated lists.

Authorizer Resource Types:

Operation

Type

Entity

Taxonomy

Term

Unknown

The Operations_Allowed values are r = read, w = write, u = update, and d = delete.
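To make the policy-store.txt format concrete, the following sketch splits one policy line into its four `;;`-separated fields. It is a minimal illustration under the format described above, not the parser Atlas itself uses; the `Policy` class and the `parse_policy` and `user_allowed` functions are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    name: str
    users: dict      # user name -> allowed operations, e.g. "rwu"
    groups: dict     # group name -> allowed operations
    resources: list  # (resource_type, resource_name) pairs


def _pairs(field: str) -> list:
    """Split 'a:x,b:y' into [('a', 'x'), ('b', 'y')]."""
    return [tuple(item.split(":", 1)) for item in field.split(",") if item]


def parse_policy(line: str) -> Policy:
    """Parse Policy_Name;;users;;groups;;resources (hypothetical helper)."""
    name, users, groups, resources = line.strip().split(";;")
    return Policy(name, dict(_pairs(users)), dict(_pairs(groups)), _pairs(resources))


def user_allowed(policy: Policy, user: str, operation: str) -> bool:
    """Check whether a user's operation string contains r, w, u, or d."""
    return operation in policy.users.get(user, "")


if __name__ == "__main__":
    sample = ("userWritePolicy;;writeUser1:rwu,writeUser2:rwu;;"
              "BUSINESS_GROUP:rwu,DATA_STEWARD:rwud;;"
              "type:*,entity:*,operation:*,taxonomy:*,term:*")
    policy = parse_policy(sample)
    print(policy.name, policy.users, user_allowed(policy, "writeUser1", "d"))
```

A check like this can catch a missing `;;` separator or a malformed `name:operations` pair before the file is deployed, because malformed lines raise an exception instead of parsing silently.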

Ranger Authorization

Ranger Authorization is activated by enabling the Ranger Atlas plug-in in Ambari.

Configuring Atlas Tagsync in Ranger

Note: Before configuring Atlas Tagsync in Ranger, you must enable Ranger Authorization in Atlas by enabling the Ranger Atlas plug-in in Ambari.

For information about configuring Atlas Tagsync in Ranger, see Configure Ranger Tagsync.

Configuring Atlas High Availability

For information about configuring High Availability (HA) for Apache Atlas, see Apache Atlas High Availability.

Configuring Atlas Security

Additional Requirements for Atlas with Ranger and Kerberos

Currently, additional configuration steps are required when Atlas is used with Ranger and when Atlas runs in a Kerberized environment.

Additional Requirements for Atlas with Ranger

When Atlas is used with Ranger, perform the following additional configuration steps:

Create the following HBase policy:

table: atlas_titan, ATLAS_ENTITY_AUDIT_EVENTS

user: atlas

permission: Read, Write, Create, Admin

Create the following Kafka policies:

topic=ATLAS_HOOK

permission=publish, create; group=public

permission=consume, create; user=atlas (for non-kerberized environments, set group=public)

topic=ATLAS_ENTITIES

permission=publish, create; user=atlas (for non-kerberized environments, set group=public)

permission=consume, create; group=public

Additional Requirements for Atlas with Kerberos without Ranger

When Atlas is used in a Kerberized environment without Ranger, perform the following additional configuration steps:

Start the HBase shell as the HBase admin user ('hbase').

Run the following command in the HBase shell to allow Atlas to create the necessary HBase tables:

grant 'atlas', 'RWXCA'

Start (or restart) Atlas so that it creates the necessary HBase tables.

Run the following command in the HBase shell to revoke the global permissions granted to the 'atlas' user:

revoke 'atlas'

Run the following commands in the HBase shell to allow Atlas to access the necessary HBase tables:

grant 'atlas', 'RWXCA', 'atlas_titan'

grant 'atlas', 'RWXCA', 'ATLAS_ENTITY_AUDIT_EVENTS'

Kafka – To grant permissions to a Kafka topic, run the following commands as the Kafka user:

/usr/hdp/current/kafka-broker/bin/kafka-acls.sh --topic ATLAS_HOOK --allow-principals * --operations All --authorizer-properties "zookeeper.connect=hostname:2181"

/usr/hdp/current/kafka-broker/bin/kafka-acls.sh --topic ATLAS_ENTITIES --allow-principals * --operations All --authorizer-properties "zookeeper.connect=hostname:2181"
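The per-table HBase grants and per-topic Kafka ACL commands above follow a fixed pattern, so they can be generated rather than typed by hand. The sketch below only assembles the same statements and arguments shown in this section; the function names are hypothetical, and `hostname:2181` is the placeholder ZooKeeper address from the commands above.

```python
KAFKA_ACLS = "/usr/hdp/current/kafka-broker/bin/kafka-acls.sh"


def hbase_table_grants(user: str, tables: list) -> list:
    """Return one HBase shell grant statement per table (hypothetical helper)."""
    return [f"grant '{user}', 'RWXCA', '{table}'" for table in tables]


def kafka_acl_args(topic: str, zookeeper: str) -> list:
    """Return the kafka-acls.sh argument list for one topic (hypothetical helper)."""
    # Only the flags that appear in this section are used.
    return [
        KAFKA_ACLS,
        "--topic", topic,
        "--allow-principals", "*",
        "--operations", "All",
        "--authorizer-properties", f"zookeeper.connect={zookeeper}",
    ]


if __name__ == "__main__":
    for statement in hbase_table_grants("atlas", ["atlas_titan", "ATLAS_ENTITY_AUDIT_EVENTS"]):
        print(statement)
    for topic in ("ATLAS_HOOK", "ATLAS_ENTITIES"):
        print(kafka_acl_args(topic, "hostname:2181"))
```

One possible use is to pass each argument list to `subprocess.run(args, check=True)` while running as the Kafka user. Because no shell is involved in that case, the `*` argument reaches the tool literally instead of being expanded against file names in the current directory.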

Enabling Atlas HTTPS

For information about enabling HTTPS for Apache Atlas, see Enable SSL for Apache Atlas.

Hive CLI Security

If you have Oozie, Storm, or Sqoop Atlas hooks enabled, the Hive CLI can be used with these components. You should be aware that the Hive CLI may not be secure without taking additional measures.

Installing Sample Atlas Metadata

You can use the quick_start.py Python script to install sample metadata to view in the Atlas web UI. Use the following steps to install the sample metadata:

Log in to the Atlas host server using a command prompt.

Run the following command as the Atlas user:

su atlas -c '/usr/hdp/current/atlas-server/bin/quick_start.py'

When prompted, type in the Atlas user name and password. When the script finishes running, the following confirmation message appears:

Example data added to Apache Atlas Server!!!

If Kerberos is enabled, kinit is required to execute the quick_start.py script.

After you have installed the sample metadata, you can explore the Atlas web UI.

Note: If you are using the HDP Sandbox, you do not need to run the Python script to populate Atlas with sample metadata.

Updating the Atlas Ambari Configuration

When you update the Atlas configuration settings in Ambari, Ambari marks the services that require restart, and you can select Actions > Restart All Required to restart all services that require a restart.

Important: Apache Oozie requires a restart after an Atlas configuration update, but may not be included in the services marked as requiring restart in Ambari. Select Oozie > Service Actions > Restart All to restart Oozie along with the other services.

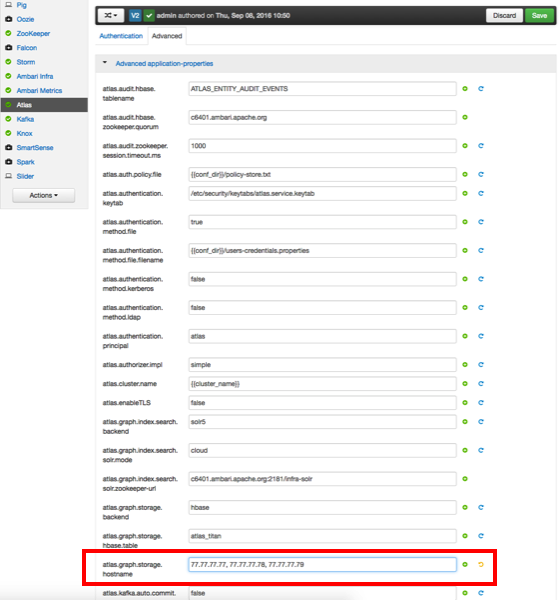

Using Distributed HBase as the Atlas Metastore

Apache HBase can be configured to run in stand-alone or distributed mode. The Atlas Ambari installer uses the stand-alone Ambari HBase instance as the Atlas Metastore by default. The default stand-alone HBase configuration should work well for proof-of-concept (POC) deployments, but you should consider using distributed HBase as the Atlas Metastore for production deployments. Distributed HBase also requires a ZooKeeper quorum.

Use the following steps to configure Atlas for distributed HBase.

Note: This procedure does not represent a migration of the Graph Database, so any existing lineage reports will be lost.

On the Ambari dashboard, select Atlas > Configs > Advanced, then select Advanced application-properties.

Set the value of the atlas.graph.storage.hostname property to the value of the distributed HBase ZooKeeper quorum. This value is a comma-separated list of the servers in the distributed HBase ZooKeeper quorum:

host1.mydomain.com,host2.mydomain.com,host3.mydomain.com

Click Save to save your changes, then restart Atlas and all other services that require a restart. As noted previously, Oozie requires a restart after an Atlas configuration change (even if it is not marked as requiring a restart).

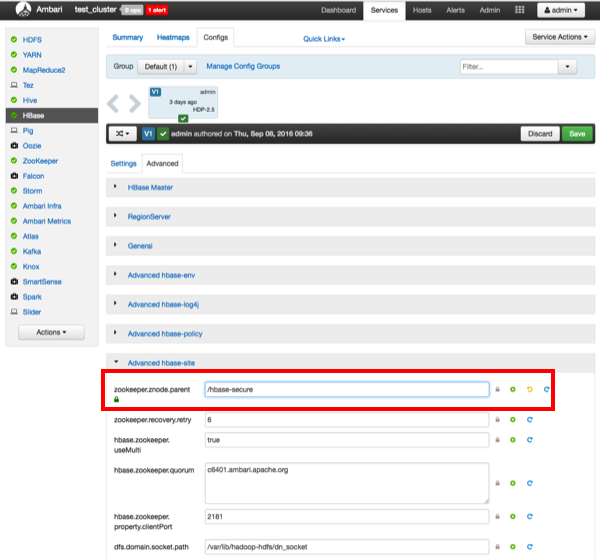

If HBase is running in secure mode, select HBase > Configs > Advanced on the Ambari dashboard, then select Advanced hbase-site. Set the value of the zookeeper.znode.parent property to /hbase-secure (if HBase is not running in secure mode, you can leave this property set to the default /hbase-unsecure value).

Click Save to save your changes, then restart HBase and all other services that require a restart.
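The two property values set in this procedure can be derived mechanically from a host list and the security mode. The sketch below is a small illustration of that derivation; the function names `zookeeper_quorum` and `znode_parent` are hypothetical, and the host names are the placeholders used above.

```python
def zookeeper_quorum(hosts: list) -> str:
    """Join host names into the atlas.graph.storage.hostname value."""
    # Drop surrounding whitespace and empty entries before joining.
    cleaned = [host.strip() for host in hosts if host.strip()]
    if not cleaned:
        raise ValueError("at least one ZooKeeper host is required")
    return ",".join(cleaned)


def znode_parent(secure: bool) -> str:
    """Return the zookeeper.znode.parent value for secure or non-secure HBase."""
    return "/hbase-secure" if secure else "/hbase-unsecure"


if __name__ == "__main__":
    hosts = ["host1.mydomain.com", "host2.mydomain.com", "host3.mydomain.com"]
    print("atlas.graph.storage.hostname =", zookeeper_quorum(hosts))
    print("zookeeper.znode.parent =", znode_parent(secure=True))
```

The values still need to be entered in Ambari as described in the steps above; the sketch only helps avoid stray spaces or empty entries in the comma-separated list.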