Chapter 1. HDP Data Governance

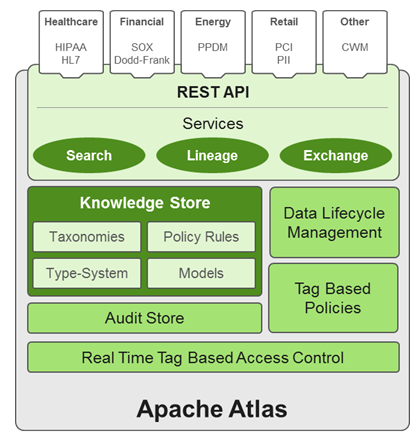

Apache Atlas provides governance capabilities for Hadoop that use both prescriptive and forensic models enriched by business taxonomical metadata. Atlas is designed to exchange metadata with other tools and processes within and outside of the Hadoop stack, thereby enabling platform-agnostic governance controls that effectively address compliance requirements.

Apache Atlas enables enterprises to effectively and efficiently address their compliance requirements through a scalable set of core governance services. These services include:

Search and Prescriptive Lineage – facilitates pre-defined and ad hoc exploration of data and metadata, while maintaining a history of data sources and how specific data was generated.

Metadata-driven data access control.

Flexible modeling of both business and operational data.

Data Classification – helps you to understand the nature of the data within Hadoop and classify it based on external and internal sources.

Metadata interchange with other metadata tools.

Apache Atlas Features

Apache Atlas is a low-level service in the Hadoop stack that provides core metadata services. Atlas currently provides metadata services for the following components:

Hive

Ranger

Sqoop

Storm/Kafka (limited support)

Falcon (limited support)

Apache Atlas provides the following features:

Knowledge store that leverages existing Hadoop metastores: Categorized into a business-oriented taxonomy of data sets, objects, tables, and columns. Supports the exchange of metadata between HDP foundation components and third-party applications or governance tools.

Data lifecycle management: Leverages existing investment in Apache Falcon with a focus on provenance, multi-cluster replication, data set retention and eviction, late data handling, and automation.

Audit store: Historical repository for all governance events, including security events (access, grant, deny) and operational events related to data provenance and metrics. The Atlas audit store is indexed and searchable for access to governance events.

Security: Integration with HDP security that enables you to establish global security policies based on data classifications and that leverages Apache Ranger plug-in architecture for security policy enforcement.

Policy engine: Fully extensible policy engine that supports metadata-based, geo-based, and time-based rules that rationalize at runtime.

RESTful interface: Supports extensibility by way of REST APIs to third-party applications so you can use your existing tools to view and manipulate metadata in the HDP foundation components.
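As an illustration of how a third-party tool might prepare a call to the REST interface, the following minimal sketch builds a search request without sending it. The host name, the port `21000`, and the `/api/atlas/discovery/search/dsl` path are assumptions that may differ by Atlas version and deployment; the helper name `build_dsl_search_request` is hypothetical, and the query string is only an example.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed base URL of an Atlas server; adjust for your cluster.
ATLAS_BASE_URL = "http://atlas.example.com:21000"


def build_dsl_search_request(query, base_url=ATLAS_BASE_URL):
    """Build (but do not send) a GET request for a metadata search."""
    # Assumed endpoint path; check the REST documentation for your Atlas version.
    url = base_url + "/api/atlas/discovery/search/dsl?" + urlencode({"query": query})
    return Request(url, headers={"Accept": "application/json"}, method="GET")


# Example: prepare a search for all entities of the hive_table type.
request = build_dsl_search_request("hive_table")
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes it easy to inspect the URL before pointing an existing tool at a real cluster.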

Atlas Architecture

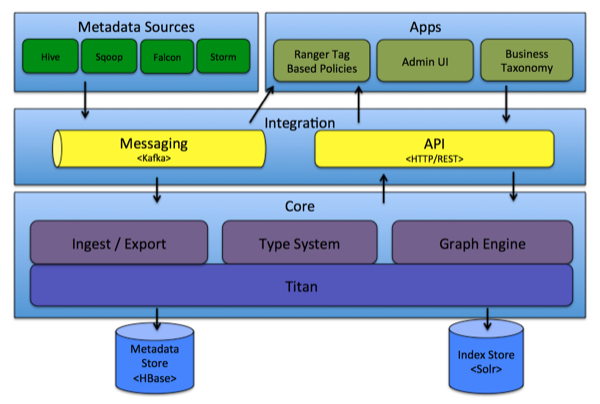

Core

This category contains the components that implement the core of Atlas functionality, including:

Type System: Atlas allows you to define a model for metadata objects. The model is composed of definitions called "types". "Entities" are instances of types that represent the actual metadata objects. The Type System allows you to define and manage types and entities. All metadata objects managed by Atlas out-of-the-box (such as Hive tables) are modelled using types and represented as entities.

One key point to note is that the generic nature of the modelling in Atlas allows data stewards and integrators to define both technical metadata and business metadata. It is also possible to use Atlas to define rich relationships between technical and business metadata.

Ingest / Export: The Ingest component allows metadata to be added to Atlas. Similarly, the Export component exposes metadata changes detected by Atlas to be raised as events. Consumers can use these change events to react to metadata changes in real time.

Graph Engine: Internally, Atlas represents metadata objects using a Graph model. This facilitates flexibility and rich relationships between metadata objects. The Graph Engine is the component responsible for translating between the types and entities of the Type System and the underlying Graph model. In addition to managing the Graph objects, the Graph Engine also creates the appropriate indices for the metadata objects to facilitate efficient searches.

Titan: Currently, Atlas uses the Titan Graph Database to store the metadata objects. Titan is used as a library within Atlas. Titan uses two stores. The Metadata store is configured to use HBase by default, and the Index store is configured to use Solr. It is also possible to use BerkeleyDB as the Metadata store, and ElasticSearch as the Index store, by building with those corresponding profiles. The Metadata store is used for storing the metadata objects, and the Index store is used for storing indices of the Metadata properties to enable efficient search.
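The relationship between types, entities, the graph, and the index can be sketched in a few lines of plain Python. This is a toy in-memory model under stated assumptions, not how Atlas or Titan is implemented: the class names `TypeDef`, `Entity`, and `MetadataGraph`, the `business_term` type, and the `described_by` edge label are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TypeDef:
    """A type: a name plus the attribute names its entities must carry."""
    name: str
    attributes: tuple


@dataclass
class Entity:
    """An entity: an instance of a type with concrete attribute values."""
    guid: str
    type_name: str
    values: dict


class MetadataGraph:
    """Toy stand-in for the graph model: vertices, labeled edges, and an index."""

    def __init__(self):
        self.types = {}
        self.vertices = {}
        self.edges = defaultdict(list)  # guid -> [(label, target guid)]
        self.index = defaultdict(set)   # (attribute, value) -> {guid}

    def register_type(self, type_def):
        self.types[type_def.name] = type_def

    def add_entity(self, entity):
        type_def = self.types[entity.type_name]  # KeyError if the type is unknown
        missing = set(type_def.attributes) - set(entity.values)
        if missing:
            raise ValueError(f"missing attributes: {sorted(missing)}")
        self.vertices[entity.guid] = entity
        # Index every attribute value so searches avoid scanning all vertices.
        for attribute, value in entity.values.items():
            self.index[(attribute, value)].add(entity.guid)

    def relate(self, source_guid, label, target_guid):
        self.edges[source_guid].append((label, target_guid))

    def search(self, attribute, value):
        return sorted(self.index.get((attribute, value), set()))


graph = MetadataGraph()
graph.register_type(TypeDef("hive_table", ("name", "owner")))
graph.register_type(TypeDef("business_term", ("name",)))  # hypothetical business type
graph.add_entity(Entity("t1", "hive_table", {"name": "customers", "owner": "etl"}))
graph.add_entity(Entity("b1", "business_term", {"name": "Customer"}))
graph.relate("t1", "described_by", "b1")  # link technical and business metadata
print(graph.search("owner", "etl"))
```

The split between `vertices` and `index` loosely mirrors the split between the Metadata store and the Index store described above: one holds the objects, the other exists only to make lookups by property efficient.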

Integration

You can manage metadata in Atlas using the following methods:

API: All functionality of Atlas is exposed to end users via a REST API that allows types and entities to be created, updated, and deleted. It is also the primary mechanism to query and discover the types and entities managed by Atlas.

Atlas Admin UI: This component is a web-based application that allows data stewards and scientists to discover and annotate metadata. Of primary importance here is a search interface and SQL-like query language that can be used to query the metadata types and objects managed by Atlas. The Admin UI is built using the Atlas REST API.

Messaging: In addition to the API, you can integrate with Atlas using a messaging interface that is based on Kafka. This is useful both for communicating metadata objects to Atlas and for transmitting metadata change events from Atlas to applications. The messaging interface is particularly useful if you would like to use a more loosely coupled integration with Atlas that could allow for better scalability and reliability. Atlas uses Apache Kafka as a notification server for communication between hooks and downstream consumers of metadata notification events. Events are written by the hooks and Atlas to different Kafka topics.
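The flow of messages between hooks, Atlas, and downstream consumers can be sketched with an in-memory stand-in for Kafka. This is an illustration only: the topic names `ATLAS_HOOK` and `ATLAS_ENTITIES` are assumptions, the message fields are hypothetical rather than the real notification format, and `InMemoryBroker` replaces an actual Kafka client.

```python
import json
from collections import defaultdict, deque

# Assumed topic names: one written by hooks, one written by Atlas.
HOOK_TOPIC = "ATLAS_HOOK"
ENTITIES_TOPIC = "ATLAS_ENTITIES"


class InMemoryBroker:
    """Stand-in for Kafka: one FIFO queue of serialized messages per topic."""

    def __init__(self):
        self.topics = defaultdict(deque)

    def publish(self, topic, message):
        self.topics[topic].append(json.dumps(message))

    def poll(self, topic):
        queue = self.topics[topic]
        return json.loads(queue.popleft()) if queue else None


def hook_report_table(broker, table_name):
    """A component hook reports a new metadata object to Atlas."""
    broker.publish(HOOK_TOPIC, {"operation": "ENTITY_CREATE", "name": table_name})


def atlas_process_one(broker, store):
    """Atlas consumes one hook message, stores it, and emits a change event."""
    message = broker.poll(HOOK_TOPIC)
    if message is None:
        return False
    store[message["name"]] = message
    broker.publish(ENTITIES_TOPIC, {"event": "ENTITY_CREATED", "name": message["name"]})
    return True


broker, store = InMemoryBroker(), {}
hook_report_table(broker, "customers")
atlas_process_one(broker, store)
# A downstream consumer reads the change event from the second topic.
print(broker.poll(ENTITIES_TOPIC))
```

Because the hook and the consumer only share topics, neither needs to know whether Atlas is currently reachable, which is the loose coupling the messaging interface is meant to provide.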

Metadata Sources

Currently, Atlas supports ingesting and managing metadata from the following sources:

Hive

Sqoop

Storm/Kafka (limited support)

Falcon (limited support)

As a result of this integration:

There are metadata models that Atlas defines natively to represent objects of these components.

Atlas provides mechanisms to ingest metadata objects from these components (in real time, or in batch mode in some cases).

Atlas-Ranger Integration

Atlas provides data governance capabilities and serves as a common metadata store that is designed to exchange metadata both within and outside of the Hadoop stack. Ranger provides a centralized user interface that can be used to define, administer, and manage security policies consistently across all the components of the Hadoop stack. The Atlas-Ranger integration unites the data classification and metadata store capabilities of Atlas with security enforcement in Ranger.

You can use Atlas and Ranger to implement dynamic classification-based security policies, in addition to role-based security policies. Ranger’s centralized platform empowers data administrators to define security policy based on Atlas metadata tags or attributes and apply this policy in real-time to the entire hierarchy of assets including databases, tables, and columns, thereby preventing security violations.

Ranger-Atlas Access Policies

Classification-based access controls: A data asset such as a table or column can be marked with a metadata tag related to compliance or business taxonomy (such as “PCI”). This tag is then used to assign permissions to a user or group. This represents an evolution from role-based entitlements, which require discrete and static one-to-one mapping between users or groups and resources such as tables or files. As an example, a data steward can create a classification tag “PII” (Personally Identifiable Information) and assign it to certain Hive tables or columns. By doing this, the data steward denotes that any data stored in those columns or tables must be treated as “PII”. The data steward can then build a security policy in Ranger for this classification that allows certain groups or users to access the data associated with it, while denying access to other groups or users. Any access to data classified as “PII” by Atlas is then automatically subject to the Ranger policy already defined.

Data Expiry-based access policy: For certain business use cases, data can be toxic and have an expiration date for business usage. This use case can be achieved with Atlas and Ranger. Apache Atlas can assign expiration dates to a data tag. Ranger inherits the expiration date and automatically denies access to the tagged data after the expiration date.

Location-specific access policies: Similar to time-based access policies, administrators can now customize entitlements based on geography. For example, a US-based user might be granted access to data while she is in a domestic office, but not while she is in Europe. Although the same user may be trying to access the same data, the different geographical context would apply, triggering a different set of privacy rules to be evaluated.

Prohibition against dataset combinations: With Atlas-Ranger integration, it is now possible to define a security policy that restricts combining two data sets. For example, consider a scenario in which one column consists of customer account numbers, and another column contains customer names. These columns may be in compliance individually, but pose a violation if combined as part of a query. Administrators can now apply a metadata tag to both data sets to prevent them from being combined.
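The four policy types above can be illustrated with a small evaluation function. This is a minimal sketch under stated assumptions, not Ranger's policy engine or its policy format: `TagPolicy`, `TaggedAsset`, `is_access_allowed`, `violates_combination`, and the `NO_COMBINE` tag are hypothetical names, and the choice to deny tagged data that has no matching policy is a simplification made for this example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class TagPolicy:
    """Hypothetical tag-based policy: who may read data carrying a given tag."""
    tag: str
    allowed_groups: frozenset
    allowed_countries: Optional[frozenset] = None  # None means any location


@dataclass(frozen=True)
class TaggedAsset:
    """A table or column together with its classification tags."""
    name: str
    tags: frozenset
    expires_on: Optional[date] = None  # expiration date attached to the tag


def is_access_allowed(asset, policies, user_groups, country, today):
    """Allow access only if every tag on the asset has a policy that permits it."""
    if asset.expires_on is not None and today > asset.expires_on:
        return False  # data expiry: deny after the expiration date
    for tag in asset.tags:
        policy = policies.get(tag)
        if policy is None:
            return False  # simplification: tagged data without a policy is denied
        if not (policy.allowed_groups & set(user_groups)):
            return False  # classification-based control
        if policy.allowed_countries is not None and country not in policy.allowed_countries:
            return False  # location-specific control
    return True


def violates_combination(assets, no_combine_tag):
    """True if a query touches two or more assets that share the no-combine tag."""
    return sum(1 for asset in assets if no_combine_tag in asset.tags) >= 2


policies = {"PII": TagPolicy("PII", frozenset({"compliance"}), frozenset({"US"}))}
names = TaggedAsset("customers.name", frozenset({"PII"}), expires_on=date(2030, 1, 1))
# Same user, same data, different location: allowed in the US, denied elsewhere.
print(is_access_allowed(names, policies, ["compliance"], "US", date(2025, 6, 1)))
print(is_access_allowed(names, policies, ["compliance"], "DE", date(2025, 6, 1)))
```

Note that the policy refers only to the tag, never to a specific table or column, so newly tagged assets are covered without editing the policy.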

Cross Component Lineage

Apache Atlas now provides the ability to visualize cross-component lineage, delivering a complete view of data movement across a number of analytic engines such as Apache Storm, Kafka, Falcon, and Hive.

This functionality offers important benefits to data stewards and auditors. For example, data that starts as event data through a Kafka bolt or Storm topology, is then analyzed as an aggregated dataset through Hive, and is finally combined with reference data from an RDBMS via Sqoop can be governed by Atlas at every stage of its lifecycle. Data stewards, Operations, and Compliance now have the ability to visualize a data set’s lineage, and then drill down into operational, security, and provenance-related details. Because this tracking is done at the platform level, any application that uses these engines is natively tracked. This allows for extended visibility beyond a single application view.
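Cross-component lineage amounts to walking a directed graph whose edges record which engine produced each data set. The following sketch traces the example above backwards from a final Hive data set. It is a toy model rather than the Atlas lineage API: `LineageGraph` and all data set names are hypothetical.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Toy lineage store: directed edges from an input data set to an output."""

    def __init__(self):
        self.upstream = defaultdict(list)  # output -> [(input, engine)]

    def record(self, source, target, engine):
        self.upstream[target].append((source, engine))

    def trace(self, dataset):
        """Breadth-first walk back to every ancestor, with the engine used."""
        seen, order, queue = {dataset}, [], deque([dataset])
        while queue:
            current = queue.popleft()
            for source, engine in self.upstream.get(current, []):
                order.append((source, engine, current))
                if source not in seen:
                    seen.add(source)
                    queue.append(source)
        return order


lineage = LineageGraph()
lineage.record("kafka:events", "storm:cleaned_events", "Storm")
lineage.record("storm:cleaned_events", "hive:daily_aggregates", "Hive")
lineage.record("rdbms:reference", "hive:reference", "Sqoop")
lineage.record("hive:daily_aggregates", "hive:report", "Hive")
lineage.record("hive:reference", "hive:report", "Hive")
for source, engine, target in lineage.trace("hive:report"):
    print(f"{source} --{engine}--> {target}")
```

Because each edge carries the engine that produced it, a single traversal crosses Storm, Sqoop, and Hive without any per-application bookkeeping.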