Preparing to create a Hive replication policy

Before you create the Hive replication policies in Cloudera Replication Manager, you must prepare the clusters and verify cluster access and cloud credentials.

- Do the source cluster and target cluster meet the requirements to create a Hive replication policy?

The following image shows the support matrix based on the lowest supported End of Support (EoS) versions that you can use for Hive replication policies. Consult the Support matrix for Cloudera Replication Manager for the complete list of supporting clusters and scenarios:

Figure 1. Lowest supported versions for Hive replication policies

- Is the source CDH cluster or source Cloudera Base on premises cluster registered as a classic cluster on the Management Console?

CDH clusters and Cloudera Base on premises clusters are managed by Cloudera Manager. To enable these on-premises clusters for Replication Manager, you must register them as Classic Clusters on the Management Console. After registration, you can use them for data migration purposes.

For information about registering an on-premises cluster as a classic cluster, see Adding a CDH cluster and Adding a Cloudera Base on premises cluster.

- Does the target Data Hub use Cloudera Manager 7.9.0 or higher? If not, upgrade Cloudera Manager to version 7.9.0 or higher.
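The version requirement above can be checked with a small script. The following is a minimal sketch, not a Cloudera tool: it assumes you already have the Cloudera Manager version as a string (for example, copied from the Cloudera Manager UI), and the names `MIN_CM_VERSION`, `parse_version`, and `meets_minimum` are hypothetical helpers introduced for illustration.

```python
import re

# Minimum Cloudera Manager version named in the requirement above.
MIN_CM_VERSION = (7, 9, 0)


def parse_version(text):
    """Return the leading dotted numeric part of a version string as a tuple of ints."""
    match = re.match(r"\s*(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"not a version string: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(version_text, minimum=MIN_CM_VERSION):
    """Return True if version_text is at least the minimum version."""
    version = parse_version(version_text)
    # Pad with zeros so that "7.9" compares equal to "7.9.0".
    width = max(len(version), len(minimum))
    padded_version = version + (0,) * (width - len(version))
    padded_minimum = minimum + (0,) * (width - len(minimum))
    return padded_version >= padded_minimum
```

The comparison is numeric per component, so `meets_minimum("7.11.3")` is true even though "7.11" sorts before "7.9" as plain text.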

-

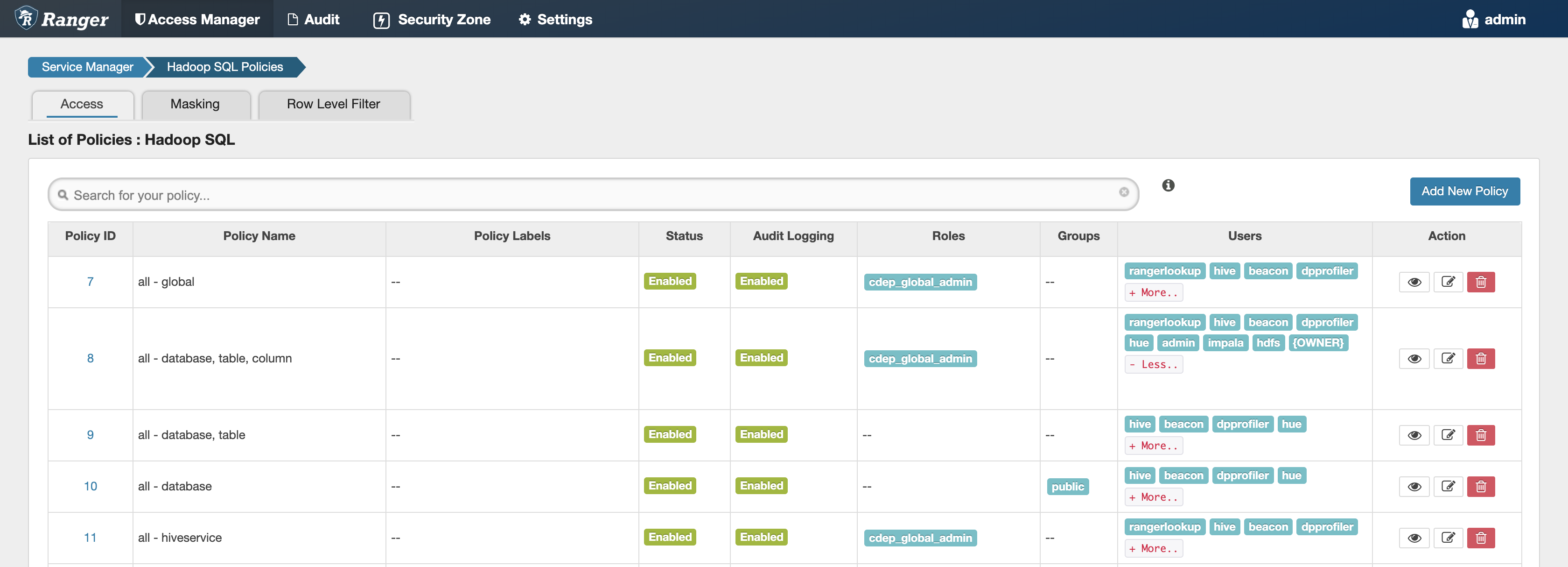

Have you configured the all-database, table, column Ranger

policy for the hdfs user on the source and target cluster

to perform all the operations on all databases and tables?

The hdfs user role is used to import Hive Metastore and must have access to all Hive datasets, including all operations. Otherwise, Hive import fails during the replication process. To provide access, go to the Ranger Admin Web UI > Service Manager > Hadoop_SQL Policies > Access section, and grant permissions to the hdfs user in the all-database, table, column policy.
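If you export the policy from Ranger as JSON, you can inspect it offline to confirm which access types the hdfs user holds. The following is a minimal sketch under stated assumptions: it assumes the exported policy follows the Ranger policy model, with a `policyItems` list whose entries carry `users` and `accesses` fields, and it only considers users named directly in the policy, not access granted through groups or roles. The function name `user_accesses` is hypothetical.

```python
def user_accesses(policy, user):
    """Return the set of access types that the policy allows for the user directly.

    `policy` is a dict parsed from an exported Ranger policy (assumed shape:
    {"policyItems": [{"users": [...], "accesses": [{"type": ..., "isAllowed": ...}]}]}).
    """
    granted = set()
    for item in policy.get("policyItems", []):
        if user not in item.get("users", []):
            continue
        for access in item.get("accesses", []):
            # Assumption: a missing isAllowed flag is treated as allowed.
            if access.get("isAllowed", True):
                granted.add(access["type"])
    return granted
```

An empty result for `user_accesses(policy, "hdfs")` suggests that the hdfs user is not listed directly in the policy and that the Ranger configuration needs review.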

Before you select the Additional Settings > Include Sentry Permissions with Metadata option during the Hive replication policy creation process, ensure that the hive user has the Admin privileges in the Ranger service on the target cluster. This is because the import operation of the Ranger policies is performed by the hive user.

To provide the Admin privileges to the hive user, perform the following steps:

- Go to the Ranger Admin Web UI.

- Click the Settings > Users/Groups/Roles option.

- Click hive.

- Select Admin in the Select Role field on the User Detail page.

- Click Save.

The User list shows hive with the Admin role.

For more information, see Ranger documentation about user editing.

- Is an external account configured on the source CDH cluster's Cloudera Manager which allows the CDH cluster to access Cloudera cloud storage?

- Do you have the required cluster access to create replication policies?

Power users, the user who onboarded the source and target clusters, and users with the ClassicClusterAdmin or ClassicClusterUser resource role can create replication policies on clusters to which they have access. For more information, see Understanding account roles and resource roles.

- Do you have the required cluster access to view the replication policies?

Existing Hive replication policies are visible to users who have access to the source cluster in the replication policy. A warning appears if you do not have access to the source cluster.

If you can view the policies, you can perform other actions on the policy including policy update and policy delete operations.

- Is the required cloud credential that you want to use in the replication policy registered with the Replication Manager service?

For more information, see Working with cloud credentials.

- Are the following ports open and available for Replication Manager?

Table 1. Minimum ports required for Hive replication policies

| Connectivity required for | Default Port | Type | Description |
| --- | --- | --- | --- |
| Data transfer from classic cluster hosts to cloud storage | 80 or 443 (TLS) | Outbound | Outgoing port. All classic cluster nodes must be able to access S3/ADLS Gen2 endpoint. |
| Cloudera Manager Admin Console HTTP | 7180 or 7183 (when TLS enabled) | Inbound | Incoming port. Open on the source cluster to enable the target Cloudera Manager in cloud to communicate to the on-premises Cloudera Manager. |
| Classic cluster | 443 for CCMv2 | Outbound | Connecting the source classic cluster to the Cloudera Management Console through Cluster Connectivity Manager (CCM) |
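A quick way to confirm that a port from the table is reachable is to attempt a TCP connection from the host that initiates the traffic. The following is a minimal sketch that uses only the Python standard library. The helper names `can_connect` and `check_endpoints` are hypothetical, and the host names in the commented example are placeholders that you must replace with your own endpoints. A successful TCP connection shows only that the port is reachable from the host where the script runs; it does not validate TLS settings or credentials.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def check_endpoints(endpoints, timeout=5.0):
    """Map each (host, port) pair to whether a TCP connection succeeded."""
    return {(host, port): can_connect(host, port, timeout) for host, port in endpoints}


# Example (placeholder host names, run from a classic cluster node):
# results = check_endpoints([
#     ("my-bucket.s3.amazonaws.com", 443),   # hypothetical cloud storage endpoint
#     ("cm.onprem.example.com", 7183),       # hypothetical Cloudera Manager host
# ])
# for (host, port), ok in results.items():
#     print(f"{host}:{port} -> {'open' if ok else 'unreachable'}")
```

Run the outbound checks from the classic cluster nodes, and run the check of the Cloudera Manager port (7180 or 7183) from a host on the network that must reach the on-premises Cloudera Manager, because the result reflects the network path of the host where the script runs.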

For more information, see Outbound network access for CCM and CCM overview.

Consider the following best practices when using Microsoft Azure ADLS Gen2 (ABFS):

- Ensure that the on-premises cluster can access the https://login.microsoftonline.com endpoint on port 443. This is because the Hadoop client in the on-premises cluster (CDH/) connects to the endpoint to acquire the access tokens before it connects to Azure ADLS storage. For more information, see the General Azure guidelines row in the Azure-specific endpoints table.

- Ensure that the steps mentioned in the General Azure guidelines and Azure Data Lake Storage Gen 2 rows in the Azure-specific endpoints table are complete so that the endpoint connects to the target path successfully.

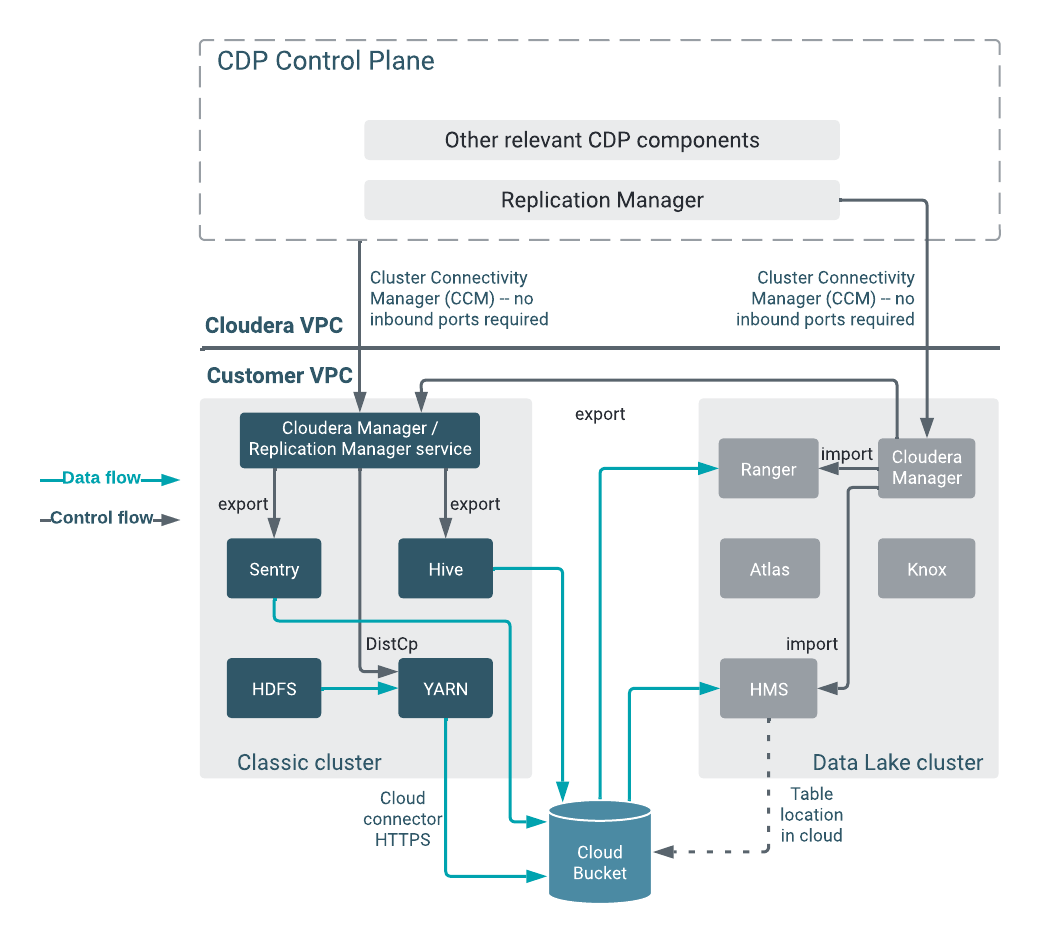

The following system architecture diagram shows the interaction between components during Hive replication using Hive replication policies:Figure 2. System architecture diagram for Hive replication in Cloudera Replication Manager