Troubleshooting replication policies in Cloudera Replication Manager

The scenarios in this topic help you troubleshoot issues in Cloudera Replication Manager.

Different methods to identify errors related to failed replication policy

What are the different methods to identify errors while troubleshooting a failed replication policy?

Replication Policies page does not display all the replication policies

The "Replication Policies" page might not display all the replication policies, depending on various factors. In such scenarios, you can reload the page, choose a load page option, or use CDP CLIs to view and monitor the replication policies and their statistics.

Problem

When a Cloudera Manager instance is slow, that is, when it handles more than 650 replication policies or is generally under heavy load, the ‘policy list request’ operation might slow down. In such scenarios, the replication policies take longer than expected to appear, or might not appear at all, on the Replication Policies page.

Solution

HDFS replication policy fails due to export HTTPS_PROXY environment variable

HDFS replication policies fail when the HTTPS_PROXY environment variable is exported to access AWS through proxy servers. How do you resolve this issue?
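Before applying a remedy, it can help to confirm whether the variable is actually set in the environment in question. The following is a minimal sketch, not part of Cloudera Replication Manager: the function name `find_https_proxy` is hypothetical, and checking the lowercase `https_proxy` spelling is an assumption based on the common convention that many tools honor both forms.

```python
import os


def find_https_proxy(env=None):
    """Return the HTTPS proxy variables that are set and non-empty, keyed by name.

    `env` defaults to the current process environment; pass a dict to
    inspect a different environment. Checking the lowercase spelling is an
    assumption, not something the product documentation states.
    """
    env = os.environ if env is None else env
    names = ("HTTPS_PROXY", "https_proxy")
    return {name: env[name] for name in names if env.get(name)}


if __name__ == "__main__":
    found = find_https_proxy()
    if found:
        for name, value in found.items():
            print(f"{name} is set to {value}")
    else:
        print("HTTPS_PROXY is not set in this environment")
```

The script only reports what the current process sees, so run it as the same user and in the same shell environment in which the variable is expected to be exported.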

Remedy

Cannot find destination clusters for HBase replication policies

When you ping the destination clusters by hostname from the source cluster hosts of an HBase replication policy, the source cluster hosts cannot find the destination clusters. How do you resolve this issue?

Cause

This might occur for on-premises clusters such as Cloudera Base on premises clusters or CDH clusters because the source clusters are not on the same network as the destination Data Hub. Therefore, hostnames cannot be resolved by the DNS service on the source cluster.

Remedy

10.115.74.181 dx-7548-worker2.dx-hbas.x2-8y.dev.dr.work

10.115.72.28 dx-7548-worker1.dx-hbas.x2-8y.dev.dr.work

10.115.73.231 dx-7548-worker0.dx-hbas.x2-8y.dev.dr.work

10.115.72.20 dx-7548-master1.dx-hbas.x2-8y.dev.dr.work

10.115.74.156 dx-7548-master0.dx-hbas.x2-8y.dev.dr.work

10.115.72.70 dx-7548-leader0.dx-hbas.x2-8y.dev.dr.work

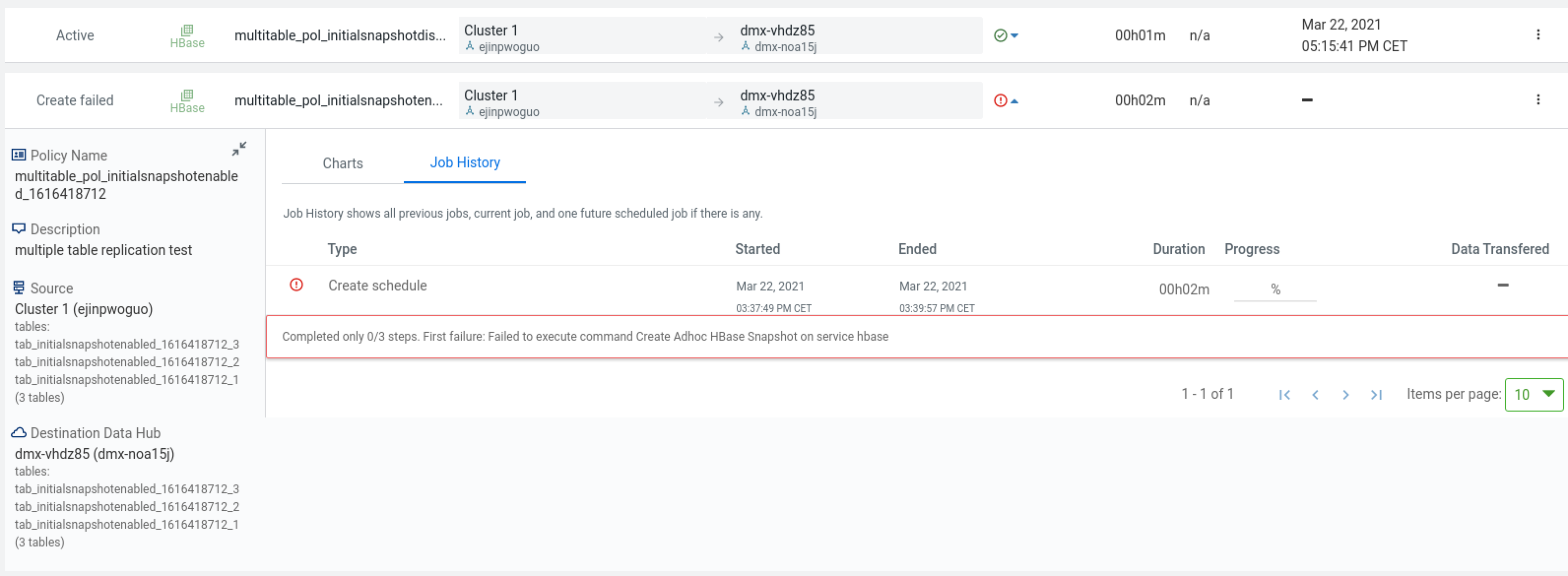

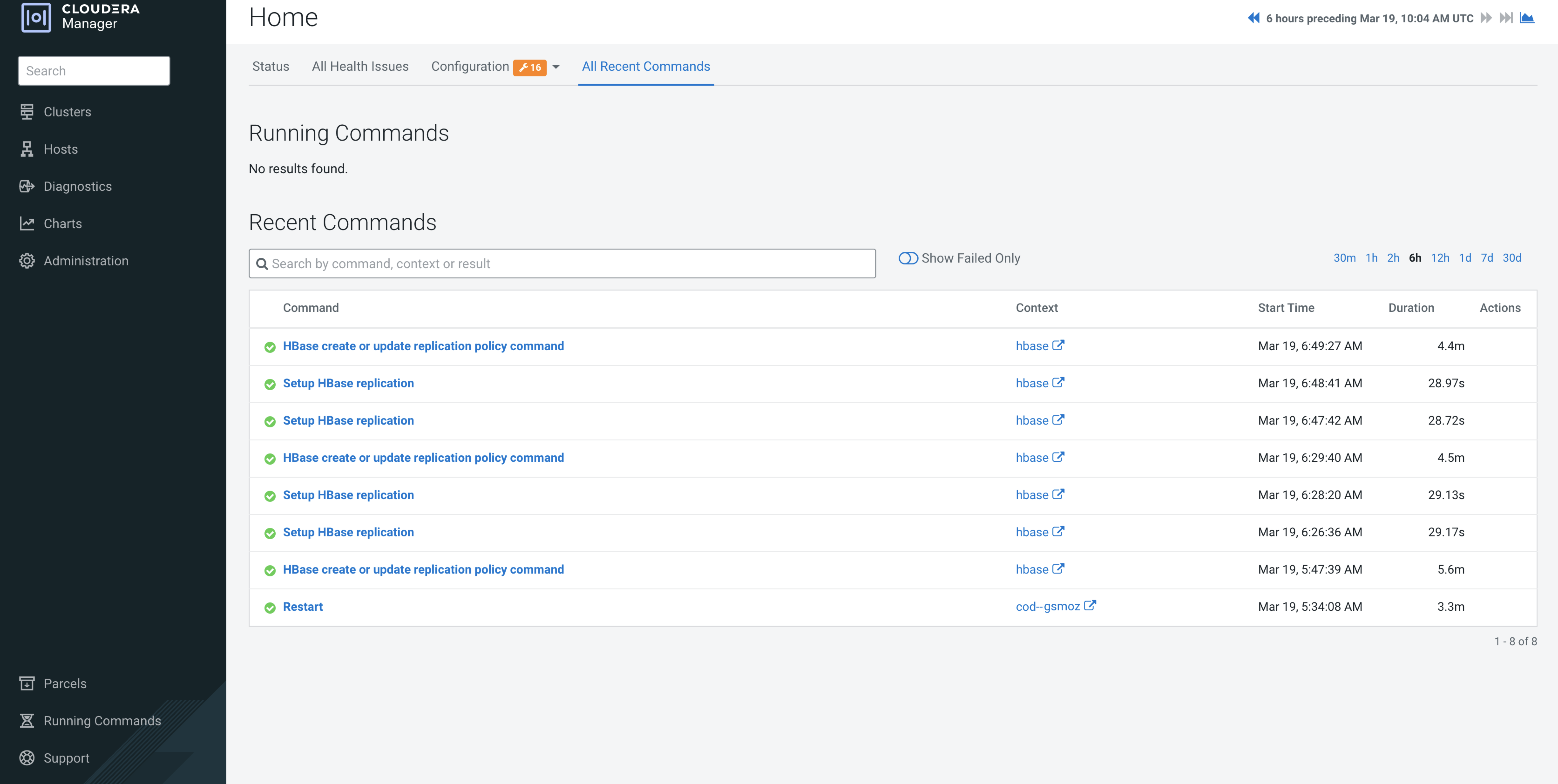
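The entries listed above pair an IP address with a destination hostname. As a minimal sketch, assuming you want to check from a source cluster host whether each of those hostnames currently resolves to the listed address, the following script parses the entries and tests them. The function names `parse_host_entries` and `check_resolution` are hypothetical helpers, not part of any Cloudera tool.

```python
import socket

# IP address and hostname pairs copied from the entries above.
HOST_ENTRIES = """
10.115.74.181 dx-7548-worker2.dx-hbas.x2-8y.dev.dr.work
10.115.72.28 dx-7548-worker1.dx-hbas.x2-8y.dev.dr.work
10.115.73.231 dx-7548-worker0.dx-hbas.x2-8y.dev.dr.work
10.115.72.20 dx-7548-master1.dx-hbas.x2-8y.dev.dr.work
10.115.74.156 dx-7548-master0.dx-hbas.x2-8y.dev.dr.work
10.115.72.70 dx-7548-leader0.dx-hbas.x2-8y.dev.dr.work
"""


def parse_host_entries(text):
    """Parse 'IP hostname [alias ...]' lines into a {hostname: ip} mapping."""
    entries = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            entries[name] = ip
    return entries


def check_resolution(entries, resolver=socket.gethostbyname):
    """Return {hostname: True/False}; True if it resolves to the expected IP."""
    results = {}
    for name, expected_ip in entries.items():
        try:
            results[name] = resolver(name) == expected_ip
        except OSError:  # socket.gaierror is a subclass of OSError
            results[name] = False
    return results


if __name__ == "__main__":
    for host, ok in check_resolution(parse_host_entries(HOST_ENTRIES)).items():
        print(f"{host}: {'resolves' if ok else 'does NOT resolve as expected'}")
```

The `resolver` parameter exists only so that the check can be exercised without network access; by default the script uses the resolver of the host it runs on.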

HBase replication policy fails when Perform Initial Snapshot is chosen

An HBase replication policy fails for COD on Microsoft Azure when the "Perform Initial Snapshot" option is chosen, but data replication succeeds when the option is not chosen. How do you resolve this issue?

Cause

This issue appears when the required managed identities of the source roles are not assigned.

Remedy

Optimize HBase replication policy performance when replicating HBase tables with several TB data

Can HBase replication policy performance be optimized when replicating HBase tables with several TB of data if the "Perform Initial Snapshot" option is chosen during HBase replication policy creation?

Complete the following manual steps to optimize HBase replication policy performance when replicating several TB of HBase data if you choose the Perform Initial Snapshot option during the HBase replication policy creation process.

Remedy

Partition metadata replication takes a long time to complete

How can partition metadata replication be improved when the Hive tables use several Hive partitions?

The Hive metadata replication process takes a long time to complete when the Hive tables use several Hive partitions. This is because the Hive partition parameters are compared during the import stage of the partition metadata replication process. If the exported and existing partition parameters do not match, the partition is dropped and recreated. You can configure a key-value pair to support partition metadata replication.
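To illustrate why mismatched parameters are costly, the following minimal sketch models the rule described above: a partition whose exported parameters differ from the existing ones is dropped and recreated. This is an illustration of the described behavior only, not Replication Manager's implementation; the function name, the partition names, and the sample parameter values are all hypothetical.

```python
def partitions_to_recreate(exported, existing):
    """Return the names of partitions whose parameters differ.

    `exported` and `existing` map a partition name to a dict of partition
    parameters. A partition present on both sides with different
    parameters is one that would be dropped and recreated under the rule
    described in the text.
    """
    return [
        name
        for name, params in exported.items()
        if name in existing and existing[name] != params
    ]


if __name__ == "__main__":
    # Hypothetical sample data: one partition matches, one does not.
    exported = {
        "dt=2024-01-01": {"numFiles": "4", "transient_lastDdlTime": "100"},
        "dt=2024-01-02": {"numFiles": "2", "transient_lastDdlTime": "200"},
    }
    existing = {
        "dt=2024-01-01": {"numFiles": "4", "transient_lastDdlTime": "100"},
        "dt=2024-01-02": {"numFiles": "2", "transient_lastDdlTime": "250"},
    }
    print(partitions_to_recreate(exported, existing))
```

With many partitions, even a single frequently changing parameter can place every partition in the returned list, which is consistent with the long run times described above.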

Replicating Hive nested tables

Cloudera Replication Manager does not support Hive nested tables. What do I do if there are Hive nested tables in the source cluster?

Cloudera Replication Manager does not support Hive nested tables for replication. Therefore, it is recommended that you move the nested tables to a different location in HDFS and then replicate Hive external tables. However, if this is not possible, you can perform the following steps in the given order as a workaround.

Solution

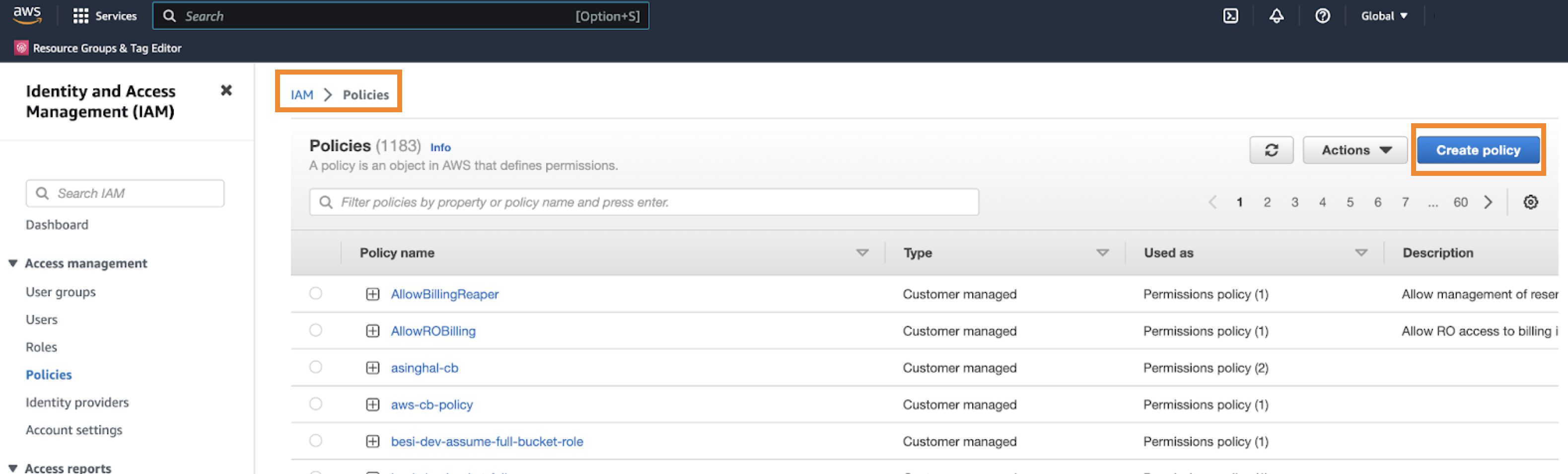

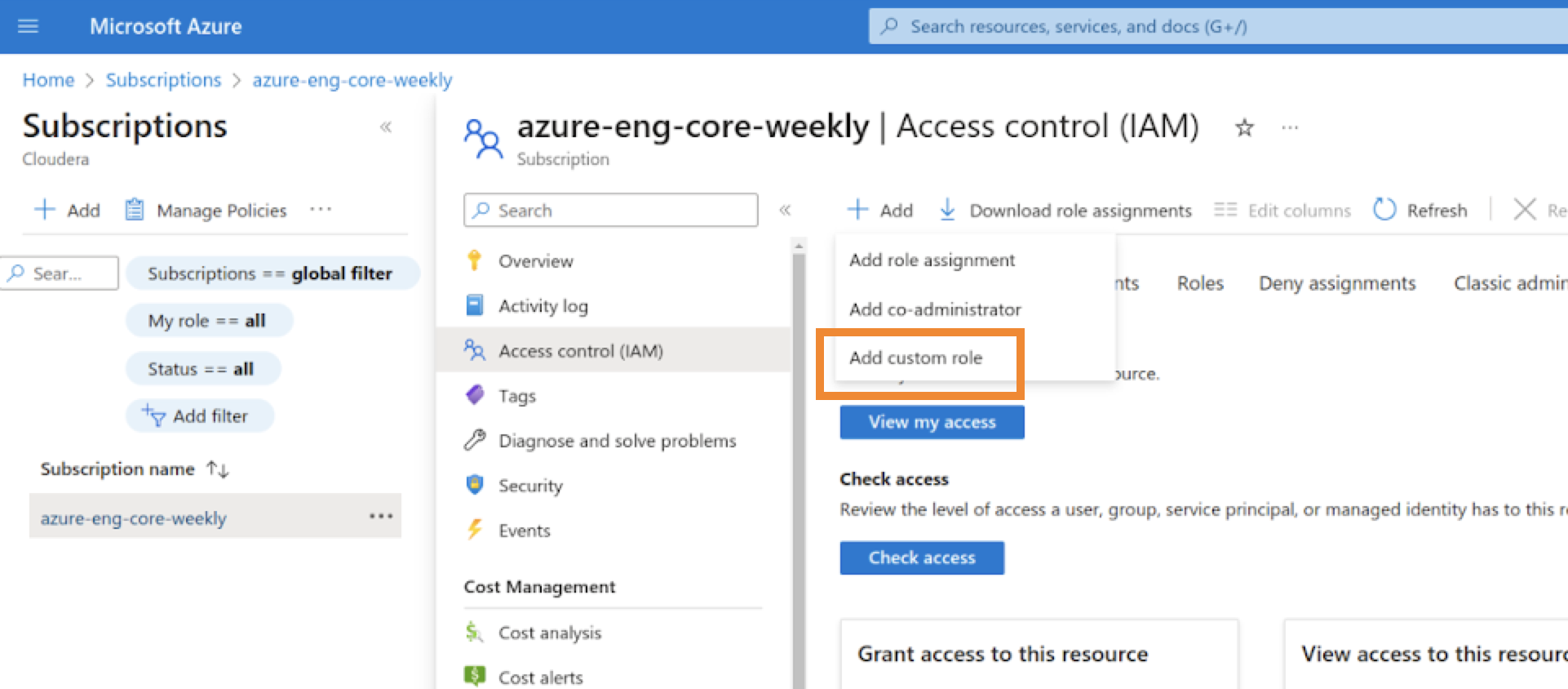

Target HBase folder is deleted when HBase replication policy fails

When the snapshot export fails during the HBase replication policy job run, the target HBase folder in the destination Data Hub or COD gets deleted.

You can either revoke the delete permission for the user, or ensure that you use an access key/role that does not have delete permissions to the required storage component.

The following steps show how to create an access key in AWS and an Azure service principal, which do not have delete permission for the storage component.

Solution in AWS

Solution in Microsoft Azure

- Log in to Microsoft Azure.

- Click on the page in Microsoft Azure, and complete the following steps:

- Click on the page, and complete the following steps:

  - On the Role tab, select the custom role previously created. Click Next.

  - On the Members tab, select User, group, or service principal for the Assign access to field, and select the required service principal.

  - Click Review + assign.

  - Click Review + assign on the Conditions (optional) tab.

- Click Add principal on the page.

How do I verify that the target HBase folder in the destination Data Hub or COD does not get deleted if the snapshot export fails during the HBase replication policy job run?

To verify whether the delete operation is allowed for the service principal that you previously created, perform the following steps:

- Open the Azure Cloud Shell terminal.

- Log in as the service principal that you created previously, using the az login --service-principal -u [***CLIENT ID***] -p [***CLIENT SECRET***] --tenant [***TENANT ID***] command.

- Delete an arbitrarily created temporary file from the account using the az storage fs file delete --path [***TEMPORARY FILE***] -f data --account-name [***ACCOUNT NAME***] --auth-mode login command.
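The verification steps above can be sketched as a small script that assembles the same two az commands and runs them in order. This is a minimal sketch under stated assumptions: the function names are hypothetical, the Azure CLI must already be installed, and treating a non-zero exit code from the delete command as "delete is denied" is an assumption rather than documented behavior. The delete command may also prompt for confirmation when run interactively.

```python
import subprocess


def build_verification_commands(client_id, client_secret, tenant_id,
                                temp_file, account_name, file_system="data"):
    """Build the az login and az storage fs file delete argument lists.

    The flags mirror the commands shown in the steps above; `file_system`
    defaults to "data" because that is the value used there.
    """
    login = [
        "az", "login", "--service-principal",
        "-u", client_id, "-p", client_secret, "--tenant", tenant_id,
    ]
    delete = [
        "az", "storage", "fs", "file", "delete",
        "--path", temp_file, "-f", file_system,
        "--account-name", account_name, "--auth-mode", "login",
    ]
    return login, delete


def delete_is_denied(login, delete):
    """Log in, attempt the delete, and report whether the delete failed.

    Assumption: a non-zero exit code from the delete command means the
    service principal is not allowed to delete the file.
    """
    subprocess.run(login, check=True)  # stop early if the login itself fails
    result = subprocess.run(delete)
    return result.returncode != 0
```

For example, `delete_is_denied(*build_verification_commands(...))` returns True when the delete attempt fails. Check the command output as well, because a failure can have causes other than missing permissions, such as a wrong path or account name.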

Replicate HBase data in existing and future tables

Errors might appear when you try to replicate HBase data from existing tables and future tables in a database using the “Replicate Database” option during the HBase replication policy creation process. These errors appear when there are compatibility issues.

The following list shows a few errors that might appear and how to mitigate these issues: