Creating virtual clusters

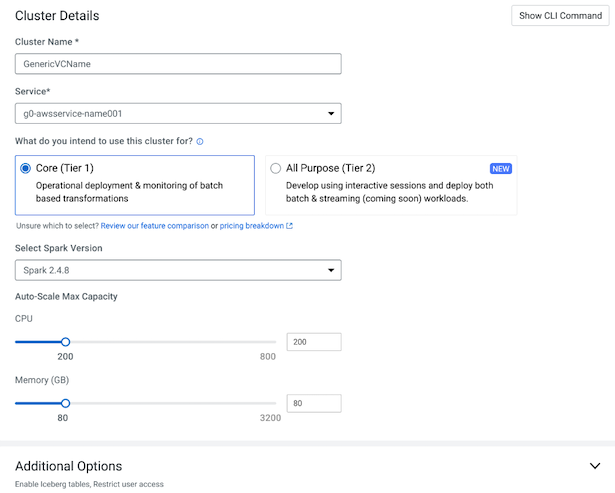

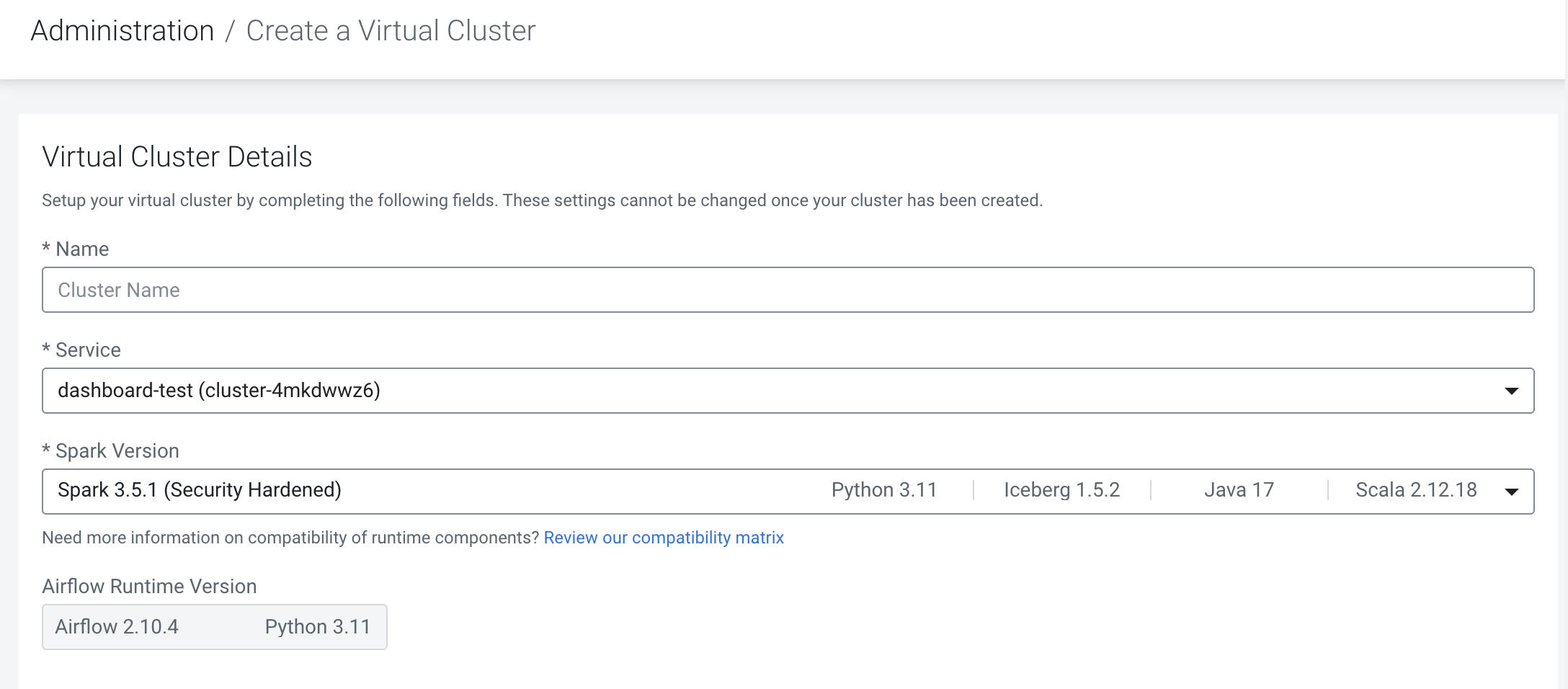

In Cloudera Data Engineering, a virtual cluster is an individual auto-scaling cluster with defined CPU and memory ranges. Jobs are associated with virtual clusters, and virtual clusters are associated with an environment. You can create as many virtual clusters as you need. See Recommendations for scaling Cloudera Data Engineering deployments linked below.

To create a virtual cluster, you must have an environment with Cloudera Data Engineering enabled.
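The relationships described above (jobs belong to a virtual cluster, virtual clusters belong to an environment, and a virtual cluster can only be created in an environment with Cloudera Data Engineering enabled) can be pictured with a small model. This is an illustrative sketch only: the class names, field names, and the `create_virtual_cluster` method are hypothetical and are not part of any Cloudera API or CLI.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VirtualCluster:
    """Hypothetical model of a virtual cluster with CPU and memory ranges."""
    name: str
    cpu_cores: tuple[int, int]   # (min, max) auto-scaling CPU range, assumed unit: cores
    memory_gb: tuple[int, int]   # (min, max) auto-scaling memory range, assumed unit: GB
    jobs: list[str] = field(default_factory=list)  # jobs associated with this cluster


@dataclass
class Environment:
    """Hypothetical model of an environment that may host virtual clusters."""
    name: str
    cde_enabled: bool
    virtual_clusters: dict[str, VirtualCluster] = field(default_factory=dict)

    def create_virtual_cluster(
        self, name: str, cpu_cores: tuple[int, int], memory_gb: tuple[int, int]
    ) -> VirtualCluster:
        # Prerequisite from the text: the environment must have
        # Cloudera Data Engineering enabled.
        if not self.cde_enabled:
            raise RuntimeError(f"Environment {self.name!r} does not have CDE enabled")
        if name in self.virtual_clusters:
            raise ValueError(f"Virtual cluster {name!r} already exists")
        # Each range must be ordered as (min, max).
        for low, high in (cpu_cores, memory_gb):
            if low < 0 or low > high:
                raise ValueError("Each range must satisfy 0 <= min <= max")
        vc = VirtualCluster(name, cpu_cores, memory_gb)
        self.virtual_clusters[name] = vc
        return vc


# Usage: one environment can hold any number of virtual clusters.
env = Environment("dev-env", cde_enabled=True)
etl = env.create_virtual_cluster("etl", cpu_cores=(0, 20), memory_gb=(0, 80))
etl.jobs.append("nightly-load")
```

The model places no limit on the number of virtual clusters per environment, matching the statement that you can create as many as you need.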

Click the icon at the top right to create a new virtual cluster.
