Preemption

As mentioned previously, a queue can have a guaranteed level of cluster resources but still have to wait to run applications because other queues are using all of the available resources. With Preemption enabled, higher-priority applications do not have to wait when lower-priority applications have taken up the available capacity. Under-served queues can begin to claim their allocated cluster resources almost immediately, without waiting for other queues' applications to finish running.

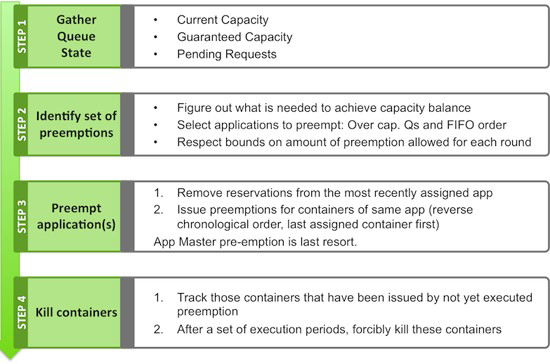

Preemption Workflow

Preemption is governed by a set of capacity monitor policies, which must be enabled by setting the yarn.resourcemanager.scheduler.monitor.enable property to "true". These capacity monitor policies apply Preemption in configurable intervals based on defined capacity allocations, and in as graceful a manner as possible. Containers are only killed as a last resort. The following image demonstrates the Preemption workflow:

Preemption Configuration

The following properties in the /etc/hadoop/conf/yarn-site.xml file on the ResourceManager host are used to enable and configure Preemption.

Property: yarn.resourcemanager.scheduler.monitor.enable

Value: true

Description: Setting this property to "true" enables Preemption. It enables a set of periodic monitors that affect the Capacity Scheduler. The default value for this property is "false" (disabled).

Property: yarn.resourcemanager.scheduler.monitor.policies

Value: org.apache.hadoop.yarn.server.resourcemanager.monitor.capacity.ProportionalCapacityPreemptionPolicy

Description: The list of SchedulingEditPolicy classes that interact with the scheduler. The only policy currently available for preemption is the “ProportionalCapacityPreemptionPolicy”.
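As a minimal sketch, the two properties above could be written in yarn-site.xml as follows. The fragment assumes it is placed inside the file's existing `<configuration>` root element, and that the ResourceManager is restarted afterward so the change takes effect (an assumption based on how yarn-site.xml changes are usually applied, not something stated in this section).

```xml
<!-- Fragment for /etc/hadoop/conf/yarn-site.xml on the ResourceManager host. -->
<!-- Assumed to sit inside the existing <configuration> element. -->

<!-- Turn on the periodic capacity monitors (default is false). -->
<property>
  <name>yarn.resourcemanager.scheduler.monitor.enable</name>
  <value>true</value>
</property>

<!-- Select the preemption policy run by the monitors. -->
<property>
  <name>yarn.resourcemanager.scheduler.monitor.policies</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.monitor.capacity.ProportionalCapacityPreemptionPolicy</value>
</property>
```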

Property: yarn.resourcemanager.monitor.capacity.preemption.monitoring_interval

Value: 3000

Description: The time in milliseconds between invocations of this policy. Setting this value to a longer time interval will cause the Capacity Monitor to run less frequently.

Property: yarn.resourcemanager.monitor.capacity.preemption.max_wait_before_kill

Value: 15000

Description: The time in milliseconds between requesting a preemption from an application and killing the container. Setting this to a higher value will give applications more time to respond to preemption requests and gracefully release Containers.
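The two timing properties might be set together as in the sketch below, which uses the values listed above (3000 ms between policy invocations, 15000 ms of grace before a container is killed). With these values, an application would have roughly five policy invocations' worth of time to release a Container voluntarily; that ratio is an illustrative reading of the numbers, not a documented guarantee.

```xml
<!-- Fragment for yarn-site.xml, assumed to sit inside <configuration>. -->

<!-- Run the preemption policy every 3 seconds. -->
<property>
  <name>yarn.resourcemanager.monitor.capacity.preemption.monitoring_interval</name>
  <value>3000</value>
</property>

<!-- Wait 15 seconds after a preemption request before killing the container. -->
<property>
  <name>yarn.resourcemanager.monitor.capacity.preemption.max_wait_before_kill</name>
  <value>15000</value>
</property>
```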

Property: yarn.resourcemanager.monitor.capacity.preemption.total_preemption_per_round

Value: 0.1

Description: The maximum percentage of resources preempted in a single round. You can use this value to restrict the pace at which Containers are reclaimed from the cluster. After computing the total desired preemption, the policy scales it back to this limit. This should be set to (memory-of-one-NodeManager)/(total-cluster-memory). For example, if one NodeManager has 32 GB, and the total cluster resource is 100 GB, total_preemption_per_round should be set to 32/100 = 0.32. The default value is 0.1 (10%).
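The effect of this limit can be written out as a short worked calculation. The symbols here are introduced only for illustration: $C$ is the total cluster memory, $r$ is total_preemption_per_round, and $P_{\max}$ is the most that can be preempted in one round, assuming the percentage is taken of total cluster resources.

$$
P_{\max} = r \cdot C
$$

$$
r = 0.1,\ C = 100\ \text{GB} \;\Rightarrow\; P_{\max} = 10\ \text{GB}
\qquad
r = 0.32,\ C = 100\ \text{GB} \;\Rightarrow\; P_{\max} = 32\ \text{GB}
$$

Under that assumption, the recommended setting of 0.32 in the example allows up to one full NodeManager's worth of memory (32 GB) to be reclaimed per round, while the default of 0.1 would cap each round at 10 GB.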

Property: yarn.resourcemanager.monitor.capacity.preemption.natural_termination_factor

Value: 0.2

Description: Similar to total_preemption_per_round, you can apply this factor to slow down resource preemption after the preemption target is computed for each queue (for example, “give me 5 GB back from queue-A”). For example, if 5 GB is needed back, in the first cycle preemption takes back 1 GB (20% of 5 GB), 0.8 GB (20% of the remaining 4 GB) in the next, 0.64 GB (20% of the remaining 3.2 GB) next, and so on. You can increase this value to speed up resource reclamation. The default value is 0.2, meaning that at most 20% of the target capacity is preempted in a cycle.
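The 1 GB, 0.8 GB, 0.64 GB sequence in the description is a geometric series, which can be written out as follows. The symbols are introduced only for illustration: $T$ is the preemption target for the queue, $f$ is natural_termination_factor, $p_k$ is the amount preempted in cycle $k$, and $R_n$ is the amount still outstanding after $n$ cycles. The sketch assumes the target does not change between cycles, that no Containers finish on their own, and that the total_preemption_per_round limit is not reached.

$$
p_k = f\,T\,(1-f)^{k-1}, \qquad R_n = T\,(1-f)^{n}
$$

$$
T = 5\ \text{GB},\ f = 0.2:\quad p_1 = 1\ \text{GB},\quad p_2 = 0.8\ \text{GB},\quad p_3 = 0.64\ \text{GB},\quad R_3 = 5 \cdot 0.8^{3} = 2.56\ \text{GB}
$$

Under the same assumptions, reclaiming 95% of the target requires $(1-f)^n \le 0.05$, which for $f = 0.2$ gives $n \ge 14$ cycles, since $0.8^{13} \approx 0.055$ and $0.8^{14} \approx 0.044$. If each cycle corresponds to one policy invocation at the 3000 ms monitoring interval, that is roughly 42 seconds.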

Note: For more information about Preemption, see Better SLAs Via Resource Preemption in the YARN Capacity Scheduler.