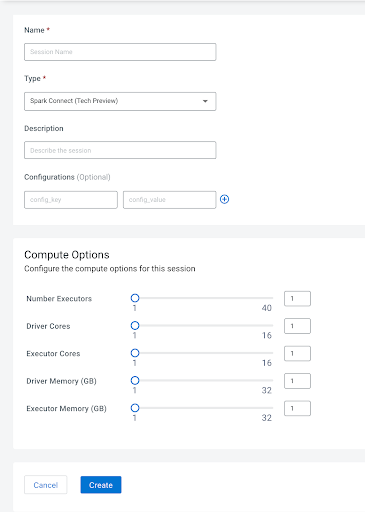

Configuring external IDE Spark Connect sessions

Learn how to configure a Spark Connect Session with .

Before you create a Spark Connect Session, perform the following steps:

- Create a Service.

- Create a Virtual cluster. When creating it, select All Purpose (Tier 2) for the Virtual Cluster option and Spark 3.4.1 as the Spark version.

- Initialize the virtual cluster.

- Initialize users in virtual clusters.

- If you are using an OpenShift cluster, run the following command:

  ```shell
  $ oc -n openshift-ingress-operator annotate ingresscontrollers/default ingress.operator.openshift.io/default-enable-http2=true
  ```
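Spark Connect clients talk to the server over gRPC, which runs on HTTP/2. That is presumably why the OpenShift ingress controller needs the HTTP/2 annotation. The following is a minimal sketch, not part of the official procedure, for confirming that the annotation is in place. It assumes the `oc` CLI is on your `PATH` and that you are logged in to the cluster. The function names `fetch_ingress_controller` and `http2_enabled` are hypothetical helpers introduced only for this illustration.

```python
import json
import subprocess

# Annotation key taken from the oc annotate command above.
ANNOTATION = "ingress.operator.openshift.io/default-enable-http2"


def fetch_ingress_controller() -> str:
    """Return the default IngressController as JSON text.

    Hypothetical helper: assumes `oc` is installed and logged in.
    """
    result = subprocess.run(
        [
            "oc", "-n", "openshift-ingress-operator",
            "get", "ingresscontrollers/default", "-o", "json",
        ],
        check=True,           # raise CalledProcessError if oc fails
        capture_output=True,  # collect stdout/stderr instead of printing
        text=True,            # decode output as str
    )
    return result.stdout


def http2_enabled(ingress_json: str) -> bool:
    """Return True if the HTTP/2 annotation is set to "true".

    Kubernetes annotation values are strings, so compare against "true".
    """
    obj = json.loads(ingress_json)
    # metadata.annotations may be missing or null on a fresh resource.
    annotations = obj.get("metadata", {}).get("annotations") or {}
    return annotations.get(ANNOTATION) == "true"


if __name__ == "__main__":
    print("HTTP/2 enabled:", http2_enabled(fetch_ingress_controller()))
```

The parsing is kept separate from the `oc` call so that the check can be exercised against saved JSON without cluster access.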